An increase in WIPHY temperature while you are scanning is not weird: you are actively using the radio, and as long as it stays within the normal range, that is expected.

Yes, sure.

But there is a characteristic change in temperature.

Let me remind you that before a scan the temperature stays 10-15 degrees lower, no matter how long the router has been running, and after the scan completes it does not drop back down.

The values are certainly not critical, but I pointed this out as an indication of a global change in the chip's operating mode.

I mention it in the hope that it might prompt a rethink.

I've been suffering from this for the last few releases. Enabling or disabling packet steering and QoS didn't help at all. Today I flashed r24328-91169898ce, and within 3 hours I got 3 reboots.

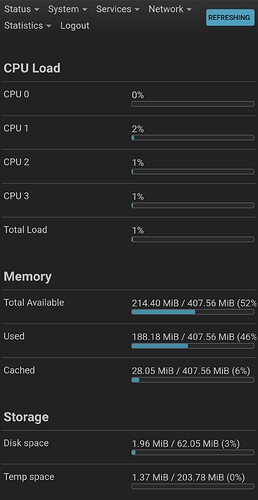

I have several devices connected over Wi-Fi and 2 wired. Whenever I run a speed test or similar, memory usage increases and, for some reason, is never released.

Device: ax3600
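To confirm that memory really is not released after a speed test, one option is to log MemAvailable from /proc/meminfo before, during and after the test. This is a minimal sketch: the function names `mem_available_kb` and `watch_mem_available` and the `mem-watch` log tag are hypothetical, and the optional file argument exists only so the parser can be tried on a saved copy.

```sh
# Hypothetical helper: print MemAvailable (in kB) from a meminfo-style file.
# Defaults to the live /proc/meminfo; pass another path to parse a saved copy.
mem_available_kb() {
	awk '/^MemAvailable:/ { print $2; exit }' "${1:-/proc/meminfo}"
}

# Hypothetical helper: log MemAvailable every $1 seconds (default 60) via logger,
# so the values land in the system log next to any OOM or reboot messages.
# Runs forever; start it in the background and kill it when done.
watch_mem_available() {
	while :; do
		logger -t mem-watch "MemAvailable: $(mem_available_kb) kB"
		sleep "${1:-60}"
	done
}
```

For example, start `watch_mem_available 60 &` from a shell, run the speed test, and later compare the values with `logread | grep mem-watch`.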

I have no idea how/why it stopped doing this for me.

Have you tried setting the IRQ affinities and rebooting, as described here?

If that works, you can put this script in your rc.local (local startup), before the exit 0:

```sh
##########
# IPQ807 does not properly define interrupts, so irqbalance does not work. Set static values.
# https://github.com/Irqbalance/irqbalance/issues/258
cat > /tmp/set-ipq807-affinity.sh << 'EOF'
#!/bin/sh

# set_affinity <irq name> <hex cpu mask>
# Looks up the IRQ number by name in /proc/interrupts, so no numbers are hard-coded.
set_affinity() {
	irq=$(awk "/$1/{ print substr(\$1, 1, length(\$1)-1); exit }" /proc/interrupts)
	[ -n "$irq" ] && echo "$2" > "/proc/irq/$irq/smp_affinity"
	logger -t /tmp/set-ipq807-affinity.sh "Setting Affinity: $1 ($irq) to $2"
}

# assign the 4 rx rings, one to each core
set_affinity 'reo2host-destination-ring1' 1
set_affinity 'reo2host-destination-ring2' 2
set_affinity 'reo2host-destination-ring3' 4
set_affinity 'reo2host-destination-ring4' 8

# assign 3 tcl completions to last 3 cores
set_affinity 'wbm2host-tx-completions-ring1' 2
set_affinity 'wbm2host-tx-completions-ring2' 4
set_affinity 'wbm2host-tx-completions-ring3' 8

# assign 3 ppdu mac interrupts to last 3 cores
set_affinity 'ppdu-end-interrupts-mac1' 2
set_affinity 'ppdu-end-interrupts-mac2' 4
set_affinity 'ppdu-end-interrupts-mac3' 8

# assign lan/wan to core 4
set_affinity 'edma_txcmpl' 8
set_affinity 'edma_rxfill' 8
set_affinity 'edma_rxdesc' 8
set_affinity 'edma_misc' 8

exit 0
EOF

chmod 755 /tmp/set-ipq807-affinity.sh
/tmp/set-ipq807-affinity.sh
##########
```
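The second argument to set_affinity is a hex CPU bitmask in which bit n selects CPUn, so 1, 2, 4 and 8 pick CPU0 to CPU3, and the script's "core 4" is CPU3 (mask 8). A small sketch of that arithmetic; `cpu_mask` is a hypothetical helper, not part of the script above.

```sh
# Hypothetical helper: build an smp_affinity hex mask from 0-based CPU numbers.
# Bit n of the mask selects CPUn: CPU0 -> 1, CPU1 -> 2, CPU2 -> 4, CPU3 -> 8.
cpu_mask() {
	mask=0
	for cpu in "$@"; do
		mask=$(( mask | (1 << cpu) ))
	done
	printf '%x\n' "$mask"
}
```

If pasted into the generated script, `set_affinity 'edma_txcmpl' "$(cpu_mask 3)"` would be equivalent to `set_affinity 'edma_txcmpl' 8`, and `cpu_mask 0 1 2 3` gives `f` (all four cores).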

I didn't have the script, so I've set it to run on boot. I'll observe for a while to see if it helps.

```
Fri Nov 10 09:34:48 2023 user.notice /tmp/set-ipq807-affinity.sh: Setting Affinity: reo2host-destination-ring1 (66) to 1
Fri Nov 10 09:34:48 2023 user.notice /tmp/set-ipq807-affinity.sh: Setting Affinity: reo2host-destination-ring2 (67) to 2
Fri Nov 10 09:34:48 2023 user.notice pbr: Reloading pbr due to firewall action: includes
Fri Nov 10 09:34:48 2023 user.notice /tmp/set-ipq807-affinity.sh: Setting Affinity: reo2host-destination-ring3 (68) to 4
Fri Nov 10 09:34:48 2023 user.notice /tmp/set-ipq807-affinity.sh: Setting Affinity: reo2host-destination-ring4 (69) to 8
Fri Nov 10 09:34:48 2023 user.notice /tmp/set-ipq807-affinity.sh: Setting Affinity: wbm2host-tx-completions-ring1 (48) to 2
Fri Nov 10 09:34:48 2023 user.notice /tmp/set-ipq807-affinity.sh: Setting Affinity: wbm2host-tx-completions-ring2 (53) to 4
Fri Nov 10 09:34:48 2023 user.notice /tmp/set-ipq807-affinity.sh: Setting Affinity: wbm2host-tx-completions-ring3 (56) to 8
Fri Nov 10 09:34:48 2023 user.notice /tmp/set-ipq807-affinity.sh: Setting Affinity: edma_txcmpl (32) to 8
Fri Nov 10 09:34:48 2023 user.notice /tmp/set-ipq807-affinity.sh: Setting Affinity: edma_rxfill (33) to 8
Fri Nov 10 09:34:48 2023 user.notice /tmp/set-ipq807-affinity.sh: Setting Affinity: edma_rxdesc (35) to 8
Fri Nov 10 09:34:48 2023 user.notice /tmp/set-ipq807-affinity.sh: Setting Affinity: edma_misc (36) to 8
```

One question about this: I see three interrupts named ppdu-end-interrupts-mac1, ppdu-end-interrupts-mac2 and ppdu-end-interrupts-mac3, but I don't see yours or anyone else's script reassigning these three. Maybe that's not good for performance, and it's better to leave all of them on the same CPU?

I actually haven't seen any other scripts (at least with an explanation) place ppdu* interrupts manually. Given hnyman's work in testing this, I followed his findings, as well as what I observed in the QSDK affinity file referenced in the same thread.

My AX3600 has about two weeks of uptime, and over that period its interrupt counts look like the output below. If you have references on these interrupts, please do share the links. In my case, I think moving them to CPU1 and CPU2 would distribute the load better than it is now.

```
root@RM-AX3600:~# cat /proc/interrupts
            CPU0       CPU1       CPU2       CPU3
   9:          0          0          0          0  GIC-0 39 Level arch_mem_timer
  13:  129234707  159095538  195460975  170400679  GIC-0 20 Level arch_timer
  16:          0          0          0          0  MSI 0 Edge PCIe PME, aerdrv
  17:          0          0          0          0  GIC-0 239 Level bam_dma
  18:          0          0          0          0  GIC-0 270 Level bam_dma
  19:    1744674          0          0          0  GIC-0 178 Level bam_dma
  20:          2          0          0          0  GIC-0 354 Edge smp2p
  21:         10          0          0          0  GIC-0 340 Level msm_serial0
  23:          0          0          0          0  GIC-0 216 Level 4a9000.thermal-sensor
  24:          0          0          0          0  GIC-0 35 Edge wdt_bark
  25:          0          0          0          0  GIC-0 357 Edge q6v5 wdog
  26:          0          0          0          0  pmic_arb 51380237 Edge pm-adc5
  27:          5          0          0          0  GIC-0 47 Edge cpr3
  28:          0          0          0          0  smp2p 0 Edge q6v5 fatal
  29:          1          0          0          0  smp2p 1 Edge q6v5 ready
  30:          0          0          0          0  smp2p 2 Edge q6v5 handover
  31:          0          0          0          0  smp2p 3 Edge q6v5 stop
  32:        503          0          0  466484252  GIC-0 377 Level edma_txcmpl
  33:          0          0          0          0  GIC-0 385 Level edma_rxfill
  34:          0          0          0          0  msmgpio 34 Edge keys
  35:       2272          0          0  514803105  GIC-0 393 Level edma_rxdesc
  36:          0          0          0          0  GIC-0 376 Level edma_misc
  37:         31          0          0          0  MSI 524288 Edge ath10k_pci
  38:         64          0          0          0  GIC-0 353 Edge glink-native
  39:          5          0          0          0  GIC-0 348 Edge ce0
  40:  210156795          0          0          0  GIC-0 347 Edge ce1
  41:  144558442          0          0          0  GIC-0 346 Edge ce2
  42:    8070494          0          0          0  GIC-0 343 Edge ce3
  43:          1          0          0          0  GIC-0 443 Edge ce5
  44:    6432013          0          0          0  GIC-0 72 Edge ce7
  45:          0          0          0          0  GIC-0 334 Edge ce9
  46:          1          0          0          0  GIC-0 333 Edge ce10
  47:          0          0          0          0  GIC-0 69 Edge ce11
  48:          0   23839523          0          0  GIC-0 189 Edge wbm2host-tx-completions-ring1
  49:          0          0          0          0  GIC-0 323 Edge reo2ost-exception
  50:    5956386          0          0          0  GIC-0 322 Edge wbm2host-rx-release
  51:          0          0          0          0  GIC-0 209 Edge rxdma2host-destination-ring-mac1
  52:          1          0          0          0  GIC-0 212 Edge host2rxdma-host-buf-ring-mac1
  53:          0          0   27699917          0  GIC-0 190 Edge wbm2host-tx-completions-ring2
  54:          0          0          0          0  GIC-0 211 Edge rxdma2host-destination-ring-mac3
  55:          1          0          0          0  GIC-0 235 Edge host2rxdma-host-buf-ring-mac3
  56:          0          0          0   25713312  GIC-0 191 Edge wbm2host-tx-completions-ring3
  57:          0          0          0          0  GIC-0 210 Edge rxdma2host-destination-ring-mac2
  58:          0          0          0          0  GIC-0 215 Edge host2rxdma-host-buf-ring-mac2
  59:      28774          0          0          0  GIC-0 321 Edge reo2host-status
  60:   60344990          0          0          0  GIC-0 261 Edge ppdu-end-interrupts-mac1
  61:          1          0          0          0  GIC-0 255 Edge rxdma2host-monitor-status-ring-mac1
  62:  164789382          0          0          0  GIC-0 263 Edge ppdu-end-interrupts-mac3
  63:          1          0          0          0  GIC-0 260 Edge rxdma2host-monitor-status-ring-mac3
  64:          0          0          0          0  GIC-0 262 Edge ppdu-end-interrupts-mac2
  65:          0          0          0          0  GIC-0 256 Edge rxdma2host-monitor-status-ring-mac2
  66:    6303088          0          0          0  GIC-0 267 Edge reo2host-destination-ring1
  67:          0    8443146          0          0  GIC-0 268 Edge reo2host-destination-ring2
  68:          0          0    8190445          0  GIC-0 271 Edge reo2host-destination-ring3
  69:          0          0          0    6967872  GIC-0 320 Edge reo2host-destination-ring4
IPI0:      98159     137229     117785     118281  Rescheduling interrupts
IPI1:  136800210  206543636  322121907   82920556  Function call interrupts
IPI2:          0          0          0          0  CPU stop interrupts
IPI3:          0          0          0          0  CPU stop (for crash dump) interrupts
IPI4:          0          0          0          0  Timer broadcast interrupts
IPI5:     115165      80435     100828     107198  IRQ work interrupts
IPI6:          0          0          0          0  CPU wake-up interrupts
 Err:          0
```
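To judge whether moving the ppdu interrupts would spread the load better, the per-CPU columns of a listing like the one above can be summed per interrupt group. A small sketch, assuming the usual /proc/interrupts layout (a CPU header line, then `N:` followed by one count per CPU, with the IRQ name as the last field); the function name `irq_totals_per_cpu` is hypothetical.

```sh
# Hypothetical helper: sum the per-CPU counts of every /proc/interrupts line
# whose last field (the IRQ name) matches the regex in $1, e.g. 'ppdu-end'.
# Reads /proc/interrupts unless a saved copy is passed as $2.
irq_totals_per_cpu() {
	awk -v pat="$1" '
		NR == 1 { ncpu = NF; next }   # header line: CPU0 CPU1 ...
		$NF ~ pat {
			# fields 2 .. ncpu+1 hold the per-CPU counts
			for (i = 1; i <= ncpu; i++) sum[i] += $(i + 1)
		}
		END {
			# %.0f avoids integer overflow on large counters
			for (i = 1; i <= ncpu; i++) printf "CPU%d %.0f\n", i - 1, sum[i]
		}' "${2:-/proc/interrupts}"
}
```

On the listing above, `irq_totals_per_cpu 'ppdu-end'` would put all 225,134,372 ppdu-end interrupts (60,344,990 + 164,789,382) on CPU0 and none on the other cores.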

It helped a lot and no OOMs so far! Thank you. Memory usage looks solid.

Just for my understanding: how do IRQ assignments affect memory usage?

Not directly, for sure.

The only indirect mechanism I can think of is more efficient resource utilization. Spreading the IRQs across cores increases parallelism, which can reduce resource contention and make better use of the available memory. Combined with the closed-source QCA blob (which I know Robimarko and Ansuel love) and ath11k's hunger for memory, this interaction may matter more than usual.

@robimarko, based on these findings and the confirmation above, do you think it makes sense to include the script above (or a variant of it) in the firmware for ipq807x devices? It looks the IRQs up by name, so the fragility of hard-coded IRQ numbers (which changed between kernels 5.15 and 6.1, for example) is not a concern.
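The name-based part of set_affinity is the awk lookup against /proc/interrupts. Pulled out on its own as a hypothetical `irq_by_name` function (the optional file argument is an assumption added only so it can be run against saved listings), it shows why renumbering does not matter:

```sh
# Hypothetical helper: print the IRQ number of the first /proc/interrupts line
# matching the name (an awk regex) in $1, without the trailing ':'.
# Same awk expression as set_affinity; prints nothing if there is no match.
irq_by_name() {
	awk "/$1/{ print substr(\$1, 1, length(\$1)-1); exit }" "${2:-/proc/interrupts}"
}
```

Against the AX3600 listing above, `irq_by_name reo2host-destination-ring1` prints 66; on a system where that ring has another number (the hard-coded rc.local elsewhere in this thread appears to use 83 to 86 for these rings) the same call returns that number instead, so the script needs no edits.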

[EDIT] BTW, current uptime and memory info. Memory usage has been rock solid.

| Metric | Value |
| --- | --- |
| Uptime | 12d 6h 29m 36s |
| Total Available | 129 / 406 MB |
| Used | 270 / 406 MB |
| Cached | 27 / 406 MB |

Thanks, I've added the script and will see how it goes after a reboot.

If it's dynamic, then it would be good to include it by default, since in the worst case it will still improve performance a bit.

I've had the ppdu* interrupts spread across cores for some months now, but I don't know whether that is good or bad for performance. My two AX3600s seem stable: I get weeks of uptime without problems and usually only reboot to update to the latest snapshot.

I don't fully understand this, but with the IRQ script in rc.local I have 160 MB of RAM free instead of 60 MB.

No issues with this in my rc.local on my ipq8074. I reckon I had to set it like this to get better performance in --bidir tests. The IRQ numbers did change when running NSS basics, so I guess a script is better.

```sh
echo 1 > /sys/class/net/lan/queues/rx-0/rps_cpus
echo 2 > /sys/class/net/lan/queues/rx-1/rps_cpus
echo 4 > /sys/class/net/lan/queues/rx-2/rps_cpus
echo 8 > /sys/class/net/lan/queues/rx-3/rps_cpus
echo 1 > /sys/class/net/lan/queues/tx-0/xps_cpus
echo 2 > /sys/class/net/lan/queues/tx-1/xps_cpus
echo 4 > /sys/class/net/lan/queues/tx-2/xps_cpus
echo 8 > /sys/class/net/lan/queues/tx-3/xps_cpus

# echo 8 > /proc/irq/66/smp_affinity

# wbm2host-tx-completions-ring
echo 2 > /proc/irq/65/smp_affinity
echo 4 > /proc/irq/70/smp_affinity
echo 8 > /proc/irq/73/smp_affinity

# ppdu-end-interrupts-mac
echo 8 > /proc/irq/77/smp_affinity
echo 4 > /proc/irq/79/smp_affinity
echo 2 > /proc/irq/81/smp_affinity

# reo2host-destination-ring
echo 1 > /proc/irq/83/smp_affinity
echo 2 > /proc/irq/84/smp_affinity
echo 4 > /proc/irq/85/smp_affinity
echo 8 > /proc/irq/86/smp_affinity
```
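The eight rps_cpus/xps_cpus lines above follow one rule: queue N goes to CPU N (mask 1 << N). A loop-based sketch of the same thing, assuming the interface is still called lan and has at most one rx and one tx queue per CPU, as in this post. The `pin_queues` name and the `SYSFS_NET` override (there only so it can be tested against a fake directory tree) are hypothetical.

```sh
# Hypothetical helper: pin rx queue N (RPS) and tx queue N (XPS) of a netdev to CPU N,
# mirroring the hard-coded echo lines. Assumes no more queues than CPUs.
# Writes under /sys/class/net unless SYSFS_NET points somewhere else.
pin_queues() {
	dev=$1
	base=${SYSFS_NET:-/sys/class/net}/$dev/queues
	for q in "$base"/rx-*; do
		[ -e "$q/rps_cpus" ] || continue   # glob did not match or no RPS file
		n=${q##*/rx-}                      # queue index from the directory name
		printf '%x\n' $(( 1 << n )) > "$q/rps_cpus"
	done
	for q in "$base"/tx-*; do
		[ -e "$q/xps_cpus" ] || continue
		n=${q##*/tx-}
		printf '%x\n' $(( 1 << n )) > "$q/xps_cpus"
	done
}
```

Calling `pin_queues lan` from rc.local would then replace the eight echo lines. The /proc/irq lines are a different matter: their numbers can change between builds, which is what the by-name lookup in the main script avoids.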

One question, though: if you set a mask such as f, 3 or c but don't run irqbalance, will it not work?
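For reference, those values are hex CPU masks: f covers CPU0 to CPU3, 3 covers CPU0 and CPU1, and c covers CPU2 and CPU3. Whether the kernel then spreads one interrupt across several CPUs without irqbalance is the open question here; the sketch below only decodes a mask, and its name `mask_cpus` is hypothetical.

```sh
# Hypothetical helper: list the 0-based CPUs selected by an smp_affinity hex mask,
# e.g. f -> "0 1 2 3", 3 -> "0 1", c -> "2 3".
# Assumes a single hex word (systems with more than 32 CPUs use comma-separated groups).
mask_cpus() {
	mask=$(( 0x$1 ))
	cpu=0
	cpus=
	while [ "$mask" -gt 0 ]; do
		if [ $(( mask & 1 )) -eq 1 ]; then
			cpus="${cpus:+$cpus }$cpu"     # append with a separating space
		fi
		mask=$(( mask >> 1 ))
		cpu=$(( cpu + 1 ))
	done
	echo "$cpus"
}
```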

Great, yes, that's what I saw as well.

Based on McGiverGim's input, I have updated the script in the post above to also assign the ppdu-end-interrupts-mac* interrupts to cores 2, 3 and 4.

Should this script fix the ath11k memory leak?

Does it also work on the AX6 (IPQ807x)?

Please test and report back your results.

This is my memory usage without the script, on the stable 23.05 release.

Do you think I need to use the script?

I used to start at 150 MB free and run out. You have more free memory, but it would be interesting to see whether your free amount increases.