BACKGROUND

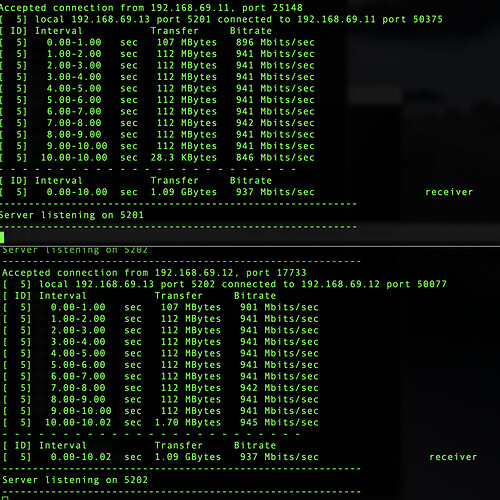

I recently upgraded my unmanaged switch to a Linksys LGS326 managed switch with support for LACP link aggregation. I was able to connect my three TrueNAS CORE NAS systems through the switch using 2-port LACP and confirmed simultaneous bandwidth from multiple clients using iperf3.
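A minimal sketch of that kind of check, assuming iperf3 is installed on the NAS and on each client. LACP places each flow on a single member link, so the aggregate only shows up when several clients run at the same time. The function name `run_iperf_client` and the 30-second default are illustrative assumptions, not the exact commands used here.

```shell
#!/bin/sh
# On the NAS (server side), start a listener first:
#   iperf3 -s

# On each client, run this at the same time.
# $1 = server address, $2 = test duration in seconds (default 30).
run_iperf_client() {
    iperf3 -c "$1" -t "${2:-30}"
}
```

Calling `run_iperf_client <NAS address>` from two or more clients at once and adding up the reported rates gives a rough picture of whether both links are carrying traffic.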

OPENWRT LACP

I wanted to test my Linksys WRT1900AC in static or dynamic LAG mode and test for any performance improvement before I spend any coin on a new wifi router. I am using the WRT1900AC in AP mode, thus no firewall.

WHAT I HAVE TRIED

I have read most of the forum/Reddit posts from others attempting to get LAG working, but they all seem dated, or I was not successful using the same methods.

- 19.07.07: I tried installing kmod-bonding, proto-bonding, mii-tools, etc. and manually tweaking the /etc/rc.local and /etc/config/network files, without any success, since there is no LuCI interface for it.

- 21.02.0-rc3: I tried the same thing here, and also tried using the LuCI interface.

- The first thing I noticed is that the default MTU for the switch device eth0 is 1508 instead of 1500. This can easily be overridden by configuring the interface and manually entering the correct MTU value. Not sure if this is a known bug or not.

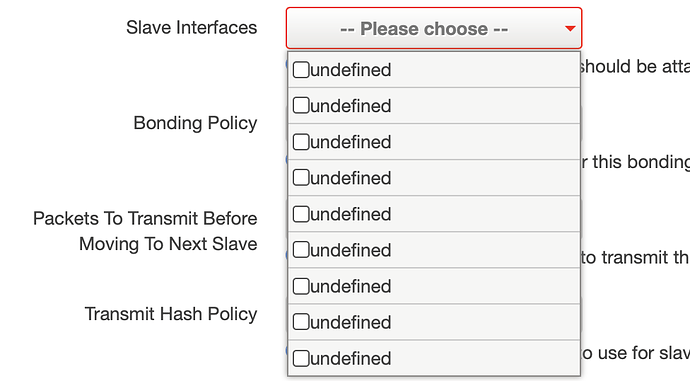

- After installing the bonding packages and rebooting, when I attempt to add a new LAG interface, the slave devices list does not populate with names to select from. You can select two (or more) of the blank entries, save the new LAG interface, manually edit the /etc/config/network file to add the devices, and then manually restart the new LAG interface.

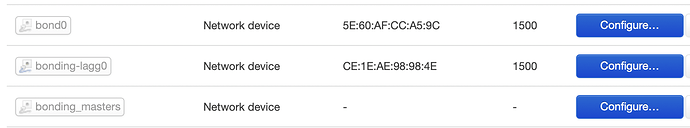

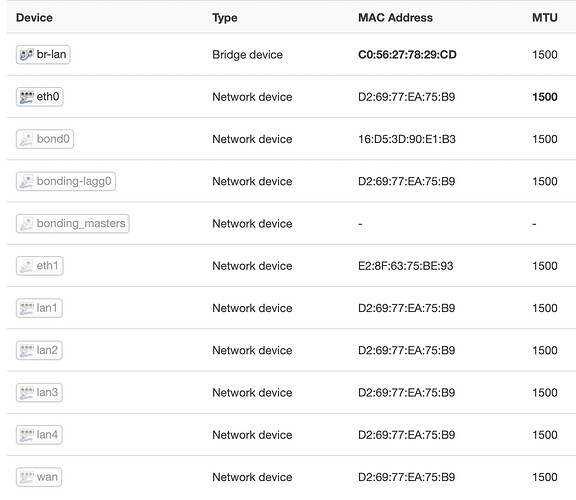

- I noticed that after installing the bonding packages, there are two new devices (bond0 and bonding_masters), which are greyed out until you click configure and save. The third is the manually created bonding-lagg0 interface, which only appears after a reboot following its creation.

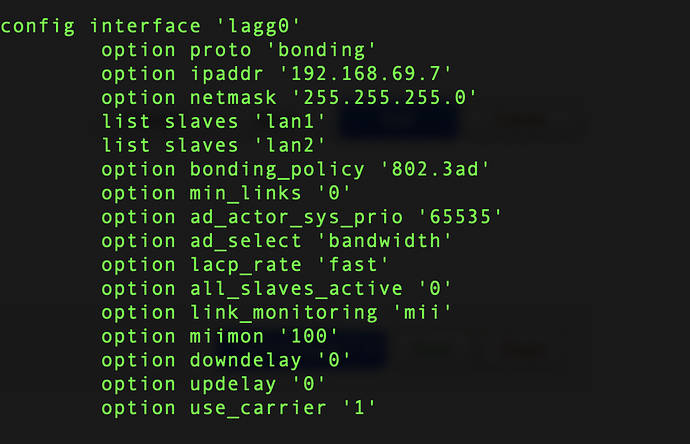

- This results in the following /etc/config/network file:

      config interface 'loopback'
          option device 'lo'
          option proto 'static'
          option ipaddr '127.0.0.1'
          option netmask '255.0.0.0'

      config globals 'globals'
          option ula_prefix 'fd50:a554:79fe::/48'

      config device
          option name 'br-lan'
          option type 'bridge'
          option macaddr 'c0:56:27:78:29:cd'
          list ports 'lan3'
          list ports 'lan4'

      config interface 'lan'
          option device 'br-lan'
          option proto 'static'
          option netmask '255.255.255.0'
          option ip6assign '60'
          option ipaddr '192.168.69.2'
          option gateway '192.168.69.5'
          list dns '192.168.69.5'

      config device
          option name 'eth0'
          option mtu '1500'

      config interface 'lagg0'
          option proto 'bonding'
          option ipaddr '192.168.69.7'
          option netmask '255.255.255.0'
          list slaves 'lan1'
          list slaves 'lan2'
          option bonding_policy '802.3ad'
          option min_links '0'
          option ad_actor_sys_prio '65535'
          option ad_select 'bandwidth'
          option lacp_rate 'fast'
          option all_slaves_active '0'
          option link_monitoring 'mii'
          option miimon '100'
          option downdelay '0'
          option updelay '0'
          option use_carrier '1'

- When I reboot, I get the following console messages:

      [ 15.284672] mvneta f1070000.ethernet eth0: configuring for fixed/rgmii-id link mode
      [ 15.293054] mvneta f1070000.ethernet eth0: Link is Up - 1Gbps/Full - flow control off
      [ 15.302912] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready
      [ 15.314004] mv88e6085 f1072004.mdio-mii:00 lan3: configuring for phy/gmii link mode
      [ 15.328498] 8021q: adding VLAN 0 to HW filter on device lan3
      [ 15.334637] br-lan: port 1(lan3) entered blocking state
      [ 15.339908] br-lan: port 1(lan3) entered disabled state
      [ 15.353321] device lan3 entered promiscuous mode
      [ 15.358027] device eth0 entered promiscuous mode
      [ 15.469607] mv88e6085 f1072004.mdio-mii:00 lan4: configuring for phy/gmii link mode
      [ 15.484302] 8021q: adding VLAN 0 to HW filter on device lan4
      [ 15.503175] br-lan: port 2(lan4) entered blocking state
      [ 15.508451] br-lan: port 2(lan4) entered disabled state
      [ 15.520505] device lan4 entered promiscuous mode
      [ 15.540797] mv88e6085 f1072004.mdio-mii:00: p5: already a member of VLAN 1
      [ 15.603108] bonding: bonding-lagg0 is being created...
      [ 15.606323] mv88e6085 f1072004.mdio-mii:00 lan4: Link is Up - 1Gbps/Full - flow control rx/tx
      [ 15.622705] br-lan: port 2(lan4) entered blocking state
      [ 15.627975] br-lan: port 2(lan4) entered forwarding state
      [ 15.640976] IPv6: ADDRCONF(NETDEV_CHANGE): br-lan: link becomes ready
      [ 16.660987] mv88e6085 f1072004.mdio-mii:00 lan1: configuring for phy/gmii link mode
      [ 16.671707] 8021q: adding VLAN 0 to HW filter on device lan1
      [ 16.684205] bonding-lagg0: (slave lan1): Enslaving as a backup interface with a down link
      [ 17.701219] mv88e6085 f1072004.mdio-mii:00 lan2: configuring for phy/gmii link mode
      [ 17.711499] 8021q: adding VLAN 0 to HW filter on device lan2
      [ 17.717700] bonding-lagg0: (slave lan2): Enslaving as a backup interface with a down link
      [ 17.743692] 8021q: adding VLAN 0 to HW filter on device bonding-lagg0

- When I move my LAN connection from LAN4 to LAN1 (one of the two ports in the LACP bond), I first get a warning that there is no 802.3ad response from the link partner, which seems correct. Once I re-enable LACP on the switch, the bond seems to become active.

      [ 524.852777] mv88e6085 f1072004.mdio-mii:00 lan4: Link is Down
      [ 524.858776] br-lan: port 2(lan4) entered disabled state
      [ 528.368370] mv88e6085 f1072004.mdio-mii:00 lan1: Link is Up - 1Gbps/Full - flow control rx/tx
      [ 528.406056] bonding-lagg0: (slave lan1): link status definitely up, 1000 Mbps full duplex
      [ 528.414261] bonding-lagg0: Warning: No 802.3ad response from the link partner for any adapters in the bond
      [ 528.423974] bonding-lagg0: active interface up!
      [ 528.428732] IPv6: ADDRCONF(NETDEV_CHANGE): bonding-lagg0: link becomes ready
      [ 623.961329] mv88e6085 f1072004.mdio-mii:00 lan1: Link is Down
      [ 624.016656] bonding-lagg0: (slave lan1): link status definitely down, disabling slave
      [ 624.024523] bonding-lagg0: now running without any active interface!
      [ 626.711052] mv88e6085 f1072004.mdio-mii:00 lan1: Link is Up - 1Gbps/Full - flow control off
      [ 626.766641] bonding-lagg0: (slave lan1): link status definitely up, 1000 Mbps full duplex
      [ 626.774854] bonding-lagg0: active interface up!
      [ 654.653535] mv88e6085 f1072004.mdio-mii:00 lan1: Link is Down
      [ 654.726783] bonding-lagg0: (slave lan1): link status definitely down, disabling slave
      [ 654.734650] bonding-lagg0: now running without any active interface!
      [ 657.511193] mv88e6085 f1072004.mdio-mii:00 lan1: Link is Up - 1Gbps/Full - flow control rx/tx
      [ 657.606777] bonding-lagg0: (slave lan1): link status definitely up, 1000 Mbps full duplex
      [ 657.614990] bonding-lagg0: active interface up!

- I also tried the latest daily snapshots, which yield the same results.
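Besides the kernel log, the bonding driver exposes a per-bond status file under /proc/net/bonding/. The helper below is a rough sketch for condensing that file into one line per link; the function name `bond_summary` is made up for illustration, and the default path assumes the bond device is named bonding-lagg0 as in the log output above.

```shell
#!/bin/sh
# Summarise a bonding status file from the Linux bonding driver.
# $1 = path to the status file (defaults to the bond named in this post).
bond_summary() {
    awk -F': ' '
        /^Bonding Mode:/    { print "mode=" $2 }
        /^Slave Interface:/ { slave = $2 }
        /^MII Status:/      {
            # Before the first "Slave Interface" line this is the bond itself.
            if (slave == "") print "bond_mii=" $2
            else             print slave "_mii=" $2
        }
    ' "${1:-/proc/net/bonding/bonding-lagg0}"
}
```

Running `bond_summary` with no argument on the router prints the bonding mode, the MII status of the bond, and the MII status of each slave, which makes it easier to compare the state before and after moving the cable or toggling LACP on the switch.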

QUESTIONS/PROBLEMS

- When I move my LAN connection from LAN4 to LAN1, the router is no longer reachable via ping or the LuCI interface. I was hesitant to remove LAN3 and LAN4 from the BR-LAN bridge, as that is my only recourse to SSH in and make changes if the LACP bond is not working correctly.

- What tweaks do I need to make to my /etc/config/network file so that the router is accessible via the LAN IP address and able to reach my firewall?

- Are there other tweaks to the LAN interface or BR-LAN device that I need to make? I seem to recall reading that some people had to create a VLAN in order to attach interfaces to the LACP bond, but the instructions were never very clear on how to actually do that.

- I noticed that all the LAN[1,2,3,4] MAC addresses are the same as those of the ETH0 and BONDING-LAGG0 devices. Do I need to manually override the ones in the LAG bond? My switch is configured to use MAC+IP.
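For anyone wanting to compare the MAC addresses on their own device, here is a small sketch that reads the standard sysfs `address` file of each network device. The function name `list_macs` is made up for illustration; entries without an `address` file (such as the plain `bonding_masters` file) are skipped.

```shell
#!/bin/sh
# Print "<interface> <mac>" for each network device.
# $1 = sysfs network directory (default /sys/class/net).
list_macs() {
    for dev in "${1:-/sys/class/net}"/*; do
        # Skip entries that are not devices with a readable address file.
        [ -r "$dev/address" ] || continue
        printf '%s %s\n' "${dev##*/}" "$(cat "$dev/address")"
    done
}
```

Piping the result through `sort -k2` groups devices that share an address, so it is easy to see which ports report the same MAC as eth0 and the bond.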