Hi,

For some weeks I have been wondering why, on several mornings around 9am, my router's kernel/system log shows messages like "oom_reaper: reaped process 30245 (dnsmasq)".

I found out that when I open my Taxi App to get a ride to work, several instances of dnsmasq get started, the router runs out of memory, and the OOM killer kills dnsmasq processes.

The problem is reproducible!

The problem is mostly benign: dnsmasq and the system survive the killing of the extra dnsmasq instances, and I am still able to get my cab to work in the morning using the app.

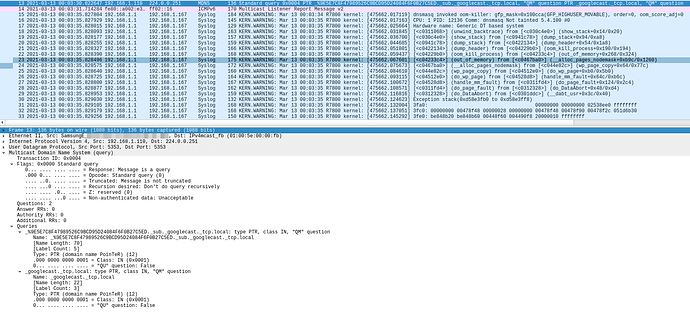

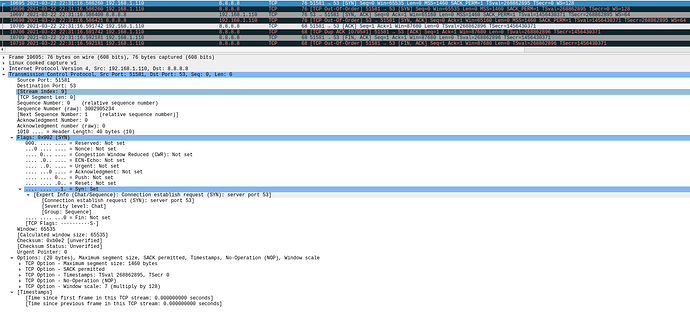

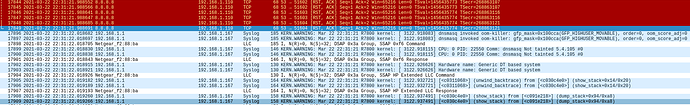

When I open my Taxi App, tcpdump on the LAN interface shows an mDNS message received from my Samsung S7 (192.168.1.110), after which the system starts dumping kernel logs to my remote server (192.168.1.167).

Related syslog dump for the above "Taxi App" opening:

Fri Mar 12 23:56:31 2021 daemon.notice netifd: wan (2137): udhcpc: sending renew to 172.17.0.200

Fri Mar 12 23:56:31 2021 daemon.notice netifd: wan (2137): udhcpc: lease of aaa.bbb.ccc.ddd obtained, lease time 21600

Sat Mar 13 00:03:34 2021 kern.warn kernel: [475662.017119] dnsmasq invoked oom-killer: gfp_mask=0x100cca(GFP_HIGHUSER_MOVABLE), order=0, oom_score_adj=0

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.017163] CPU: 1 PID: 12136 Comm: dnsmasq Not tainted 5.4.100 #0

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.025664] Hardware name: Generic DT based system

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.031845] [<c0311068>] (unwind_backtrace) from [<c030c4e0>] (show_stack+0x14/0x20)

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.036700] [<c030c4e0>] (show_stack) from [<c0941c78>] (dump_stack+0x94/0xa8)

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.044685] [<c0941c78>] (dump_stack) from [<c0422134>] (dump_header+0x54/0x1a8)

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.051801] [<c0422134>] (dump_header) from [<c04229b0>] (oom_kill_process+0x190/0x194)

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.059437] [<c04229b0>] (oom_kill_process) from [<c04233c4>] (out_of_memory+0x268/0x324)

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.067601] [<c04233c4>] (out_of_memory) from [<c0467ba0>] (__alloc_pages_nodemask+0xb9c/0x1260)

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.075673] [<c0467ba0>] (__alloc_pages_nodemask) from [<c044e82c>] (wp_page_copy+0x64/0x77c)

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.084610] [<c044e82c>] (wp_page_copy) from [<c04512e0>] (do_wp_page+0xb0/0x5b0)

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.093115] [<c04512e0>] (do_wp_page) from [<c04528d8>] (handle_mm_fault+0x64c/0xb6c)

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.100672] [<c04528d8>] (handle_mm_fault) from [<c0311fd4>] (do_page_fault+0x124/0x2c4)

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.108571] [<c0311fd4>] (do_page_fault) from [<c0312328>] (do_DataAbort+0x48/0xd4)

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.116816] [<c0312328>] (do_DataAbort) from [<c0301ddc>] (__dabt_usr+0x3c/0x40)

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.124623] Exception stack(0xd58e3fb0 to 0xd58e3ff8)

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.132004] 3fa0: 00000000 00000000 02538ee0 ffffffff

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.137051] 3fc0: 00000000 00478f48 00000028 00000000 00478f48 00478f90 00478f2c 051d6b30

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.145292] 3fe0: be848b20 be848b60 00448f60 004490f8 20000010 ffffffff

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.153654] Mem-Info:

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.160457] active_anon:68806 inactive_anon:738 isolated_anon:0

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.160457] active_file:15 inactive_file:13 isolated_file:17

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.160457] unevictable:0 dirty:40 writeback:3 unstable:0

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.160457] slab_reclaimable:2222 slab_unreclaimable:3585

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.160457] mapped:513 shmem:3154 pagetables:318 bounce:0

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.160457] free:3922 free_pcp:0 free_cma:0

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.173293] Node 0 active_anon:275224kB inactive_anon:2952kB active_file:60kB inactive_file:52kB unevictable:0kB isolated(anon):0kB isolated(file):68kB mapped:2052kB dirty:160kB writeback:12kB shmem:12616kB writeback_tmp:0kB unstable:0kB all_unreclaimable? no

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.196380] Normal free:15688kB min:16384kB low:20480kB high:24576kB active_anon:275224kB inactive_anon:2952kB active_file:80kB inactive_file:88kB unevictable:0kB writepending:172kB present:424960kB managed:410656kB mlocked:0kB kernel_stack:856kB pagetables:1272kB bounce:0kB free_pcp:0kB local_pcp:0kB free_cma:0kB

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.224233] lowmem_reserve[]: 0 0 0

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.246474] Normal: 129*4kB (UME) 22*8kB (UME) 24*16kB (UE) 7*32kB (ME) 12*64kB (UE) 6*128kB (UM) 17*256kB (UE) 9*512kB (U) 1*1024kB (M) 1*2048kB (M) 0*4096kB = 14868kB

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.250036] 3213 total pagecache pages

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.265030] 0 pages in swap cache

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.268685] Swap cache stats: add 0, delete 0, find 0/0

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.272098] Free swap = 0kB

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.277620] Total swap = 0kB

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.280429] 106240 pages RAM

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.283380] 0 pages HighMem/MovableOnly

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.286333] 3576 pages reserved

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.290397] Tasks state (memory values in pages):

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.293539] [ pid ] uid tgid total_vm rss pgtables_bytes swapents oom_score_adj name

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.298219] [ 180] 81 180 260 23 8192 0 0 ubusd

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.306742] [ 181] 0 181 176 10 8192 0 0 askfirst

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.315142] [ 225] 0 225 204 12 6144 0 0 urngd

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.323736] [ 685] 514 685 267 42 8192 0 0 logd

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.331888] [ 686] 0 686 293 23 10240 0 0 logread

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.340134] [ 738] 0 738 500 72 10240 0 0 rpcd

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.348632] [ 807] 323 807 252 33 8192 0 0 chronyd

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.356655] [ 1025] 0 1025 215 11 8192 0 0 dropbear

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.365221] [ 1145] 0 1145 1141 74 10240 0 0 hostapd

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.373656] [ 1146] 0 1146 1117 52 10240 0 0 wpa_supplicant

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.382152] [ 1208] 0 1208 393 37 10240 0 0 netifd

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.390931] [ 1360] 0 1360 313 30 10240 0 0 odhcpd

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.399241] [ 1454] 0 1454 270 11 6144 0 0 crond

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.407582] [ 1518] 0 1518 1536 655 14336 0 0 uhttpd

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.415856] [ 1645] 0 1645 1217 213 12288 0 0 collectd

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.424171] [ 1728] 65534 1728 1136 421 12288 0 0 https-dns-proxy

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.432855] [ 1729] 65534 1729 1149 416 12288 0 0 https-dns-proxy

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.442039] [ 2137] 0 2137 269 10 8192 0 0 udhcpc

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.451161] [ 2167] 0 2167 211 14 10240 0 0 odhcp6c

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.459222] [ 31838] 453 31838 13374 12769 61440 0 0 dnsmasq

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.467907] [ 32419] 0 32419 229 22 8192 0 0 dropbear

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.476274] [ 32427] 0 32427 271 13 10240 0 0 ash

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.484747] [ 12129] 0 12129 1188 565 10240 0 0 tcpdump

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.492929] [ 12136] 453 12136 13374 12768 61440 0 0 dnsmasq

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.501332] [ 12137] 453 12137 13374 12768 61440 0 0 dnsmasq

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.509767] [ 12138] 453 12138 13374 12768 61440 0 0 dnsmasq

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.518171] [ 12139] 453 12139 13374 12768 61440 0 0 dnsmasq

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.526532] [ 12140] 453 12140 13374 12768 61440 0 0 dnsmasq

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.535011] [ 12141] 453 12141 13374 12768 61440 0 0 dnsmasq

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.543443] oom-kill:constraint=CONSTRAINT_NONE,nodemask=(null),cpuset=/,mems_allowed=0,global_oom,task_memcg=/,task=dnsmasq,pid=31838,uid=453

Sat Mar 13 00:03:35 2021 kern.err kernel: [475662.551857] Out of memory: Killed process 31838 (dnsmasq) total-vm:53496kB, anon-rss:51072kB, file-rss:4kB, shmem-rss:0kB, UID:453 pgtables:60kB oom_score_adj:0

Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.578465] oom_reaper: reaped process 31838 (dnsmasq), now anon-rss:0kB, file-rss:0kB, shmem-rss:0kB

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.940211] procd invoked oom-killer: gfp_mask=0x100cca(GFP_HIGHUSER_MOVABLE), order=0, oom_score_adj=0

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.940244] CPU: 0 PID: 1 Comm: procd Not tainted 5.4.100 #0

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.948747] Hardware name: Generic DT based system

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.954403] [<c0311068>] (unwind_backtrace) from [<c030c4e0>] (show_stack+0x14/0x20)

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.959092] [<c030c4e0>] (show_stack) from [<c0941c78>] (dump_stack+0x94/0xa8)

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.967077] [<c0941c78>] (dump_stack) from [<c0422134>] (dump_header+0x54/0x1a8)

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.974191] [<c0422134>] (dump_header) from [<c04229b0>] (oom_kill_process+0x190/0x194)

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.981828] [<c04229b0>] (oom_kill_process) from [<c04233c4>] (out_of_memory+0x268/0x324)

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.989992] [<c04233c4>] (out_of_memory) from [<c0467ba0>] (__alloc_pages_nodemask+0xb9c/0x1260)

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475662.998064] [<c0467ba0>] (__alloc_pages_nodemask) from [<c041dd08>] (pagecache_get_page+0x120/0x34c)

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.007003] [<c041dd08>] (pagecache_get_page) from [<c041e504>] (filemap_fault+0x5d0/0x94c)

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.016206] [<c041e504>] (filemap_fault) from [<c044e5f0>] (__do_fault+0x40/0x12c)

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.024709] [<c044e5f0>] (__do_fault) from [<c0452a74>] (handle_mm_fault+0x7e8/0xb6c)

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.032090] [<c0452a74>] (handle_mm_fault) from [<c0311fd4>] (do_page_fault+0x124/0x2c4)

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.040075] [<c0311fd4>] (do_page_fault) from [<c03123fc>] (do_PrefetchAbort+0x48/0xa4)

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.048321] [<c03123fc>] (do_PrefetchAbort) from [<c0302088>] (ret_from_exception+0x0/0x18)

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.056476] Exception stack(0xd949dfb0 to 0xd949dff8)

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.064897] dfa0: 00000000 b6f7f268 0000000a 00000993

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.069855] dfc0: b6f7f208 b6f7f268 b6f7f010 00000000 ffffffff 00000001 0000201d 00000001

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.078099] dfe0: 00000004 bea0ee38 b6fa3f9c b6f6a79c 00000010 ffffffff

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.086494] Mem-Info:

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.093272] active_anon:68726 inactive_anon:738 isolated_anon:0

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.093272] active_file:33 inactive_file:60 isolated_file:0

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.093272] unevictable:0 dirty:10 writeback:2 unstable:0

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.093272] slab_reclaimable:2222 slab_unreclaimable:3583

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.093272] mapped:515 shmem:3154 pagetables:318 bounce:0

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.093272] free:4210 free_pcp:3 free_cma:0

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.106111] Node 0 active_anon:274904kB inactive_anon:2952kB active_file:340kB inactive_file:536kB unevictable:0kB isolated(anon):0kB isolated(file):0kB mapped:2060kB dirty:40kB writeback:8kB shmem:12616kB writeback_tmp:0kB unstable:0kB all_unreclaimable? no

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.128763] Normal free:16244kB min:16384kB low:20480kB high:24576kB active_anon:274760kB inactive_anon:2952kB active_file:0kB inactive_file:296kB unevictable:0kB writepending:152kB present:424960kB managed:410656kB mlocked:0kB kernel_stack:856kB pagetables:1272kB bounce:0kB free_pcp:248kB local_pcp:0kB free_cma:0kB

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.156869] lowmem_reserve[]: 0 0 0

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.179114] Normal: 89*4kB (UME) 17*8kB (E) 24*16kB (UE) 72*32kB (UME) 17*64kB (UME) 5*128kB (UM) 17*256kB (UE) 10*512kB (UM) 0*1024kB 1*2048kB (M) 0*4096kB = 16428kB

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.182787] 3387 total pagecache pages

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.197513] 0 pages in swap cache

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.201260] Swap cache stats: add 0, delete 0, find 0/0

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.204750] Free swap = 0kB

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.210258] Total swap = 0kB

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.213065] 106240 pages RAM

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.216017] 0 pages HighMem/MovableOnly

Sat Mar 13 00:03:35 2021 kern.warn kernel: [475663.219036] 3576 pages reserved

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.222962] Tasks state (memory values in pages):

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.226176] [ pid ] uid tgid total_vm rss pgtables_bytes swapents oom_score_adj name

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.230861] [ 180] 81 180 260 23 8192 0 0 ubusd

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.239440] [ 181] 0 181 176 10 8192 0 0 askfirst

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.247782] [ 225] 0 225 204 12 6144 0 0 urngd

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.256309] [ 685] 514 685 267 48 8192 0 0 logd

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.264527] [ 686] 0 686 293 23 10240 0 0 logread

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.272776] [ 738] 0 738 500 72 10240 0 0 rpcd

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.281270] [ 807] 323 807 252 33 8192 0 0 chronyd

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.289368] [ 1025] 0 1025 215 11 8192 0 0 dropbear

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.297858] [ 1145] 0 1145 1141 74 10240 0 0 hostapd

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.306218] [ 1146] 0 1146 1117 52 10240 0 0 wpa_supplicant

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.314790] [ 1208] 0 1208 393 37 10240 0 0 netifd

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.323568] [ 1360] 0 1360 313 30 10240 0 0 odhcpd

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.331878] [ 1454] 0 1454 270 11 6144 0 0 crond

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.340220] [ 1518] 0 1518 1536 655 14336 0 0 uhttpd

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.348563] [ 1645] 0 1645 1217 213 12288 0 0 collectd

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.356742] [ 1728] 65534 1728 1136 421 12288 0 0 https-dns-proxy

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.365494] [ 1729] 65534 1729 1149 416 12288 0 0 https-dns-proxy

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.374683] [ 2137] 0 2137 269 10 8192 0 0 udhcpc

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.383799] [ 2167] 0 2167 211 38 10240 0 0 odhcp6c

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.391863] [ 32419] 0 32419 229 22 8192 0 0 dropbear

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.400544] [ 32427] 0 32427 271 13 10240 0 0 ash

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.408980] [ 12129] 0 12129 1188 565 10240 0 0 tcpdump

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.417133] [ 12136] 453 12136 13374 12783 61440 0 0 dnsmasq

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.425490] [ 12137] 453 12137 13374 12768 61440 0 0 dnsmasq

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.433975] [ 12138] 453 12138 13374 12783 61440 0 0 dnsmasq

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.442407] [ 12139] 453 12139 13374 12768 61440 0 0 dnsmasq

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.450809] [ 12140] 453 12140 13374 12768 61440 0 0 dnsmasq

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.459243] [ 12141] 453 12141 13374 12768 61440 0 0 dnsmasq

Sat Mar 13 00:03:35 2021 kern.info kernel: [475663.467647] oom-kill:constraint=CONSTRAINT_NONE,nodemask=(null),cpuset=/,mems_allowed=0,global_oom,task_memcg=/,task=dnsmasq,pid=12141,uid=453

Sat Mar 13 00:03:35 2021 kern.err kernel: [475663.476027] Out of memory: Killed process 12141 (dnsmasq) total-vm:53496kB, anon-rss:51072kB, file-rss:0kB, shmem-rss:0kB, UID:453 pgtables:60kB oom_score_adj:0
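
To make the "Tasks state" tables above easier to read, here is a minimal Python sketch that sums the `rss` column per process name. It is meant to run on the machine that receives the syslog, not necessarily on the router. The 4 kB page size is an assumption (typical for 32-bit ARM), and `rss_by_name`, `ROW` and `PAGE_KB` are names made up for this example. The three sample rows are copied from the first dump.

```python
import re
from collections import defaultdict

PAGE_KB = 4  # assumption: 4 kB pages, typical for 32-bit ARM

# Matches the payload of a "Tasks state" row:
# [ pid ] uid tgid total_vm rss pgtables_bytes swapents oom_score_adj name
ROW = re.compile(
    r"\[\s*(\d+)\]\s+(\d+)\s+(\d+)\s+(\d+)\s+(\d+)\s+(\d+)\s+(\d+)\s+(-?\d+)\s+(\S+)\s*$"
)

def rss_by_name(lines):
    """Return {process name: (row count, total RSS in kB)} for the task rows found."""
    totals = defaultdict(lambda: [0, 0])
    for line in lines:
        m = ROW.search(line)
        if m:
            name, rss_pages = m.group(9), int(m.group(5))
            totals[name][0] += 1
            totals[name][1] += rss_pages * PAGE_KB
    return {name: tuple(v) for name, v in totals.items()}

# Three rows copied from the first dump above.
sample = [
    "Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.459222] [ 31838] 453 31838 13374 12769 61440 0 0 dnsmasq",
    "Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.492929] [ 12136] 453 12136 13374 12768 61440 0 0 dnsmasq",
    "Sat Mar 13 00:03:35 2021 kern.info kernel: [475662.407582] [ 1518] 0 1518 1536 655 14336 0 0 uhttpd",
]
print(rss_by_name(sample))
```

Applied by hand to the full first dump, the seven dnsmasq rows add up to 89377 pages, roughly 357508 kB under the 4 kB assumption, against `managed:410656kB`. Note that RSS counts pages still shared between forked processes once per process, so this sum overstates real usage; the kernel's own `active_anon:275224kB` figure is the more reliable number.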

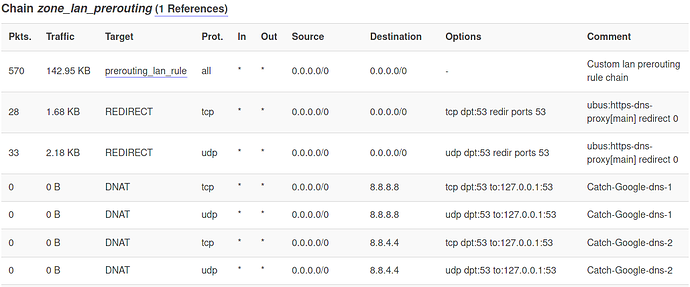

I use https-dns-proxy, so I cannot say much about what is going on on the WAN side, but at least I cannot find any traffic to a Cloudflare destination IP on port 443 in a WAN-side tcpdump taken at the same time.

Dnsmasq version in use:

root@R7800:~# opkg list-installed |grep dnsmasq

dnsmasq-full - 2.84-1

System info:

root@R7800:~# ubus call system board

{

"kernel": "5.4.100",

"hostname": "R7800",

"system": "ARMv7 Processor rev 0 (v7l)",

"model": "Netgear Nighthawk X4S R7800",

"board_name": "netgear,r7800",

"release": {

"distribution": "OpenWrt",

"version": "SNAPSHOT",

"revision": "r15992+17-cbcac4fde8",

"target": "ipq806x/generic",

"description": "OpenWrt SNAPSHOT r15992+17-cbcac4fde8"

}

}

dnsmasq config:

config dnsmasq

option domainneeded '1'

option localise_queries '1'

option rebind_protection '1'

option rebind_localhost '1'

option local '/lan/'

option domain 'lan'

option expandhosts '1'

option authoritative '1'

option readethers '1'

option leasefile '/tmp/dhcp.leases'

option localservice '1'

option confdir '/tmp/dnsmasq.d'

list server '/0.sg.pool.ntp.org/1.1.1.1'

list server '/1.sg.pool.ntp.org/1.0.0.1'

list server '/2.sg.pool.ntp.org/1.1.1.1'

list server '/3.sg.pool.ntp.org/1.0.0.1'

# list server '127.0.0.1#5453'

list server '::1#5053'

list server '127.0.0.1#5054'

option noresolv '1'

option dnssec '1'

option nonegcache '1'

option cachesize '1000'

option ednspacket_max '1280'

list doh_backup_server '1.1.1.1'

list doh_backup_server '1.0.0.1'

https-dns-proxy config:

root@R7800:/etc/config# cat https-dns-proxy

config main 'config'

option update_dnsmasq_config '-'

config https-dns-proxy

option bootstrap_dns '2606:4700:4700::1111,2606:4700:4700::1001'

option resolver_url 'https://cloudflare-dns.com/dns-query'

option listen_addr '::1'

option listen_port '5053'

option user 'nobody'

option group 'nogroup'

config https-dns-proxy

option bootstrap_dns '1.1.1.1,1.0.0.1'

option resolver_url 'https://cloudflare-dns.com/dns-query'

option listen_addr '127.0.0.1'

option listen_port '5054'

option user 'nobody'

option group 'nogroup'

Any ideas on how to troubleshoot further, to find out whether this is a dnsmasq bug or my config misbehaving when I open my Taxi App?
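
One thing I could try is to watch how the number of dnsmasq processes changes while I open the app. Below is a minimal sketch of that idea, assuming a Python 3 interpreter is installed on the router (it is not part of my current setup). `count_processes` and `watch` are names made up for this example; the sketch relies only on the standard Linux `/proc/<pid>/comm` files.

```python
import os
import time

def count_processes(name, proc_root="/proc"):
    """Count running processes whose command name (/proc/<pid>/comm) equals name."""
    count = 0
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue  # not a PID directory
        try:
            with open(os.path.join(proc_root, entry, "comm")) as f:
                if f.read().strip() == name:
                    count += 1
        except OSError:
            # The process exited between listdir() and open(); skip it.
            continue
    return count

def watch(name="dnsmasq", interval=0.5, samples=20):
    """Print a timestamped process count every `interval` seconds."""
    for _ in range(samples):
        print(time.strftime("%H:%M:%S"), name, count_processes(name))
        time.sleep(interval)
```

Calling `watch()` just before opening the app should show whether the count jumps from one to several at the moment the mDNS message arrives. The half-second interval is an arbitrary choice, so short-lived processes could still be missed.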