I'm trying to get my head around something. I've got a symmetric 500Mbit/s fiber connection, and I've set the upload and download limits in SQM to 425Mbit/s.

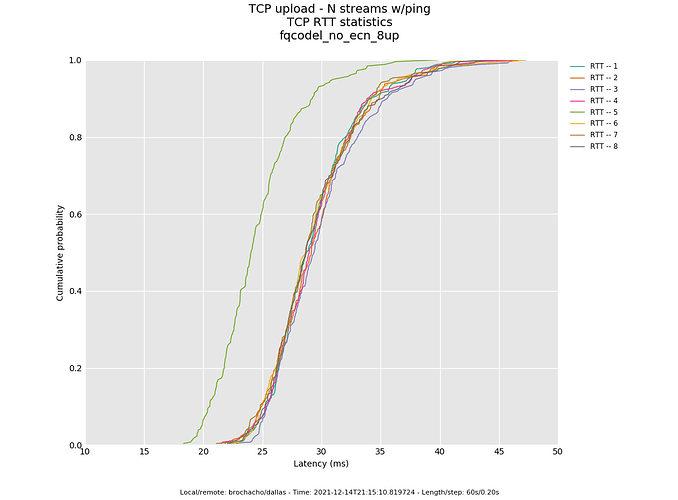

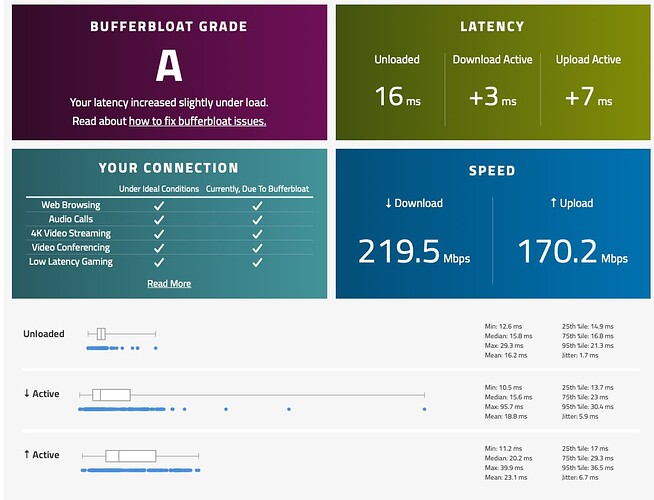

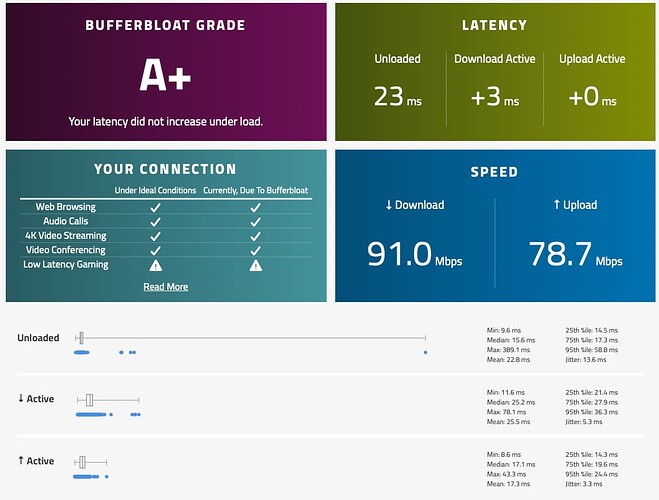

I'm running a 22.03-RC1 build with Felix's recent WiFi fixes applied (AQL and multicast, airtime scheduler and airtime fairness improvements). Via WiFi I get great bufferbloat results:

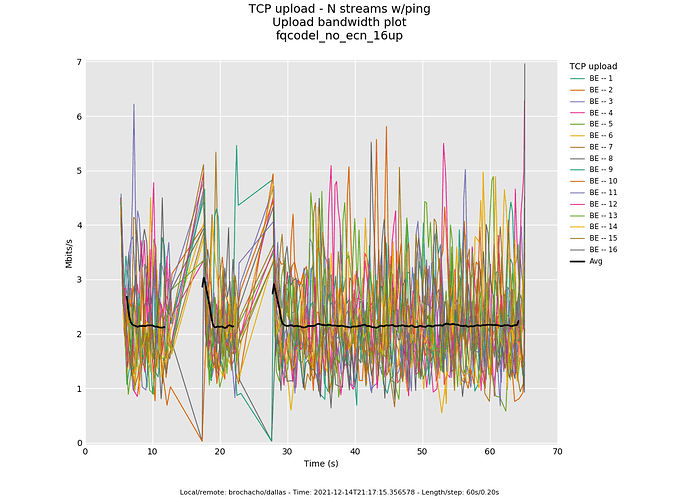

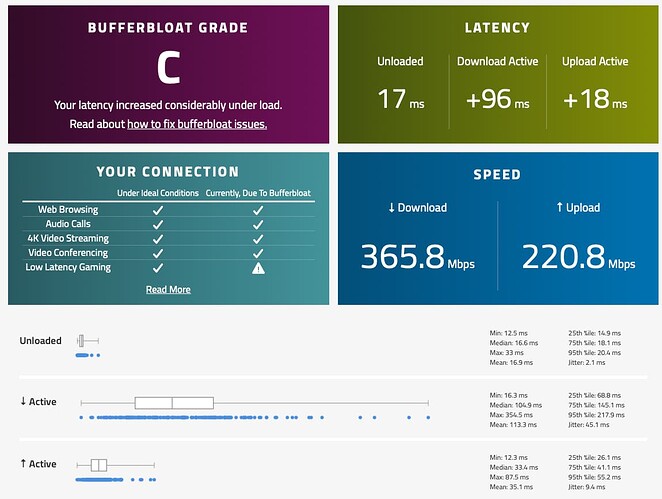

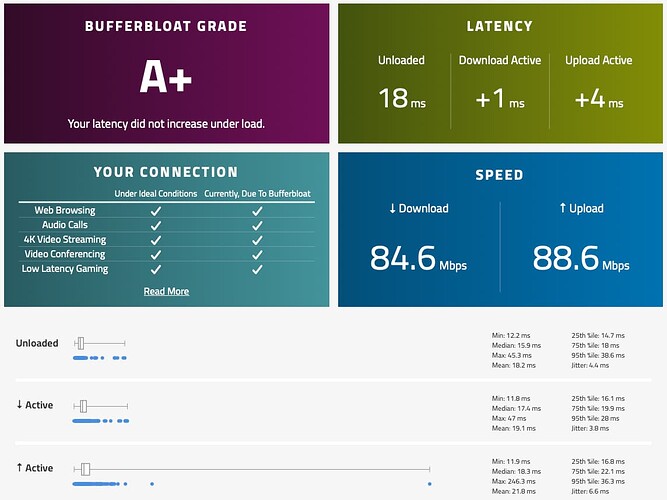

Via a wired connection the results are not so great:

I'm using NSS fq_codel:

    root@OpenWrt:~# cat /etc/config/sqm

    config queue 'eth1'
            option debug_logging '1'
            option verbosity '8'
            option linklayer 'ethernet'
            option qdisc 'fq_codel'
            option download '425000'
            option upload '425000'
            option overhead '18'
            option qdisc_advanced '1'
            option squash_dscp '1'
            option squash_ingress '1'
            option ingress_ecn 'ECN'
            option egress_ecn 'ECN'
            option linklayer_advanced '1'
            option tcMTU '2047'
            option tcTSIZE '128'
            option tcMPU '64'
            option linklayer_adaptation_mechanism 'default'
            option script 'nss.qos'
            option enabled '1'
            option interface 'eth0'

And it is active:

    root@OpenWrt:~# tc -s qdisc
    qdisc noqueue 0: dev lo root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc nsstbl 1: dev eth0 root refcnt 2 buffer/maxburst 53125b rate 425Mbit mtu 1514b accel_mode 0
     Sent 8752044091 bytes 9276154 pkt (dropped 0, overlimits 2898 requeues 0)
     backlog 0b 0p requeues 0
    qdisc nssfq_codel 10: dev eth0 parent 1: target 5ms limit 1001p interval 100ms flows 1024 quantum 1514 set_default accel_mode 0
     Sent 8752044328 bytes 9276156 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
      maxpacket 1518 drop_overlimit 0 new_flow_count 4588352 ecn_mark 0
      new_flows_len 0 old_flows_len 0
    qdisc fq_codel 0: dev eth1 root refcnt 2 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64
     Sent 18709169309 bytes 17844727 pkt (dropped 0, overlimits 0 requeues 2)
     backlog 0b 0p requeues 2
      maxpacket 5741 drop_overlimit 0 new_flow_count 82686 ecn_mark 0
      new_flows_len 0 old_flows_len 0
    qdisc noqueue 0: dev br-guest root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev eth1.3 root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev br-iot root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev eth1.5 root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev br-lan root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev eth1.1 root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev eth0.4 root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev eth0.6 root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc fq_codel 0: dev pppoe-wan root refcnt 2 limit 10240p flows 1024 quantum 1518 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64
     Sent 8511214852 bytes 9265927 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
      maxpacket 61172 drop_overlimit 0 new_flow_count 19539 ecn_mark 0
      new_flows_len 0 old_flows_len 0
    qdisc noqueue 0: dev wlan1 root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev wlan0 root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev wlan1-1 root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev wlan1-2 root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev wlan0-1 root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc nsstbl 1: dev nssifb root refcnt 2 buffer/maxburst 53125b rate 425Mbit mtu 1514b accel_mode 0
     Sent 23963963114 bytes 19888608 pkt (dropped 53469, overlimits 66570 requeues 0)
     backlog 0b 0p requeues 0
    qdisc nssfq_codel 10: dev nssifb parent 1: target 5ms limit 1001p interval 100ms flows 1024 quantum 1514 set_default accel_mode 0
     Sent 23963963298 bytes 19888610 pkt (dropped 53469, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
      maxpacket 1518 drop_overlimit 50945 new_flow_count 6646072 ecn_mark 0
      new_flows_len 0 old_flows_len 0
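
To put the drop counters in that output into perspective, here is a rough Python sketch that turns `tc -s qdisc` text into a "drops per sent packet" ratio for each qdisc. This is not part of my router setup: the function name `drop_ratios`, the regular expressions, and the trimmed `SAMPLE` string are all just for illustration, and the parsing assumes the stats lines look exactly like the ones pasted here.

```python
import re

# Header line, e.g. "qdisc nssfq_codel 10: dev nssifb parent 1: ..."
QDISC_RE = re.compile(r"^qdisc (\S+) (\S+) dev (\S+)")
# Stats line, e.g. "Sent 123 bytes 45 pkt (dropped 6, overlimits 7 requeues 0)"
SENT_RE = re.compile(r"^Sent (\d+) bytes (\d+) pkt \(dropped (\d+), overlimits (\d+)")

# Two entries copied from the output above (assumed layout of `tc -s qdisc`).
SAMPLE = """\
qdisc nsstbl 1: dev eth0 root refcnt 2 buffer/maxburst 53125b rate 425Mbit mtu 1514b accel_mode 0
 Sent 8752044091 bytes 9276154 pkt (dropped 0, overlimits 2898 requeues 0)
 backlog 0b 0p requeues 0
qdisc nssfq_codel 10: dev nssifb parent 1: target 5ms limit 1001p interval 100ms flows 1024 quantum 1514 set_default accel_mode 0
 Sent 23963963298 bytes 19888610 pkt (dropped 53469, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
"""


def drop_ratios(tc_output):
    """Return {(qdisc_kind, device): dropped / sent_packets} for qdiscs that sent traffic."""
    ratios = {}
    current = None  # (kind, device) of the most recent "qdisc ..." header
    for raw in tc_output.splitlines():
        line = raw.strip()
        header = QDISC_RE.match(line)
        if header:
            current = (header.group(1), header.group(3))
            continue
        stats = SENT_RE.match(line)
        if stats and current is not None:
            sent_pkts = int(stats.group(2))
            dropped = int(stats.group(3))
            if sent_pkts:  # skip idle qdiscs to avoid dividing by zero
                ratios[current] = dropped / sent_pkts
            current = None
    return ratios


if __name__ == "__main__":
    for (kind, dev), ratio in drop_ratios(SAMPLE).items():
        print(f"{kind:12s} {dev:8s} {ratio:.4%}")
```

For the numbers above this works out to roughly 0.27% drops on `nssifb` (which I assume is the ingress/download side) and no drops at all on `eth0`.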

Just to try something, I set the upload and download limits to 100Mbit/s, and via WiFi it still looks great:

Lowering the limits makes a much bigger difference via a wired connection:

I think I need to get in touch with my ISP about the advertised 500Mbit/s speed. But I don't really understand why, with the higher upload/download limits, I get better results against bufferbloat via WiFi than via a wired connection.