I have a Linksys WRT1200AC running “LEDE Reboot 17.01.4 r3560-79f57e422d”. It is connected to an ADSL modem which is in “bridge mode”, so that the LEDE router connects to the WAN in PPPoE mode.

I have tested bufferbloat with IPv4 and IPv6 (I have native IPv6 connectivity) and get these results…

IPv4:

IPv6:

As can be seen, there is significant bufferbloat on upload but not on download.

I have enabled SQM as detailed in this guide:

I chose 1800 kbps for my download link (which is about 90% of the capacity measured by http://www.dslreports.com/speedtest). My measured upload varies wildly, between about 55 and 300 kbps, so I tried setting the upload link to 80 kbps (and then, when that didn’t work, 50 kbps). I used the recommended settings in the above guide for everything else, including the ADSL overhead and the cake queue discipline.
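To get a feel for what these upload rates mean on an ATM link, here is a minimal Python sketch. It assumes the usual ATM framing (each 53-byte cell carries 48 bytes of payload, with the last cell padded) and uses the 44-byte overhead from my settings. The function names are made up for illustration, and the 1492-byte packet size is an assumption based on a typical PPPoE MTU.

```python
ATM_CELL_BYTES = 53      # size of one ATM cell on the wire
ATM_PAYLOAD_BYTES = 48   # payload bytes carried per cell


def atm_wire_bytes(packet_bytes, overhead_bytes=44):
    """Bytes occupied on the wire once the packet is split into padded ATM cells."""
    total = packet_bytes + overhead_bytes
    # Integer ceiling division: a partly filled last cell still costs a full cell.
    cells = (total + ATM_PAYLOAD_BYTES - 1) // ATM_PAYLOAD_BYTES
    return cells * ATM_CELL_BYTES


def serialization_ms(wire_bytes, rate_kbps):
    """Time in milliseconds to send wire_bytes at rate_kbps (kilobits per second)."""
    return wire_bytes * 8 / rate_kbps


if __name__ == "__main__":
    wire = atm_wire_bytes(1492)  # assumed full-size PPPoE packet
    for rate in (80, 50):        # the two upload rates I tried
        print(f"{rate} kbps: {wire} bytes on the wire, "
              f"{serialization_ms(wire, rate):.0f} ms per packet")
```

If those assumptions hold, a single full-size packet occupies the upload link for well over 100 ms at either rate, so some added latency under load may be unavoidable at these speeds.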

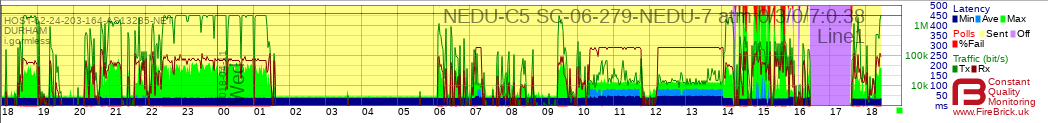

With SQM enabled I get the following results under IPv4…

As you can see, this fails to fix the bufferbloat on upload and in fact introduces new bufferbloat on download.

With SQM enabled I am unable to run the tests under IPv6, as the latency is so large that the bufferbloat test refuses to run.

All the tests were done on a wired connection (wireless completely disabled), with no other clients using the connection.

Changing the queue discipline to the old default of fq_codel does not help, nor does changing the upload and download values.

I followed the troubleshooting guide at https://openwrt.org/docs/guide-user/network/traffic-shaping/sqm-details#troubleshooting_sqm to produce the console log below.

Any help at all would be greatly appreciated. I am desperate to have a connection which will allow simultaneous upload and download, which my current setup (with or without SQM) does not.

CONSOLE LOG…

root@gw:~# cat /etc/config/sqm

config queue 'eth1'

option qdisc_advanced '0'

option debug_logging '0'

option verbosity '5'

option linklayer 'atm'

option overhead '44'

option interface 'pppoe-wan'

option qdisc 'cake'

option script 'piece_of_cake.qos'

option download '1880'

option enabled '1'

option upload '80'

root@gw:~# ifstatus wan

{

"up": true,

"pending": false,

"available": true,

"autostart": true,

"dynamic": false,

"uptime": 110,

"l3_device": "pppoe-wan",

"proto": "pppoe",

"device": "eth1",

"updated": [

"addresses",

"routes"

],

"metric": 0,

"dns_metric": 0,

"delegation": true,

"ipv4-address": [

{

"address": “REDACTED”,

"mask": 32

}

],

"ipv6-address": [

{

"address": "fe80::7dcb:8a:655b:25b5",

"mask": 128

}

],

"ipv6-prefix": [

],

"ipv6-prefix-assignment": [

],

"route": [

{

"target": "0.0.0.0",

"mask": 0,

"nexthop": “REDACTED”,

"source": "0.0.0.0\/0"

}

],

"dns-server": [

"217.169.20.20",

"217.169.20.21"

],

"dns-search": [

],

"inactive": {

"ipv4-address": [

],

"ipv6-address": [

],

"route": [

],

"dns-server": [

],

"dns-search": [

]

},

"data": {

}

}
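As far as I understand, the point of the ifstatus output above is to check that SQM is attached to the layer-3 device of the WAN link. A minimal Python sketch of that check, using a trimmed copy of the JSON and the `interface` value from /etc/config/sqm (the helper name `sqm_interface_matches` is hypothetical):

```python
import json

# Trimmed copy of the `ifstatus wan` output shown above.
IFSTATUS_WAN = """
{
    "up": true,
    "l3_device": "pppoe-wan",
    "proto": "pppoe",
    "device": "eth1"
}
"""


def sqm_interface_matches(ifstatus_json, sqm_interface):
    """Return True if the link is up and SQM is set on its layer-3 device."""
    status = json.loads(ifstatus_json)
    return bool(status.get("up", False)) and status.get("l3_device") == sqm_interface


if __name__ == "__main__":
    # 'pppoe-wan' is the `option interface` value from /etc/config/sqm.
    print(sqm_interface_matches(IFSTATUS_WAN, "pppoe-wan"))
```

In my case the SQM instance is on pppoe-wan, not on the underlying eth1, even though the config section happens to be named 'eth1'.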

root@gw:~# SQM_DEBUG=1 SQM_VERBOSITY_MAX=8 /etc/init.d/sqm stop ; SQM_DEBUG=1 SQM_VERBOSITY_MAX=8 /etc/init.d/sqm start

SQM: Stopping SQM on pppoe-wan

SQM: ifb associated with interface pppoe-wan:

SQM: Currently no ifb is associated with pppoe-wan, this is normal during starting of the sqm system.

SQM: /usr/lib/sqm/stop-sqm: ifb4pppoe-wan shaper deleted

SQM: /usr/lib/sqm/stop-sqm: ifb4pppoe-wan interface deleted

SQM: Starting SQM script: piece_of_cake.qos on pppoe-wan, in: 1880 Kbps, out: 80 Kbps

SQM: QDISC cake is useable.

SQM: Starting piece_of_cake.qos

SQM: ifb associated with interface pppoe-wan:

SQM: Currently no ifb is associated with pppoe-wan, this is normal during starting of the sqm system.

SQM: egress

SQM: STAB: stab mtu 2047 tsize 512 mpu 0 overhead 44 linklayer atm

SQM: egress shaping activated

SQM: QDISC ingress is useable.

SQM: ingress

SQM: STAB: stab mtu 2047 tsize 512 mpu 0 overhead 44 linklayer atm

SQM: ingress shaping activated

SQM: piece_of_cake.qos was started on pppoe-wan successfully

root@gw:~# cat /var/run/sqm/*debug.log

Wed Apr 11 15:13:19 GMT 2018: Starting.

Starting SQM script: piece_of_cake.qos on pppoe-wan, in: 1880 Kbps, out: 80 Kbps

Failed to find act_ipt. Maybe it is a built in module ?

module is already loaded - sch_cake

module is already loaded - sch_ingress

module is already loaded - act_mirred

module is already loaded - cls_fw

module is already loaded - cls_flow

module is already loaded - cls_u32

module is already loaded - sch_htb

module is already loaded - sch_hfsc

/sbin/ip link add name TMP_IFB_4_SQM type ifb

/usr/sbin/tc qdisc replace dev TMP_IFB_4_SQM root cake

QDISC cake is useable.

/sbin/ip link set dev TMP_IFB_4_SQM down

/sbin/ip link delete TMP_IFB_4_SQM type ifb

Starting piece_of_cake.qos

/usr/sbin/tc -p filter show parent ffff: dev pppoe-wan

ifb associated with interface pppoe-wan:

/usr/sbin/tc -p filter show parent ffff: dev pppoe-wan

Currently no ifb is associated with pppoe-wan, this is normal during starting of the sqm system.

/sbin/ip link add name ifb4pppoe-wan type ifb

egress

/usr/sbin/tc qdisc del dev pppoe-wan root

RTNETLINK answers: No such file or directory

STAB: stab mtu 2047 tsize 512 mpu 0 overhead 44 linklayer atm

/usr/sbin/tc qdisc add dev pppoe-wan root stab mtu 2047 tsize 512 mpu 0 overhead 44 linklayer atm cake bandwidth 80kbit besteffort

egress shaping activated

/sbin/ip link add name TMP_IFB_4_SQM type ifb

/usr/sbin/tc qdisc replace dev TMP_IFB_4_SQM ingress

QDISC ingress is useable.

/sbin/ip link set dev TMP_IFB_4_SQM down

/sbin/ip link delete TMP_IFB_4_SQM type ifb

ingress

/usr/sbin/tc qdisc del dev pppoe-wan handle ffff: ingress

RTNETLINK answers: Invalid argument

/usr/sbin/tc qdisc add dev pppoe-wan handle ffff: ingress

/usr/sbin/tc qdisc del dev ifb4pppoe-wan root

RTNETLINK answers: No such file or directory

STAB: stab mtu 2047 tsize 512 mpu 0 overhead 44 linklayer atm

/usr/sbin/tc qdisc add dev ifb4pppoe-wan root stab mtu 2047 tsize 512 mpu 0 overhead 44 linklayer atm cake bandwidth 1880kbit besteffort wash

/sbin/ip link set dev ifb4pppoe-wan up

/usr/sbin/tc filter add dev pppoe-wan parent ffff: protocol all prio 10 u32 match u32 0 0 flowid 1:1 action mirred egress redirect dev ifb4pppoe-wan

ingress shaping activated

piece_of_cake.qos was started on pppoe-wan successfully

root@gw:~# logread | grep SQM

Wed Apr 11 15:09:55 2018 user.notice SQM: Starting SQM script: piece_of_cake.qos on pppoe-wan, in: 1880 Kbps, out: 80 Kbps

Wed Apr 11 15:09:55 2018 user.notice SQM: piece_of_cake.qos was started on pppoe-wan successfully

Wed Apr 11 15:10:38 2018 user.notice SQM: Stopping SQM on pppoe-wan

Wed Apr 11 15:10:38 2018 user.notice SQM: Starting SQM script: piece_of_cake.qos on pppoe-wan, in: 1880 Kbps, out: 80 Kbps

Wed Apr 11 15:10:38 2018 user.notice SQM: piece_of_cake.qos was started on pppoe-wan successfully

Wed Apr 11 15:13:19 2018 user.notice SQM: Stopping SQM on pppoe-wan

Wed Apr 11 15:13:19 2018 user.notice SQM: ifb associated with interface pppoe-wan:

Wed Apr 11 15:13:19 2018 user.notice SQM: Currently no ifb is associated with pppoe-wan, this is normal during starting of the sqm system.

Wed Apr 11 15:13:19 2018 user.notice SQM: /usr/lib/sqm/stop-sqm: ifb4pppoe-wan shaper deleted

Wed Apr 11 15:13:19 2018 user.notice SQM: /usr/lib/sqm/stop-sqm: ifb4pppoe-wan interface deleted

Wed Apr 11 15:13:19 2018 user.notice SQM: Starting SQM script: piece_of_cake.qos on pppoe-wan, in: 1880 Kbps, out: 80 Kbps

Wed Apr 11 15:13:19 2018 user.notice SQM: QDISC cake is useable.

Wed Apr 11 15:13:19 2018 user.notice SQM: Starting piece_of_cake.qos

Wed Apr 11 15:13:19 2018 user.notice SQM: ifb associated with interface pppoe-wan:

Wed Apr 11 15:13:19 2018 user.notice SQM: Currently no ifb is associated with pppoe-wan, this is normal during starting of the sqm system.

Wed Apr 11 15:13:19 2018 user.notice SQM: egress

Wed Apr 11 15:13:19 2018 user.notice SQM: STAB: stab mtu 2047 tsize 512 mpu 0 overhead 44 linklayer atm

Wed Apr 11 15:13:19 2018 user.notice SQM: egress shaping activated

Wed Apr 11 15:13:19 2018 user.notice SQM: QDISC ingress is useable.

Wed Apr 11 15:13:19 2018 user.notice SQM: ingress

Wed Apr 11 15:13:19 2018 user.notice SQM: STAB: stab mtu 2047 tsize 512 mpu 0 overhead 44 linklayer atm

Wed Apr 11 15:13:19 2018 user.notice SQM: ingress shaping activated

Wed Apr 11 15:13:19 2018 user.notice SQM: piece_of_cake.qos was started on pppoe-wan successfully

root@gw:~# tc -d qdisc

qdisc noqueue 0: dev lo root refcnt 2

qdisc mq 0: dev eth0 root

qdisc fq_codel 0: dev eth0 parent :1 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

qdisc fq_codel 0: dev eth0 parent :2 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

qdisc fq_codel 0: dev eth0 parent :3 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

qdisc fq_codel 0: dev eth0 parent :4 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

qdisc fq_codel 0: dev eth0 parent :5 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

qdisc fq_codel 0: dev eth0 parent :6 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

qdisc fq_codel 0: dev eth0 parent :7 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

qdisc fq_codel 0: dev eth0 parent :8 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

qdisc mq 0: dev eth1 root

qdisc fq_codel 0: dev eth1 parent :1 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

qdisc fq_codel 0: dev eth1 parent :2 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

qdisc fq_codel 0: dev eth1 parent :3 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

qdisc fq_codel 0: dev eth1 parent :4 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

qdisc fq_codel 0: dev eth1 parent :5 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

qdisc fq_codel 0: dev eth1 parent :6 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

qdisc fq_codel 0: dev eth1 parent :7 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

qdisc fq_codel 0: dev eth1 parent :8 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

qdisc noqueue 0: dev br-lan root refcnt 2

qdisc cake 800a: dev pppoe-wan root refcnt 2 bandwidth 80Kbit besteffort triple-isolate rtt 100.0ms raw total_overhead 22 hard_header_len 22

linklayer atm overhead 44 mtu 2047 tsize 512

qdisc ingress ffff: dev pppoe-wan parent ffff:fff1 ----------------

qdisc cake 800b: dev ifb4pppoe-wan root refcnt 2 bandwidth 1880Kbit besteffort triple-isolate wash rtt 100.0ms raw total_overhead 14 hard_header_len 14

linklayer atm overhead 44 mtu 2047 tsize 512

root@gw:~# tc -s qdisc

qdisc noqueue 0: dev lo root refcnt 2

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

qdisc mq 0: dev eth0 root

Sent 253845 bytes 685 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

qdisc fq_codel 0: dev eth0 parent :1 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 253845 bytes 685 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :2 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :3 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :4 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :5 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :6 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :7 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :8 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc mq 0: dev eth1 root

Sent 110618 bytes 965 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

qdisc fq_codel 0: dev eth1 parent :1 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 110618 bytes 965 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth1 parent :2 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth1 parent :3 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth1 parent :4 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth1 parent :5 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth1 parent :6 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth1 parent :7 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth1 parent :8 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc noqueue 0: dev br-lan root refcnt 2

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

qdisc cake 800a: dev pppoe-wan root refcnt 2 bandwidth 80Kbit besteffort triple-isolate rtt 100.0ms raw total_overhead 22 hard_header_len 22

Sent 5353 bytes 23 pkt (dropped 0, overlimits 3 requeues 0)

backlog 0b 0p requeues 0

memory used: 1984b of 4Mb

capacity estimate: 80Kbit

Tin 0

thresh 80Kbit

target 227.1ms

interval 454.2ms

pk_delay 19.2ms

av_delay 339us

sp_delay 108us

pkts 23

bytes 5353

way_inds 0

way_miss 21

way_cols 0

drops 0

marks 0

sp_flows 6

bk_flows 1

un_flows 0

max_len 1484

qdisc ingress ffff: dev pppoe-wan parent ffff:fff1 ----------------

Sent 2576 bytes 23 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

qdisc cake 800b: dev ifb4pppoe-wan root refcnt 2 bandwidth 1880Kbit besteffort triple-isolate wash rtt 100.0ms raw total_overhead 14 hard_header_len 14

Sent 4505 bytes 23 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

memory used: 1984b of 4Mb

capacity estimate: 1880Kbit

Tin 0

thresh 1880Kbit

target 9.7ms

interval 104.7ms

pk_delay 3us

av_delay 0us

sp_delay 0us

pkts 23

bytes 4505

way_inds 0

way_miss 21

way_cols 0

drops 0

marks 0

sp_flows 12

bk_flows 1

un_flows 0

max_len 901
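The target and interval values cake reports above look odd next to the 5 ms / 100 ms that fq_codel shows. They are consistent with the following Python sketch, which assumes cake raises the target to 1.5 times the serialization time of a 1514-byte packet at the shaped rate (never below 5 ms) and then stretches the interval to match. This is only my reading of the numbers, not a statement about how cake is implemented; the 1514-byte packet size and the function name are assumptions.

```python
DEFAULT_TARGET_MS = 5.0       # assumed default target
DEFAULT_INTERVAL_MS = 100.0   # matches the "rtt 100.0ms" shown by tc -d qdisc
ASSUMED_PACKET_BYTES = 1514   # assumption: full-size Ethernet frame


def cake_target_interval_ms(rate_kbps):
    """Estimate the (target, interval) pair in ms for a given shaper rate."""
    # Time to serialize one assumed full-size packet at the shaped rate.
    packet_ms = ASSUMED_PACKET_BYTES * 8 / rate_kbps
    target = max(1.5 * packet_ms, DEFAULT_TARGET_MS)
    interval = max(DEFAULT_INTERVAL_MS + target - DEFAULT_TARGET_MS, 2 * target)
    return target, interval


if __name__ == "__main__":
    for rate in (80, 1880):  # upload and download rates from /etc/config/sqm
        target, interval = cake_target_interval_ms(rate)
        print(f"{rate} kbps: target {target:.1f} ms, interval {interval:.1f} ms")
```

For 80 kbps this gives roughly 227.1 ms and 454.2 ms, and for 1880 kbps roughly 9.7 ms and 104.7 ms, which agrees with the output above.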

[At this point I ran tests at http://www.dslreports.com/speedtest from a browser]

root@gw:~# tc -s qdisc

qdisc noqueue 0: dev lo root refcnt 2

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

qdisc mq 0: dev eth0 root

Sent 5721965 bytes 7294 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

qdisc fq_codel 0: dev eth0 parent :1 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 5721965 bytes 7294 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :2 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :3 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :4 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :5 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :6 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :7 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :8 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc mq 0: dev eth1 root

Sent 1982718 bytes 6335 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

qdisc fq_codel 0: dev eth1 parent :1 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 1982718 bytes 6335 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth1 parent :2 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth1 parent :3 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth1 parent :4 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth1 parent :5 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth1 parent :6 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth1 parent :7 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth1 parent :8 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms ecn

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc noqueue 0: dev br-lan root refcnt 2

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

qdisc cake 800a: dev pppoe-wan root refcnt 2 bandwidth 80Kbit besteffort triple-isolate rtt 100.0ms raw total_overhead 22 hard_header_len 22

Sent 2227057 bytes 4806 pkt (dropped 1856, overlimits 11117 requeues 0)

backlog 12455b 25p requeues 0

memory used: 411456b of 4Mb

capacity estimate: 80Kbit

Tin 0

thresh 80Kbit

target 227.1ms

interval 454.2ms

pk_delay 25.7s

av_delay 12.9s

sp_delay 64.9ms

pkts 6687

bytes 3166878

way_inds 95

way_miss 483

way_cols 0

drops 1856

marks 870

sp_flows 13

bk_flows 6

un_flows 0

max_len 6466

qdisc ingress ffff: dev pppoe-wan parent ffff:fff1 ----------------

Sent 5432952 bytes 6530 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

qdisc cake 800b: dev ifb4pppoe-wan root refcnt 2 bandwidth 1880Kbit besteffort triple-isolate wash rtt 100.0ms raw total_overhead 14 hard_header_len 14

Sent 6291789 bytes 6476 pkt (dropped 54, overlimits 5565 requeues 0)

backlog 0b 0p requeues 0

memory used: 42240b of 4Mb

capacity estimate: 1880Kbit

Tin 0

thresh 1880Kbit

target 9.7ms

interval 104.7ms

pk_delay 30.9ms

av_delay 3.3ms

sp_delay 36us

pkts 6530

bytes 6381359

way_inds 11

way_miss 491

way_cols 0

drops 54

marks 238

sp_flows 1

bk_flows 1

un_flows 0

max_len 1696
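To put the post-test numbers in perspective, here is a small Python sketch that converts the delay strings printed by tc into seconds and computes the share of packets dropped. The helper names are hypothetical, and the input values are copied by hand from the egress (pppoe-wan) cake statistics above.

```python
def parse_delay_seconds(text):
    """Convert a tc delay string such as '339us', '64.9ms' or '25.7s' to seconds."""
    # Check the longer suffixes first, since 'us' and 'ms' also end in 's'.
    for suffix, scale in (("us", 1e-6), ("ms", 1e-3), ("s", 1.0)):
        if text.endswith(suffix):
            return float(text[: -len(suffix)]) * scale
    raise ValueError(f"unrecognised delay: {text!r}")


def drop_fraction(drops, pkts):
    """Fraction of packets dropped, or 0.0 when no packets were seen."""
    return drops / pkts if pkts else 0.0


if __name__ == "__main__":
    # Values copied from the pppoe-wan cake qdisc after the speed test.
    target = parse_delay_seconds("227.1ms")
    peak = parse_delay_seconds("25.7s")
    print(f"peak delay is {peak / target:.0f}x the target")
    print(f"dropped {drop_fraction(1856, 6687):.1%} of packets")
```

On the upload side that works out to a peak delay more than a hundred times the target, with over a quarter of the packets dropped.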