Is there a way to speed up a PPPoE connection? I'm finding it takes quite a bit of CPU overhead compared to not using PPPoE. My router can reach 1 Gbit/s NATed speeds on IPoE in both directions. However, when testing my router on my PPPoE 500/500 Mbit/s fibre connection, the upload speed only reaches ~410 Mbit/s. Not too shabby of course, but I would prefer the full 500 Mbit/s.

I found something myself, which is described as a high performance multithreaded PPPoE implementation:

Would it be possible to get this to work on LEDE? Or is the default package that is used for PPPoE too tightly integrated into LEDE? At the moment I suspect the bottleneck is that PPPoE doesn't seem to be multithreaded. I think this because the connection is clearly bottlenecked, yet not all 4 threads of the CPU are fully loaded.

I think it is already using kernel mode. Try it with fastpath; there is a PR already open.

I'm in the same boat with a WRT3200ACM: only ~750 Mbit/s instead of 900+ on PPPoE.

Will fastpath also speed up PPPoE encapsulation / decapsulation? I thought it only improved NAT performance?

On a 1043ND v1 with PPPoE I get ~190 Mbit/s without fastpath and ~350 Mbit/s with fastpath.

So yes, NAT is the problem. I think PPPoE in LEDE is optimized as much as it can be.

The big difference is that your router is a single core. Bringing down the NAT CPU load should leave more for PPPoE. However, my router has 4 threads and is at around 20-25% CPU idle while bottlenecking my upload speeds. So either PPPoE isn't properly multithreaded, or the bottleneck lies somewhere else.

It was just an example of how fastpath can scale. I'm using a WRT3200ACM as my main router, and it can't do gigabit on PPPoE. Neither core of the dual-core CPU is used at 100% (60% max), and htop shows kernel load, not userspace load. Its speed is almost double yours, though.

Edit: maybe I'll test fastpath on it just to be sure.

If you do, please let me know the results! Very curious about those!

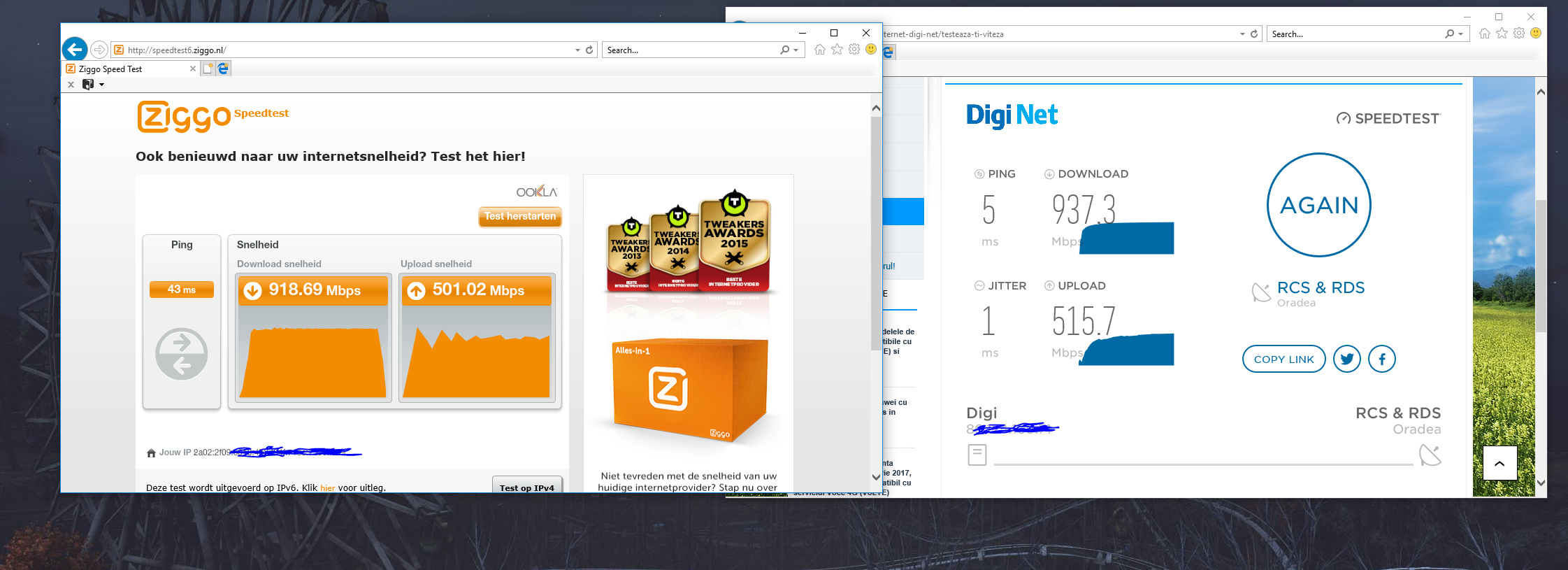

ok so with fastpath on pppoe

Very nice speeds! What internet connection speeds are you using? Fastpath does seem to allow you to max out your download speeds now.

Best effort 1 Gbit/s down, 500 Mbit/s up, ~$10/month.

Your router should be plenty fast to max out that 500 Mbit/s, right? It's weird you are only getting around 450 Mbit/s. It's similar to my DIR-860L without fastpath.

It's 450 out of 500, and the test is not accurate. For download it's 900 out of 1000, so it's OK with fastpath, but only ~750 without it.

WRT1900ACS v2 on PPPoE, with the "fast classifier" for accelerating IPv4 / IPv6 enabled by default!

Can you try a test with this?

echo reno > /proc/sys/net/ipv4/tcp_congestion_control

Wow, 1 Gbit/s down and 0.5 Gbit/s up must be nice!

Is SQM QoS still usable with fastpath?

I was thinking: Is there a way to find out whether PPPoE is using encryption and/or compression by any chance? That could explain the rather large CPU overhead I am seeing with PPPoE enabled. I've supplied pppd with the debug option and this is what I am seeing in my logs. Is there anything that tells me encryption/compression is being used?:
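One rough way to answer this from a pppd debug log is to scan the `sent [...]` / `rcvd [...]` negotiation lines for CCP or ECP packets and for compression-related options. The sketch below is an assumption-laden helper, not part of pppd: the function name `scan_pppd_log` is made up for illustration, and the option names it looks for (`deflate`, `bsd`, `mppe`, `compress`, `pcomp`, `accomp`) are the tokens pppd's debug output is assumed to print for those features.

```python
import re

# Matches pppd debug lines such as: sent [LCP ConfReq id=0x1 <mru 1492>]
PACKET_RE = re.compile(r"(sent|rcvd) \[(\w+) (\w+) id=0x[0-9a-fA-F]+(.*)\]")
OPTION_RE = re.compile(r"<([^<>]*)>")

# Control protocols whose mere presence suggests compression or encryption
# is being negotiated (assumed names as printed by pppd).
PROTOCOL_HINTS = {
    "CCP": "compression control (also carries MPPE)",
    "ECP": "encryption control",
}

# Option keywords of interest (assumed spellings from pppd debug output).
OPTION_HINTS = {
    "deflate": "Deflate payload compression",
    "bsd": "BSD-Compress payload compression",
    "mppe": "MPPE encryption",
    "compress": "VJ TCP/IP header compression",
    "pcomp": "PPP protocol field compression",
    "accomp": "PPP address/control field compression",
}


def scan_pppd_log(lines):
    """Return (direction, protocol, code, hint) tuples for suspicious lines."""
    findings = []
    for line in lines:
        packet = PACKET_RE.search(line)
        if packet is None:
            continue  # not a negotiation packet line
        direction, protocol, code, rest = packet.groups()
        if protocol in PROTOCOL_HINTS:
            findings.append((direction, protocol, code, PROTOCOL_HINTS[protocol]))
        for option in OPTION_RE.findall(rest):
            # Use the leading word of the option, e.g. "deflate(old#) 15" -> "deflate"
            name = re.match(r"[A-Za-z]*", option).group(0).lower()
            if name in OPTION_HINTS:
                findings.append((direction, protocol, code, OPTION_HINTS[name]))
    return findings
```

An empty result only means none of these keywords appeared in the log. A non-empty result still needs a manual look, since an option that is requested and then rejected is not actually in use.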

Fri Feb 23 11:08:58 2018 daemon.info pppd[1015]: Plugin rp-pppoe.so loaded.

Fri Feb 23 11:08:58 2018 daemon.info pppd[1015]: RP-PPPoE plugin version 3.8p compiled against pppd 2.4.7

Fri Feb 23 11:08:58 2018 daemon.notice pppd[1015]: pppd 2.4.7 started by root, uid 0

Fri Feb 23 11:08:58 2018 daemon.debug pppd[1015]: Send PPPOE Discovery V1T1 PADI session 0x0 length 4

Fri Feb 23 11:08:58 2018 daemon.debug pppd[1015]: dst ff:ff:ff:ff:ff:ff src 56:02:c8:64:3f:99

Fri Feb 23 11:08:58 2018 daemon.debug pppd[1015]: [service-name]

Fri Feb 23 11:08:58 2018 daemon.debug pppd[1015]: Recv PPPOE Discovery V1T1 PADO session 0x0 length 27

Fri Feb 23 11:08:58 2018 daemon.debug pppd[1015]: dst 56:02:c8:64:3f:99 src 80:38:bc:0b:cd:2e

Fri Feb 23 11:08:58 2018 daemon.debug pppd[1015]: [service-name] [AC-name 195.190.228.161] [end-of-list]

Fri Feb 23 11:08:58 2018 daemon.debug pppd[1015]: Send PPPOE Discovery V1T1 PADR session 0x0 length 4

Fri Feb 23 11:08:58 2018 daemon.debug pppd[1015]: dst 80:38:bc:0b:cd:2e src 56:02:c8:64:3f:99

Fri Feb 23 11:08:58 2018 daemon.debug pppd[1015]: [service-name]

Fri Feb 23 11:08:58 2018 daemon.debug pppd[1015]: Recv PPPOE Discovery V1T1 PADS session 0x20ef length 8

Fri Feb 23 11:08:58 2018 daemon.debug pppd[1015]: dst 56:02:c8:64:3f:99 src 80:38:bc:0b:cd:2e

Fri Feb 23 11:08:58 2018 daemon.debug pppd[1015]: [service-name] [end-of-list]

Fri Feb 23 11:08:58 2018 daemon.debug pppd[1015]: PADS: Service-Name: ''

Fri Feb 23 11:08:58 2018 daemon.info pppd[1015]: PPP session is 8431

Fri Feb 23 11:08:58 2018 daemon.warn pppd[1015]: Connected to 80:38:bc:0b:cd:2e via interface eth0.6

Fri Feb 23 11:08:58 2018 daemon.debug pppd[1015]: using channel 1

Fri Feb 23 11:08:59 2018 daemon.info pppd[1015]: Using interface pppoe-wan

Fri Feb 23 11:08:59 2018 daemon.notice pppd[1015]: Connect: pppoe-wan <--> eth0.6

Fri Feb 23 11:08:59 2018 daemon.debug pppd[1015]: sent [LCP ConfReq id=0x1 <mru 1492> <magic 0xb4f9c21>]

Fri Feb 23 11:08:59 2018 daemon.debug pppd[1015]: rcvd [LCP ConfReq id=0x2 <mru 1500> <auth pap> <magic 0xc4a12eb0>]

Fri Feb 23 11:08:59 2018 daemon.debug pppd[1015]: sent [LCP ConfAck id=0x2 <mru 1500> <auth pap> <magic 0xc4a12eb0>]

Fri Feb 23 11:08:59 2018 daemon.debug pppd[1015]: rcvd [LCP ConfAck id=0x1 <mru 1492> <magic 0xb4f9c21>]

Fri Feb 23 11:08:59 2018 daemon.debug pppd[1015]: sent [LCP EchoReq id=0x0 magic=0xb4f9c21]

Fri Feb 23 11:08:59 2018 daemon.debug pppd[1015]: sent [PAP AuthReq id=0x1 user=<hidden> password=<hidden>]

Fri Feb 23 11:08:59 2018 daemon.debug pppd[1015]: rcvd [LCP EchoRep id=0x0 magic=0xc4a12eb0]

Fri Feb 23 11:08:59 2018 daemon.debug pppd[1015]: rcvd [PAP AuthAck id=0x1 "Authentication success,Welcome!"]

Fri Feb 23 11:08:59 2018 daemon.info pppd[1015]: Remote message: Authentication success,Welcome!

Fri Feb 23 11:08:59 2018 daemon.notice pppd[1015]: PAP authentication succeeded

Fri Feb 23 11:08:59 2018 daemon.notice pppd[1015]: peer from calling number 80:38:BC:0B:CD:2E authorized

Fri Feb 23 11:08:59 2018 daemon.debug pppd[1015]: sent [IPCP ConfReq id=0x1 <addr 0.0.0.0> <ms-dns1 0.0.0.0> <ms-dns2 0.0.0.0>]

Fri Feb 23 11:08:59 2018 daemon.debug pppd[1015]: sent [IPV6CP ConfReq id=0x1 <addr fe80::348c:7a28:7c2e:58ea>]

Fri Feb 23 11:08:59 2018 daemon.debug pppd[1015]: rcvd [IPCP ConfReq id=0x1 <addr 195.190.228.161>]

Fri Feb 23 11:08:59 2018 daemon.debug pppd[1015]: sent [IPCP ConfAck id=0x1 <addr 195.190.228.161>]

Fri Feb 23 11:08:59 2018 daemon.debug pppd[1015]: rcvd [IPV6CP ConfReq id=0x1 <addr fe80::8238:bcff:fe0b:cd2e>]

Fri Feb 23 11:08:59 2018 daemon.debug pppd[1015]: sent [IPV6CP ConfAck id=0x1 <addr fe80::8238:bcff:fe0b:cd2e>]

Fri Feb 23 11:08:59 2018 daemon.debug pppd[1015]: rcvd [IPCP ConfNak id=0x1 <addr 86.88.184.57> <ms-dns1 195.121.1.34> <ms-dns2 195.121.1.66>]

Fri Feb 23 11:08:59 2018 daemon.debug pppd[1015]: sent [IPCP ConfReq id=0x2 <addr 86.88.184.57> <ms-dns1 195.121.1.34> <ms-dns2 195.121.1.66>]

Fri Feb 23 11:08:59 2018 daemon.debug pppd[1015]: rcvd [IPV6CP ConfAck id=0x1 <addr fe80::348c:7a28:7c2e:58ea>]

Fri Feb 23 11:08:59 2018 daemon.notice pppd[1015]: local LL address fe80::348c:7a28:7c2e:58ea

Fri Feb 23 11:08:59 2018 daemon.notice pppd[1015]: remote LL address fe80::8238:bcff:fe0b:cd2e

Fri Feb 23 11:08:59 2018 daemon.debug pppd[1015]: Script /lib/netifd/ppp6-up started (pid 1104)

Fri Feb 23 11:08:59 2018 daemon.debug pppd[1015]: rcvd [IPCP ConfAck id=0x2 <addr 86.88.184.57> <ms-dns1 195.121.1.34> <ms-dns2 195.121.1.66>]

Fri Feb 23 11:08:59 2018 daemon.notice pppd[1015]: local IP address 86.88.184.57

Fri Feb 23 11:08:59 2018 daemon.notice pppd[1015]: remote IP address 195.190.228.161

Fri Feb 23 11:08:59 2018 daemon.notice pppd[1015]: primary DNS address 195.121.1.34

Fri Feb 23 11:08:59 2018 daemon.notice pppd[1015]: secondary DNS address 195.121.1.66

Fri Feb 23 11:08:59 2018 daemon.debug pppd[1015]: Script /lib/netifd/ppp-up started (pid 1105)

Fri Feb 23 11:08:59 2018 daemon.debug pppd[1015]: Script /lib/netifd/ppp-up finished (pid 1105), status = 0x1

Fri Feb 23 11:08:59 2018 daemon.debug pppd[1015]: Script /lib/netifd/ppp6-up finished (pid 1104), status = 0x9

Fri Feb 23 11:09:33 2018 daemon.info pppd[1015]: System time change detected.

What is your MTU? If it is 1492, the CPU will need to fragment 1500-byte packets, so it will be a hit on the speed.

You can try baby jumbo frames (see the forum: set an MTU of 1508 on PPPoE and, if the ISP supports it, the link will come up with a 1500 MTU), or hand out an MTU of 1492 to your internal devices via DHCP (this does not work for Windows clients; you have to set it manually). This way you can spare the CPU some packet fragmentation work.

I have tried baby jumbo frames, but they result in identical speeds. That is to be expected, since MSS clamping already makes sure TCP packets do not have to be fragmented. If the throughput is limited by a packets-per-second ceiling, baby jumbo frames would give you at most 8 / 1492 * 100 ≈ 0.54% speed-up.
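The arithmetic behind that estimate can be written out as a small sketch. It assumes the router is limited purely by packets per second, so throughput scales with the size of each IP packet. The function names are made up for illustration, and Ethernet framing overhead is ignored.

```python
PPPOE_OVERHEAD = 8  # bytes: 6-byte PPPoE header + 2-byte PPP protocol field


def mtu_gain_percent(small_mtu=1500 - PPPOE_OVERHEAD, large_mtu=1500):
    """Relative throughput gain from a larger MTU at a fixed packet rate."""
    return (large_mtu - small_mtu) / small_mtu * 100


def packets_per_second(rate_bits_per_s, mtu):
    """Packets per second needed to carry a bit rate in full-size IP packets."""
    return rate_bits_per_s / (mtu * 8)


if __name__ == "__main__":
    print(f"gain from 1492 -> 1500: {mtu_gain_percent():.2f}%")
    print(f"pps at 500 Mbit/s, MTU 1492: {packets_per_second(500e6, 1492):.0f}")
    print(f"pps at 500 Mbit/s, MTU 1500: {packets_per_second(500e6, 1500):.0f}")
```

Either way the difference is roughly half a percent, far too small to explain a gap between ~410 and 500 Mbit/s.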

Why not simply take a packet capture on the Ethernet interface that transports the PPPoE packets? If you can read the packet contents easily (and find expected patterns), this would indicate no compression/encryption. I believe ping can be used to send specific patterns that you can then look for.
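A minimal sketch of that check on a single captured frame, assuming you already have the raw Ethernet frame bytes from a capture tool. The function name `inspect_pppoe_frame` and the returned dictionary layout are made up for illustration. The header layout follows the PPPoE session format (EtherType 0x8864, a 6-byte PPPoE header, then a 2-byte PPP protocol number), with an optional 802.1Q VLAN tag, as would be seen when capturing on the parent of an interface such as eth0.6.

```python
import struct

ETH_P_8021Q = 0x8100          # VLAN-tagged frame
ETH_P_PPPOE_SESSION = 0x8864  # PPPoE session stage

# A few PPP protocol numbers relevant to this check.
PPP_PROTOCOLS = {
    0x0021: "IPv4",
    0x0057: "IPv6",
    0x00FD: "compressed datagram",
    0x0053: "encrypted datagram",
}


def inspect_pppoe_frame(frame: bytes, pattern: bytes):
    """Parse one Ethernet frame; return None if it is not a PPPoE session frame."""
    offset = 12  # skip destination and source MAC addresses
    (ethertype,) = struct.unpack_from("!H", frame, offset)
    offset += 2
    if ethertype == ETH_P_8021Q:
        offset += 2  # skip the VLAN tag control information
        (ethertype,) = struct.unpack_from("!H", frame, offset)
        offset += 2
    if ethertype != ETH_P_PPPOE_SESSION:
        return None
    _ver_type, _code, session_id, length = struct.unpack_from("!BBHH", frame, offset)
    offset += 6
    (ppp_proto,) = struct.unpack_from("!H", frame, offset)
    offset += 2
    # The PPPoE length field counts the 2-byte PPP protocol number as well.
    payload = frame[offset:offset + length - 2]
    return {
        "session_id": session_id,
        "protocol": PPP_PROTOCOLS.get(ppp_proto, hex(ppp_proto)),
        "pattern_found": pattern in payload,
    }
```

If the frames carrying your pings show a plain IPv4 or IPv6 protocol number and the fill pattern is visible in the payload, the link is most likely carrying uncompressed, unencrypted data. With the iputils version of ping, the `-p` option sets the fill pattern; other ping implementations may not support it.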