Hi all,

I was wondering, is there any reason to keep software flow offloading disabled (aside from not being able to use QoS/SQM)? Are there practical implications of any kind when enabling it?

Thanks.

I think you got it reversed. If you enable software flow offloading then you cannot use QoS and SQM. If it is disabled you can use them.

That's because "software flow offloading" means that the traffic is bypassing some of the advanced firewall features used by QoS and SQM to prioritize traffic.

It's meant to provide an "alternative" to the "hardware accelerated NAT" features offered in stock firmware, which usually can't be done in OpenWrt. It's not as good as HW NAT, but it's at least halfway there.

Yeah, I see... so allow me to rephrase: is there any other reason to keep software flow offloading disabled when not using QoS/SQM at all?

And incidentally, any reason why it is disabled by default although there is no QoS/SQM by default (afaict)? Is it because it is still an experimental feature?

Because if it's off by default and someone enables it and their configuration breaks, they can figure out pretty quickly that it's not good for them.

Whereas if you enable it by default, you will get flooded by people complaining and opening bug reports about SQM/QoS not working, or this or that other feature not working, and you can't be sure whether it's a software offloading issue or something else.

Seems reasonable.

Thank you.

@bobafetthotmail and @xorbug as far as I understand software offloading still works with SQM just fine. Only hardware offloading is incompatible.

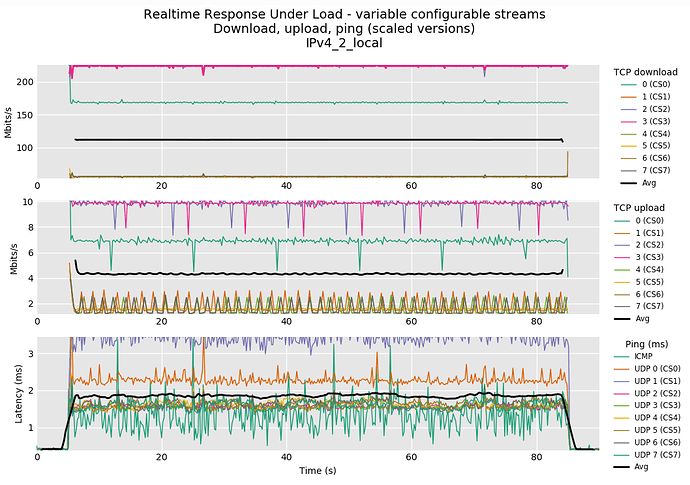

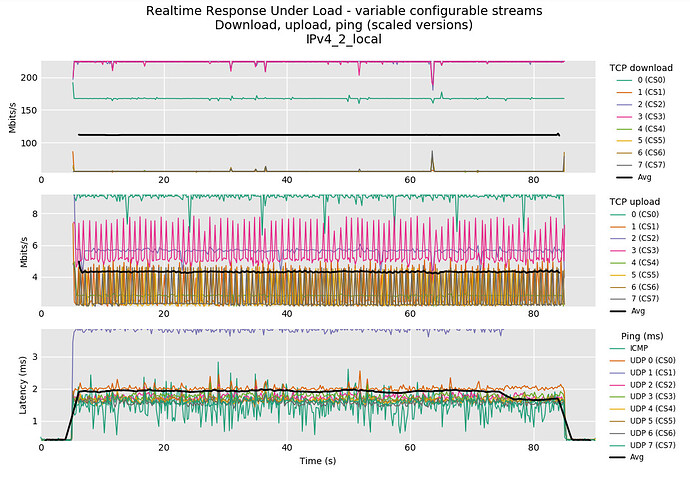

I'm not sure that it does. I was busy running some flent tests when I read your reply, so I enabled SFO for the one test and disabled it for the other. The first test shows SFO enabled and the second disabled.

This was with SQM running I assume? Both have average bandwidth within epsilon of each other, and avg latency under load within epsilon of each other. The details seem different, but I'd expect VERY different graphs if SFO disabled SQM.

Yes, this is SQM running with a diffserv8 profile on a gigabit link. Two identical tests, run one after another. Some of the apparent difference is due to the scaling, but if you look at the two upload graphs, note how CS0 and CS2/CS3 are inverted between the two runs.

It definitely doesn't disable SQM, but it seems to change the behaviour appreciably, right?

Yeah, I'm not sure what's up there. But if you turn on hardware offloading, the qdiscs aren't involved at all, and so SQM does nothing. If you turn on software offloading, it may change exactly how cake handles things, but it also might be specific to diffserv8, which I think is an unusual config.

In general, software offloading still uses the qdiscs; it mainly just bypasses the details of iptables. Basically, the initial packets in a flow go through iptables, and then the remaining ones go through the "fast path", but they still hit the qdiscs.
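To make the description above concrete, here is a toy model of it in Python. It is only a sketch of the idea, not how the kernel implements offloading: the `ToyRouter` class, its counters, and the flow label are all made up for illustration, and it assumes a flow is offloaded right after its first packet (in reality it takes a few packets, until the connection is established).

```python
from dataclasses import dataclass, field


@dataclass
class ToyRouter:
    """Hypothetical toy model of software flow offloading (not kernel code)."""

    offload_enabled: bool = True
    offloaded_flows: set = field(default_factory=set)
    firewall_hits: int = 0  # packets that walked the full firewall rule set
    qdisc_hits: int = 0     # packets handed to the egress qdisc (where SQM lives)

    def forward(self, flow_id: str) -> str:
        """Forward one packet and return which path it took."""
        if self.offload_enabled and flow_id in self.offloaded_flows:
            path = "fast"  # skips the firewall rules
        else:
            path = "slow"
            self.firewall_hits += 1
            if self.offload_enabled:
                # Simplifying assumption: offload right after the first packet.
                self.offloaded_flows.add(flow_id)
        # Both paths end in the transmit path, so both reach the qdisc.
        self.qdisc_hits += 1
        return path


def simulate(packets: int, offload_enabled: bool) -> ToyRouter:
    """Push `packets` packets of a single flow through a fresh toy router."""
    router = ToyRouter(offload_enabled=offload_enabled)
    for _ in range(packets):
        router.forward("lan->wan:tcp/443")  # made-up flow label
    return router


if __name__ == "__main__":
    for enabled in (True, False):
        r = simulate(10, enabled)
        print(f"offload={enabled}: firewall={r.firewall_hits} qdisc={r.qdisc_hits}")
```

In this model the qdisc sees every packet whether offloading is on or off; only the number of packets that walk the firewall rules changes. That matches the claim that SQM keeps working with software offloading, but it says nothing about why the diffserv tiers would swap places.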

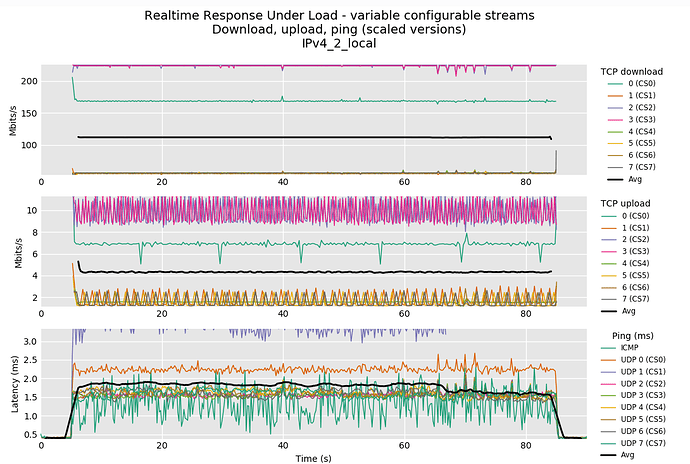

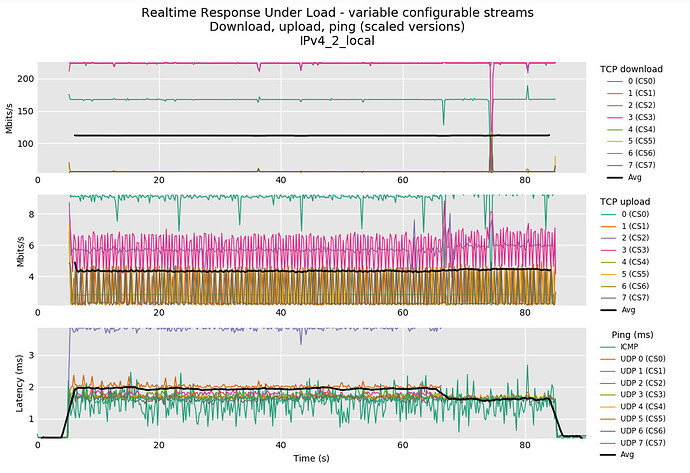

Let me do two runs with a diffserv4 profile and see what it looks like...

I agree it's weird how different tiers seem to priority-invert. I wonder what @moeller0 thinks is going on?

Ah good, I wasn't able to get that by reading around about SFO... also the "Experimental feature. Not fully compatible with QoS/SQM." warning in the LuCI firewall tab appears above the SFO checkbox (when HFO checkbox is not even visible) so maybe I got confused a bit by that...

@dl12345 thanks for taking the effort of sharing these benchmarks... very informative indeed.

Speaking as a layman about combined SFO+SQM operation, and about what these graphs mean (I have never seen them before, but I imagine they are measurements for different classes of traffic): could it be the other way round at this point?

I mean, since enabling or disabling SFO has so little impact (as if it's not operating at all, except for the priority inversion and the different shapes), maybe it's SQM that "disables" SFO no matter what the settings are and what the CLI/GUI shows, and not the opposite? I.e. are you operating without SFO all the time? Is this possible? Or are those inverted priorities and different shapes clear evidence of SFO?

(Sorry in case this looks cocky. I see you know lots more than me on the subject; I don't mean to say you are not aware of what settings you are running or that you cannot notice the differences by yourself... just asking to learn something.)

This is a good thought but I don't think so. @moeller0 is there anything in the SQM scripts that disables software flow offload?

Reading further on the internet, it's clear that software offloads still send packets via neigh_xmit which I believe still sends things through the qdisc so SQM should work. The priority inversion is weird though.

No idea, but SQM does nothing actively to foil software offloading...

For flows in the outbound direction, I'm curious whether the SQM layer comes before or after the software flow offloading layer.

Software offloading sends packets directly to the xmit path, and that means into the qdiscs, so SQM works in both directions afaik.

And in the end, which one was the expected/good result? The one with SFO on or the one with SFO off?

OK, I have done a couple of tests myself with SFO and SQM (first time setting up SQM for me).

Well, SQM is clearly working, and the difference in latency is like night and day. Additionally, I see a FLOWOFFLOAD target in iptables, and it is being hit, since its counter increases. I'd say they are both working fine together, then.
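For anyone who wants to repeat the counter check described above without eyeballing it, here is a minimal Python sketch. It assumes you have saved the text of `iptables -vnxL` twice (the `-x` flag prints exact counters instead of abbreviated ones like `12K`) and compares the packet counts of rules that jump to `FLOWOFFLOAD`. The helper names and the two sample listings are made up for illustration; the chain name and numbers are not taken from a real router.

```python
def target_packet_count(listing: str, target: str = "FLOWOFFLOAD") -> int:
    """Sum the packet counters of all rules in `listing` that jump to `target`.

    `listing` is assumed to be the output of `iptables -vnxL`, where each
    rule line starts with the columns: pkts, bytes, target.
    """
    total = 0
    for line in listing.splitlines():
        fields = line.split()
        # Chain headers and column headers do not start with a number.
        if len(fields) >= 3 and fields[0].isdigit() and fields[2] == target:
            total += int(fields[0])
    return total


def counter_increased(before: str, after: str, target: str = "FLOWOFFLOAD") -> bool:
    """True if the target's packet counter grew between the two listings."""
    return target_packet_count(after, target) > target_packet_count(before, target)


# Hypothetical sample listings, invented for this example.
SAMPLE_BEFORE = """\
Chain FORWARD (policy DROP 0 packets, 0 bytes)
    pkts      bytes target     prot opt in     out     source               destination
     120    96000 FLOWOFFLOAD  all  --  *      *       0.0.0.0/0            0.0.0.0/0            ctstate RELATED,ESTABLISHED
"""

SAMPLE_AFTER = SAMPLE_BEFORE.replace("     120    96000", "     450   360000")

if __name__ == "__main__":
    print(counter_increased(SAMPLE_BEFORE, SAMPLE_AFTER))
```

A growing counter only shows that the rule is being matched while traffic flows; whether SQM is shaping at the same time still has to be judged from the latency under load, as in the tests above.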