Finally, I found time to conduct some more extensive testing on this.

The test script for this scenario was just a loop transmitting a constant purple color to a 4-channel device at address 1:

```python
from dmx import DMXInterface

# Open an interface
with DMXInterface("FT232R") as interface:
    interface.set_frame([255, 255, 0, 255])  # Chinese 4-channel PAR: brightness, R, G, B
    while True:
        interface.send_update()
```

First, the original version of the ft232r.py driver from the PyDMX project on my Ubuntu machine:

For reference, the code that produces it:

```python
# Break
self._set_break_on()
wait_ms(10)

# Mark after break
self._set_break_off()
wait_us(8)

# Frame body: start code 0x00 followed by the channel data
Device.write(self, b"\x00" + byte_data)

# Idle
wait_ms(15)
```

Curiously, the first thing to notice is that wait_ms(15) only produces an idle period of less than 13 ms here. This may be due to the FTDI data transmission being buffered: if Device.write() only blocks until the last byte has been written into the buffer, the 15 ms timer starts prematurely while the FTDI chip is still busy transmitting the remaining buffered data.
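To put the buffering hypothesis into numbers: with the standard DMX512 line format (250 kbit/s, 1 start bit, 8 data bits, 2 stop bits), every byte takes 44 µs on the wire, so a full universe plus start code occupies about 22.6 ms. The sketch below computes that and, assuming the idle shortfall is caused entirely by bytes still queued when `Device.write()` returns, estimates how many bytes that would be. The helper names are hypothetical and not part of PyDMX.

```python
# DMX512 line format: 250 kbit/s -> 4 µs per bit,
# 1 start bit + 8 data bits + 2 stop bits = 11 bits per slot.
BIT_TIME_US = 4
BITS_PER_SLOT = 11
SLOT_TIME_US = BIT_TIME_US * BITS_PER_SLOT  # 44 µs per byte on the wire


def body_duration_us(num_channels: int) -> int:
    """Wire time of the frame body: start code (0x00) plus num_channels slots."""
    return (1 + num_channels) * SLOT_TIME_US


def bytes_still_buffered(requested_idle_us: float, observed_idle_us: float) -> float:
    """Hypothetical estimate: assumes the whole idle shortfall consists of
    bytes that were still queued when the write call returned."""
    shortfall_us = max(0.0, requested_idle_us - observed_idle_us)
    return shortfall_us / SLOT_TIME_US


if __name__ == "__main__":
    print(f"full universe body: {body_duration_us(512) / 1000:.3f} ms")
    # Assumed values: 15 ms requested, roughly 13 ms observed
    print(f"~{bytes_still_buffered(15_000, 13_000):.0f} bytes still in flight")
```

With 15 ms requested and roughly 13 ms observed, the shortfall corresponds to about 45 bytes. Since the observed idle was only "less than 13 ms", this is an order-of-magnitude estimate rather than a measurement of the chip's buffer.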

Besides, I cannot find anywhere in the DMX specifications where these 15 ms come from. The only value I could find for MBB (mark before break) is 8 µs. Maybe that did not work for the original author of the code, since any sleep below 2 ms would be useless due to the FTDI buffering; maybe this was the reason for choosing such an arbitrarily high value.


The break duration is around 12 ms in most of the transmissions, resulting from wait_ms(10). Again, I don't see why such a long duration was needed in the first place.

The mark after break is somewhere between 200 and 300 µs. It results from the call to wait_us(8), but the FTDI chip may add further delay before it actually pulls the line low for the first transmitted byte.
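A rough frame budget shows what these timings cost in refresh rate. This sketch adds up break, MAB, frame body and idle time, once with approximately the values measured above and once with the commonly cited DMX512 transmitter minimums of 92 µs break and 12 µs MAB (assumed here; check them against ANSI E1.11 before relying on them). `frame_period_us` and `refresh_rate_hz` are illustrative helpers, not driver code.

```python
SLOT_TIME_US = 44  # 11 bits per slot at 250 kbit/s (4 µs per bit)


def frame_period_us(break_us, mab_us, num_channels, idle_us):
    """Break + mark after break + start code and channel slots + idle."""
    return break_us + mab_us + (1 + num_channels) * SLOT_TIME_US + idle_us


def refresh_rate_hz(period_us):
    """Frames per second for a given frame period in microseconds."""
    return 1_000_000 / period_us


# Approximate values from the Ubuntu measurements above
measured = frame_period_us(break_us=12_000, mab_us=250, num_channels=512, idle_us=13_000)
# Assumed minimums (92 µs break, 12 µs MAB), no extra idle time
minimal = frame_period_us(break_us=92, mab_us=12, num_channels=512, idle_us=0)

for label, period in (("measured", measured), ("minimal", minimal)):
    print(f"{label:8s} {period / 1000:6.1f} ms  {refresh_rate_hz(period):5.1f} Hz")
```

This gives about 47.8 ms per frame (roughly 21 Hz) for the measured timings versus about 22.7 ms (roughly 44 Hz) with minimal timings, so the long break and idle periods roughly halve the achievable refresh rate.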

Also, a full universe is always transmitted here, which could be optimized for setups with only a few fixtures.
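A minimal sketch of that optimization: send the start code followed only by the channels actually in use. DMX512 permits packets with fewer than 512 slots, but this assumes the connected fixtures accept short packets. `build_packet` and `wire_time_us` are hypothetical helpers, not PyDMX API.

```python
SLOT_TIME_US = 44  # 11 bits per slot at 250 kbit/s


def build_packet(channels):
    """Hypothetical helper: DMX start code 0x00 followed only by the channels
    actually in use, instead of padding the data to a full 512-slot universe."""
    if not 1 <= len(channels) <= 512:
        raise ValueError("a DMX packet carries 1 to 512 channel slots")
    return b"\x00" + bytes(channels)  # bytes() rejects values outside 0-255


def wire_time_us(packet):
    """Time the packet body occupies on the line (start code included)."""
    return len(packet) * SLOT_TIME_US


packet = build_packet([255, 255, 0, 255])  # the 4-channel PAR from the test script
print(packet.hex(), wire_time_us(packet), "µs")
```

For the 4-channel PAR from the test script, the packet is 5 bytes, or 220 µs on the wire, compared with 22,572 µs for a full universe.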

Now for the boring part: none of these timings change much depending on whether

- the original libc `nanosleep`,
- the libc `usleep`, or
- Python's `time.sleep(microseconds / 1000000)`

is used.
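To reproduce the comparison of sleep variants without a logic analyzer, a small sketch can time `time.sleep()` and libc `usleep()` (called through `ctypes`) with `time.perf_counter_ns()`. This only measures host-side sleep accuracy, not the DMX line timing, which the FTDI buffering dominates anyway. Locating libc via `ctypes.util.find_library` is an assumption and may fail on some systems (e.g. musl-based ones); in that case only `time.sleep` is measured.

```python
import ctypes
import ctypes.util
import time


def measure_sleep_us(sleep_fn, requested_us, repeats=20):
    """Return the mean wall-clock duration in µs of sleep_fn(requested_us)."""
    total_ns = 0
    for _ in range(repeats):
        start = time.perf_counter_ns()
        sleep_fn(requested_us)
        total_ns += time.perf_counter_ns() - start
    return total_ns / repeats / 1000


def py_sleep(us):
    """Python's native sleep, taking microseconds like usleep()."""
    time.sleep(us / 1_000_000)


def make_libc_usleep():
    """Wrap libc usleep() via ctypes; return None if it cannot be found."""
    name = ctypes.util.find_library("c")
    if name is None:
        return None
    try:
        libc = ctypes.CDLL(name)
    except OSError:
        return None
    if not hasattr(libc, "usleep"):
        return None
    libc.usleep.argtypes = [ctypes.c_uint]
    return lambda us: libc.usleep(int(us))


if __name__ == "__main__":
    variants = {"time.sleep": py_sleep}
    usleep = make_libc_usleep()
    if usleep is not None:
        variants["libc usleep"] = usleep
    for label, fn in variants.items():
        for requested in (8, 10_000, 15_000):
            actual = measure_sleep_us(fn, requested)
            print(f"{label:12s} {requested:>6} µs requested -> {actual:9.1f} µs")
```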

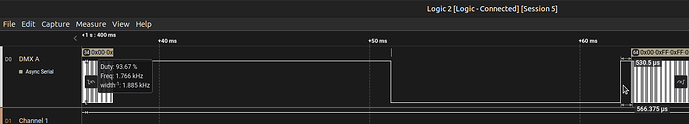

On OpenWrt, the main difference from Ubuntu (for all three variants) is the increased MAB time of roughly 500 µs, regardless of whether musl's usleep or Python's time.sleep() is used:

In conclusion, the driver could simply be switched to Python's native sleep function, which would also eliminate the need to distinguish between Windows and Linux in the code (though I haven't tested on Windows).
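If the driver were switched to Python's native sleep, the `wait_ms()`/`wait_us()` helpers used in the driver excerpt could reduce to something like the following. This is a minimal sketch assuming the helpers only need to sleep *at least* the requested time; it is not the current PyDMX code, and it is equally untested on Windows.

```python
import time


def wait_us(us: float) -> None:
    """Sleep for at least `us` microseconds using only the standard library,
    so no libc/ctypes access and no Windows/Linux branch is needed."""
    time.sleep(us / 1_000_000)


def wait_ms(ms: float) -> None:
    """Sleep for at least `ms` milliseconds."""
    time.sleep(ms / 1_000)
```

Since the FTDI buffering already swamps sub-millisecond accuracy, there seems to be little to gain from a more precise (e.g. busy-waiting) implementation here.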

Now for the reality part: it did not actually work that well. When started via SSH, the actual application under test would run fine for any amount of time (it visualizes the number of Wi-Fi probe requests in the air by simply changing the value of the blue channel). However, when started via the init script, it would hang after 5-10 minutes with a DMX blackout, but that is for another night to debug.

If anyone is interested though: https://github.com/s-2/pax2dmx/

Maybe switching to Python's native sleep helps here, though I suspect the issue is related to the Wi-Fi capturing instead.
