Just wanted to briefly say to everyone attempting to solve this issue: it is appreciated. I'm unfortunately not in a position to play around with or test my R7800 (in fact, I'm on stock firmware due to the ping issues and other issues with the netlink bandwidth monitor).

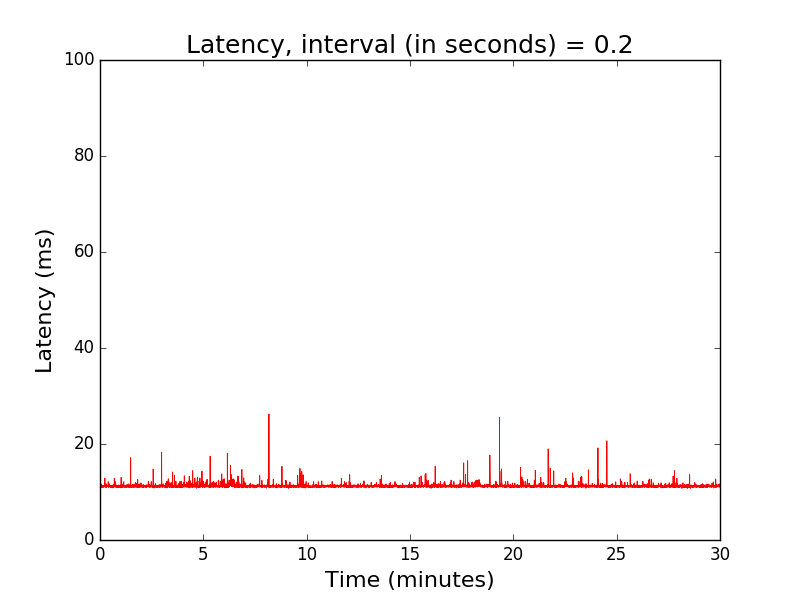

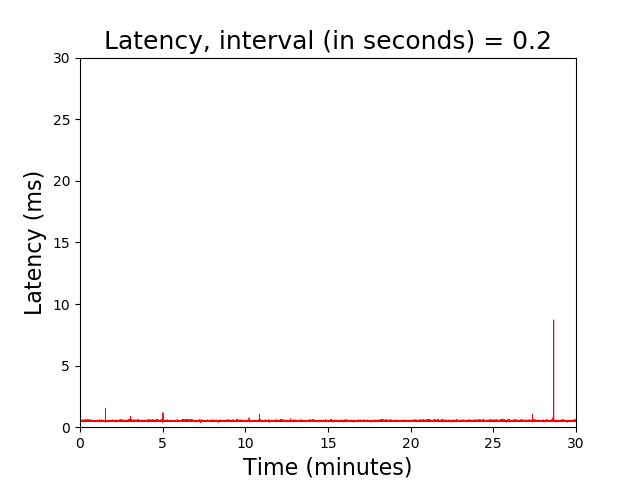

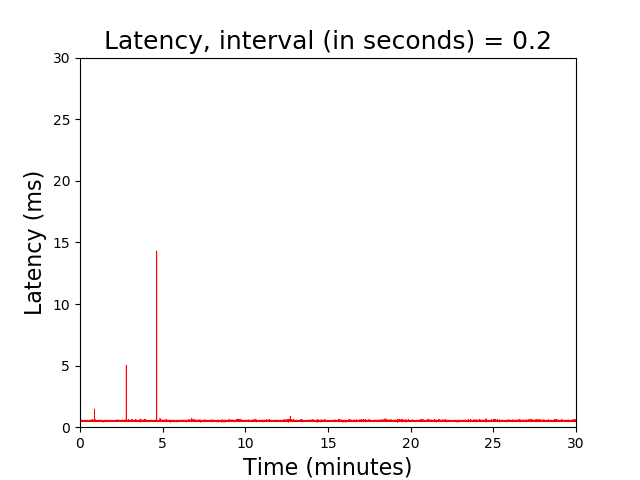

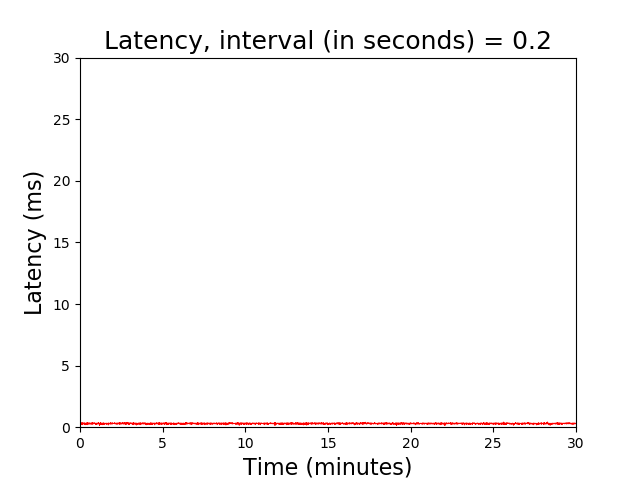

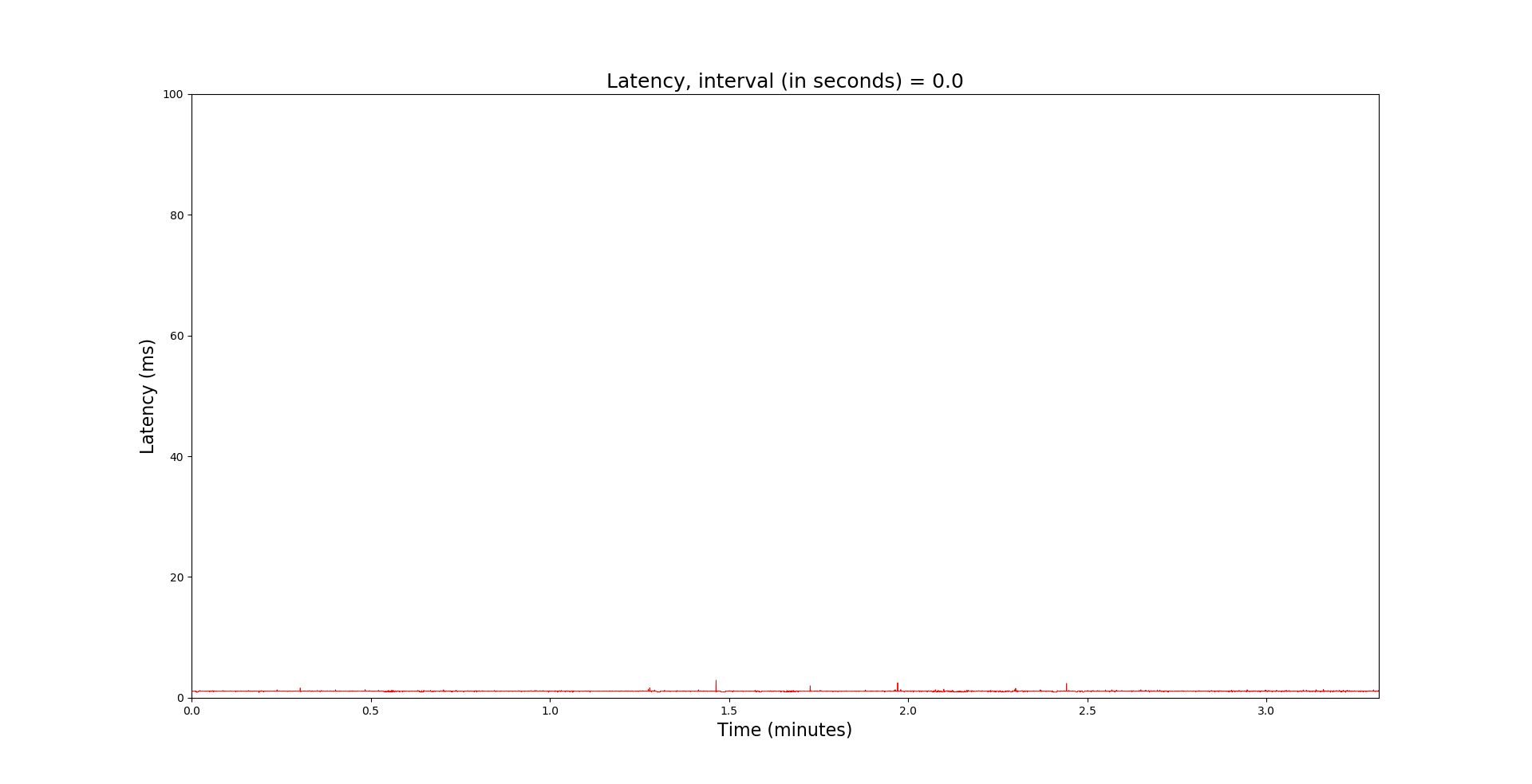

For what it's worth, my unit has 'Antenna #' on the antennae, and I also experience ping spikes. After switching over to stock, the latency issues go away, as expected.

It's not as detailed and thorough as some of your posts here, but have a look at my own evidence in this post here.

As far as I can remember, the kernel log at boot is the same as hnyman's, so same flash (Micron), RAM (Nanya), CPU stepping/revision, etc.

It truly is very strange that some users don't experience this issue... So maybe it has something to do with the connection between a certain modem and its WAN connection to the R7800. Maybe there is something odd with the switching between the QCA8337 and the respective user's modem (does the QCA8337 even control the WAN port?). I'm using an SB8200 as the modem, so it's a Broadcom BCM3390Z.