This is with overhead 28. I tested defragmentation, and it is supposed to be 1472+24+4 for the overhead, which gives us 1500, right?

root@OpenWrt:~# speedtest-netperf.sh -H netperf-west.bufferbloat.net -p 1.1.1.1 --sequential

2025-03-05 18:31:02 Begin test with 30-second transfer sessions.

Measure speed to netperf-west.bufferbloat.net (IPv4) while pinging 1.1.1.1.

Download and upload sessions are sequential, each with 5 simultaneous streams.

.................................

Download: 91.83 Mbps

Latency: [in msec, 33 pings, 0.00% packet loss]

Min: 45.408

10pct: 45.712

Median: 46.250

Avg: 46.330

90pct: 46.696

Max: 48.505

CPU Load: [in % busy (avg +/- std dev), 30 samples]

cpu0: 17.6 +/- 4.2

cpu1: 14.4 +/- 3.6

Overhead: [in % used of total CPU available]

netperf: 4.3

................................

Upload: 23.81 Mbps

Latency: [in msec, 32 pings, 0.00% packet loss]

Min: 45.096

10pct: 45.322

Median: 46.013

Avg: 46.067

90pct: 46.641

Max: 47.806

CPU Load: [in % busy (avg +/- std dev), 30 samples]

cpu0: 7.5 +/- 2.1

cpu1: 4.8 +/- 1.4

Overhead: [in % used of total CPU available]

netperf: 0.6

root@OpenWrt:~#

And this is with overhead 44. Mbps decreased as expected, right? But latency actually increased by about 2 or 3 ms.

root@OpenWrt:~# speedtest-netperf.sh -H netperf-west.bufferbloat.net -p 1.1.1.1 --sequential

2025-03-05 18:36:05 Begin test with 30-second transfer sessions.

Measure speed to netperf-west.bufferbloat.net (IPv4) while pinging 1.1.1.1.

Download and upload sessions are sequential, each with 5 simultaneous streams.

...............................

Download: 79.16 Mbps

Latency: [in msec, 31 pings, 0.00% packet loss]

Min: 45.215

10pct: 45.804

Median: 46.408

Avg: 46.415

90pct: 46.909

Max: 47.796

CPU Load: [in % busy (avg +/- std dev), 29 samples]

cpu0: 14.0 +/- 4.9

cpu1: 10.6 +/- 2.5

Overhead: [in % used of total CPU available]

netperf: 3.3

................................

Upload: 22.23 Mbps

Latency: [in msec, 32 pings, 0.00% packet loss]

Min: 45.085

10pct: 45.317

Median: 45.898

Avg: 45.972

90pct: 46.436

Max: 47.058

CPU Load: [in % busy (avg +/- std dev), 30 samples]

cpu0: 5.7 +/- 1.7

cpu1: 4.6 +/- 1.5

Overhead: [in % used of total CPU available]

netperf: 0.6

root@OpenWrt:~#

These are the settings:

config global 'global'

option enabled '1'

config settings 'settings'

option WAN 'eth1'

option DOWNRATE '99000'

option UPRATE '26000'

option ROOT_QDISC 'hfsc'

config advanced 'advanced'

option PRESERVE_CONFIG_FILES '1'

option WASHDSCPUP '1'

option WASHDSCPDOWN '1'

option BWMAXRATIO '20'

option UDP_RATE_LIMIT_ENABLED '0'

option TCP_UPGRADE_ENABLED '1'

option UDPBULKPORT '51413,6881-6889'

option TCPBULKPORT '51413,6881-6889'

option NFT_HOOK 'forward'

option NFT_PRIORITY '0'

config hfsc 'hfsc'

option LINKTYPE 'ethernet'

option OH '44'

option gameqdisc 'red'

option nongameqdisc 'fq_codel'

option nongameqdiscoptions 'besteffort ack-filter'

option MAXDEL '8'

option PFIFOMIN '5'

option PACKETSIZE '450'

option netemdelayms '30'

option netemjitterms '7'

option netemdist 'normal'

option pktlossp 'none'

config cake 'cake'

option COMMON_LINK_PRESETS 'ethernet'

option PRIORITY_QUEUE_INGRESS 'diffserv4'

option PRIORITY_QUEUE_EGRESS 'diffserv4'

option HOST_ISOLATION '1'

option NAT_INGRESS '1'

option NAT_EGRESS '1'

option ACK_FILTER_EGRESS 'auto'

option AUTORATE_INGRESS '0'

I would argue that this implies that your traffic shaper is not working at all or configured such that the overhead setting has no direct implication.

But I also see that you have both sqm-scripts and qosmate installed. Make sure these are never enabled for the same interface at the same time.

That said, do you have software flow offloading enabled?

And could you post the output of the following commands:

tc -s qdisc

tc -d qdisc

so we can see whatever is actually active on your router.

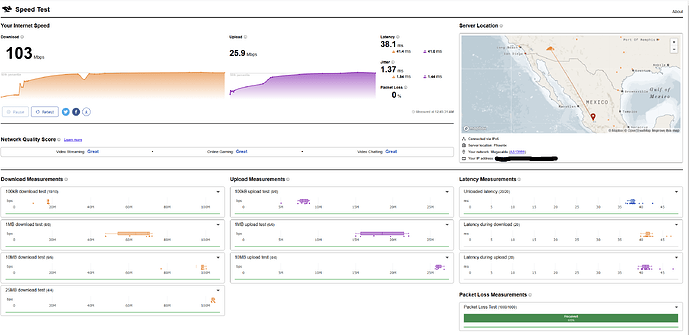

Finally, if possible, run the Cloudflare capacity test:

from a computer behind your router and post a screenshot of the results.

Offloading type: None

root@OpenWrt:~# tc -s qdisc

qdisc noqueue 0: dev lo root refcnt 2

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

qdisc mq 0: dev eth0 root

Sent 11577203802 bytes 9237345 pkt (dropped 0, overlimits 0 requeues 1463)

backlog 0b 0p requeues 1463

qdisc fq_codel 0: dev eth0 parent :10 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :f limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :e limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :d limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :c limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :b limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :a limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :9 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :8 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :7 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :6 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :5 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :4 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :3 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :2 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc fq_codel 0: dev eth0 parent :1 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

Sent 11577203802 bytes 9237345 pkt (dropped 0, overlimits 0 requeues 1463)

backlog 0b 0p requeues 1463

maxpacket 66616 drop_overlimit 0 new_flow_count 9129 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc hfsc 1: dev eth1 root refcnt 17 default 13

Sent 70873222 bytes 153356 pkt (dropped 5, overlimits 62388 requeues 0)

backlog 0b 0p requeues 0

qdisc fq_codel 80f9: dev eth1 parent 1:12 limit 10240p flows 1024 quantum 3000 target 4ms interval 100ms memory_limit 750000b ecn drop_batch 64

Sent 11554974 bytes 11637 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 64034 drop_overlimit 0 new_flow_count 701 ecn_mark 0

new_flows_len 0 old_flows_len 1

qdisc fq_codel 80fb: dev eth1 parent 1:14 limit 10240p flows 1024 quantum 3000 target 4ms interval 100ms memory_limit 750000b ecn drop_batch 64

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

qdisc red 10: dev eth1 parent 1:11 limit 150000b min 1633b max 4900b

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

marked 0 early 0 pdrop 0 other 0

qdisc fq_codel 80fc: dev eth1 parent 1:15 limit 10240p flows 1024 quantum 3000 target 4ms interval 100ms memory_limit 750000b ecn drop_batch 64

Sent 2491565 bytes 18491 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 1040 drop_overlimit 0 new_flow_count 1 ecn_mark 0

new_flows_len 0 old_flows_len 1

qdisc fq_codel 80fa: dev eth1 parent 1:13 limit 10240p flows 1024 quantum 3000 target 4ms interval 100ms memory_limit 750000b ecn drop_batch 64

Sent 56826683 bytes 123228 pkt (dropped 5, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 49074 drop_overlimit 0 new_flow_count 4269 ecn_mark 0

new_flows_len 1 old_flows_len 7

qdisc ingress ffff: dev eth1 parent ffff:fff1 ----------------

Sent 803275548 bytes 674714 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

qdisc noqueue 0: dev br-lan root refcnt 2

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

qdisc noqueue 0: dev phy0-ap0 root refcnt 2

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

qdisc noqueue 0: dev phy1-ap0 root refcnt 2

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

qdisc hfsc 1: dev ifb-eth1 root refcnt 2 default 13

Sent 811070948 bytes 674241 pkt (dropped 246, overlimits 212521 requeues 1468)

backlog 0b 0p requeues 1468

qdisc fq_codel 80fd: dev ifb-eth1 parent 1:12 limit 10240p flows 1024 quantum 3000 target 4ms interval 100ms memory_limit 2750000b ecn drop_batch 64

Sent 377762 bytes 797 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 16154 drop_overlimit 0 new_flow_count 93 ecn_mark 0

new_flows_len 1 old_flows_len 1

qdisc fq_codel 80ff: dev ifb-eth1 parent 1:14 limit 10240p flows 1024 quantum 3000 target 4ms interval 100ms memory_limit 2750000b ecn drop_batch 64

Sent 78647 bytes 348 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 532 drop_overlimit 0 new_flow_count 347 ecn_mark 0

new_flows_len 1 old_flows_len 0

qdisc red 10: dev ifb-eth1 parent 1:11 limit 150000b min 5633b max 16900b

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

marked 0 early 0 pdrop 0 other 0

qdisc fq_codel 80fe: dev ifb-eth1 parent 1:13 limit 10240p flows 1024 quantum 3000 target 4ms interval 100ms memory_limit 2750000b ecn drop_batch 64

Sent 810614539 bytes 673096 pkt (dropped 246, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 64062 drop_overlimit 0 new_flow_count 6273 ecn_mark 0

new_flows_len 1 old_flows_len 2

qdisc fq_codel 8100: dev ifb-eth1 parent 1:15 limit 10240p flows 1024 quantum 3000 target 4ms interval 100ms memory_limit 2750000b ecn drop_batch 64

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0

new_flows_len 0 old_flows_len 0

root@OpenWrt:~# tc -d qdisc

qdisc noqueue 0: dev lo root refcnt 2

qdisc mq 0: dev eth0 root

qdisc fq_codel 0: dev eth0 parent :10 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

qdisc fq_codel 0: dev eth0 parent :f limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

qdisc fq_codel 0: dev eth0 parent :e limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

qdisc fq_codel 0: dev eth0 parent :d limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

qdisc fq_codel 0: dev eth0 parent :c limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

qdisc fq_codel 0: dev eth0 parent :b limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

qdisc fq_codel 0: dev eth0 parent :a limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

qdisc fq_codel 0: dev eth0 parent :9 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

qdisc fq_codel 0: dev eth0 parent :8 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

qdisc fq_codel 0: dev eth0 parent :7 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

qdisc fq_codel 0: dev eth0 parent :6 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

qdisc fq_codel 0: dev eth0 parent :5 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

qdisc fq_codel 0: dev eth0 parent :4 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

qdisc fq_codel 0: dev eth0 parent :3 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

qdisc fq_codel 0: dev eth0 parent :2 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

qdisc fq_codel 0: dev eth0 parent :1 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 4Mb ecn drop_batch 64

qdisc hfsc 1: dev eth1 root refcnt 17 default 13

linklayer ethernet overhead 40

qdisc fq_codel 80f9: dev eth1 parent 1:12 limit 10240p flows 1024 quantum 3000 target 4ms interval 100ms memory_limit 750000b ecn drop_batch 64

qdisc fq_codel 80fb: dev eth1 parent 1:14 limit 10240p flows 1024 quantum 3000 target 4ms interval 100ms memory_limit 750000b ecn drop_batch 64

qdisc red 10: dev eth1 parent 1:11 limit 150000b min 1633b max 4900b ewma 2 probability 1 Scell_log 9

qdisc fq_codel 80fc: dev eth1 parent 1:15 limit 10240p flows 1024 quantum 3000 target 4ms interval 100ms memory_limit 750000b ecn drop_batch 64

qdisc fq_codel 80fa: dev eth1 parent 1:13 limit 10240p flows 1024 quantum 3000 target 4ms interval 100ms memory_limit 750000b ecn drop_batch 64

qdisc ingress ffff: dev eth1 parent ffff:fff1 ----------------

qdisc noqueue 0: dev br-lan root refcnt 2

qdisc noqueue 0: dev phy0-ap0 root refcnt 2

qdisc noqueue 0: dev phy1-ap0 root refcnt 2

qdisc hfsc 1: dev ifb-eth1 root refcnt 2 default 13

linklayer ethernet overhead 40

qdisc fq_codel 80fd: dev ifb-eth1 parent 1:12 limit 10240p flows 1024 quantum 3000 target 4ms interval 100ms memory_limit 2750000b ecn drop_batch 64

qdisc fq_codel 80ff: dev ifb-eth1 parent 1:14 limit 10240p flows 1024 quantum 3000 target 4ms interval 100ms memory_limit 2750000b ecn drop_batch 64

qdisc red 10: dev ifb-eth1 parent 1:11 limit 150000b min 5633b max 16900b ewma 4 probability 1 Scell_log 10

qdisc fq_codel 80fe: dev ifb-eth1 parent 1:13 limit 10240p flows 1024 quantum 3000 target 4ms interval 100ms memory_limit 2750000b ecn drop_batch 64

qdisc fq_codel 8100: dev ifb-eth1 parent 1:15 limit 10240p flows 1024 quantum 3000 target 4ms interval 100ms memory_limit 2750000b ecn drop_batch 64

root@OpenWrt:~#


I have no idea what you are calculating here. Overhead is not restricted to the MTU: an Ethernet frame with up to 1500 bytes of payload will also carry an additional effective overhead of 38 bytes...
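To make the arithmetic concrete, here is a minimal sketch (not taken from qosmate or sqm-scripts) of how a 1472-byte ping payload fills a 1500-byte MTU, and what plain Ethernet adds on top of that. The constant names are purely illustrative, and the 38 bytes assume untagged Ethernet (no VLAN tag) and an IPv4 header without options.

```python
# Per-packet byte accounting for a full-size IPv4 ping on plain Ethernet.
# Assumptions: untagged Ethernet (no VLAN), IPv4 header without options.

ICMP_PAYLOAD = 1472            # the payload size used in the ping test
ICMP_HEADER = 8
IPV4_HEADER = 20

# Ethernet framing that is NOT counted against the 1500-byte MTU
PREAMBLE = 7
START_FRAME_DELIMITER = 1
MAC_HEADER = 14                # dst MAC (6) + src MAC (6) + EtherType (2)
FRAME_CHECK_SEQUENCE = 4
INTER_FRAME_GAP = 12           # idle time on the wire, expressed in bytes

ip_packet = ICMP_PAYLOAD + ICMP_HEADER + IPV4_HEADER
ethernet_overhead = (PREAMBLE + START_FRAME_DELIMITER + MAC_HEADER
                     + FRAME_CHECK_SEQUENCE + INTER_FRAME_GAP)
on_wire = ip_packet + ethernet_overhead

print(f"IP packet size:      {ip_packet} bytes")
print(f"Ethernet overhead:   {ethernet_overhead} bytes")
print(f"Wire time per frame: {on_wire} byte times")
```

So the 1500 comes from payload plus ICMP and IP headers, while the shaper's overhead setting is about the bytes added outside of that. Which value is right for a given link depends on the access technology, so treat the 38 here as the plain-Ethernet case only.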

Regarding your delay test, if you could run the following (and post the results) please:

mtr -ezbw -c 100 -4 one.one.one.one

mtr -ezbw -c 100 -6 one.one.one.one

and

tracepath -b -4 one.one.one.one

tracepath -b -6 one.one.one.one

(you might need to install mtr/tracepath first: opkg update ; opkg install mtr-json iputils-tracepath). These will give us hints about where along the network path the delay is happening...


OK this is with OH 28, will run with 44 later

root@OpenWrt:~# mtr -ezbw -c 100 -4 one.one.one.one

Start: 2025-03-06T08:04:48-0600

HOST: OpenWrt Loss% Snt Last Avg Best Wrst StDev

1. AS??? 172.30.208.3 (172.30.208.3) 0.0% 100 1.4 1.5 1.4 1.8 0.1

2. AS??? 10.3.5.47 (10.3.5.47) 0.0% 100 1.8 1.9 1.7 2.3 0.1

3. AS??? 10.0.81.137 (10.0.81.137) 3.0% 100 2.1 2.0 1.4 2.6 0.3

4. AS??? 10.3.5.46 (10.3.5.46) 0.0% 100 27.9 28.1 27.6 28.7 0.3

[MPLS: Lbl 24015 TC 0 S u TTL 1]

[MPLS: Lbl 25508 TC 0 S u TTL 1]

5. AS??? 10.3.3.125 (10.3.3.125) 0.0% 100 32.4 32.1 31.5 33.3 0.4

[MPLS: Lbl 24092 TC 0 S u TTL 1]

[MPLS: Lbl 25508 TC 0 S u TTL 2]

6. AS??? 10.3.2.60 (10.3.2.60) 11.0% 100 34.3 34.4 33.6 39.7 0.9

[MPLS: Lbl 48637 TC 0 S u TTL 1]

[MPLS: Lbl 25508 TC 0 S u TTL 3]

7. AS??? 10.3.0.2 (10.3.0.2) 0.0% 100 35.0 34.1 33.4 35.5 0.4

8. AS3356 4.4.224.145 (4.4.224.145) 85.0% 100 33.8 34.2 33.7 34.6 0.3

9. AS3356 ae1.3509.edge2.Dallas2.net.lumen.tech (4.69.206.165) 0.0% 100 55.4 44.3 41.6 80.1 5.5

10. AS3356 4.4.128.2 (4.4.128.2) 69.0% 100 48.7 49.6 47.6 67.7 3.7

11. AS13335 141.101.74.211 (141.101.74.211) 0.0% 100 50.9 48.1 45.7 73.9 4.4

12. AS13335 one.one.one.one (1.1.1.1) 0.0% 100 46.5 46.1 45.6 46.8 0.3

root@OpenWrt:~# mtr -ezbw -c 100 -6 one.one.one.one

Start: 2025-03-06T08:06:37-0600

HOST: OpenWrt Loss% Snt Last Avg Best Wrst StDev

1. AS13999 2806:261::10:5:60:11 (2806:261::10:5:60:11) 0.0% 100 1.5 1.5 1.4 2.0 0.1

2. AS174 2001:550:2:107::d (2001:550:2:107::d) 0.0% 100 29.5 29.6 29.4 30.1 0.1

3. AS174 2001:550:2:107::c (2001:550:2:107::c) 7.0% 100 38.8 30.4 30.0 38.8 0.9

4. AS174 be5473.ccr32.phx01.atlas.cogentco.com (2001:550:0:1000::9a36:a646) 44.0% 100 45.0 45.1 44.5 51.7 1.0

5. AS174 be5040.rcr71.b047087-1.phx01.atlas.cogentco.com (2001:550:0:1000::9a36:a352) 4.0% 100 43.7 43.5 43.1 46.1 0.3

6. AS174 2001:550:2:38::bd:2 (2001:550:2:38::bd:2) 0.0% 100 86.1 48.8 42.0 86.1 9.8

7. AS13335 one.one.one.one (2606:4700:4700::1001) 0.0% 100 42.6 41.9 38.4 50.6 1.9

root@OpenWrt:~# tracepath -b -4 one.one.one.one

1?: [LOCALHOST] pmtu 1500

1: 172.30.208.3 (172.30.208.3) 1.722ms

1: 172.30.208.3 (172.30.208.3) 9.871ms

2: 10.3.5.47 (10.3.5.47) 2.154ms asymm 5

3: 10.0.81.137 (10.0.81.137) 1.776ms asymm 4

4: 10.3.5.46 (10.3.5.46) 28.733ms asymm 11

5: 10.3.3.125 (10.3.3.125) 32.693ms asymm 12

6: 10.3.2.60 (10.3.2.60) 34.441ms asymm 12

7: 10.3.0.2 (10.3.0.2) 32.099ms asymm 8

8: no reply

9: ae1.3509.edge2.Dallas2.net.lumen.tech (4.69.206.165) 41.146ms asymm 14

10: 4.4.128.2 (4.4.128.2) 72.888ms asymm 12

11: 141.101.74.51 (141.101.74.51) 44.129ms asymm 15

12: no reply

13: no reply

14: no reply

15: no reply

16: no reply

17: no reply

18: no reply

19: no reply

20: no reply

21: no reply

22: no reply

23: no reply

24: no reply

25: no reply

26: no reply

27: no reply

28: no reply

29: no reply

30: no reply

Too many hops: pmtu 1500

Resume: pmtu 1500

root@OpenWrt:~# tracepath -b -6 one.one.one.one

1?: [LOCALHOST] 0.027ms pmtu 1500

1: 2806:261::10:5:60:11 (2806:261::10:5:60:11) 1.724ms

1: 2806:261::10:5:60:11 (2806:261::10:5:60:11) 1.614ms

2: 2001:550:2:107::d (2001:550:2:107::d) 27.613ms

3: 2001:550:2:107::c (2001:550:2:107::c) 28.305ms asymm 8

4: no reply

5: be5040.rcr71.b047087-1.phx01.atlas.cogentco.com (2001:550:0:1000::9a36:a352) 37.960ms asymm 10

6: 2001:550:2:38::bd:2 (2001:550:2:38::bd:2) 40.386ms asymm 12

7: no reply

8: no reply

9: no reply

10: no reply

11: no reply

12: no reply

13: no reply

14: no reply

15: no reply

16: no reply

17: no reply

18: no reply

19: no reply

20: no reply

21: no reply

22: no reply

23: no reply

24: no reply

25: no reply

26: no reply

27: no reply

28: no reply

29: no reply

30: no reply

Too many hops: pmtu 1500

Resume: pmtu 1500

root@OpenWrt:~#

This is with OH 44. And yes, 40 ms to Dallas is actually what I get and is good enough, BUT with my previous router I was getting between 45 and 60 ms while gaming, and now with the One router I get between 90 and 100 ms.

root@OpenWrt:~# mtr -ezbw -c 100 -4 one.one.one.one

Start: 2025-03-06T08:16:06-0600

HOST: OpenWrt Loss% Snt Last Avg Best Wrst StDev

1. AS??? 172.30.208.3 (172.30.208.3) 0.0% 100 1.6 1.6 1.4 2.0 0.1

2. AS??? 10.3.5.47 (10.3.5.47) 0.0% 100 2.0 1.9 1.7 2.4 0.1

3. AS??? 10.0.81.137 (10.0.81.137) 6.0% 100 2.6 2.1 1.6 2.8 0.3

4. AS??? 10.3.5.46 (10.3.5.46) 0.0% 100 28.4 28.2 27.6 29.5 0.4

[MPLS: Lbl 24015 TC 0 S u TTL 1]

[MPLS: Lbl 25508 TC 0 S u TTL 1]

5. AS??? 10.3.3.125 (10.3.3.125) 0.0% 100 32.2 32.2 31.7 33.5 0.3

[MPLS: Lbl 24092 TC 0 S u TTL 1]

[MPLS: Lbl 25508 TC 0 S u TTL 2]

6. AS??? ??? 100.0 100 0.0 0.0 0.0 0.0 0.0

7. AS??? 10.3.0.2 (10.3.0.2) 0.0% 100 34.5 34.3 33.7 35.5 0.3

8. AS3356 4.4.224.145 (4.4.224.145) 83.0% 100 34.3 34.7 33.7 43.1 2.2

9. AS3356 ae1.3509.edge2.Dallas2.net.lumen.tech (4.69.206.165) 0.0% 100 50.9 44.5 41.5 87.0 6.2

10. AS3356 4.4.128.2 (4.4.128.2) 69.0% 100 49.6 51.5 47.7 66.2 4.6

11. AS13335 141.101.74.211 (141.101.74.211) 0.0% 100 46.3 47.6 45.7 67.3 3.3

12. AS13335 one.one.one.one (1.1.1.1) 0.0% 100 46.1 46.2 45.6 46.9 0.3

root@OpenWrt:~# mtr -ezbw -c 100 -6 one.one.one.one

Start: 2025-03-06T08:18:27-0600

HOST: OpenWrt Loss% Snt Last Avg Best Wrst StDev

1. AS13999 2806:261::10:5:60:11 (2806:261::10:5:60:11) 0.0% 100 1.9 1.6 1.5 2.3 0.1

2. AS174 2001:550:2:107::d (2001:550:2:107::d) 0.0% 100 29.6 29.7 29.5 30.1 0.1

3. AS174 2001:550:2:107::c (2001:550:2:107::c) 16.0% 100 30.2 30.3 30.0 30.7 0.1

4. AS174 be5473.ccr32.phx01.atlas.cogentco.com (2001:550:0:1000::9a36:a646) 92.0% 100 44.9 45.0 44.9 45.3 0.2

5. AS174 be5040.rcr71.b047087-1.phx01.atlas.cogentco.com (2001:550:0:1000::9a36:a352) 13.0% 100 43.5 43.6 43.1 44.7 0.2

6. AS174 2001:550:2:38::bd:2 (2001:550:2:38::bd:2) 0.0% 100 43.1 52.0 42.1 139.1 18.7

7. AS13335 one.one.one.one (2606:4700:4700::1001) 0.0% 100 42.4 41.7 38.4 44.9 1.9

So for IPv6 the big delay jump happens between your ISP (Megacable) and Cogent (in El Paso Texas), so outside of where sqm/qosmate/qos on your router can realistically affect anything...

Well, what we can tell right now is that without network load it is not your OpenWrt One that is responsible here; otherwise the RTT of the first few hops would already be terrible, but these hops have pretty decent RTTs in all MTR runs. So purely judging from this data there is no reason to assume your qosmate install does not work as expected.
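One way to read the mtr tables is to look for the hop where the average RTT steps up the most. Below is a rough sketch of that idea, using the Avg column of the first IPv6 run above. The `hops` list and the `largest_jump` helper are illustrative names, not part of mtr.

```python
# (hop number, AS reported by mtr, average RTT in ms)
# Values copied from the Avg column of the first IPv6 mtr run.
hops = [
    (1, "AS13999", 1.5),
    (2, "AS174", 29.6),
    (3, "AS174", 30.4),
    (4, "AS174", 45.1),
    (5, "AS174", 43.5),
    (6, "AS174", 48.8),
    (7, "AS13335", 41.9),
]


def largest_jump(hop_list):
    """Return (previous_hop, current_hop, delta_ms) for the biggest RTT increase."""
    best = None
    for prev, cur in zip(hop_list, hop_list[1:]):
        delta = cur[2] - prev[2]
        if best is None or delta > best[2]:
            best = (prev, cur, delta)
    return best


prev, cur, delta = largest_jump(hops)
print(f"Largest jump: hop {prev[0]} ({prev[1]}) -> "
      f"hop {cur[0]} ({cur[1]}): +{delta:.1f} ms")
```

Keep in mind that intermediate routers often rate-limit or deprioritize the ICMP replies mtr relies on, so per-hop numbers are hints rather than exact measurements. A jump only matters if it persists through to the final hop.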


Yeah, that is what I was looking for with these. But the last time I used the Linksys router was to run a test to compare it to the One router. What changes between one router and the other? I don't know; let me test again.

OK, do you have any idea what and how?

root@OpenWrt:~# mtr -ezbw -c 100 -4 one.one.one.one

Start: 2025-03-06T10:02:20-0600

HOST: OpenWrt Loss% Snt Last Avg Best Wrst StDev

1. AS??? 172.30.208.3 (172.30.208.3) 0.0% 100 1.6 6.4 1.6 37.7 7.9

2. AS??? 10.3.10.206 (10.3.10.206) 0.0% 100 1.8 1.9 1.7 2.3 0.1

3. AS??? 10.0.81.121 (10.0.81.121) 2.0% 100 2.1 2.1 1.5 2.7 0.3

4. AS??? 10.3.5.48 (10.3.5.48) 0.0% 100 3.2 3.1 2.6 3.9 0.3

[MPLS: Lbl 24500 TC 0 S u TTL 1]

[MPLS: Lbl 26241 TC 0 S u TTL 1]

5. AS??? 10.3.3.127 (10.3.3.127) 0.0% 100 3.6 3.4 2.7 5.1 0.5

[MPLS: Lbl 24108 TC 0 S u TTL 1]

[MPLS: Lbl 26241 TC 0 S u TTL 2]

6. AS??? 10.3.0.72 (10.3.0.72) 0.0% 100 5.6 3.5 2.5 10.1 1.1

7. AS32098 201-174-24-209.transtelco.net (201.174.24.209) 0.0% 100 2.8 3.4 2.6 28.8 2.6

8. AS32098 201-174-250-5.transtelco.net (201.174.250.5) 0.0% 100 7.0 7.1 6.6 7.7 0.3

9. AS32098 201-174-17-159.transtelco.net (201.174.17.159) 0.0% 100 21.3 11.2 7.5 66.1 7.9

10. AS13335 one.one.one.one (1.1.1.1) 0.0% 100 7.3 7.1 6.5 7.7 0.3

root@OpenWrt:~# mtr -ezbw -c 100 -6 one.one.one.one

Start: 2025-03-06T10:05:21-0600

HOST: OpenWrt Loss% Snt Last Avg Best Wrst StDev

1. AS13999 2806:261::10:5:60:11 (2806:261::10:5:60:11) 0.0% 100 1.8 1.8 1.6 2.5 0.1

2. AS174 2001:550:2:107::d (2001:550:2:107::d) 0.0% 100 33.3 33.3 33.1 33.7 0.1

3. AS174 2001:550:2:107::c (2001:550:2:107::c) 8.0% 100 30.5 30.5 30.2 32.0 0.2

4. AS174 be5473.ccr32.phx01.atlas.cogentco.com (2001:550:0:1000::9a36:a646) 17.0% 100 42.5 42.6 42.2 49.2 0.8

5. AS174 be5040.rcr71.b047087-1.phx01.atlas.cogentco.com (2001:550:0:1000::9a36:a352) 1.0% 100 46.0 46.0 45.5 48.4 0.3

6. AS174 2001:550:2:38::bd:2 (2001:550:2:38::bd:2) 0.0% 100 43.7 50.9 42.7 87.7 9.1

7. AS13335 one.one.one.one (2606:4700:4700::1111) 0.0% 100 44.6 42.4 38.6 45.1 2.1

root@OpenWrt:~# tracepath -b -4 one.one.one.one

1?: [LOCALHOST] pmtu 1500

1: 172.30.208.3 (172.30.208.3) 1.883ms

1: 172.30.208.3 (172.30.208.3) 1.999ms

2: 10.3.10.206 (10.3.10.206) 2.821ms

3: 10.0.81.121 (10.0.81.121) 2.258ms

4: 10.3.5.48 (10.3.5.48) 3.229ms asymm 9

5: 10.3.3.128 (10.3.3.128) 3.281ms asymm 9

6: 10.3.0.72 (10.3.0.72) 3.729ms asymm 7

7: 10.3.2.228 (10.3.2.228) 3.405ms

8: 201-174-24-209.transtelco.net (201.174.24.209) 3.921ms

9: 201-174-17-159.transtelco.net (201.174.17.159) 9.363ms

10: 201-174-17-159.transtelco.net (201.174.17.159) 15.659ms asymm 9

11: no reply

12: no reply

13: no reply

14: no reply

15: no reply

16: no reply

17: no reply

18: no reply

19: no reply

20: no reply

21: no reply

22: no reply

23: no reply

24: no reply

25: no reply

26: no reply

27: no reply

28: no reply

29: no reply

30: no reply

Too many hops: pmtu 1500

Resume: pmtu 1500

root@OpenWrt:~# tracepath -b -6 one.one.one.one

1?: [LOCALHOST] 0.084ms pmtu 1500

1: 2806:261::10:5:60:11 (2806:261::10:5:60:11) 139.033ms

1: 2806:261::10:5:60:11 (2806:261::10:5:60:11) 4.744ms

2: 2001:550:2:107::d (2001:550:2:107::d) 31.049ms

3: 2001:550:2:107::c (2001:550:2:107::c) 28.723ms asymm 8

4: no reply

5: be5040.rcr71.b047087-1.phx01.atlas.cogentco.com (2001:550:0:1000::9a36:a352) 44.557ms asymm 10

6: 2001:550:2:38::bd:2 (2001:550:2:38::bd:2) 40.810ms asymm 12

7: no reply

8: no reply

9: no reply

10: no reply

11: no reply

12: no reply

13: no reply

14: no reply

15: no reply

16: no reply

17: no reply

18: no reply

19: no reply

20: no reply

21: no reply

22: no reply

23: no reply

24: no reply

25: no reply

26: no reply

27: no reply

28: no reply

29: no reply

30: no reply

Too many hops: pmtu 1500

Resume: pmtu 1500

Could it possibly help if I reflash the entire firmware? Not the sysupgrade image but the factory one?

With the Linksys router, the test starts right away, but with the One there is a delay of about 20 seconds before it starts.

Your ISP has different routes to 1.1.1.1 (and hence you reach different instances, one closer by via AS32098/Transtelco and one further away via AS174/Cogent).

As far as I can tell this mostly happens within your ISP's network, so there is little you can do...
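To compare routes between runs, it can help to collapse each mtr report into the sequence of AS numbers it crosses. The following is only a sketch: `as_path` is a made-up helper, and the sample lines are a hand-picked subset of the 10:02 IPv4 run and the IPv6 run shown above.

```python
import re


def as_path(mtr_lines):
    """Collapse mtr hop lines into the ordered list of distinct AS numbers."""
    path = []
    for line in mtr_lines:
        match = re.search(r"\bAS(\d+)\b", line)
        if match is None:
            # "AS???" means mtr could not map the hop to an AS; skip it
            continue
        asn = "AS" + match.group(1)
        if not path or path[-1] != asn:
            path.append(asn)
    return path


ipv4_lines = [
    "1. AS??? 172.30.208.3 (172.30.208.3) 0.0% 100 1.6 6.4 1.6 37.7 7.9",
    "7. AS32098 201-174-24-209.transtelco.net (201.174.24.209) 0.0% 100 2.8 3.4 2.6 28.8 2.6",
    "10. AS13335 one.one.one.one (1.1.1.1) 0.0% 100 7.3 7.1 6.5 7.7 0.3",
]
ipv6_lines = [
    "1. AS13999 2806:261::10:5:60:11 (2806:261::10:5:60:11) 0.0% 100 1.8 1.8 1.6 2.5 0.1",
    "2. AS174 2001:550:2:107::d (2001:550:2:107::d) 0.0% 100 33.3 33.3 33.1 33.7 0.1",
    "7. AS13335 one.one.one.one (2606:4700:4700::1111) 0.0% 100 44.6 42.4 38.6 45.1 2.1",
]

print("IPv4:", " -> ".join(as_path(ipv4_lines)))
print("IPv6:", " -> ".join(as_path(ipv6_lines)))
```

Both paths end in AS13335 (Cloudflare), but they get there through different transit networks, which is consistent with reaching different 1.1.1.1 instances.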

But why is the route different with one router than with the other?

Ask your ISP ;)...

Really there is very little that could go different here:

But for b) the most prominent things the ISP sees are:


Yeah, I was thinking DHCP. Even though both routers are on 24.10 firmware, could it be negotiating something different?

At first I thought that adblock was blocking some DNS server, which was causing traffic to take another route.

I'm just going to have to keep testing something different.

OK, so IT WAS the OpenWrt One router

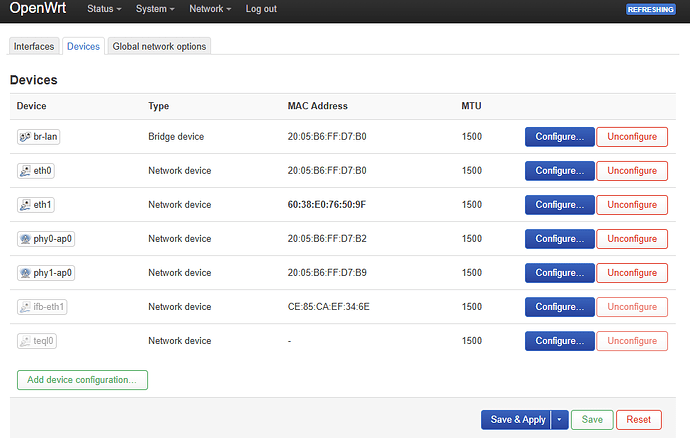

I went to "clone" the MAC address, BUT the "eth0" and "eth1" ports were grayed out as if they weren't configured. I filled out the settings and now they appear "configured" and work the same as on my Linksys router.

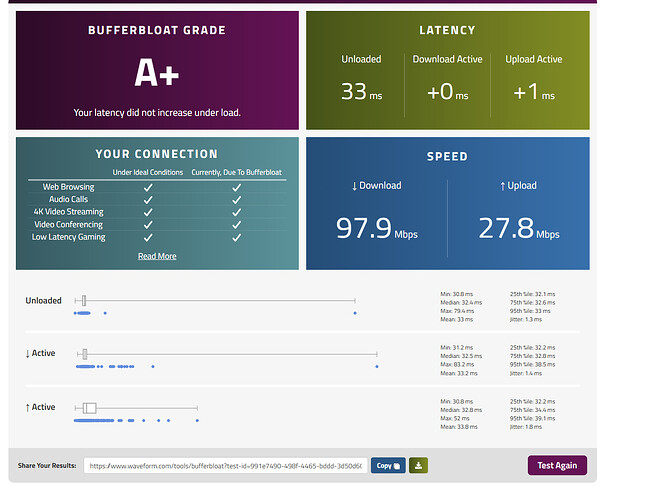

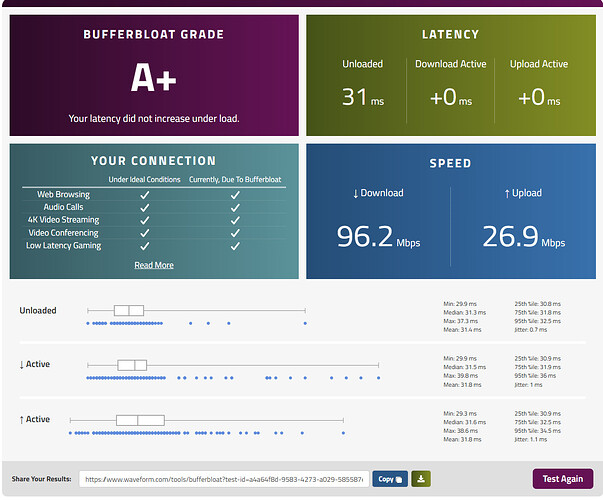

Now it's working even better than my Linksys router, like it's supposed to: increased download and upload speed, 33 ms unloaded with no increase under load, "Low latency gaming" checked, 1.3 ms jitter. I could get even better results once I fine-tune my SQM settings.

Christoph34:

OK so IT WAS the OpenWrt one Router

I went to change "clone" the Mac address BUT the "eth0" and "eth1" ports were grayed out like they weren't configured, I filled out the settings and now they appear "configured" and working the same as my Linksys router

Great that you got it working. I still have no idea though what you did... which fields in which GUI page were grayed out?

Here in the network/interfaces/devices everything was greyed out but the br-lan device

Now everything is working as it should. Before, I was getting no less than 44 ms unloaded and about 85 Mbps download, "Low latency gaming" didn't pass, and jitter was about 7-8 ms. By the way, I was also having an issue with the Wi-Fi dropping a connection, which at the time was not really important to me, but now the connection is rock solid.

That likely just means these devices were running with the default configuration...

Changed nothing. I copy-pasted the same MAC address into each individual device and then deleted the pasted MAC address, leaving behind the same MAC address that was "configured" at the beginning, and that brought up (or lit up, whatever) the device. The same devices are lit up and not greyed out on my Linksys router. I already tested with gaming: I was getting around 80-90 ms and now it stays below 50 ms, whereas it was oscillating around 45-65 ms with my Linksys router.

Would be interesting to compare the /etc/config/network, /etc/config/firewall from before and after these changes...

Christoph34:

Changed nothing

So the One still has its original MAC addresses and not the one from the Linksys?

What I did, now that I remember, was clone the ula_prefix from my Linksys. But I can see that it still has the cloned MAC address from my Linksys; maybe I didn't click Save & Apply. I'm going to retest with the original One MAC address. BUT I clearly remember that there was nothing below the line option proto 'dhcpv6', and now the eth1, eth0, phy0-ap0, phy1-ap0 and ifb-eth1 device sections have been added.

The firewall file seems the same as far as I can see. Do you want me to post it?

config interface 'loopback'

option device 'lo'

option proto 'static'

option ipaddr '127.0.0.1'

option netmask '255.0.0.0'

config globals 'globals'

option ula_prefix 'fdee:ff97:73::/48'

option packet_steering '0'

config device

option name 'br-lan'

option type 'bridge'

list ports 'eth0'

config interface 'lan'

option device 'br-lan'

option proto 'static'

option ipaddr '192.168.1.1'

option netmask '255.255.255.0'

option ip6assign '60'

config interface 'wan'

option device 'eth1'

option proto 'dhcp'

config interface 'wan6'

option device 'eth1'

option proto 'dhcpv6'

config device

option name 'eth1'

option macaddr '60:38:e0:76:50:9f'

config device

option name 'eth0'

config device

option name 'phy0-ap0'

config device

option name 'phy1-ap0'

config device

option name 'ifb-eth1'

That is a Belkin-registered MAC (Belkin bought Linksys IIRC)... It would be interesting to see what happens if you switch back to the One's original MAC. That still keeps the "your ISP does different things for different MACs" theory on life support.