Hi @all,

I'm currently working on tuning OpenWRT inside a virtual machine (VM) as a router/firewall for everyday use.

TL;DR

- Internet upload speed for client devices is unacceptably low (<1 Mbps on a ~40 Mbps line)

- Network throughput from client devices to the OpenWRT VM is too low: ~300 Mbps on a wired gigabit link

- Internet speed is near line speed (DL/UL 100/40 Mbps) when running a speed test directly on the OpenWRT VM, as well as on a physical OpenWRT router

Long version

System information:

- Hardware: Intel Xeon E3-1235L v5 @ 2.00GHz x 4 on an Asus P10S-I; 48GB ECC UDIMM; 2x 1GbE Intel I210; 10GbE Aquantia AQC107

- Host: Ubuntu 18.04.3 running HWE-kernel (5.0.0-23)

- Hypervisor: Virtualbox v.5.2.34_Ubuntur133883

- Modem: Fritz!Box 7412, set to pass through traffic (called "Exposed Host" in settings)

VM information (OpenWRT 19.07.0-rc2 x86-64 image):

hypervisor@PatIsa-Server-Hypervisor:/root$ vboxmanage showvminfo openwrt-rc

Name: openwrt-rc

Groups: /

Guest OS: Other Linux (64-bit)

UUID: b2558384-aeeb-460e-821d-67aef2dbd61a

Config file: /mnt/vm/openwrt-rc/openwrt-rc.vbox

Snapshot folder: /mnt/vm/openwrt-rc/Snapshots

Log folder: /mnt/vm/openwrt-rc/Logs

Hardware UUID: b2558384-aeeb-460e-821d-67aef2dbd61a

Memory size: 8192MB

Page Fusion: off

VRAM size: 16MB

CPU exec cap: 100%

HPET: off

Chipset: ich9

Firmware: BIOS

Number of CPUs: 4

PAE: on

Long Mode: on

Triple Fault Reset: off

APIC: on

X2APIC: on

CPUID Portability Level: 0

CPUID overrides: None

Boot menu mode: message and menu

Boot Device (1): Floppy

Boot Device (2): DVD

Boot Device (3): HardDisk

Boot Device (4): Not Assigned

ACPI: on

IOAPIC: on

BIOS APIC mode: APIC

Time offset: 0ms

RTC: UTC

Hardw. virt.ext: on

Nested Paging: on

Large Pages: off

VT-x VPID: on

VT-x unr. exec.: on

Paravirt. Provider: Default

Effective Paravirt. Provider: KVM

State: running (since 2019-12-03T13:04:20.476000000)

Monitor count: 1

3D Acceleration: off

2D Video Acceleration: off

Teleporter Enabled: off

Teleporter Port: 0

Teleporter Address:

Teleporter Password:

Tracing Enabled: off

Allow Tracing to Access VM: off

Tracing Configuration:

Autostart Enabled: off

Autostart Delay: 0

Default Frontend:

Storage Controller Name (0): SATA

Storage Controller Type (0): IntelAhci

Storage Controller Instance Number (0): 0

Storage Controller Max Port Count (0): 30

Storage Controller Port Count (0): 2

Storage Controller Bootable (0): on

SATA (0, 0): /mnt/vm/openwrt-rc/Snapshots/{6a158de0-00db-4a7e-b6a4-add6ba641f96}.vdi (UUID: 6a158de0-00db-4a7e-b6a4-add6ba641f96)

SATA (1, 0): Empty

NIC 1: MAC: 0800276AA93C, Attachment: Bridged Interface 'enp8s0', Cable connected: on, Trace: off (file: none), Type: virtio, Reported speed: 0 Mbps, Boot priority: 0, Promisc Policy: allow-all, Bandwidth group: none

NIC 2: MAC: 08002706074A, Attachment: Bridged Interface 'eno2', Cable connected: on, Trace: off (file: none), Type: virtio, Reported speed: 0 Mbps, Boot priority: 0, Promisc Policy: deny, Bandwidth group: none

NIC 3: MAC: 0800278B02B8, Attachment: Internal Network 'openwrt_switch', Cable connected: on, Trace: off (file: none), Type: virtio, Reported speed: 0 Mbps, Boot priority: 0, Promisc Policy: deny, Bandwidth group: none

NIC 4: disabled

NIC 5: disabled

NIC 6: disabled

NIC 7: disabled

NIC 8: disabled

NIC 9: disabled

NIC 10: disabled

NIC 11: disabled

NIC 12: disabled

NIC 13: disabled

NIC 14: disabled

NIC 15: disabled

NIC 16: disabled

NIC 17: disabled

NIC 18: disabled

NIC 19: disabled

NIC 20: disabled

NIC 21: disabled

NIC 22: disabled

NIC 23: disabled

NIC 24: disabled

NIC 25: disabled

NIC 26: disabled

NIC 27: disabled

NIC 28: disabled

NIC 29: disabled

NIC 30: disabled

NIC 31: disabled

NIC 32: disabled

NIC 33: disabled

NIC 34: disabled

NIC 35: disabled

NIC 36: disabled

Pointing Device: USB Tablet

Keyboard Device: PS/2 Keyboard

UART 1: disabled

UART 2: disabled

UART 3: disabled

UART 4: disabled

LPT 1: disabled

LPT 2: disabled

Audio: disabled

Audio playback: enabled

Audio capture: disabled

Clipboard Mode: disabled

Drag and drop Mode: disabled

Session name: GUI/Qt

Video mode: 720x400x0 at 0,0 enabled

VRDE: disabled

USB: disabled

EHCI: disabled

XHCI: enabled

USB Device Filters:

<none>

Available remote USB devices:

<none>

Currently Attached USB Devices:

<none>

Bandwidth groups: <none>

Shared folders: <none>

VRDE Connection: not active

Clients so far: 0

Capturing: not active

Capture audio: not active

Capture screens: 0

Capture file: /mnt/vm/openwrt-rc/openwrt-rc.webm

Capture dimensions: 1024x768

Capture rate: 512 kbps

Capture FPS: 25

Capture options: ac_enabled=false

Guest:

Configured memory balloon size: 0 MB

OS type: Linux_64

Additions run level: 0

Guest Facilities:

No active facilities.

Snapshots:

Name: 0000 (UUID: 88a337af-856f-45ed-8df6-44d50f8dff37) *

Description:

Vor dem eigentlichen Start

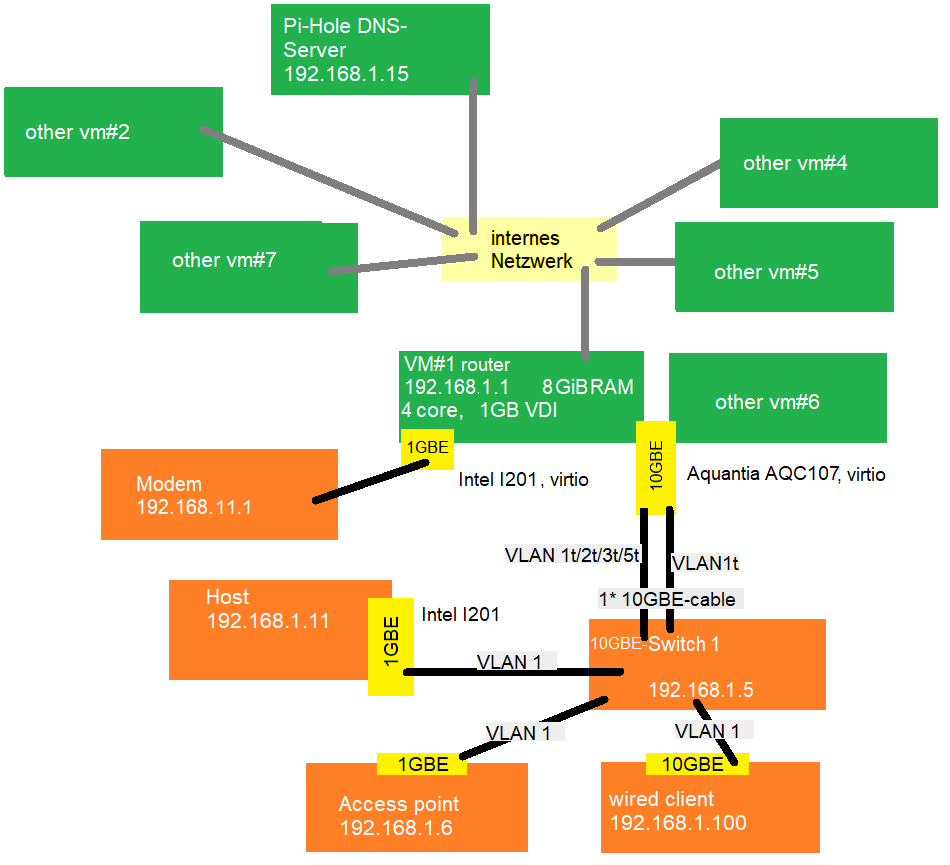

Network-setup:

When I started on this, clients wired to the switch got DL/UL speeds of around 2/0.05 Mbps.

Running a speed test directly on the VM gives the following results:

root@OpenWrt:~# speedtest-netperf.sh -s -H netperf-eu.bufferbloat.net -p 1.1.1.1

2019-12-03 13:17:30 Starting speedtest for 60 seconds per transfer session.

Measure speed to netperf-eu.bufferbloat.net (IPv4) while pinging 1.1.1.1.

Download and upload sessions are sequential, each with 5 simultaneous streams.

.............................................................

Download: 85.40 Mbps

Latency: [in msec, 61 pings, 0.00% packet loss]

Min: 21.402

10pct: 21.904

Median: 23.290

Avg: 23.430

90pct: 25.119

Max: 26.475

CPU Load: [in % busy (avg +/- std dev), 59 samples]

cpu0: 20.5 +/- 6.8

cpu1: 16.8 +/- 7.7

cpu2: 18.0 +/- 7.1

cpu3: 12.3 +/- 5.1

Overhead: [in % used of total CPU available]

netperf: 4.1

.............................................................

Upload: 36.83 Mbps

Latency: [in msec, 61 pings, 0.00% packet loss]

Min: 22.964

10pct: 23.346

Median: 25.037

Avg: 25.730

90pct: 27.968

Max: 41.320

CPU Load: [in % busy (avg +/- std dev), 59 samples]

cpu0: 15.4 +/- 4.2

cpu1: 11.1 +/- 3.5

cpu2: 17.9 +/- 4.9

cpu3: 0.4 +/- 0.7

Overhead: [in % used of total CPU available]

netperf: 0.2

which is much closer to my real line speed of 100/40 Mbps.

I then installed sqm-scripts and configured it with DL/UL limits of 95000/38000 kbit/s, which resulted in an imperfect but acceptable DL of ~70 Mbps, while UL still remains at 0.07 Mbps.
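For reference, here is a minimal sketch of how limits like these could be applied from the shell instead of through LuCI. It only prints the commands that `uci batch` would read. The interface name `eth1`, the use of the first `queue` section, and the helper name `sqm_uci_batch` are assumptions for illustration, not my exact configuration.

```shell
#!/bin/sh
# Hypothetical helper: print uci batch commands for an SQM shaper.
#   $1 = WAN interface (eth1 is an assumption, adjust to your setup)
#   $2 = download limit in kbit/s
#   $3 = upload limit in kbit/s
sqm_uci_batch() {
    cat <<EOF
set sqm.@queue[0].interface='$1'
set sqm.@queue[0].download='$2'
set sqm.@queue[0].upload='$3'
set sqm.@queue[0].enabled='1'
commit sqm
EOF
}

# Show what would be applied for the 95000/38000 limits mentioned above.
sqm_uci_batch eth1 95000 38000
```

On the router, the output would be piped into uci and SQM restarted afterwards: `sqm_uci_batch eth1 95000 38000 | uci batch && /etc/init.d/sqm restart`.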

Next, I installed iperf to measure wired speeds to and from the VM:

root@OpenWrt:~# iperf -s

------------------------------------------------------------

Server listening on TCP port 5001

TCP window size: 85.3 KByte (default)

------------------------------------------------------------

[ 4] local 192.168.1.1 port 5001 connected with 192.168.1.100 port 60122

[ ID] Interval Transfer Bandwidth

[ 4] 0.0-10.0 sec 353 MBytes 296 Mbits/sec

I could see a peak of 115 MiB/s, but the average was 20-25 MiB/s.
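To put these figures on the same scale, here is a small sketch of the unit conversion. It assumes iperf counts a "MByte" as 2^20 bytes and a "Mbit" as 10^6 bits; the function names are made up for this example.

```shell
#!/bin/sh
# Convert an iperf transfer (in MiB) over a duration (in seconds) to Mbit/s.
transfer_to_mbps() {
    awk -v mib="$1" -v secs="$2" \
        'BEGIN { printf "%.0f\n", mib * 1048576 * 8 / secs / 1000000 }'
}

# Convert a rate in MiB/s to Mbit/s.
mibps_to_mbps() {
    awk -v rate="$1" 'BEGIN { printf "%.0f\n", rate * 1048576 * 8 / 1000000 }'
}

transfer_to_mbps 353 10   # 296, matches the iperf summary line
mibps_to_mbps 115         # 965, the observed peak is close to gigabit
mibps_to_mbps 20          # 168
mibps_to_mbps 25          # 210
```

So the peak is essentially gigabit line rate, while the typical rate of 20-25 MiB/s corresponds to only about 170-210 Mbit/s.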

The same client connecting to an OpenWRT access point in my LAN:

root@accesspoint:~# iperf -s

------------------------------------------------------------

Server listening on TCP port 5001

TCP window size: 85.3 KByte (default)

------------------------------------------------------------

[ 4] local 192.168.1.6 port 5001 connected with 192.168.1.100 port 60293

[ ID] Interval Transfer Bandwidth

[ 4] 0.0-10.0 sec 1.07 GBytes 914 Mbits/sec

So here I reach the expected bandwidth, which shows that my general network setup is fine.

Changing the MTU makes no difference.

Does anyone have an idea how to solve these connection issues?

Best,

ssdnvv

edit: The results are the same whether I run those tests with an 18.06.4 or a 19.07.0-rc2 image.

Trying to copy a file over SSH (using WinSCP), I get 64 Kbps at best, if it works at all. (Again: both directions reach the gigabit limit when addressing a physical, wired OpenWrt machine in my network.)
