Hi, I just upgraded my family's MR8300 router from v22.03.7 to v24.10.0. I'm no expert, but I figured I could use iperf3 to test between two devices on the local network both before and after the upgrade, so that's what I did. The results are a bit further down, but in short they seem to match/confirm this issue.

I don't think this issue is a big deal for our use case, which is just a basic home wireless router (we rarely share files/data within the local network), but I thought I could at least provide a datapoint while upgrading. In fact, the wifi connection with my laptop seems quite a bit faster on the new version, so I'm certainly not complaining.

NOTE: While I was writing this up I ran the test a few more times, and once in a while the bandwidth comes out much better (in line with what I got before the upgrade)! The load average is still high every time, however. I also took a snapshot of 'top' while the test was running; it's included below, and as you can see '[napi/eth0-8]' is the biggest CPU user during the test.

Test Results:

Before upgrade (OpenWrt v22.03.7):

Bandwidth: 948 Mbits/s

1min load avg: 0.01 (at end of test)

(from Realtime graphs / System load page, for 1 min load) Average: 0.02, peak: 0.08

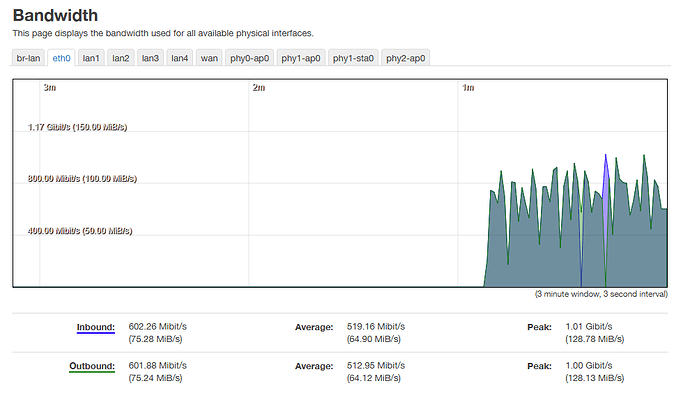

After upgrade (OpenWrt v24.10.0):

Test 1:

Bandwidth: 730 Mbits/s

1min load avg: 1.12 (at end of test)

(from Realtime graphs / System load page, for 1 min load) Average: 1.24, peak: 1.46

Test 2:

Bandwidth: 606 Mbits/s

1min load avg: 1.14 (at end of test)

(from Realtime graphs / System load page, for 1 min load) Average: 1.07, peak: 1.29
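To put the numbers above in perspective, here is a quick sketch of the relative drop in throughput. The figures are copied from the results above; the helper name `percent_drop` is just something I made up for illustration.

```python
# Throughput figures copied from the results above (Mbits/s).
BEFORE_MBITS = 948.0
AFTER_MBITS = {"Test 1": 730.0, "Test 2": 606.0}


def percent_drop(before: float, after: float) -> float:
    """Return how much lower `after` is than `before`, as a percentage of `before`."""
    return (before - after) / before * 100.0


for label, after in AFTER_MBITS.items():
    drop = percent_drop(BEFORE_MBITS, after)
    print(f"{label}: {drop:.1f}% lower than before the upgrade")
```

That works out to roughly 23% lower for Test 1 and roughly 36% lower for Test 2.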

Test Notes:

I just ran the basic iperf3 test, with one machine running 'iperf3 -s' and the other running 'iperf3 -c <ip_addr> -t 240'. Both are laptops, one running Windows 10 and the other Fedora Linux.
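If anyone wants to repeat the client side of this in a script, something like the following might work. It is only a sketch: it assumes iperf3 is on the PATH, uses iperf3's `-J` (JSON output) option, and assumes the JSON report for a TCP test carries the receiver-side rate under `end.sum_received.bits_per_second`. The function names and the address in the comment are placeholders of mine, not anything I actually ran.

```python
import json
import subprocess


def run_iperf3_client(server: str, seconds: int = 240) -> dict:
    """Run 'iperf3 -c <server> -t <seconds> -J' and return the parsed JSON report."""
    completed = subprocess.run(
        ["iperf3", "-c", server, "-t", str(seconds), "-J"],
        capture_output=True,
        text=True,
        check=True,  # raise if iperf3 exits with an error
    )
    return json.loads(completed.stdout)


def received_mbits(report: dict) -> float:
    """Receiver-side throughput in Mbits/s (assumes a TCP test report layout)."""
    return report["end"]["sum_received"]["bits_per_second"] / 1e6


# Example (not run here; the address is a placeholder for the 'iperf3 -s' machine):
#   report = run_iperf3_client("192.168.1.10")
#   print(f"{received_mbits(report):.0f} Mbits/s")
```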

Top Sample while test is running:

Mem: 194884K used, 311920K free, 428K shrd, 0K buff, 19828K cached

CPU: 0% usr 16% sys 0% nic 68% idle 0% io 0% irq 14% sirq

Load average: 1.11 0.83 0.62 5/116 4454

PID PPID USER STAT VSZ %VSZ %CPU COMMAND

69 2 root RW 0 0% 24% [napi/eth0-8]

76 2 root RW 0 0% 4% [napi/eth0-0]

75 2 root SW 0 0% 2% [napi/eth0-5]

14 2 root SW 0 0% 0% [ksoftirqd/0]

1150 1105 network S 3284 1% 0% /usr/sbin/hostapd -s -g /var/run/hostapd/global

4416 4408 root R 1144 0% 0% top
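For what it's worth, here is a small sketch that adds up the CPU share of the napi threads in the sample above. The process lines are copied from the snapshot; the regex assumes the column layout shown in the header (PID PPID USER STAT VSZ %VSZ %CPU COMMAND) with a plain number in the VSZ column, and `cpu_by_command` is just an illustrative name.

```python
import re

# Process lines copied from the 'top' sample above.
TOP_LINES = """\
69 2 root RW 0 0% 24% [napi/eth0-8]
76 2 root RW 0 0% 4% [napi/eth0-0]
75 2 root SW 0 0% 2% [napi/eth0-5]
14 2 root SW 0 0% 0% [ksoftirqd/0]
1150 1105 network S 3284 1% 0% /usr/sbin/hostapd -s -g /var/run/hostapd/global
4416 4408 root R 1144 0% 0% top
"""

# Columns: PID PPID USER STAT VSZ %VSZ %CPU COMMAND
ROW = re.compile(
    r"^\s*(\d+)\s+(\d+)\s+(\S+)\s+(\S+)\s+(\d+)\s+(\d+)%\s+(\d+)%\s+(.+)$"
)


def cpu_by_command(text: str) -> dict:
    """Map each command in the sample to its %CPU value."""
    usage = {}
    for line in text.splitlines():
        match = ROW.match(line)
        if match:
            usage[match.group(8).strip()] = int(match.group(7))
    return usage


usage = cpu_by_command(TOP_LINES)
napi_total = sum(pct for cmd, pct in usage.items() if cmd.startswith("[napi/"))
print(f"napi threads combined: {napi_total}% CPU")
```

In this one sample the three napi/eth0 threads add up to 30% CPU, with '[napi/eth0-8]' accounting for most of it.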