Sorry, but as I was trying to put my thoughts into somewhat coherent sentences, I noticed my English skills are just not sufficient for that task. This is why I used some translation software. I hope this is okay!


I don't even know where to start...

The situation is that I moved into a shared apartment with a friend and we simply took our existing networks with us. He uses an AVM Fritz!Box 7490 and I use some x86 China box from AliExpress, on which I have installed OPNsense. To somehow connect the networks to the Internet, I bought a NanoPi R6S from Amazon and slapped OpenWrt 24.10.1 on it (following this installation guide and this guide for performance optimization, option #1). After I deactivated NAT in both the Fritz!Box and the OPNsense router and configured the static routes in OpenWrt, everything worked fine at first (well, except for the problems with DS-lite, but even that works fine now. Big THANK YOU to @moeller0 and @pavelgl !).

However, we quickly realized that as soon as one of us starts a download (or even just streams videos), the other one effectively can no longer use the Internet. Playing Counter-Strike or Call of Duty in the meantime (yes, I know, typical first-world problem...) is completely out of the question.

After a brief search, I came across the term “bufferbloat”, which seems to describe the situation pretty well. So I did some research on how to counteract this. I quickly came across this article on the OpenWrt wiki. Although I'm pretty sure I followed everything exactly as described at first, we still had problems.

I don't know how many hours I've spent changing a setting and then using FLENT to check whether the change had a positive or negative effect. All for naught.

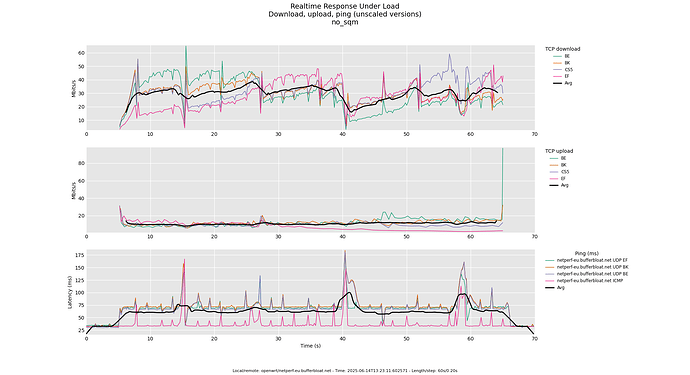

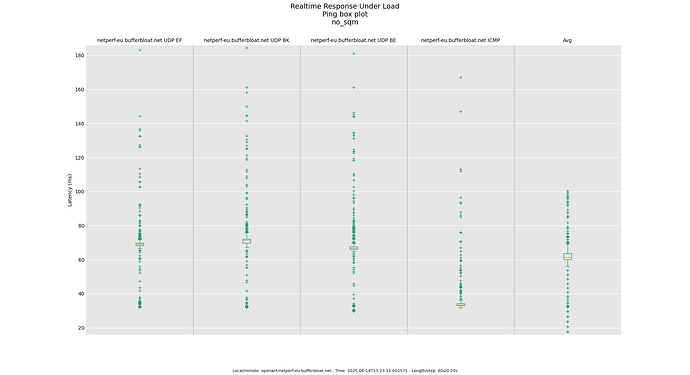

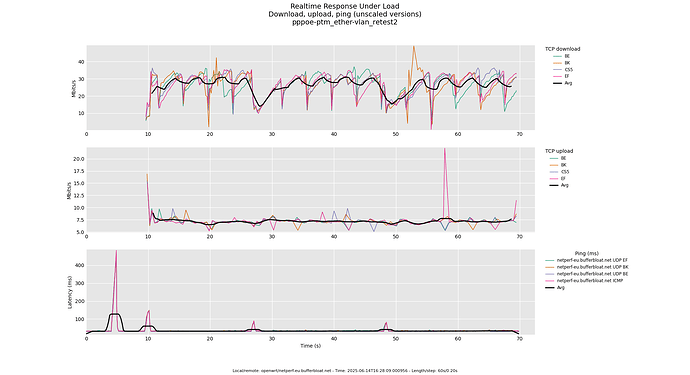

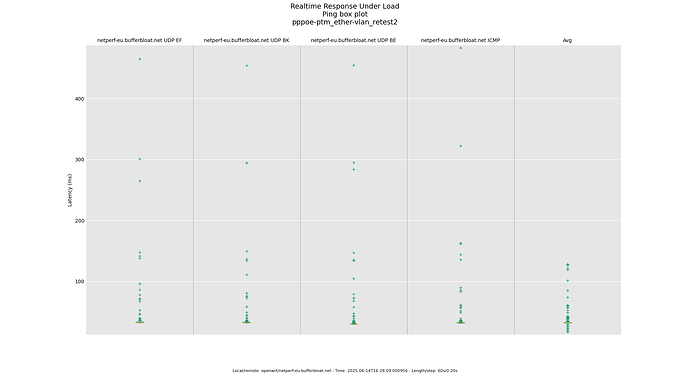

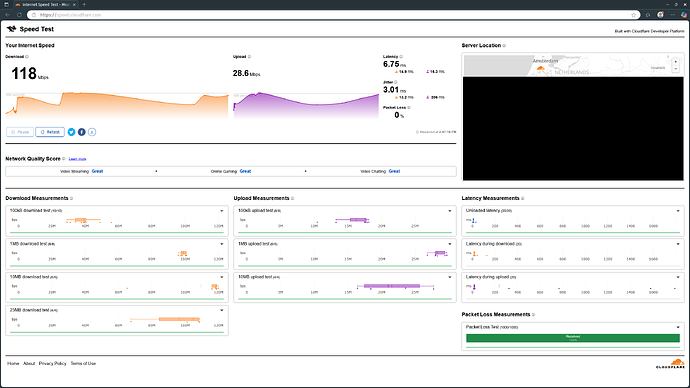

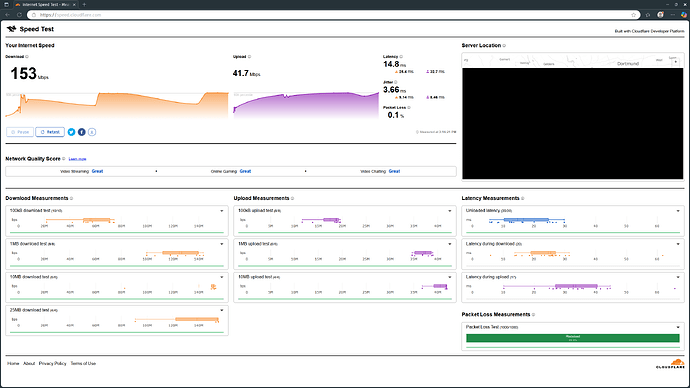

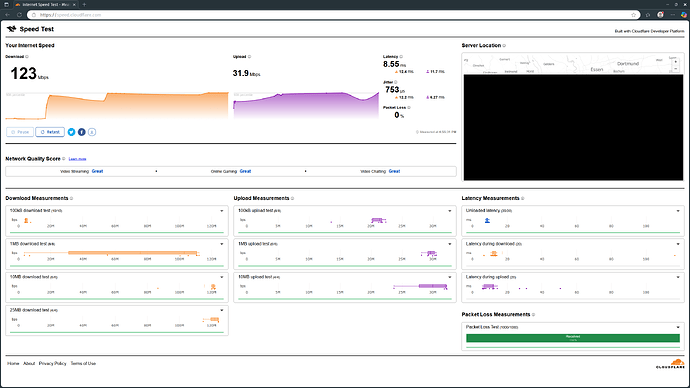

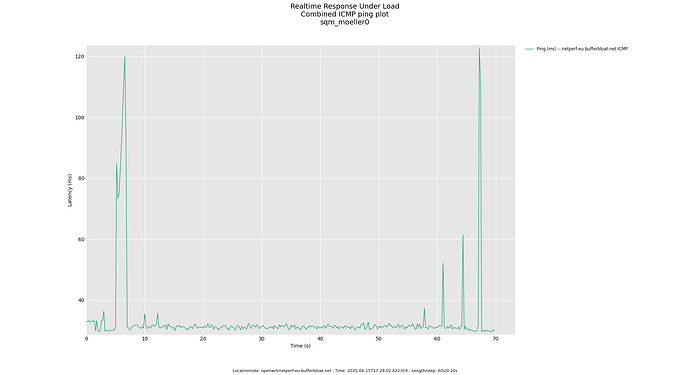

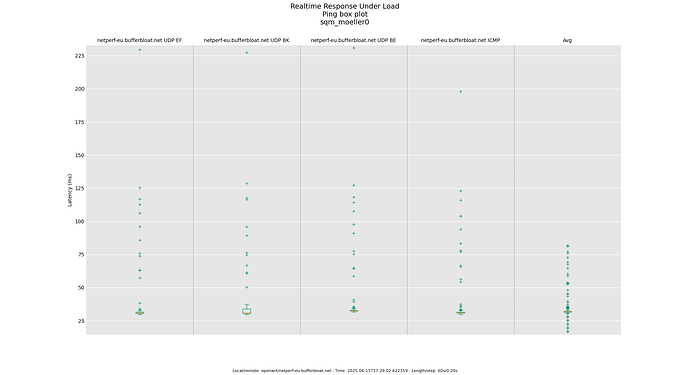

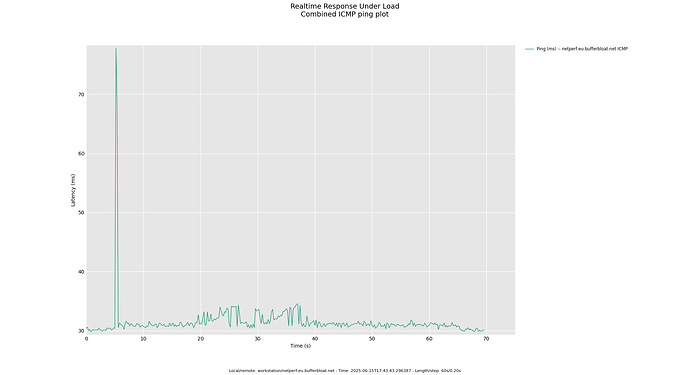

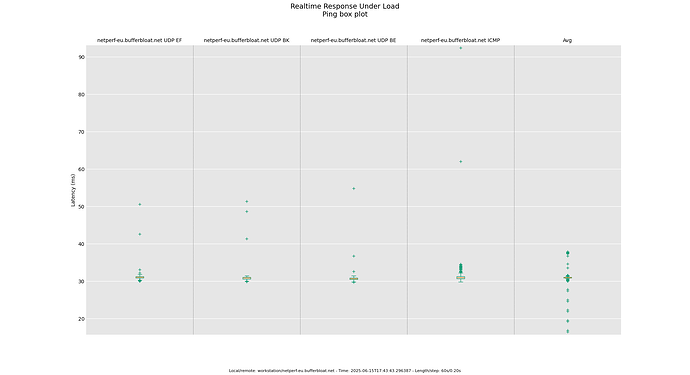

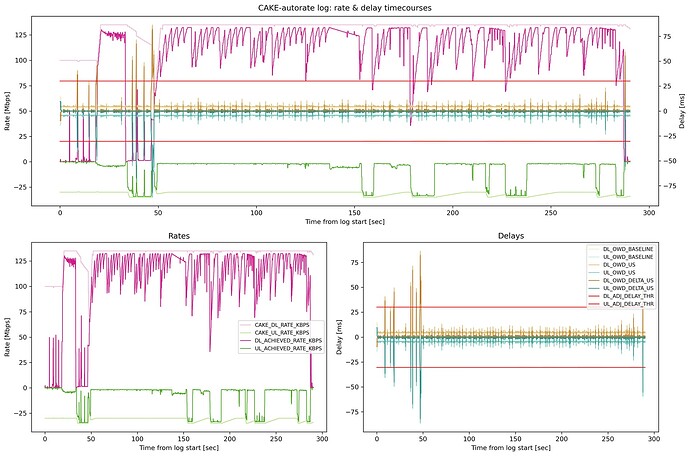

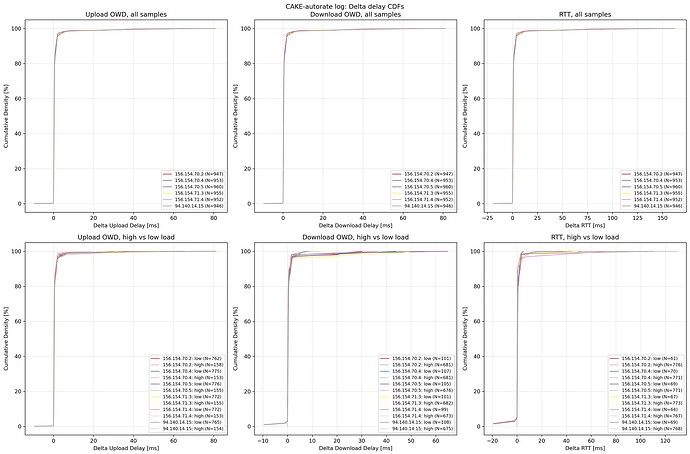

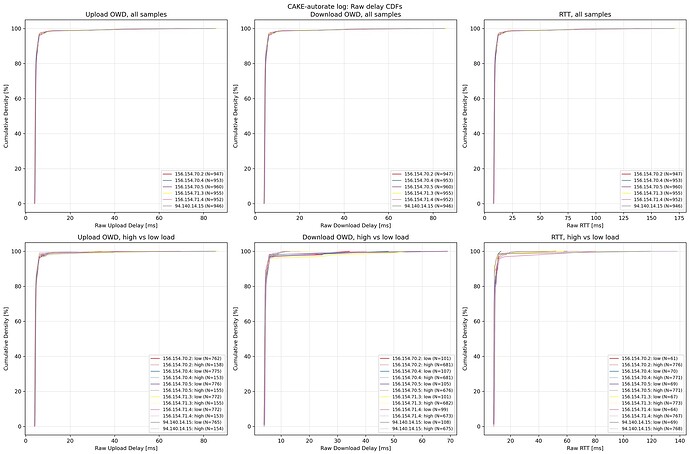

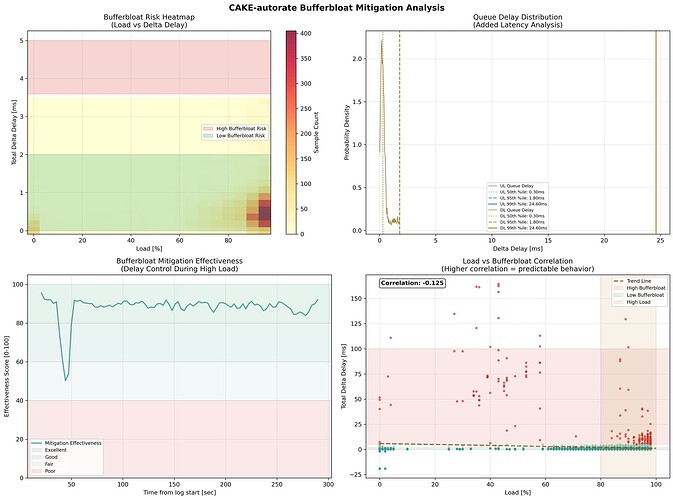

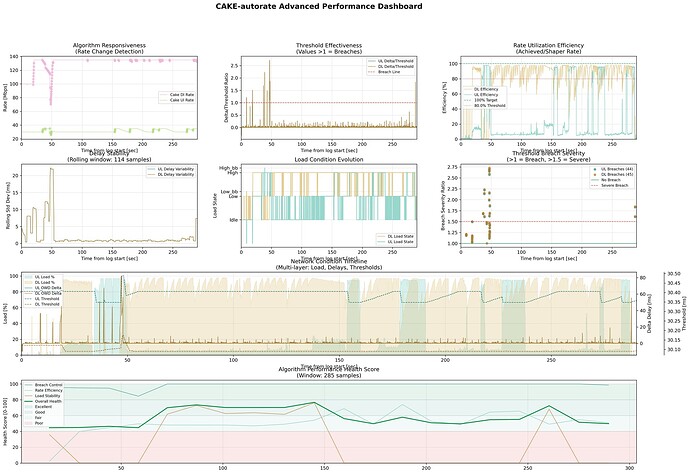

The problem: As soon as the bandwidth is fully utilized, latency increases sharply (10-25x). Although the ping drops almost as quickly as it shot up, that is enough to cause extreme stuttering in games, and there are persistent, noticeable delays for as long as the Internet connection is that busy. Even though the ping is fine most of the time, warnings such as “extrapolation” are then displayed (and opponents no longer topple over so easily). You can see this in every speed test, regardless of the server used; only the baseline latency is, of course, slightly higher with more distant servers.


I have noticed that (without SQM) the available bandwidth is not fully used at first during these speed tests, especially in the download direction. It first jumps to around 30-40 Mbps and then veeery slowly climbs to the maximum possible 150-160 Mbps. I can also observe this in the upload direction, but there it doesn't take quite as long for the bandwidth to be fully used. Strangely enough, this only happens when I don't run one speed test right after the other, in other words only when the Internet connection “rests” a little between speed tests.

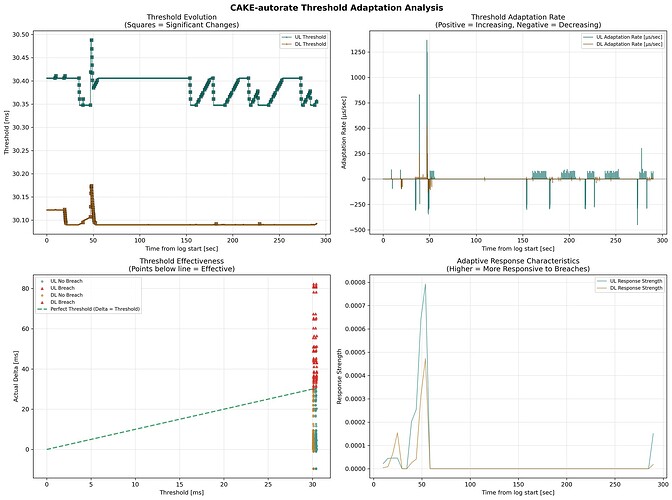

As a layman, I suspect a connection... maybe CAKE can't cope with this slow ramp-up in bandwidth?

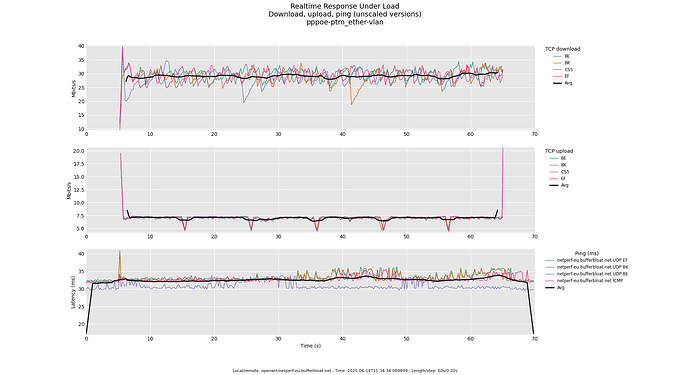

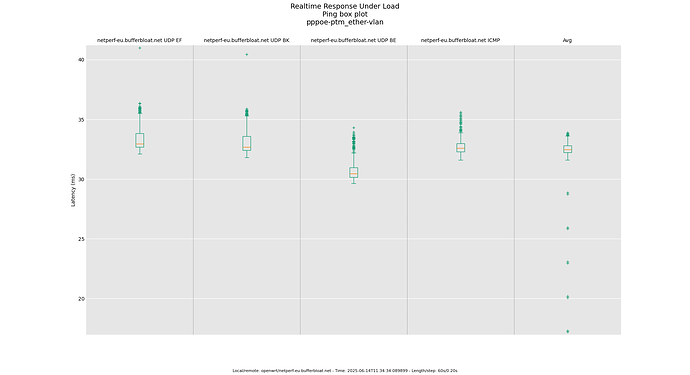

As I wrote, I initially adopted the settings from the guide in the OpenWrt Wiki (for VDSL2 with PPPoE and VLAN tagging), but have now (after countless FLENT tests) ended up with the following settings:

Basic Settings:

^- Enable this SQM instance: [x]

^- Interface name: pppoe-wan (Is this even correct? Or should I have chosen "eth1" or "eth1.7" here?)

^- Download speed (ingress): 135000 (speed test without SQM: ~150-160 Mbps)

^- Upload speed (egress): 35000 (speed test without SQM: ~40-45 Mbps)

^- Enable debug logging: [ ]

^- Log verbosity: warning

Queue Discipline:

^- Queueing discipline: cake

^- Queue setup script: piece_of_cake.qos

^- Advanced Configuration: [x]

^- Squash DSCP (ingress): SQUASH

^- Ignore DSCP (ingress): Allow

^- ECN (ingress): ECN (default)

^- ECN (egress): NOECN (default)

^- Dangerous Configuration: [x]

^- Hard queue limit (ingress):

^- Hard queue limit (egress):

^- Latency target (ingress):

^- Latency target (egress):

^- Qdisc options (ingress): ingress pppoe-ptm ether-vlan nat

^- Qdisc options (egress): besteffort pppoe-ptm ether-vlan nat

Link Layer Adaptation:

^- Link layer: none (default)
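
For anyone who prefers to compare against a config file instead of the LuCI labels: a rough sketch of how the settings above might look in `/etc/config/sqm`. The option names are written from memory of sqm-scripts and luci-app-sqm and should be treated as assumptions, as should the section name `wan` and the numeric value for the "warning" verbosity; `uci show sqm` on the router prints the real thing.

```
# /etc/config/sqm (sketch; option names and the section name are assumptions)
config queue 'wan'
	option enabled '1'
	option interface 'pppoe-wan'
	option download '135000'
	option upload '35000'
	option debug_logging '0'
	# assumed mapping: '2' = warning
	option verbosity '2'
	option qdisc 'cake'
	option script 'piece_of_cake.qos'
	option qdisc_advanced '1'
	# SQUASH on ingress, but do not ignore DSCP ("Allow")
	option squash_dscp '1'
	option squash_ingress '0'
	option ingress_ecn 'ECN'
	option egress_ecn 'NOECN'
	option qdisc_really_really_advanced '1'
	option iqdisc_opts 'ingress pppoe-ptm ether-vlan nat'
	option eqdisc_opts 'besteffort pppoe-ptm ether-vlan nat'
	option linklayer 'none'
```

The empty hard queue limit and latency target fields are simply left out here, so the script defaults would apply.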

A few notes on this:

- According to the speed tests (e.g. the one from OOKLA, but also the one from our provider, Vodafone), the real bandwidth is somewhere between 150-160 Mbps in the download direction and 40-45 Mbps in the upload direction.

- According to our Draytek 167 modem, the `actual rate` is `177277 kbps` and `46655 kbps`.

- I am really unsure whether I have to use `pppoe-wan`, `eth1` or `eth1.7` as the interface. I don't find the information on this in the wiki all that clear, which may of course be due to my lack of English skills. Because of this thread I set it to `pppoe-wan`.

- I use `besteffort` in the upload direction because with the default `diffserv3` (and also with `diffserv4`), traffic generally ends up in the wrong tins according to `tc -s qdisc show`.

- It probably makes little sense to use `diffserv3` as an `ingress` option when I have `Squash DSCP (ingress)` set to `SQUASH` at the same time, right? Still, that damned ping spike seems to occur less often with it. Could be a coincidence, though.

- I previously had `Link layer` set to `Ethernet with overhead: select for e.g. VDSL2.`, as suggested in the wiki, and stepped `Per Packet Overhead (bytes)` from `22` to `46` in increments of 2 and `Minimum packet size` from `68` to `86` (without `pppoe-ptm` and `ether-vlan`, of course), testing the result with FLENT each time. I got the best result with an overhead of `34`, which is why I now use the two options that achieve the same thing instead.
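
To put the shaper rates next to the modem's sync rates from the notes above, here is a minimal shell sketch. It only does integer arithmetic on the numbers already quoted; the function names `pct_of_sync` and `ptm_payload_rate` are made up for illustration, and the 64/65 factor assumes the usual PTM 64b/65b encoding on VDSL2.

```shell
#!/bin/sh
# Sketch only: relate the SQM shaper rates to the modem sync rates.

# Integer percentage of the sync rate that the shaper is set to.
# $1 = sync rate in kbps, $2 = shaper rate in kbps
pct_of_sync() {
    echo $(( $2 * 100 / $1 ))
}

# Rate left after PTM 64b/65b encoding (assumption: VDSL2 with PTM).
# $1 = sync rate in kbps
ptm_payload_rate() {
    echo $(( $1 * 64 / 65 ))
}

echo "download: shaper at $(pct_of_sync 177277 135000)% of sync, PTM ceiling $(ptm_payload_rate 177277) kbps"
echo "upload:   shaper at $(pct_of_sync 46655 35000)% of sync, PTM ceiling $(ptm_payload_rate 46655) kbps"
```

With the values from this post, both shaper rates come out at roughly three quarters of the sync rate.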

In any case, with these settings `tc qdisc show` now shows:

qdisc cake 805d: dev pppoe-wan root refcnt 2 bandwidth 35Mbit besteffort triple-isolate nat nowash no-ack-filter split-gso rtt 100ms ptm overhead 34

qdisc cake 805e: dev ifb4pppoe-wan root refcnt 2 bandwidth 135Mbit besteffort triple-isolate nat wash ingress no-ack-filter split-gso rtt 100ms ptm overhead 34
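
The `ptm overhead 34` in that output can be cross-checked by hand. The sketch below adds up the per-packet bytes as I understand the tc-cake man page describes them for the `pppoe-ptm` and `ether-vlan` keywords; the variable names are my own, and the breakdown is an assumption to verify against the man page, not a measurement of this line.

```shell
#!/bin/sh
# Sketch only: per-packet overhead behind "pppoe-ptm ether-vlan".

ppp=2          # PPP header
pppoe=6        # PPPoE header
dst_mac=6      # Ethernet destination MAC
src_mac=6      # Ethernet source MAC
ethertype=2    # Ethernet ethertype
fcs=4          # Ethernet frame check sequence
ptm_sof=1      # PTM start of frame
ptm_eof=1      # PTM end of frame
ptm_crc=2      # PTM TC-CRC
vlan=4         # IEEE 802.1Q VLAN tag (ether-vlan)

pppoe_ptm=$(( ppp + pppoe + dst_mac + src_mac + ethertype + fcs + ptm_sof + ptm_eof + ptm_crc ))
total=$(( pppoe_ptm + vlan ))

echo "pppoe-ptm: ${pppoe_ptm} bytes, with ether-vlan: ${total} bytes"
```

The total matches both the `overhead 34` reported by `tc` and the manual overhead value that gave the best FLENT results.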

In addition, I made the following changes in /etc/sysctl.conf, which apparently improved the situation somewhat, but here too I can't rule out that the FLENT results only got better by chance:

# disable packet steering

net.core.rps_sock_flow_entries = 0

# increase per-CPU receive queue length

net.core.netdev_max_backlog = 2000

# increase packet processing budget per interrupt

net.core.netdev_budget = 1000

# maximum socket receive buffer size

net.core.rmem_max = 1048576

# default socket receive buffer size

net.core.rmem_default = 262144

# disable timer migration

kernel.timer_migration = 0

And in /etc/rc.local:

# set CPU frequency scaling governor to performance

find /sys/devices/system/cpu/cpufreq -name scaling_governor -exec awk '{print "performance" > "{}" }' {} \;

# disable NIC offload features on eth1

ethtool -K eth1 gso off tso off gro off rx-gro-list off rx off tx off rx-vlan-offload off tx-vlan-offload off

# disable energy efficient ethernet

ethtool --set-eee eth0 eee off

ethtool --set-eee eth1 eee off

ethtool --set-eee eth2 eee off

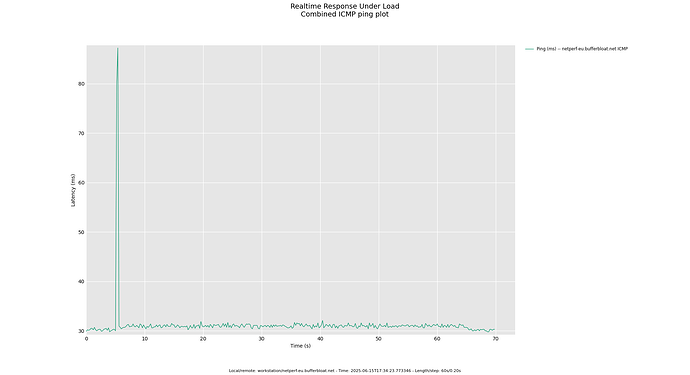

Damit wird bei FLENT (immer direkt auf der OpenWrt Maschine ausgeführt) aus:

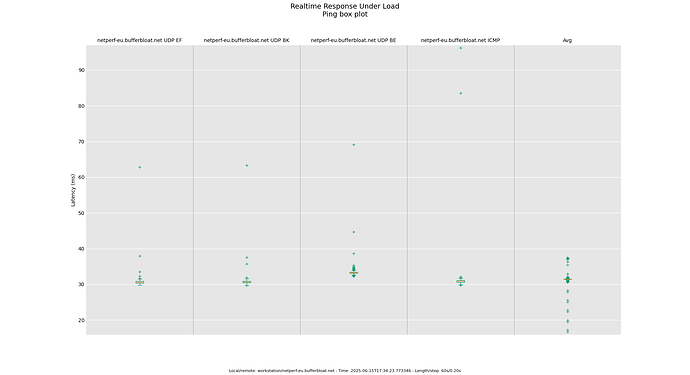

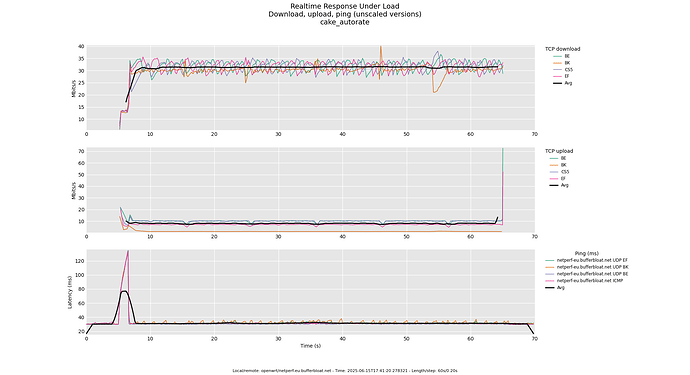

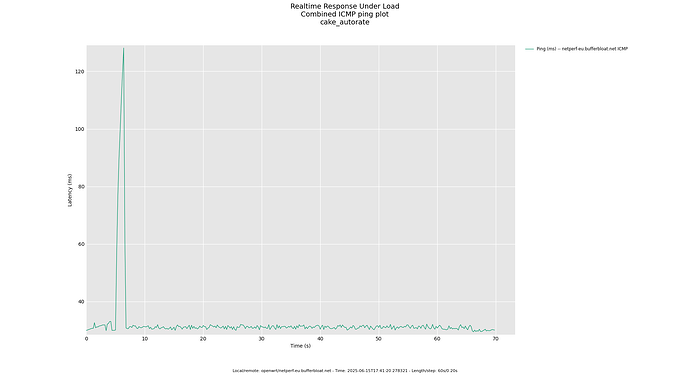

Nun in den allermeisten Fällen das hier:

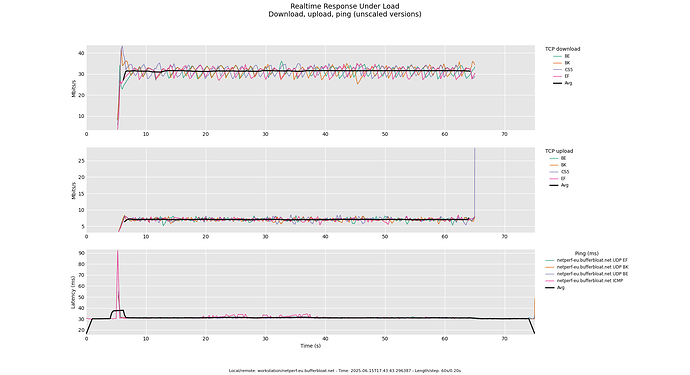

Aber leider auch immer wieder:

Danke schon einmal fürs Lesen!