Hey everyone,

Let me start by saying that I am a complete rookie when it comes to networking. It's black magic to me.

I will try to describe my problem in as much detail as possible and provide all the necessary information.

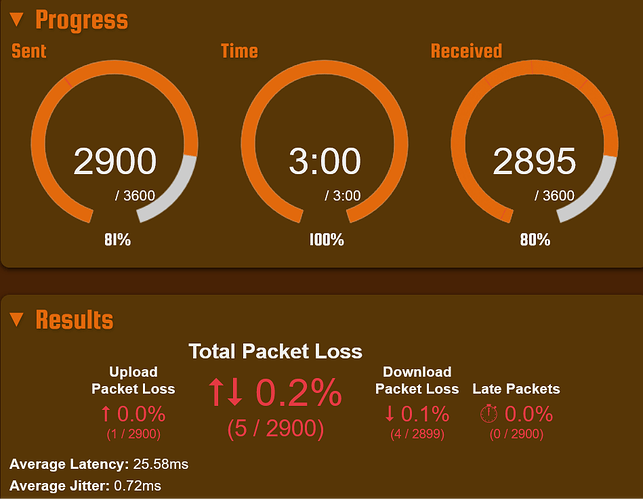

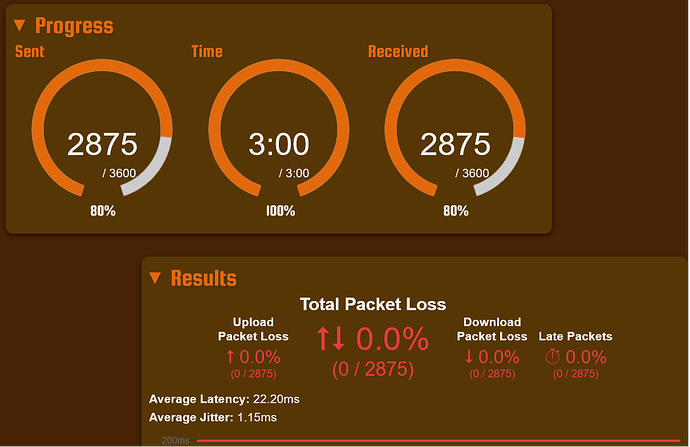

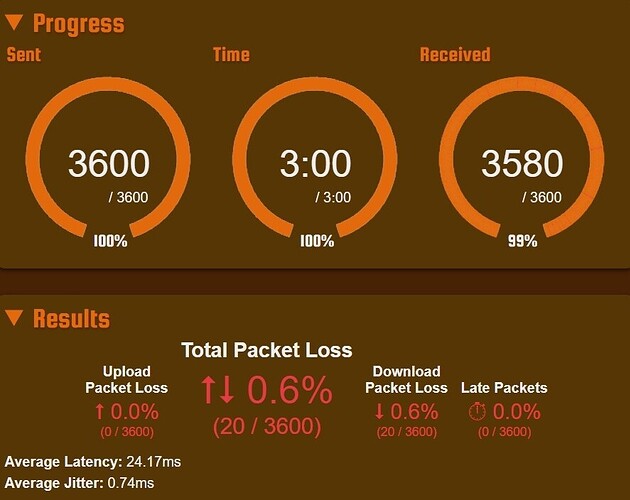

The problem is that despite enabling and configuring SQM, bufferbloat still occurs, most often on the download side.

This is easiest to see in the Waveform tests, where some of the blue dots sit much further out than the rest and show higher ping and jitter than the upload and unloaded results.

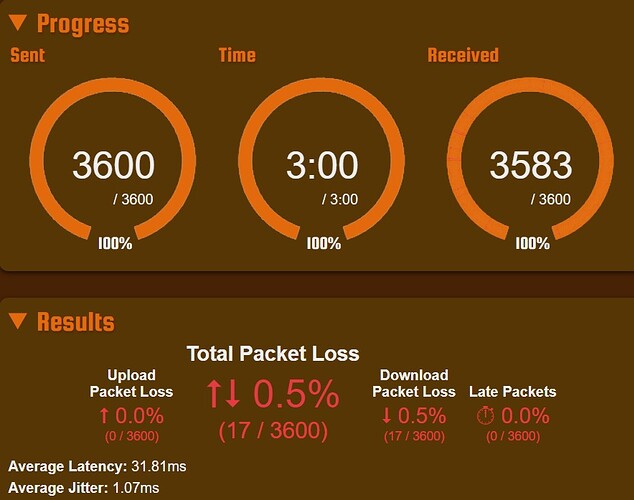

The second thing is packet loss.

The numbers are not huge, but the loss ranges from 1% to 5%, and sometimes reaches 10%.

According to the net graphs in games like Battlefield, Call of Duty and several others, the lost packets are inbound packets.

There is no problem with outbound packets; that loss is always 0%.

These problems occur even when my PC is the only device using the Internet.

If I turned on Wi-Fi on the TV and mobile phone, the problem would probably be worse.

Now, a few words about my connection.

It is a 100/100 Mbps connection over copper cable.

The cable comes in from the box outside, enters my apartment, and plugs directly into the router. From the router, a CAT6 Ethernet cable runs to the PC, which has a 1000/1000 Realtek network card.

My router model is: Netgear R6220

My ISP uses PPPoE and provides IPv4 only.

Moving on,

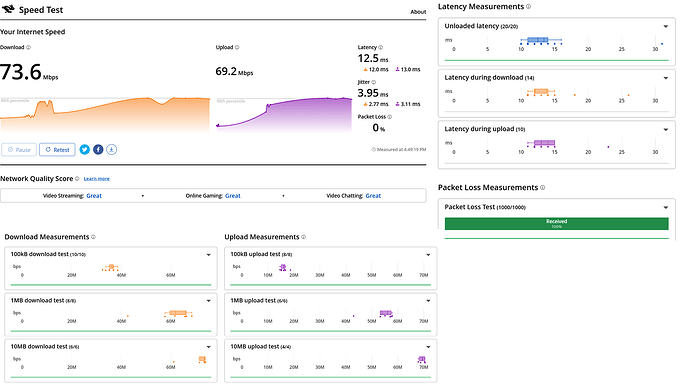

My connection test WITHOUT SQM.

Waveform and DSLReports:

https://imgur.com/SzZlZ1r

My connection test WITH SQM:

Waveform and DSLReports:

https://imgur.com/iK8jtXf

My SQM settings.

(For guidance, I used the wiki, the FAQ, and other posts available here on the forum.)

I changed my LAN IP from 192.168.1.1 to 192.168.2.1 because I thought there might be an IP address conflict between LAN and WAN.

I also moved the Ethernet cable from LAN 1 to LAN 2, in case the LAN 1 port was worn out.

Neither change had any effect.

Maybe it's the router's fault? I have heard that MediaTek chips are not the best.

I also have a TP-Link Archer C7 V2 as a backup and a CAT7 cable with gold-plated plugs.

Or maybe the ISP is to blame?

I'm at a dead end.

I will be grateful for any help and suggestions.

Sorry for using Imgur; as a new member, I'm limited to one embedded media item.

I hope I have described my problem clearly and provided the necessary information. If more is needed, I will of course add it.

Best regards, have a nice day.