opkg update

opkg install htop

htop

*F2 for settings

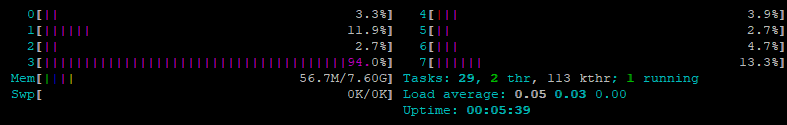

*Check "Detailed CPU time". Soft IRQ is shown in magenta (the pinkish color).

*F10 when done

*Run the iperf3 command below with 100 connections

*from another SSH session inside OpenWRT and keep an eye on htop.

*Does one CPU core max out?

iperf3 -P 100 -c queen.cisp.co.za

*Now run the same command from a host behind OpenWRT and monitor htop on OpenWRT. Does any CPU core max out now?

*Does anyone use x86 or x64 and have the same issue?

*If someone behind OpenWRT uses speedtest.net or iperf3 -P 100, does one router CPU core max out with 95% Soft IRQ?

*If the same iperf3 -P 100 test is run from OpenWRT itself, the CPUs do not sweat.

*What could be the issue here? Is iptables involved?

*How do I configure OpenWRT so the CPUs will not sweat when clients use the router and open a few hundred connections, or even run a simple speedtest.net test?

####### More background info..

I'm running 20.02.2 x64, self-compiled to add drivers for a few PCIe Realtek cards (these drivers are not involved in the VirtualBox tests, though) and for mdadm RAID1 booting, which should not influence CPU usage under network load. Can SELinux influence networking speed?

It runs in VirtualBox with virtio for all network interfaces, to validate, pre-create configs and test before replacing pfSense on real hardware later.

I noticed a weird thing.

iperf3 from or to the router gets about 10 Gbps, and all 8 cores of OpenWRT are almost idle, only seldom reaching about 5%.

This I thought was superb, as pfSense can't even handle virtio drivers properly: it gets sub-100 Mbps speeds with the same setup.

Then I tested via a client VirtualBox guest that uses the DHCP of the OpenWRT VirtualBox guest. The client goes to speedtest.net, and one core on OpenWRT gets maxed out on every download test. During the upload part of the speedtest, the cores are around 10-15%.

This seemed extremely abnormal, as I knew iperf3 got 10 Gbps and the CPU cores did not sweat.

The OpenWRT VirtualBox guest has 8 cores of a Ryzen CPU, and the outside internet connection is 100 Mbps. The VirtualBox guest behind OpenWRT gets 60 Mbps download, but the host (the real connection speed) gets around 91 Mbps, so OpenWRT as a router with 1 core maxed out does block off some download speed. Upload speed is not affected, though: it is around 94 Mbps on the host and on the guest behind OpenWRT (and the core does not max out).

This seemed worse than the pfSense VirtualBox guest (with e1000 drivers, not virtio), which had no such issue: it did not reach 1 Gbps with iperf3, but the 100 Mbit internet tests were fine, with no cores overloading.

What did not affect the issue, whether enabled or disabled:

- Packet Steering

- Software flow offloading

- irqbalance (with blank config just enabled/disabled)

- installing/uninstalling mwan3

- setting firewall config file back to default
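One way to double-check that the Packet Steering toggle actually changes anything is to look at the RPS masks in sysfs before and after flipping it. This is only a sketch: `show_rps` and `rps_mask` are helper names made up here, and it assumes the interfaces expose the usual `queues/rx-*/rps_cpus` files and that the machine has fewer than 63 cores.

```sh
#!/bin/sh
# Hypothetical helpers, not part of OpenWRT.

# Print the current RPS CPU mask of every receive queue.
show_rps() {
    for f in /sys/class/net/*/queues/rx-*/rps_cpus; do
        [ -r "$f" ] || continue          # skip if the glob matched nothing
        printf '%s %s\n' "$f" "$(cat "$f")"
    done
}

# Print a hex bitmask with the lowest N bits set (N = number of cores),
# which is the format rps_cpus expects, e.g. 8 cores -> ff.
rps_mask() {
    printf '%x\n' $(( (1 << $1) - 1 ))
}
```

If `show_rps` prints all zeros with Packet Steering enabled, the setting is probably not being applied to that interface. A mask could then be written by hand for a test, for example `rps_mask 8 > /sys/class/net/eth0/queues/rx-0/rps_cpus` (`eth0` is an assumed interface name). Whether this helps with this virtio setup is untested.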

So I started to suspect that maybe it is not the bandwidth but the number of connections.

So I tested iperf3 -P 100 to an internet host from OpenWRT itself, but it worked fine, with no cores sweating.

When I ran the same test from behind the router (a VirtualBox guest using OpenWRT as its router), OpenWRT had one CPU core maxed out again with Soft IRQ, the magenta color in htop.
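To put a number on the connection-count suspicion, the conntrack table size can be read while a test runs. A minimal sketch, assuming the standard netfilter sysctl files `nf_conntrack_count` and `nf_conntrack_max` are present; `conntrack_usage` is a helper name made up here, and the optional directory argument exists only to make it easy to try against sample files.

```sh
#!/bin/sh
# Hypothetical helper: print tracked connections as "count/max (percent%)".
# $1: directory holding the two files (defaults to /proc/sys/net/netfilter).
conntrack_usage() {
    dir="${1:-/proc/sys/net/netfilter}"
    count=$(cat "$dir/nf_conntrack_count")
    max=$(cat "$dir/nf_conntrack_max")
    echo "$count/$max ($(( count * 100 / max ))%)"
}
```

Running `conntrack_usage` on the router once while idle and once during the speedtest shows how many connections the test really opens, and whether the table is anywhere near its limit.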
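htop only shows that the time is spent in Soft IRQ, not which kind. The per-CPU counters in `/proc/softirqs` can narrow that down, for example whether `NET_RX` grows on just one core during the test. A rough sketch; `softirq_per_cpu` is a made-up helper name, and the second argument is only there so it can be pointed at a saved copy of the file.

```sh
#!/bin/sh
# Hypothetical helper: print one "cpuN count" line per core for one softirq class.
# $1: softirq class as named in /proc/softirqs (e.g. NET_RX, NET_TX)
# $2: file to read (defaults to /proc/softirqs)
softirq_per_cpu() {
    awk -v cls="$1:" '
        # Field 1 is the class label with a trailing colon; fields 2..NF are per-CPU counts.
        $1 == cls { for (i = 2; i <= NF; i++) printf "cpu%d %s\n", i - 2, $i }
    ' "${2:-/proc/softirqs}"
}
```

Calling `softirq_per_cpu NET_RX` before and after a test run and comparing the two outputs shows which cores handled the receive work. If nearly all of the increase lands on one core, that would match the single maxed-out core seen in htop.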

I'm out of ideas on what to try next. Any ideas are welcome.