I haven’t, but I can give it a try. Meanwhile I changed country, apartment and internet connection so the previous tests won’t be comparable, but I can try a couple of them with different settings to see if AQL does something when WED is enabled.

Thanks for following up, nice tests. I'm finding it hard to draw conclusions from the WED + AQL adjustments: aql_txq_limit 1500 looks good, but then again so does 15000, and that's an order of magnitude difference. They all have some issues, but that is expected on wifi.

The effect of adjusting the limits seems more pronounced with WED off, though. Seeing latency ranging from 20-100ms reminds me of why wifi gaming = bad.

Great observations and feedback, thanks! I, too, am finding it difficult to state with any level of confidence that the AQL knobs have an impact with WED enabled. What is interesting to me is that there seems to be some impact, though. I would have expected a more binary conclusion here.

(Thinking out loud here...) If WED is offloading (fast-pathing?) packets, then it seems AQL should have no effect, as that "path" is being bypassed, right? This is where my knowledge of how AQL and WED are related starts breaking apart. I would really need someone who knows the wireless stack and WED to answer this definitively. Maybe @nbd or @blocktrron could set the record straight here?

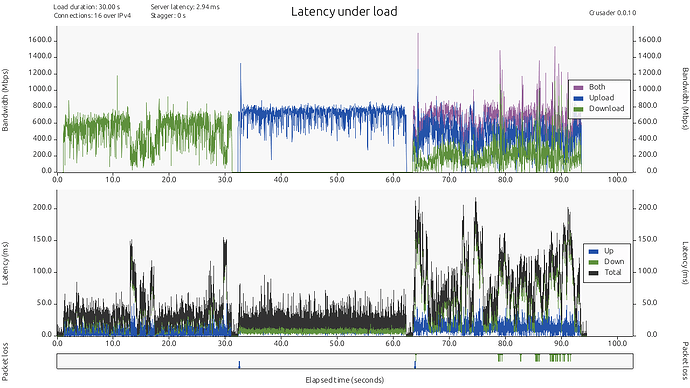

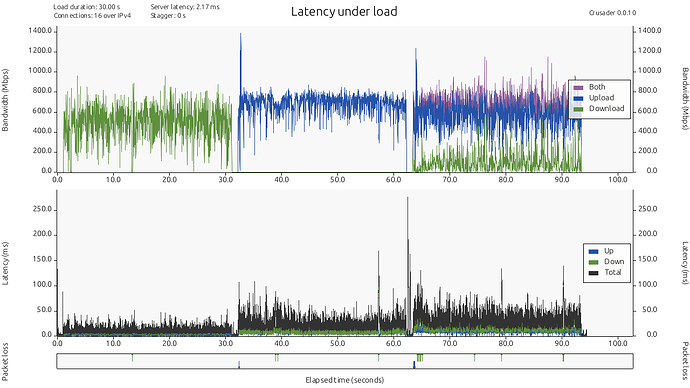

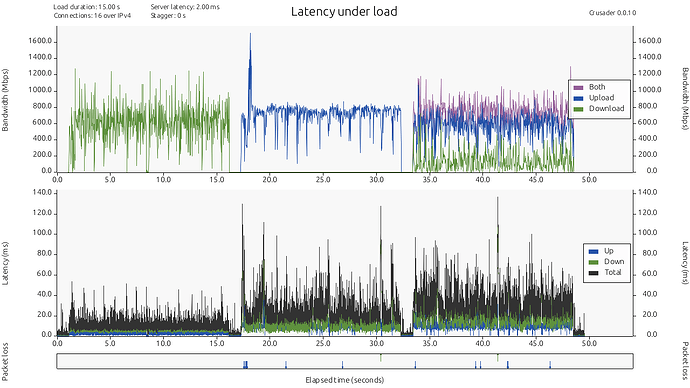

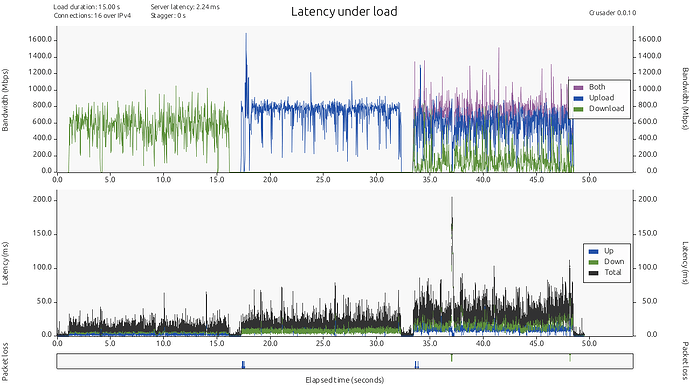

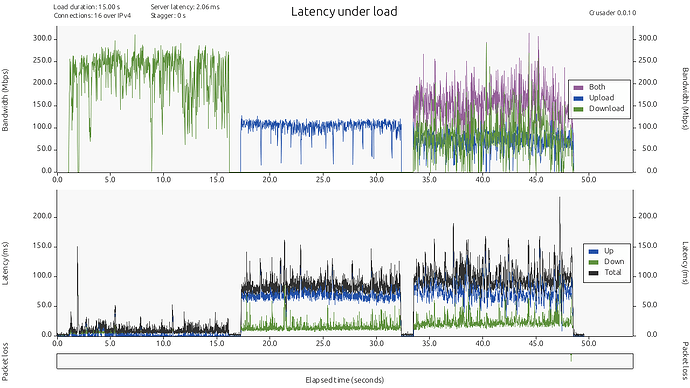

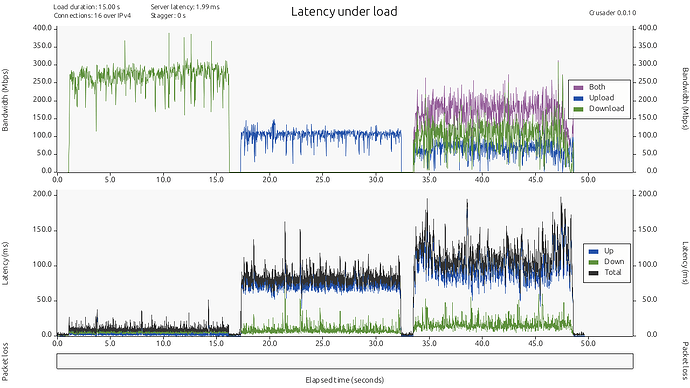

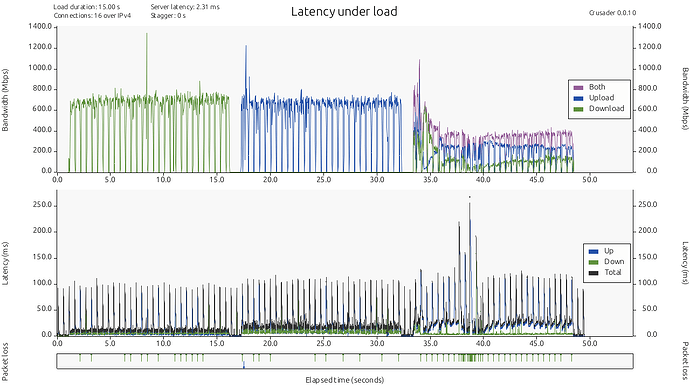

I just re-ran a test at aql_txq_limit = 1500 with WED enabled and then again with WED disabled:

aql_txq_limit 1500 + WED Enabled:

aql_txq_limit 1500 + WED Disabled:

There is undoubtedly much tighter control on latency with WED disabled: far fewer excursions from the baseline, though still more excursions into higher latency than I would prefer to see.

Well, it takes two to tango. For the no-load interim periods I see excellent low delay, and for the download test something well below 100 ms, more around 50 ms (which is not great for gaming, but a whole lot less terrible). The tests involving the upload direction (upload and bidirectional) show clearly worse delays, implying that the wifi of that device could use airtime fairness as well. (I see the same on my older AP with AQL versus a recent MacBook: the Apple wifi simply does considerably worse, latency-wise, than my router's roughly 9-year-old ath10k.)

I think that is actually how it should be done: testing under realistic conditions.

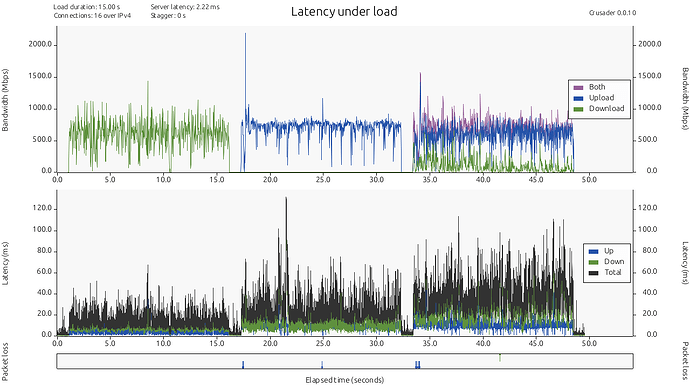

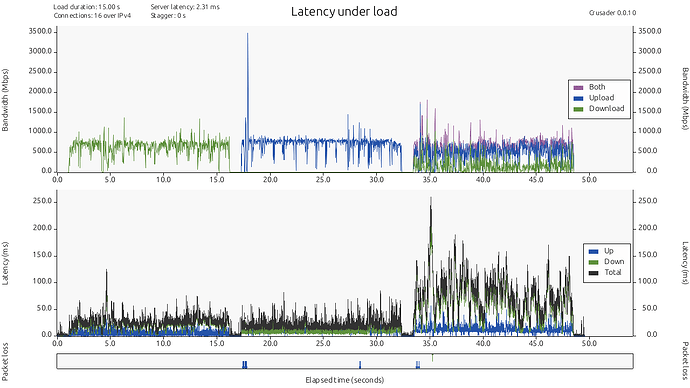

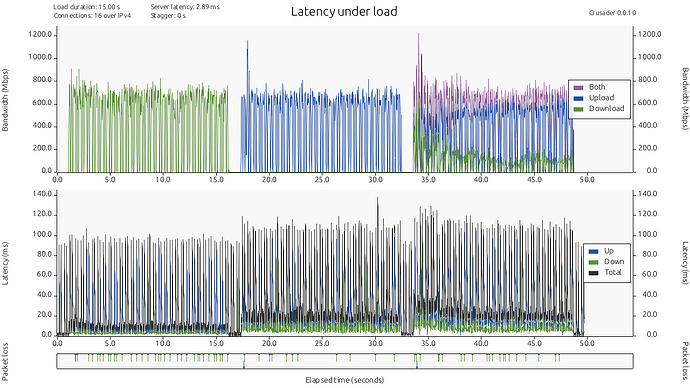

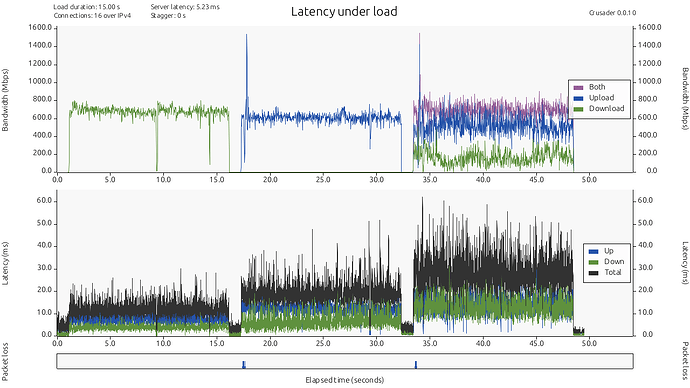

New data to chew on. I ran multiple crusader tests with varying aql_txq_limit values with WED disabled. Here are the results:

500 - WED Disabled:

1000 - WED Disabled:

1500 - WED Disabled:

2000 - WED Disabled:

2500 - WED Disabled:

5000 - WED Disabled:

15000 - WED Disabled:

It still looks to me that out of these tests, 1500 seems to be the sweet spot in terms of favoring low latency. However, for those that need higher simultaneous bandwidth, 15000 is likely the ideal target.

Prefer low latency:

aql_txq_limit=1500

for ac in 0 1 2 3; do echo $ac $aql_txq_limit $aql_txq_limit > /sys/kernel/debug/ieee80211/phy0/aql_txq_limit; done

for ac in 0 1 2 3; do echo $ac $aql_txq_limit $aql_txq_limit > /sys/kernel/debug/ieee80211/phy1/aql_txq_limit; done

cat /sys/kernel/debug/ieee80211/phy0/aql_txq_limit /sys/kernel/debug/ieee80211/phy1/aql_txq_limit

Prefer high simultaneous bandwidth:

aql_txq_limit=15000

for ac in 0 1 2 3; do echo $ac $aql_txq_limit $aql_txq_limit > /sys/kernel/debug/ieee80211/phy0/aql_txq_limit; done

for ac in 0 1 2 3; do echo $ac $aql_txq_limit $aql_txq_limit > /sys/kernel/debug/ieee80211/phy1/aql_txq_limit; done

cat /sys/kernel/debug/ieee80211/phy0/aql_txq_limit /sys/kernel/debug/ieee80211/phy1/aql_txq_limit

Prefer a balance between higher bandwidth and reasonable latency:

aql_txq_limit=5000

for ac in 0 1 2 3; do echo $ac $aql_txq_limit $aql_txq_limit > /sys/kernel/debug/ieee80211/phy0/aql_txq_limit; done

for ac in 0 1 2 3; do echo $ac $aql_txq_limit $aql_txq_limit > /sys/kernel/debug/ieee80211/phy1/aql_txq_limit; done

cat /sys/kernel/debug/ieee80211/phy0/aql_txq_limit /sys/kernel/debug/ieee80211/phy1/aql_txq_limit
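The three presets above repeat the same loop once per phy. As a minimal sketch (not taken from the thread), the loop can be wrapped in one function that covers every phy exposing the knob. The function name `set_aql_limit` and its optional second argument for the debugfs root are assumptions made for illustration; the path and the `<ac> <low> <high>` write format are the ones used in the commands above.

```shell
#!/bin/sh
# Sketch: apply one AQL limit to access categories 0-3 on every phy.
# The second argument is a hypothetical override so the function can be
# tried against a scratch directory; it defaults to the debugfs path
# used in the commands above.

set_aql_limit() {
    limit="$1"
    root="${2:-/sys/kernel/debug/ieee80211}"

    for f in "$root"/phy*/aql_txq_limit; do
        # Skip the unexpanded glob when no phy exposes the knob.
        [ -e "$f" ] || continue
        for ac in 0 1 2 3; do
            # Write format: <ac> <limit_low> <limit_high>
            echo "$ac $limit $limit" > "$f"
        done
    done
}
```

Run as root, `set_aql_limit 1500` would correspond to the low-latency preset and `set_aql_limit 15000` to the bandwidth preset. Since the setting lives in debugfs, it would presumably need to be reapplied after a reboot.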

This is your key test. It shows that for latency-sensitive tasks over Wi-Fi (e.g. VoIP or gaming), disabling WED is a must. With WED off, the aql_txq_limit 1500 results look fantastic; this should be the default value.

If you aren't using those things then enabling WED to free up CPU for other tasks remains a viable choice.

edit: I updated the hardware acceleration section of our wiki to reflect the data from your results.

@phinn I put together the script below to provide an easy way to tweak the aql_txq_limit.

Basic usage:

(First time) Set the file as executable: # chmod +x change-aql.sh

Call the script with one of latency, balanced, bandwidth, or a valid integer value. This will tune the AQL Tx queue limit to the preference (or integer value) specified.

Example: Set 'balanced' Preference

# ./change-aql.sh balanced

>> Device setting: /sys/kernel/debug/ieee80211/phy1/aql_txq_limit <<

Before:

AC AQL limit low AQL limit high

VO 1500 1500

VI 1500 1500

BE 1500 1500

BK 1500 1500

BC/MC 50000

After:

AC AQL limit low AQL limit high

VO 5000 5000

VI 5000 5000

BE 5000 5000

BK 5000 5000

BC/MC 50000

>> Device setting: /sys/kernel/debug/ieee80211/phy0/aql_txq_limit <<

Before:

AC AQL limit low AQL limit high

VO 1500 1500

VI 1500 1500

BE 1500 1500

BK 1500 1500

BC/MC 50000

After:

AC AQL limit low AQL limit high

VO 5000 5000

VI 5000 5000

BE 5000 5000

BK 5000 5000

BC/MC 50000

Example: Set Integer Value

# ./change-aql.sh 1750

Info: Valid integer value provided.

>> Device setting: /sys/kernel/debug/ieee80211/phy1/aql_txq_limit <<

Before:

AC AQL limit low AQL limit high

VO 5000 5000

VI 5000 5000

BE 5000 5000

BK 5000 5000

BC/MC 50000

After:

AC AQL limit low AQL limit high

VO 1750 1750

VI 1750 1750

BE 1750 1750

BK 1750 1750

BC/MC 50000

>> Device setting: /sys/kernel/debug/ieee80211/phy0/aql_txq_limit <<

Before:

AC AQL limit low AQL limit high

VO 5000 5000

VI 5000 5000

BE 5000 5000

BK 5000 5000

BC/MC 50000

After:

AC AQL limit low AQL limit high

VO 1750 1750

VI 1750 1750

BE 1750 1750

BK 1750 1750

BC/MC 50000
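The script body itself is not reproduced in this thread. As a rough sketch of how the argument handling described above could work (this is not the posted script), a single function can map a preference name or an integer to a limit. The function name `resolve_aql_limit` and the error message are hypothetical; the three preset values are the ones from the test results earlier in the thread.

```shell
#!/bin/sh
# Sketch only: not the change-aql.sh posted in the thread.
# Prints the AQL limit for a preference name or a plain integer,
# and returns non-zero for anything else.

resolve_aql_limit() {
    case "$1" in
        latency)   echo 1500 ;;
        balanced)  echo 5000 ;;
        bandwidth) echo 15000 ;;
        # Empty input, or anything containing a non-digit, is rejected.
        ''|*[!0-9]*)
            echo "Error: expected latency, balanced, bandwidth, or an integer" >&2
            return 1
            ;;
        *) echo "$1" ;;
    esac
}
```

For example, `resolve_aql_limit balanced` prints 5000 and `resolve_aql_limit 1750` prints 1750, matching the two example runs shown above.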

Awesome script, thanks for posting.

I was looking over the boot log on our wiki, where you can see the mt798x-wmac binary compile dates. The firmware in OpenWrt definitely seems way out of date: it is dated Oct 2022, while GL.iNet's firmware is from Aug 2023, 10 months newer. That came from the OEM dmesg log I posted back when I got my router last year, so theirs may be even more recent now.

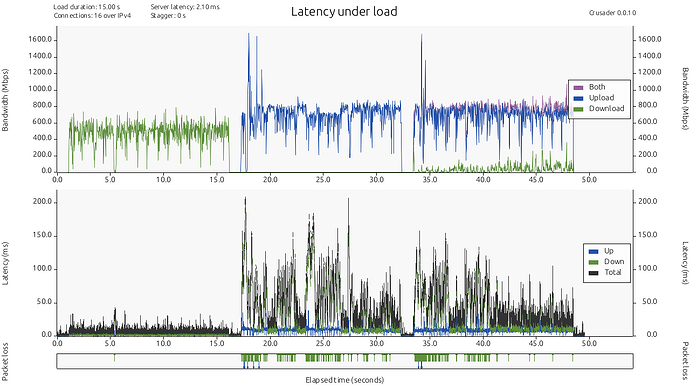

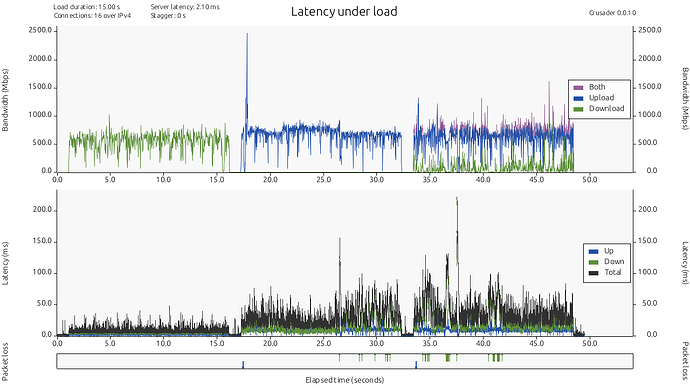

I just realized I had already run some tests with and without WED in my previous location.

1500 + WED:

1500 w/o WED:

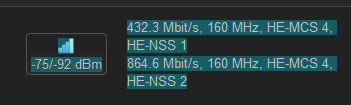

Besides, at the moment I can't restart the router to run a test with WED enabled, but in my new location (where the computer sits less than 2 meters away from the router) the graph is somewhat odd:

1500 w/o WED:

Ooof! Yeah, that seems to indicate a substantial amount of packet loss going on. Do you know what percentage of packet loss you're seeing from a ping command to the same host?

That's the interesting part, the packet loss is 0.0%. This is crusader serving from the GL-MT6000 itself, with my laptop as a client.

And yet crusader actually reports packet loss in the bottom panel?

Yep, whereas running ping (with a packet count of 100, for example) against the same internal target or an external target shows no packet loss, although there are huge latency spikes:

--- 192.168.50.100 ping statistics ---

100 packets transmitted, 100 packets received, 0.0% packet loss

round-trip min/avg/max/stddev = 2.037/36.630/1166.221/120.552 ms

--- 8.8.8.8 ping statistics ---

100 packets transmitted, 100 packets received, 0.0% packet loss

round-trip min/avg/max/stddev = 6.798/37.407/996.029/101.021 ms

I would guess these ICMP probes are treated as different flows from the crusader traffic that reported drops, so all of this seems sane and we are simply looking at two different packet loss rates here...

I guess so too, and there's something weird that I can't quite pinpoint. These are two tests I just made, 5 min from each other, same settings, no other traffic in the network. Pretty difficult to test anything in these conditions.

95 rather periodic latency spikes in 48 seconds, or roughly 95/48 ≈ 1.98, so about 2 Hz...

That should be possible to find, because that 2 Hz signal is rather conserved...

But whether that is external RF noise or some periodic timer in one of the devices (powersave or similar) is maximally unclear... or some cyclic channel scanning by either the AP or the client that results in these stalls...
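To check whether the roughly 2 Hz pattern persists across runs, the spike count can be automated instead of read off a graph. The following is only a sketch: it assumes latency samples in the `time=<ms>` format that ping prints per reply, and the function name `spike_rate`, the threshold, and the duration argument are all illustrative choices.

```shell
#!/bin/sh
# Sketch: count RTT samples above a threshold in ping-style output on stdin
# and report the spike rate over a given capture duration in seconds.

spike_rate() {
    threshold_ms="$1"
    duration_s="$2"
    awk -v thr="$threshold_ms" -v dur="$duration_s" '
        {
            for (i = 1; i <= NF; i++) {
                if ($i ~ /^time=/) {
                    # Strip the leading "time=" and force a numeric value.
                    rtt = substr($i, 6) + 0
                    if (rtt > thr) spikes++
                }
            }
        }
        END { printf "%d spikes, %.2f Hz\n", spikes, spikes / dur }
    '
}
```

Fed with the figures quoted above (95 spikes over 48 seconds), it would report about 1.98 Hz. Note that the probes have to be sent well above 2 Hz to resolve a 2 Hz pattern, so the default one-per-second ping interval is too slow for this.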

#define IEEE80211_DEFAULT_AIRTIME_WEIGHT 256

/* The per TXQ device queue limit in airtime */

#define IEEE80211_DEFAULT_AQL_TXQ_LIMIT_L 5000

#define IEEE80211_DEFAULT_AQL_TXQ_LIMIT_H 12000

#define IEEE80211_DEFAULT_AQL_TXQ_LIMIT_BC 50000

/* The per interface airtime threshold to switch to lower queue limit */

#define IEEE80211_AQL_THRESHOLD 24000

I think all of these are related... do you have some suggestion?

I think that a good choice is:

#define IEEE80211_DEFAULT_AIRTIME_WEIGHT 256

/* The per TXQ device queue limit in airtime */

#define IEEE80211_DEFAULT_AQL_TXQ_LIMIT_L 1500

#define IEEE80211_DEFAULT_AQL_TXQ_LIMIT_H 5000

#define IEEE80211_DEFAULT_AQL_TXQ_LIMIT_BC 50000

/* The per interface airtime threshold to switch to lower queue limit */

#define IEEE80211_AQL_THRESHOLD 8000

Edit: after some tests I can confirm that these settings are good.

These are my results for 100 pings:

Ping statistics for 192.168.181.1:

Packets: Sent = 100, Received = 100,

Lost = 0 (0% loss),

Approximate round trip times in milliseconds:

Minimum = 0ms, Maximum = 516ms, Average = 22ms

And this is one of the iperf3 runs:

Connecting to host 192.168.181.1, port 5201

[ 5] local 192.168.181.230 port 51782 connected to 192.168.181.1 port 5201

[ ID] Interval Transfer Bitrate

[ 5] 0.00-1.00 sec 59.9 MBytes 501 Mbits/sec

[ 5] 1.00-2.01 sec 61.1 MBytes 509 Mbits/sec

[ 5] 2.01-3.01 sec 58.4 MBytes 491 Mbits/sec

[ 5] 3.01-4.00 sec 59.1 MBytes 497 Mbits/sec

[ 5] 4.00-5.00 sec 54.8 MBytes 461 Mbits/sec

[ 5] 5.00-6.00 sec 59.4 MBytes 499 Mbits/sec

[ 5] 6.00-7.00 sec 59.4 MBytes 498 Mbits/sec

[ 5] 7.00-8.02 sec 60.8 MBytes 502 Mbits/sec

[ 5] 8.02-9.01 sec 64.1 MBytes 540 Mbits/sec

[ 5] 9.01-10.01 sec 65.4 MBytes 550 Mbits/sec

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate

[ 5] 0.00-10.01 sec 602 MBytes 505 Mbits/sec sender

[ 5] 0.00-10.03 sec 602 MBytes 503 Mbits/sec receiver

Those settings look okay for now. Definitely an improvement over the previous defaults.

I typically set the AQL low and high limit to be equal which effectively bypasses the AQL threshold altogether, thus forcing all STAs to the AQL high limit even if there are not 2+ STAs competing for airtime. (Ref: How OpenWrt Vanquishes Bufferbloat - #20 by tohojo)

With the settings as you’ve defined, an STA will be operating with a 3x higher AQL limit at least some of the time as compared to what I’ve shown in my testing where 1500 seems to be a pretty ideal target all the time.
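To make the interaction between the low limit, the high limit, and the threshold concrete, here is a simplified model of the admission check. It is based on a reading of the mac80211 logic, not copied from the kernel, so treat it as an assumption: the function name and argument order are made up for illustration, and all values are airtime, believed to be in microseconds.

```shell
#!/bin/sh
# Simplified model (an assumption, not kernel code) of whether a station
# may queue more airtime to the device for one access category.
# Returns 0 if more may be queued, 1 otherwise.

aql_may_dequeue() {
    sta_pending="$1"    # airtime already queued for this station/AC
    total_pending="$2"  # airtime queued across the whole interface
    limit_low="$3"
    limit_high="$4"
    threshold="$5"

    # Below the low limit a station may always queue more.
    [ "$sta_pending" -lt "$limit_low" ] && return 0

    # Above it, more is allowed only while the interface as a whole is
    # under the threshold and the station is still under the high limit.
    [ "$total_pending" -lt "$threshold" ] &&
        [ "$sta_pending" -lt "$limit_high" ] && return 0

    return 1
}
```

With low=1500, high=5000 and threshold=8000, a station holding 3000 of pending airtime is admitted while the interface total is 4000 but refused once the total reaches 9000. With low and high both set to 1500, the same station is refused in both cases, which is why equal limits make the threshold irrelevant.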

This is a good start though and should allow for a wider test base as you get this into your updated build! Once more users can test these settings “in the field”, we can see what knobs to twist next.

On a totally overcrowded 2.4 GHz band, where you barely get 1/10 of the nominal bandwidth:

rtt min/avg/max/mdev = 1.622/7.813/160.973/22.193 ms

The numbers you showed are on the extreme end of misbehaving multi-PDU aggregation.