Exactly. The CPU requirements at 10Gbps are significant. Something computationally cheap enough to run with routing and firewall on say a Celeron, particularly if it could be multithreaded, would be a lot better than a 1000 element pfifo.

So how dead IS mips, anyway? There's a new ebpf jit for it now: https://lwn.net/Articles/874683/

I have a spare R7800 not (yet) actively in use and a fiber connection of 500/500mbps. I’ve never done anything with flent, but if you guys need some testing on speeds above 100mbps and can “talk me through it”, just let me know.

Start by installing flent from the flent.org site or via apt/yum/etc.

My desired topology is client - router - server - nothing else, so we can merely verify correctness. Do you have a decent box to use as a server?

OK, here is the first test:

flent --step-size=.05 --socket-stats --te=upload_streams=4 tcp_nup -H netperf-eu.bufferbloat.net

flent --step-size=.05 --socket-stats --te=download_streams=4 tcp_ndown -H netperf-eu.bufferbloat.net
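If you end up repeating these runs with different stream counts, a small helper can assemble the command lines for you. This is only a sketch: `build_flent_cmd` is a hypothetical name, and it uses nothing beyond the flags already shown in this thread (`--step-size`, `--socket-stats`, `--te=`, `-l`, `-H`).

```python
import shlex
from typing import List, Optional


def build_flent_cmd(test: str, streams: int, host: str,
                    length: Optional[int] = None,
                    socket_stats: bool = True) -> List[str]:
    """Assemble a flent argv list for the tcp_nup / tcp_ndown tests."""
    # Each test takes its stream count through a different test parameter.
    stream_param = {"tcp_nup": "upload_streams",
                    "tcp_ndown": "download_streams"}
    if test not in stream_param:
        raise ValueError(f"unsupported test: {test}")

    cmd = ["flent", "--step-size=.05"]
    if socket_stats:
        cmd.append("--socket-stats")
    cmd.append(f"--te={stream_param[test]}={streams}")
    if length is not None:
        # -l sets the test length in seconds.
        cmd += ["-l", str(length)]
    cmd += [test, "-H", host]
    return cmd


if __name__ == "__main__":
    host = "netperf-eu.bufferbloat.net"
    for n in (4, 8, 16):
        # Print the commands; pass the list to subprocess.run() to execute.
        print(shlex.join(build_flent_cmd("tcp_nup", n, host)))
```

Printing rather than executing keeps the sketch safe to run anywhere; the list form can be handed straight to `subprocess.run` on a box that has flent installed.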

ISP speed is ~100Mbps/35Mbps. I have configured 95000 down and 30000 up.

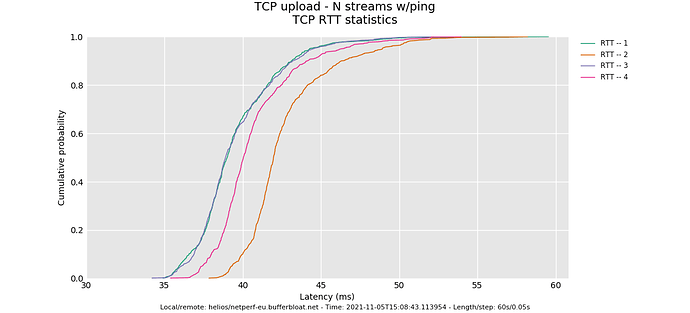

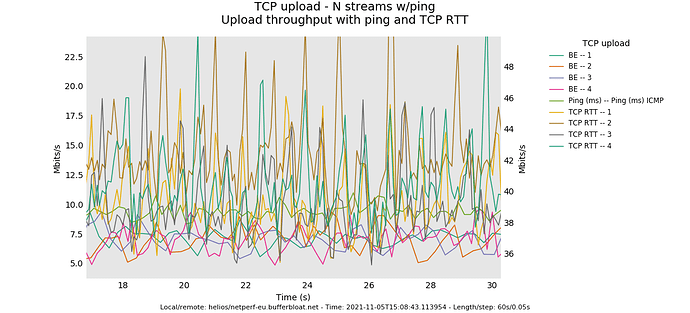

--socket-stats only works on the up. Both the up and down look ok; however, the distribution of tcp RTT looks a bit off. These lines should be pretty much identical.

Three possible causes: 1) your overlarge burst parameter. At 35Mbit you shouldn't need more than 8k!! The last flow has started up late and doesn't quite get back into fairness with the others. 2) They aren't using a DRR++ scheduler, but DRR. 3) Unknown. I always leave a spot in there for the unknown, and without a 35ms rtt path to compare this against, I just go and ask you for more data.
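To put a number on the burst point, here is a rough back-of-the-envelope sketch. It only computes how long a burst takes to drain at the shaped rate; reading "8k" as 8192 bytes and using a 1514-byte full-size Ethernet frame are my assumptions, and the function names are made up for illustration.

```python
def burst_drain_ms(burst_bytes: int, rate_mbit: float) -> float:
    """Milliseconds needed to send burst_bytes at rate_mbit (Mbit/s)."""
    bits = burst_bytes * 8
    return bits / (rate_mbit * 1_000_000) * 1000


def burst_in_frames(burst_bytes: int, frame_bytes: int = 1514) -> float:
    """How many full-size frames fit in the burst (1514 bytes is assumed)."""
    return burst_bytes / frame_bytes


if __name__ == "__main__":
    for rate in (30, 35):
        print(f"8192 bytes at {rate} Mbit/s: "
              f"{burst_drain_ms(8192, rate):.2f} ms, "
              f"{burst_in_frames(8192):.1f} frames")
```

So an 8k burst is roughly two milliseconds of line time at these upload rates; anything much larger lets the shaper release a correspondingly longer unpaced run of packets.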

No need for more down tests at the moment.

A) try 8 and 16 streams on the up.

B) try a vastly reduced htb burst.

A packet capture of a simple 1 stream test also helps me on both up and down, if you are in a position to take one. I don't need a long one (use -l 20) and tcpdump -i the_interface -s 128 -w whatever.cap on either the server or client will suffice.

I am temporarily relieved, it's just that the drop scheduler in the paper was too aggressive above 100mbit... and we haven't tested that on this hardware....

Anyway this more clearly shows two of the flows are "stuck":

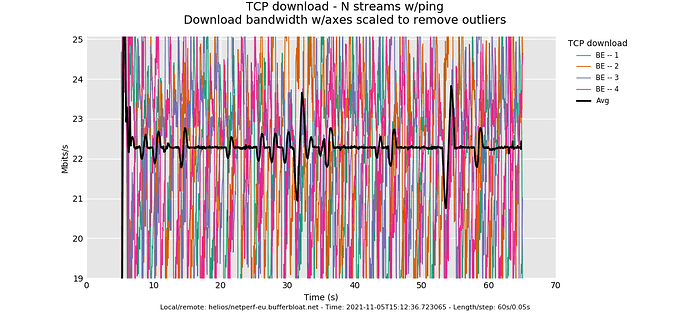

The 100mbit download is just perfect - no synchronization, perfect throughput, 5ms of latency. Btw, we should move this to another bug.

ok, moving this thread over here: Validating fq_codel's correctness. Thx @kong for reassuring me that the nss is at least semi-correct at these speeds.

I am still basically soliciting any ideas for a cerowrt II project here.

I have a self built NAS, it has a 1Gb/s NIC in it. I ran speedtest (Ookla) on it just now:

Speedtest by Ookla

Server: Jonaz B.V. - Amersfoort (id = 10644)

ISP: KPN

Latency: 3.35 ms (0.11 ms jitter)

Download: 502.56 Mbps (data used: 238.9 MB)

Upload: 598.88 Mbps (data used: 1.1 GB)

Packet Loss: 0.0%

Result URL: https://www.speedtest.net/result/c/812e0f44-d77e-4a36-9a1e-35e19707c1c6

It's getting older now, built about 6 years or so with low energy consumption in mind but still fits the bill, quad core, 8GB RAM.

Would this be decent enough as a server? I'm running @ACwifidude 's NSS build (21.02) on my R7800. On the other R7800 I'll be happy to sysupgrade to a build from @KONG with the settings that are used with 100Mbps so I can produce some flent output to see what happens above 100Mbps? Need to try to match the same circumstances of course.

speedtest doesn't do a ping under load. But nice!

I can trust that to 500mbit at least.

but can we move the bug to here:

So what’s your list of things?

- Reproducible builds

- Fully automated and secure updates

- Developing and deploying better measurement (ebpf pping) and bandwidth management tools

- Mwan3 based faster failover using link aware measurements, rather than ping

- A local video-conferencing server (galene.org) suitable for deployment on a local village network

- Apple HomeKit and Thread support

- As much pure, published, QA’d and peer reviewed open source as possible

- Fully deploying The “Cosmic Background Bufferbloat Detector” to end users

- Improvements from the IETF homenet project

- bcp38

- Unicast MDNS

- .local and correct in-addr.arpa addressing

- DNS views

- Babel routing protocol support

- Source Specific routing for ipv6 so that multiple providers can be used

- tailscale mesh

- Improve existing network reporting tools so that congestion and latency are clearly shown to more users.

- Explore potential use of 0/8 and 240/4 for routing

- Attempt and enable use of advanced protocols such as UDP-Lite, QUIC, BBRv2

- Privacy support (independent DNS, VPN, additional encryption/obfuscation services)

- Microservices and local caching

- ICN

starlink support.

openwifi support: https://github.com/open-sdr/openwifi

I think as an architecture for people to use to make NEW hardware... it's pretty dead. The OSS world has a tendency to keep stuff working for decades after it's no longer actively being used for new mfg. Like Linux kernel for SPARC or whatever.

I'd like to see multicast be much more widely used across basically the entire internet. Since it seems unlikely across typical ISPs perhaps that's best implemented across the overlay networks I mentioned. So how about a multicast routing suite and some kind of multicast "visualization" to make it clearer what multicast is routing where?

Some example thoughts:

- suppose you want to watch a movie with your friends? So you just start streaming it off your media server, via multicast ipv6 (perhaps in the ff08:: or ff0e:: scopes). Your friend then simply subscribes to this ipv6 multicast stream and suddenly within a few seconds, the wireguard tunnel between your houses is streaming live the same multicast stream in both homes. A third friend wants in on the party, so they fire up and subscribe... voila watch-party!

- Suppose you want to play realtime games with your friends. You fire up your "multicast audio comms" software (along the lines of mumble) and by subscribing to some ipv6 global scope multicast stream with a randomly generated address (ff08:ab82:1104:3310:8890:fafa:f1e6:0001 for example), magically audio from all your devices is simultaneously routed across an overlay network to all your houses.
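As a side note on the addressing in these examples: in RFC 4291 the fourth hex digit of an IPv6 multicast address is the scope (8 is organization-local, e is global) and the third digit holds the flags, with the T bit set for transient, non-IANA-assigned groups. A minimal sketch of picking a random group under those rules follows; the function names are hypothetical and the choice of a fully random 112-bit group ID is my assumption, not something proposed in the post above.

```python
import ipaddress
import secrets

# Scope nibble values from RFC 4291.
SCOPES = {"organization": 0x8, "global": 0xE}


def random_multicast_group(scope: str = "global") -> ipaddress.IPv6Address:
    """Return a random transient IPv6 multicast address in the given scope."""
    flags = 0x1  # T=1: transient (not a permanently assigned group)
    prefix = (0xFF << 8) | (flags << 4) | SCOPES[scope]
    group_id = secrets.randbits(112)  # assumed: fully random group ID
    return ipaddress.IPv6Address((prefix << 112) | group_id)


def scope_nibble(addr: ipaddress.IPv6Address) -> int:
    """Extract the 4-bit scope field of a multicast address."""
    return (int(addr) >> 112) & 0xF


if __name__ == "__main__":
    group = random_multicast_group("global")
    print(group, "scope nibble:", hex(scope_nibble(group)))
```

Run against the sample address in the post, `scope_nibble` gives 8, so a group meant to cross the wider internet or an overlay treated as global would more likely start with `ff1e:`.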

Let's stop letting ISPs, record companies, and big fat network conglomerates decide what we're allowed to do.

Keep 'em coming. Also I'd like software updates via bittorrent to work.

Better LTE/ISP failover

I've worked off this list for years: http://the-edge.taht.net/post/gilmores_list/

- Routing scalability at planetary scale

- Uncensorable, untappable Internet infrastructure (Freedombox-ish)

- Routing scalability at city scale among peer nodes (Freedombox-ish)

- A fully distributed database replacement for DNS

- How to crutch along on IPv4 without destroying end-to-end?

- Finishing the IPv6 transition, sanely, and safely.

- DNSSEC as a trustable infrastructure.

- Delivering fiber speeds to ordinary consumers.

- Keeping email relevant while ending its centralized censorship.

- Reliable, secure wireless ad hoc peer to peer radio communication.

- Reliable, working long-haul wireless Internet that isn’t owned by crabbed censorial monopolist cellular companies.

- Restoring “network neutrality” by restoring competition among ISPs.

- Reliable, working, worldwide voice interaction (telephone) replacement.

- Copyright and patent defense/collaboration is another; or perhaps a better way to put it is “how the next Internet can pay creators without throwing away all the advantages of worldwide instant communication”

- Digital value transfer (reliable digital cash)

- Reliable node-level cyber security

- Reliable network-level cyber security

- Reliable internetwork-level cyber security

- Privacy on a large scale network.

- How to leverage information asymmetries for ordinary users’ benefit.

- Distributed social networking.

- Avoidance of pinch-points like Google and Facebook that bend a widely distributed system into an access network that somehow always leads to their monopoly.

- What replaces the Web as the next big obvious thing that we should’ve done years before which takes over the world’s idea of “what the Internet is”?

- Creating better business models than (1) move bits as a commodity, and (2) force ads on people!

I've never really understood the point of this, but: https://ipfs.io/

yeah ipfs feels like a good idea that isn't going anywhere.

what it should do is be the mechanism to eliminate Scientific Journals. Anyone who wants to publish some stuff, just throw it into the IPFS and bang, there it is... But it won't happen unless there are searchable indexes, and unless "journals as recommender systems" take off... neither of which will happen.

For too many of the world's problems, the problem is people, not technology.

https://web.hypothes.is/ I like. Fixing the whuffie problem is not in scope for cerowrt II, but with the rise in serious amounts of storage along the edge, distributing data better does strike me as a good goal. What is so wrong with torrent, btw?

If I read it right I think it's rather cool in principle: the ability to publish to a uniform standard with support for hardening, integrity, high availability and anti-censorship as intrinsic properties of that standard, rather than having to select, engage, manage, purchase and hope for longevity among distinct offerings for each of those requirements. The act of publishing safely and reliably would have some separation from your technical ability, your means, your geopolitical situation, your access to knowledgeable support, etc.

Edit: on reading more thoroughly, it's all a bit too venture-capitalized / incubator and hothouse-driven a la Docker; not so much community led. So for all the emphasis on community and open-source in their language -- which is itself very marketing-agency in style and content -- it's still privately directed, with all that that does and doesn't entail. I'm more optimistic about RFCs than mission statements.