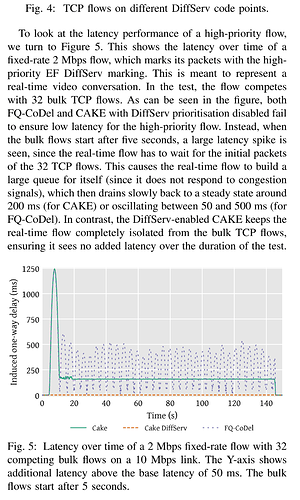

BOTE: 32 bulk flows all starting at the same time, and all having an initial window of say 10 packets result in 32 * 10 packets injected into the network or roughly 32*10*1514*8 = 3875840 bits which at 10Mbps (or rather 10-2, remember our probe flow runs at a fixed 2 Mbps) take: (32*10*1514*8)/((10-2)*1000^2) = 0.48448 seconds or 484 milliseconds, but these flows will not stand still, but rather try to ramp up their congestion window dumping even more packets into the queue until they get sufficient feed-back to slow down again, the exact dynamics of that depend on the exact TCPs used by the senders and the path RTT to the receiver and the resulting ACK flow.

The 200ms steady state probably is a result of the number of flows, at 33 flows each will get access to the link every 33 * 1514 * 8 / (10*1000^2) = 40 ms (assuming fair round-robin and all flows using maximum sized packets), and due to fair queueing each flow will get 10*1000/33 = 303 Kbps of capacity. The TCP flows will probably adapt to that rate more or less, but the real time flow will keep running at 2Mbps, so cake will ramp up the dropping rate and it seems that the 200ms are the steady state (at the given temporal resolution of the measurements) of cake using drops trying to reign in the unresponsive flow and that flow unrelentingly continuing to send packets at 2Mbps.

In this context each flow of N will get 1/N of the total capacity and will have to wait for all other N-1 flows to have their packets transmitted before getting its next packet transmitted. Sometimes this period of N transmission times can be too large (e.g. think an on-line game that send updates of world state as a burst of packets every 1/60 seconds, but the client can only make sense of the new world state after having evaluated all of the packets, so ideally these bursts of packets are not maximally interleaved at the AQM but transmitted back to back; which requires to have a way to select packets for special treatment, and that is what DSCPs are used for).

Not really, it is a function of whether the "latency" requirements of your important flow can e fulfilled with getting transmitted every Nths "timeslot" or not, if yes no special treatment required, if no, you need to carve out an exception for this flow. This is true in general (as transport protocols are designed to "fairly" share bottlenecks) but even more important for a flow queueing system like cake that can and does enforce pretty equitable sharing of the bottleneck capacity between all active flows. See how this description does not contain the direction at all. Naively one can expect this problem to show up more often ion the uplink, because that often is smaller then the downlink for many popular access technologies, but at the same time many users have more ingress/download traffic than upload traffic (downloads, video streaming, ...), so I am not sure the naive hypothesis is correct.

Just look at all the gaming use-cases in the qosify thread or the " Ultimate SQM settings:" thread, where admittedly often the issue is that the links are slow and gaming traffic adds up to a considerable fraction of capacity, but still this is where the desire for prioritisation comes from.

My take on this is that prioritization requires self constraint, up- or down-prioritizing a few carefully selected "connections" can do wonder, but one needs to take care not to go overboard; and I think one should always ask and test whether prioritization is actually required/helpful. Because prioritization will not "create" low latency de novo, but really just shifts resources (like transmit opportunities) around so is essentially a zero sum game for every packet that is transmitted earlier than its "fair share" other packets will be sent delayed by that packet's transmission time. (This is the rationale why I push back against configurations where apparently most traffic ends up in the high priority tiers, because at that point prioritization becomes futile).