Hello guys,

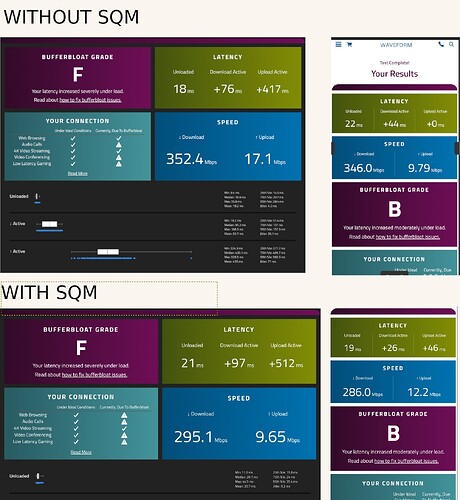

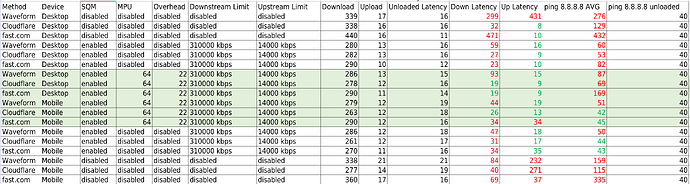

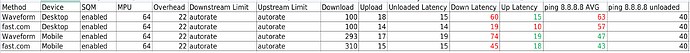

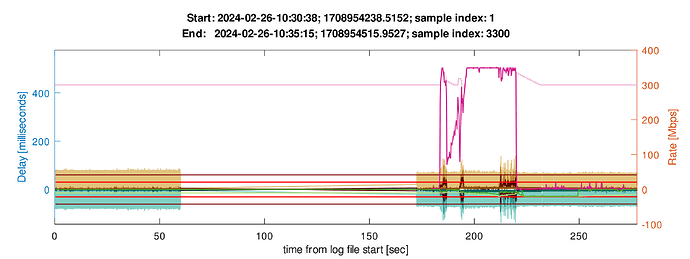

I'm trying to figure out why my bufferbloat is not mitigated even with SQM enabled on OpenWrt.

This is my current setup:

- ISP modem (Arris TG1692A cable modem) in bridge mode
- Mini PC (x86, Intel J4125, 8 GB RAM) running OpenWrt
  - eth0 (WAN) -> ISP
  - eth1 -> Xiaomi repeater
  - eth2 -> my desktop PC
- Xiaomi AX3200 AP in repeater mode (my smartphone connects here)

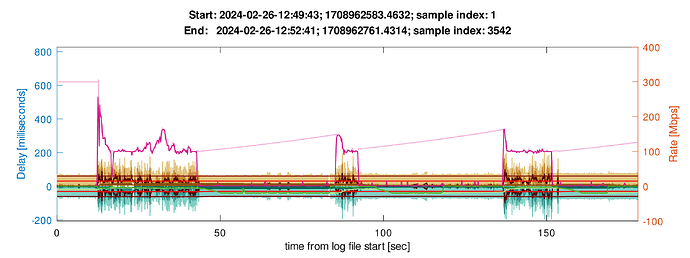

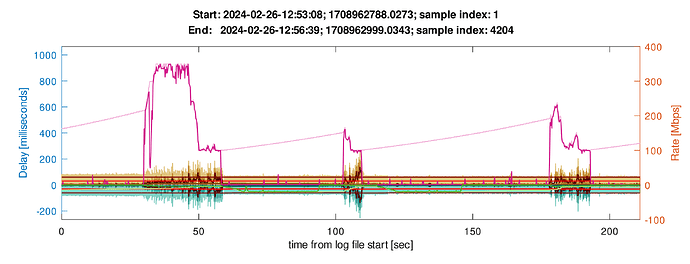

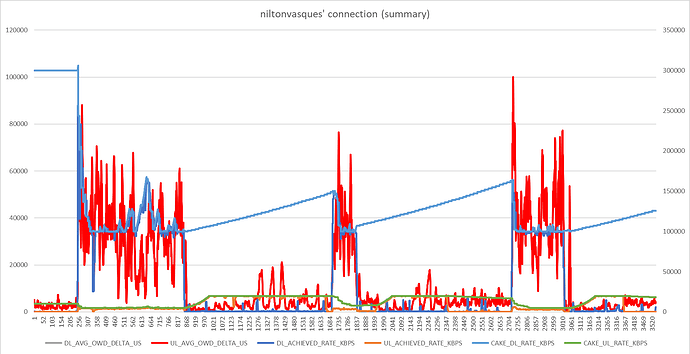

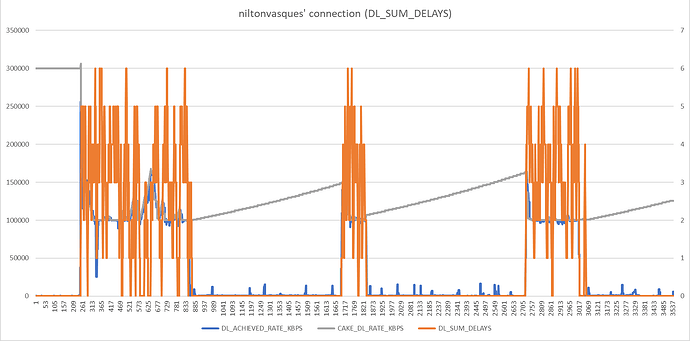

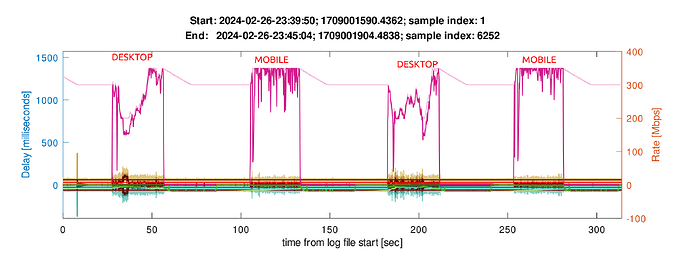

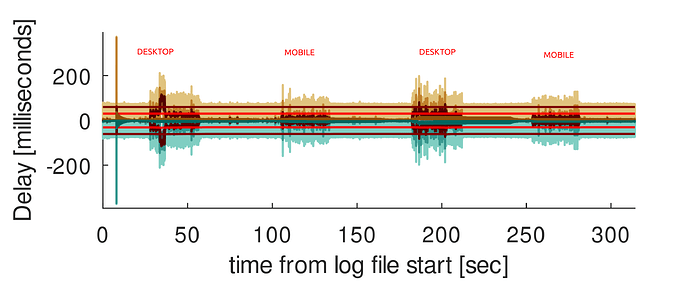

These results already show a big difference between my smartphone (wireless) and my desktop PC (wired).

Below is the `tc -s qdisc` output with SQM enabled, once while testing from the PC and once while testing from the smartphone:

PC

```
root@OpenWrt:~# tc -s qdisc
qdisc noqueue 0: dev lo root refcnt 2
 Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
qdisc cake 802d: dev eth0 root refcnt 5 bandwidth 21Mbit besteffort triple-isolate nonat nowash no-ack-filter split-gso rtt 100ms raw overhead 0
 Sent 63379900 bytes 277693 pkt (dropped 36, overlimits 217709 requeues 2)
 backlog 0b 0p requeues 2
 memory used: 484872b of 4Mb
 capacity estimate: 21Mbit
 min/max network layer size: 42 / 1494
 min/max overhead-adjusted size: 42 / 1494
 average network hdr offset: 14

 Tin 0
  thresh 21Mbit
  target 5ms
  interval 100ms
  pk_delay 2.78ms
  av_delay 741us
  sp_delay 4us
  backlog 0b
  pkts 277729
  bytes 63428870
  way_inds 1577
  way_miss 191
  way_cols 0
  drops 36
  marks 0
  ack_drop 0
  sp_flows 1
  bk_flows 1
  un_flows 0
  max_len 5736
  quantum 640

qdisc ingress ffff: dev eth0 parent ffff:fff1 ----------------
 Sent 1076662679 bytes 800019 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
qdisc mq 0: dev eth1 root
 Sent 94621648836 bytes 85645752 pkt (dropped 63, overlimits 0 requeues 90435)
 backlog 0b 0p requeues 90435
qdisc fq_codel 0: dev eth1 parent :4 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 18193739771 bytes 14649633 pkt (dropped 8, overlimits 0 requeues 16093)
 backlog 0b 0p requeues 16093
  maxpacket 18642 drop_overlimit 0 new_flow_count 10807 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc fq_codel 0: dev eth1 parent :3 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 29740509205 bytes 22846232 pkt (dropped 22, overlimits 0 requeues 32189)
 backlog 0b 0p requeues 32189
  maxpacket 11472 drop_overlimit 0 new_flow_count 25423 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc fq_codel 0: dev eth1 parent :2 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 27384300918 bytes 21251711 pkt (dropped 24, overlimits 0 requeues 24516)
 backlog 0b 0p requeues 24516
  maxpacket 14340 drop_overlimit 0 new_flow_count 19088 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc fq_codel 0: dev eth1 parent :1 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 19303098942 bytes 26898176 pkt (dropped 9, overlimits 0 requeues 17637)
 backlog 0b 0p requeues 17637
  maxpacket 11472 drop_overlimit 0 new_flow_count 11184 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc mq 0: dev eth2 root
 Sent 42092256562 bytes 32497901 pkt (dropped 0, overlimits 0 requeues 32982)
 backlog 0b 0p requeues 32982
qdisc fq_codel 0: dev eth2 parent :4 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 9423504139 bytes 7381996 pkt (dropped 0, overlimits 0 requeues 5600)
 backlog 0b 0p requeues 5600
  maxpacket 18168 drop_overlimit 0 new_flow_count 5345 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc fq_codel 0: dev eth2 parent :3 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 12088431043 bytes 9377949 pkt (dropped 0, overlimits 0 requeues 8267)
 backlog 0b 0p requeues 8267
  maxpacket 28766 drop_overlimit 0 new_flow_count 8559 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc fq_codel 0: dev eth2 parent :2 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 9701918011 bytes 7314544 pkt (dropped 0, overlimits 0 requeues 9260)
 backlog 0b 0p requeues 9260
  maxpacket 24224 drop_overlimit 0 new_flow_count 7805 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc fq_codel 0: dev eth2 parent :1 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 10878403369 bytes 8423412 pkt (dropped 0, overlimits 0 requeues 9855)
 backlog 0b 0p requeues 9855
  maxpacket 19682 drop_overlimit 0 new_flow_count 12474 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc mq 0: dev eth3 root
 Sent 15179037241 bytes 24418478 pkt (dropped 0, overlimits 0 requeues 25745)
 backlog 0b 0p requeues 25745
qdisc fq_codel 0: dev eth3 parent :4 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 1105938021 bytes 2272617 pkt (dropped 0, overlimits 0 requeues 5107)
 backlog 0b 0p requeues 5107
  maxpacket 1514 drop_overlimit 0 new_flow_count 2625 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc fq_codel 0: dev eth3 parent :3 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 363110404 bytes 1575782 pkt (dropped 0, overlimits 0 requeues 3503)
 backlog 0b 0p requeues 3503
  maxpacket 1805 drop_overlimit 0 new_flow_count 1588 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc fq_codel 0: dev eth3 parent :2 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 467310237 bytes 1866639 pkt (dropped 0, overlimits 0 requeues 5406)
 backlog 0b 0p requeues 5406
  maxpacket 2988 drop_overlimit 0 new_flow_count 2625 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc fq_codel 0: dev eth3 parent :1 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 13242678579 bytes 18703440 pkt (dropped 0, overlimits 0 requeues 11729)
 backlog 0b 0p requeues 11729
  maxpacket 1514 drop_overlimit 0 new_flow_count 7392 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc noqueue 0: dev br-lan root refcnt 2
 Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
qdisc cake 802e: dev ifb4eth0 root refcnt 2 bandwidth 320Mbit besteffort triple-isolate nonat wash no-ack-filter split-gso rtt 100ms raw overhead 0
 Sent 1106724385 bytes 792244 pkt (dropped 7775, overlimits 928921 requeues 0)
 backlog 0b 0p requeues 0
 memory used: 3837704b of 15140Kb
 capacity estimate: 320Mbit
 min/max network layer size: 60 / 1514
 min/max overhead-adjusted size: 60 / 1514
 average network hdr offset: 14

 Tin 0
  thresh 320Mbit
  target 5ms
  interval 100ms
  pk_delay 52us
  av_delay 9us
  sp_delay 2us
  backlog 0b
  pkts 800019
  bytes 1117871309
  way_inds 1
  way_miss 186
  way_cols 0
  drops 7775
  marks 0
  ack_drop 0
  sp_flows 2
  bk_flows 1
  un_flows 0
  max_len 18642
  quantum 1514
```
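A rough way to read the cake counters above is to turn each `Sent ... pkt (dropped ...)` line into a drop percentage. The Python sketch below does this with a hypothetical helper that is not part of tc or sqm-scripts. It assumes that dropped / (sent + dropped) is the share of packets cake dropped. For both cake instances in the PC capture, sent + dropped equals the Tin 0 `pkts` counter (277729 and 800019), which supports that reading.

```python
import re

# Matches the counter line tc prints under each qdisc, e.g.
# "Sent 63379900 bytes 277693 pkt (dropped 36, overlimits 217709 requeues 2)"
SENT_RE = re.compile(r"Sent (\d+) bytes (\d+) pkt \(dropped (\d+),")


def drop_percentage(sent_line: str) -> float:
    """Return dropped / (sent + dropped) as a percentage (hypothetical helper)."""
    match = SENT_RE.search(sent_line)
    if match is None:
        raise ValueError(f"not a tc 'Sent' line: {sent_line!r}")
    sent_pkts = int(match.group(2))
    dropped = int(match.group(3))
    return 100.0 * dropped / (sent_pkts + dropped)


# Counter lines copied from the PC capture above.
# eth0 carries the upload (egress) shaper, ifb4eth0 the download (ingress) shaper.
egress = "Sent 63379900 bytes 277693 pkt (dropped 36, overlimits 217709 requeues 2)"
ingress = "Sent 1106724385 bytes 792244 pkt (dropped 7775, overlimits 928921 requeues 0)"

print(f"eth0 (upload) drops:       {drop_percentage(egress):.3f}%")
print(f"ifb4eth0 (download) drops: {drop_percentage(ingress):.3f}%")
```

With the PC capture, this works out to roughly 0.013% of upload packets and 0.97% of download packets dropped by cake.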

Smartphone

```
root@OpenWrt:~# tc -s qdisc
qdisc noqueue 0: dev lo root refcnt 2
 Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
qdisc cake 8039: dev eth0 root refcnt 5 bandwidth 21Mbit besteffort triple-isolate nonat nowash no-ack-filter split-gso rtt 100ms raw overhead 0
 Sent 63575815 bytes 166831 pkt (dropped 93, overlimits 376134 requeues 50)
 backlog 0b 0p requeues 50
 memory used: 791256b of 4Mb
 capacity estimate: 21Mbit
 min/max network layer size: 42 / 1494
 min/max overhead-adjusted size: 42 / 1494
 average network hdr offset: 14

 Tin 0
  thresh 21Mbit
  target 5ms
  interval 100ms
  pk_delay 3.04ms
  av_delay 959us
  sp_delay 4us
  backlog 0b
  pkts 166924
  bytes 63699769
  way_inds 0
  way_miss 235
  way_cols 0
  drops 93
  marks 0
  ack_drop 0
  sp_flows 1
  bk_flows 1
  un_flows 0
  max_len 11472
  quantum 640

qdisc ingress ffff: dev eth0 parent ffff:fff1 ----------------
 Sent 1080824883 bytes 805621 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
qdisc mq 0: dev eth1 root
 Sent 95733016883 bytes 86464173 pkt (dropped 63, overlimits 0 requeues 90574)
 backlog 0b 0p requeues 90574
qdisc fq_codel 0: dev eth1 parent :4 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 18383937837 bytes 14790093 pkt (dropped 8, overlimits 0 requeues 16109)
 backlog 0b 0p requeues 16109
  maxpacket 18642 drop_overlimit 0 new_flow_count 10815 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc fq_codel 0: dev eth1 parent :3 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 29923489192 bytes 22979181 pkt (dropped 22, overlimits 0 requeues 32225)
 backlog 0b 0p requeues 32225
  maxpacket 11472 drop_overlimit 0 new_flow_count 25443 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc fq_codel 0: dev eth1 parent :2 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 27928818113 bytes 21642997 pkt (dropped 24, overlimits 0 requeues 24579)
 backlog 0b 0p requeues 24579
  maxpacket 14340 drop_overlimit 0 new_flow_count 19180 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc fq_codel 0: dev eth1 parent :1 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 19496771741 bytes 27051902 pkt (dropped 9, overlimits 0 requeues 17661)
 backlog 0b 0p requeues 17661
  maxpacket 11472 drop_overlimit 0 new_flow_count 11209 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc mq 0: dev eth2 root
 Sent 42094878142 bytes 32502762 pkt (dropped 0, overlimits 0 requeues 32992)
 backlog 0b 0p requeues 32992
qdisc fq_codel 0: dev eth2 parent :4 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 9424774406 bytes 7383762 pkt (dropped 0, overlimits 0 requeues 5604)
 backlog 0b 0p requeues 5604
  maxpacket 18168 drop_overlimit 0 new_flow_count 5346 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc fq_codel 0: dev eth2 parent :3 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 12088806711 bytes 9378747 pkt (dropped 0, overlimits 0 requeues 8272)
 backlog 0b 0p requeues 8272
  maxpacket 28766 drop_overlimit 0 new_flow_count 8561 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc fq_codel 0: dev eth2 parent :2 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 9702433547 bytes 7316029 pkt (dropped 0, overlimits 0 requeues 9261)
 backlog 0b 0p requeues 9261
  maxpacket 24224 drop_overlimit 0 new_flow_count 7805 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc fq_codel 0: dev eth2 parent :1 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 10878863478 bytes 8424224 pkt (dropped 0, overlimits 0 requeues 9855)
 backlog 0b 0p requeues 9855
  maxpacket 19682 drop_overlimit 0 new_flow_count 12474 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc mq 0: dev eth3 root
 Sent 15199893109 bytes 24446928 pkt (dropped 0, overlimits 0 requeues 25790)
 backlog 0b 0p requeues 25790
qdisc fq_codel 0: dev eth3 parent :4 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 1106331141 bytes 2273678 pkt (dropped 0, overlimits 0 requeues 5116)
 backlog 0b 0p requeues 5116
  maxpacket 1514 drop_overlimit 0 new_flow_count 2631 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc fq_codel 0: dev eth3 parent :3 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 363581807 bytes 1577192 pkt (dropped 0, overlimits 0 requeues 3510)
 backlog 0b 0p requeues 3510
  maxpacket 1805 drop_overlimit 0 new_flow_count 1592 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc fq_codel 0: dev eth3 parent :2 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 467798222 bytes 1868546 pkt (dropped 0, overlimits 0 requeues 5416)
 backlog 0b 0p requeues 5416
  maxpacket 2988 drop_overlimit 0 new_flow_count 2630 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc fq_codel 0: dev eth3 parent :1 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 13262181939 bytes 18727512 pkt (dropped 0, overlimits 0 requeues 11748)
 backlog 0b 0p requeues 11748
  maxpacket 1514 drop_overlimit 0 new_flow_count 7398 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc noqueue 0: dev br-lan root refcnt 2
 Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
qdisc cake 803a: dev ifb4eth0 root refcnt 2 bandwidth 320Mbit besteffort triple-isolate nonat wash no-ack-filter split-gso rtt 100ms raw overhead 0
 Sent 1106899343 bytes 794720 pkt (dropped 10901, overlimits 934907 requeues 0)
 backlog 0b 0p requeues 0
 memory used: 3171172b of 15140Kb
 capacity estimate: 320Mbit
 min/max network layer size: 60 / 1514
 min/max overhead-adjusted size: 60 / 1514
 average network hdr offset: 14

 Tin 0
  thresh 320Mbit
  target 5ms
  interval 100ms
  pk_delay 13us
  av_delay 7us
  sp_delay 3us
  backlog 0b
  pkts 805621
  bytes 1122531377
  way_inds 6
  way_miss 224
  way_cols 0
  drops 10901
  marks 0
  ack_drop 0
  sp_flows 2
  bk_flows 1
  un_flows 0
  max_len 37284
  quantum 1514
```
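To compare the two captures side by side, the Tin 0 delay fields (commonly read as peak, average, and sparse-flow queueing delay) can be normalized to one unit. The Python sketch below uses hypothetical helpers that are not part of tc or sqm-scripts. It handles the `us` and `ms` suffixes that appear here, and it treats `s` as an assumed suffix for larger values. Keep in mind that each capture is only a snapshot taken at one moment.

```python
# Microseconds per unit for the suffixes cake prints in its Tin statistics.
# "us" and "ms" appear in the captures above; "s" is an assumption for larger values.
UNIT_TO_US = {"us": 1.0, "ms": 1_000.0, "s": 1_000_000.0}


def to_microseconds(value: str) -> float:
    """Convert a cake delay string such as '2.78ms' or '741us' to microseconds."""
    # Check the two-letter suffixes first so "ms"/"us" are not mistaken for "s".
    for suffix in ("us", "ms", "s"):
        if value.endswith(suffix):
            return float(value[: -len(suffix)]) * UNIT_TO_US[suffix]
    raise ValueError(f"unrecognized delay value: {value!r}")


def parse_tin_delays(tin_text: str) -> dict[str, float]:
    """Collect the *_delay fields of one Tin block, converted to microseconds."""
    delays = {}
    for line in tin_text.splitlines():
        fields = line.split()
        if len(fields) == 2 and fields[0].endswith("_delay"):
            delays[fields[0]] = to_microseconds(fields[1])
    return delays


# Upload (eth0) Tin 0 delay lines copied from the PC and smartphone captures.
PC_UPLOAD_TIN = """
pk_delay 2.78ms
av_delay 741us
sp_delay 4us
"""
PHONE_UPLOAD_TIN = """
pk_delay 3.04ms
av_delay 959us
sp_delay 4us
"""

pc = parse_tin_delays(PC_UPLOAD_TIN)
phone = parse_tin_delays(PHONE_UPLOAD_TIN)
for name in ("pk_delay", "av_delay", "sp_delay"):
    print(f"{name}: PC {pc[name]:.0f} us, smartphone {phone[name]:.0f} us")
```

The same helpers can be pointed at the `ifb4eth0` (download) Tin 0 lines to compare that direction too.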

SQM Settings

```
cat /etc/config/sqm

config queue 'eth1'
	option enabled '1'
	option interface 'eth0'
	option download '320000'
	option upload '21000'
	option qdisc 'cake'
	option script 'piece_of_cake.qos'
	option linklayer 'none'
	option debug_logging '0'
	option verbosity '5'
```
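A quick sanity check is to confirm that the rates in this file are the ones cake actually applied. sqm-scripts reads `download` and `upload` in kbit/s, so 320000 and 21000 should show up as 320Mbit on `ifb4eth0` (download, ingress) and 21Mbit on `eth0` (upload, egress). The Python sketch below uses hypothetical helpers to compare them against header lines copied from the smartphone capture. It assumes whole-Mbit rates, as printed in this output. The `raw overhead 0` in those headers also looks consistent with `option linklayer 'none'`.

```python
import re

# Rates from /etc/config/sqm above; sqm-scripts reads them as kbit/s.
SQM_RATES_KBIT = {"download": 320000, "upload": 21000}

# cake header lines copied from the smartphone capture above.
CAKE_HEADERS = {
    # Egress shaping on the WAN port (eth0) limits upload.
    "upload": "qdisc cake 8039: dev eth0 root refcnt 5 bandwidth 21Mbit besteffort "
              "triple-isolate nonat nowash no-ack-filter split-gso rtt 100ms raw overhead 0",
    # Ingress traffic is redirected to the IFB device (ifb4eth0) and shaped there.
    "download": "qdisc cake 803a: dev ifb4eth0 root refcnt 2 bandwidth 320Mbit besteffort "
                "triple-isolate nonat wash no-ack-filter split-gso rtt 100ms raw overhead 0",
}

# Assumption: only whole-Mbit rates are matched, as in this output.
BANDWIDTH_RE = re.compile(r"bandwidth (\d+)Mbit")


def applied_mbit(header: str) -> int:
    """Extract the whole-Mbit shaper rate from a cake qdisc header line."""
    match = BANDWIDTH_RE.search(header)
    if match is None:
        raise ValueError(f"no Mbit bandwidth in: {header!r}")
    return int(match.group(1))


def check_rates() -> dict[str, bool]:
    """Compare configured sqm rates with the rates cake reports, per direction."""
    results = {}
    for direction, kbit in SQM_RATES_KBIT.items():
        configured_mbit = kbit / 1000  # 1 Mbit/s = 1000 kbit/s
        applied = applied_mbit(CAKE_HEADERS[direction])
        results[direction] = configured_mbit == applied
        print(f"{direction}: sqm {configured_mbit:g} Mbit/s, cake {applied} Mbit/s")
    return results


check_rates()
```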

I was inclined to simply blame my internet connection, but that doesn't explain why my smartphone gets better results, even though they are still far from bloat-free.

Could this be a misconfiguration on my side?