I will patch this next. Test to come tomorrow, though, Dave.

Update: I changed my mind; I will fight against Gravity in the arvo. See below.

WMM test parameters:

tx_queue_data2_aifs=3

tx_queue_data2_cwmin=15

tx_queue_data2_cwmax=63

tx_queue_data2_burst=0

wmm_ac_be_txop_limit=0

AQL test parameters:

    root@nanohd-downstairs:~# cat /sys/kernel/debug/ieee80211/phy1/aql_txq_limit
    AC      AQL limit low   AQL limit high
    VO      2000            2000
    VI      2000            2000
    BE      2000            2000
    BK      2000            2000

    root@nanohd-downstairs:~# cat /sys/kernel/debug/ieee80211/phy1/aql_threshold
    24000

    root@nanohd-downstairs:~# cat /sys/kernel/debug/ieee80211/phy1/aql_enable
    1
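For anyone who wants to reproduce these AQL settings, here is a minimal sketch of how they could be applied. The helper name `set_aql` is made up for illustration, and the write format for `aql_txq_limit` (`<ac> <low> <high>`, with AC numbers 0=VO, 1=VI, 2=BE, 3=BK) is my understanding of the mac80211 debugfs interface, so treat it as an assumption and check it against your kernel.

```shell
# Hypothetical helper: apply the AQL values listed above to one phy.
# $1 is the phy's debugfs directory (phy1 in this post).
set_aql() {
    dir="$1"
    # Assumed write format: "<ac> <low> <high>", ac 0=VO 1=VI 2=BE 3=BK.
    for ac in 0 1 2 3; do
        echo "$ac 2000 2000" > "$dir/aql_txq_limit"
    done
    echo 24000 > "$dir/aql_threshold"
    echo 1 > "$dir/aql_enable"
}

# Usage on the router (needs root and a mounted debugfs):
# set_aql /sys/kernel/debug/ieee80211/phy1
```

Reading the three files back with `cat` afterwards should show the values listed above.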

Kernel patches:

MS2TIME(8)

NAPI_POLL_WEIGHT=16

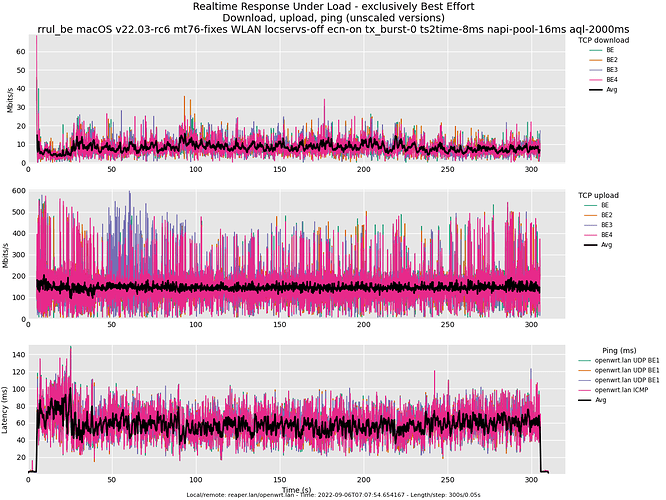

flent rrul_be 300 s test graph:

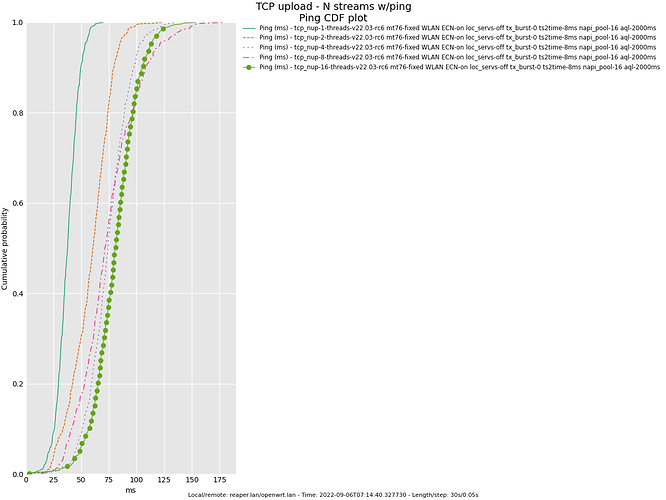

flent tcp_nup test with 1, 2, 4, 8 and 16 threads, ping CDF (median ≈70 ms) graph:

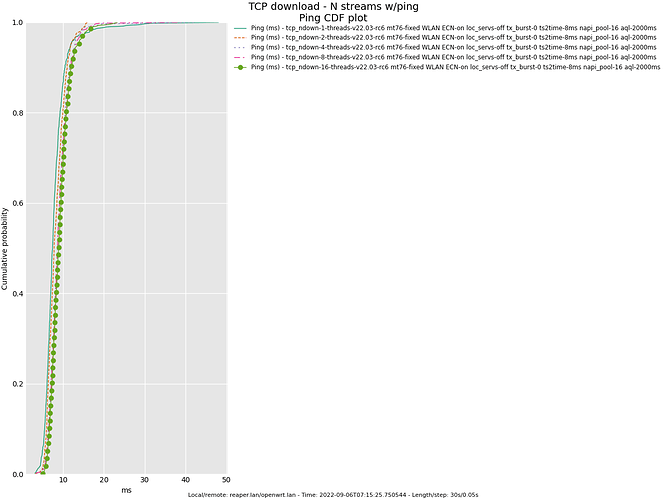

flent tcp_ndown test with 1, 2, 4, 8 and 16 threads, ping CDF (median ≈8 ms) graph:

Click me to download flent data and tcpdump capture.
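A rough sketch of how runs like these could be launched with flent, in case someone wants to compare numbers. The server name is a placeholder, the helper `flent_cmds` is made up for illustration, and the exact options used for the graphs above are not recorded in this post, so this is an assumption rather than the actual command line. flent's names for the two TCP tests are `tcp_nup` and `tcp_ndown`.

```shell
# Hypothetical server name; replace with a host running netserver.
SERVER="${SERVER:-netperf.example.org}"

# Print the flent commands for the runs described above (dry run).
flent_cmds() {
    echo "flent rrul_be -l 300 -H $SERVER"
    for n in 1 2 4 8 16; do
        echo "flent tcp_nup -H $SERVER --test-parameter upload_streams=$n"
        echo "flent tcp_ndown -H $SERVER --test-parameter download_streams=$n"
    done
}

# Review the list first; pipe it to sh to actually run the tests.
flent_cmds
```

Printing the commands instead of running them directly makes it easy to check the stream counts before spending several minutes per test.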

Note that I didn't see any kernel warning during the tests, so the driver is not calling netif_napi_add() with a weight higher than the patched NAPI_POLL_WEIGHT (16). This is the check that would have logged it:

    if (weight > NAPI_POLL_WEIGHT)
            netdev_err_once(dev, "%s() called with weight %d\n", __func__,
                            weight);
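If you want to repeat that check on your own device, something like the following should do. The helper name `check_napi_weight` is made up for illustration; it only searches the log text it is given for the message printed by the check above, so a log that has already rotated would give a false "no warning".

```shell
# Hypothetical helper: scan kernel log text on stdin for the NAPI
# weight message and report whether it was seen.
check_napi_weight() {
    if grep -q 'called with weight'; then
        echo "warning found"
    else
        echo "no warning"
    fi
}

# Usage on the router:
# dmesg | check_napi_weight
```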