Thank you for your informative graph. Is this with cake's ‘out of the box’ configuration, or is there some tinkering involved?

This is the script I used to turn CAKE on and off:

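```bash
#!/bin/bash
# usage: <script> <interface> <upload rate in Mbit/s, 0 = remove cake>
set -ex

ul_if=$1
cake_ul_rate=$2
cake_ul_options="diffserv4 wash"

if [ "$cake_ul_rate" -eq "0" ]; then
    # re-enable the offloads and drop back to the default root qdisc
    ethtool -K "${ul_if}" tcp-segmentation-offload on
    ethtool -K "${ul_if}" generic-segmentation-offload on
    ethtool -K "${ul_if}" generic-receive-offload on
    tc qdisc delete dev "${ul_if}" root
else
    # disable TSO/GSO/GRO so the qdisc handles wire-sized packets, not offload super-packets
    ethtool -K "${ul_if}" tcp-segmentation-offload off
    ethtool -K "${ul_if}" generic-segmentation-offload off
    ethtool -K "${ul_if}" generic-receive-offload off
    # cake_ul_options is left unquoted on purpose so it splits into separate tc arguments
    tc qdisc replace dev "${ul_if}" root handle 1: cake bandwidth "${cake_ul_rate}mbit" ${cake_ul_options}
fi
```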

Cake can do GSO segmentation itself (the split-gso keyword), so it should not be strictly necessary to disable these offloads.
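A minimal sketch of that alternative, assuming the same interface and rate arguments as the script above: leave TSO/GSO/GRO enabled and let cake split GSO super-packets on dequeue. `split-gso` is already cake's default (it appears in the `tc -s qdisc` output further down this thread), so it is spelled out here only for clarity. The function names and the `TC` override are hypothetical, added so the commands can be dry-run with `TC=echo`.

```bash
#!/bin/bash
# Sketch: shape with cake but keep the NIC offloads (TSO/GSO/GRO) enabled,
# relying on cake's split-gso to break up GSO super-packets.
# cake_on, cake_off and the TC override are hypothetical names.

# cake_on <interface> <rate in Mbit/s>
cake_on() {
    local dev=$1 rate_mbit=$2
    local tc=${TC:-tc}   # TC=echo prints the command instead of running it
    "$tc" qdisc replace dev "$dev" root handle 1: \
        cake bandwidth "${rate_mbit}mbit" diffserv4 wash split-gso
}

# cake_off <interface>: remove the root qdisc, falling back to the default
cake_off() {
    local dev=$1
    local tc=${TC:-tc}
    "$tc" qdisc delete dev "$dev" root
}
```

After sourcing the file, `cake_on <interface> <rate>` and `cake_off <interface>` replace the two branches of the script above, minus the `ethtool` calls.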

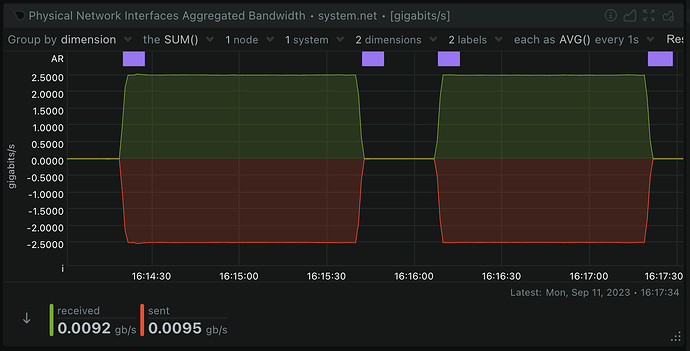

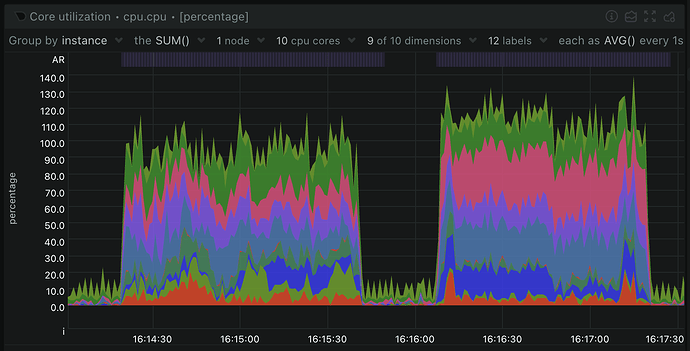

I figured that turning off the hardware offload features further shows that this box/processor has no problems at 2.5 Gbit.

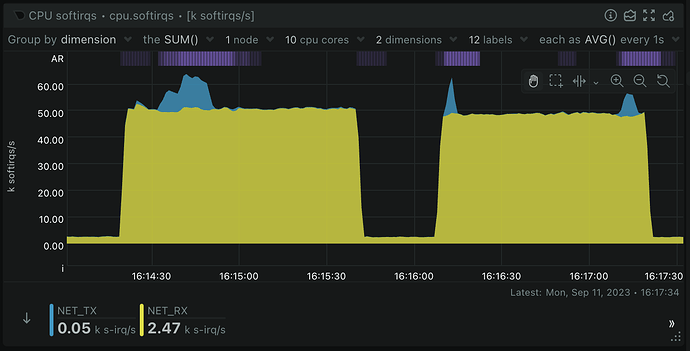

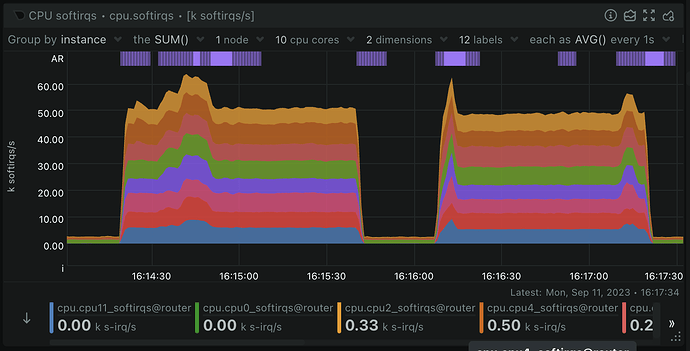

I am under the impression that most 2.5 Gbit Ethernet chips expose one tx/rx queue pair per core. In these cases, when actually running at 2.5 Gbit, using fq_codel or cake as the default egress qdisc should first instantiate the mq qdisc and then attach fq_codel or cake to each per-core tx queue, using very little CPU compared to shaping. I would be interested in a performance comparison of fq_codel and cake, each running as the native qdisc in this hardware-multiqueue setup.

I have been collecting feedback and feature requests on a new version of cake over here.

But would that not require BQL, and rely on BQL back-pressure being "fairly" distributed across the multiple queues? That would only work for egress. Not that this wouldn't already be great!

The size of the BQL backlog depends on interrupt service time more than anything else. I do not have any 2.5 Gbit hardware to test with. At the time, bqlmon would typically show ~44k per tx queue under mq at 1 Gbit with GSO/GRO disabled, and 130-150k with GSO/GRO enabled. The side effects of GRO were even more obvious if you tried to run at physical rates of 100 Mbit and 10 Mbit.
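To put those backlog sizes in time terms: B bytes take 8 × B / R microseconds to serialize at R Mbit/s, since 1 Mbit/s is one bit per microsecond. The helper below (a hypothetical name) applies that to the figures quoted above, assuming "~44k" and "150k" mean bytes in decimal thousands.

```bash
#!/bin/bash
# bql_drain_us <backlog in bytes> <link rate in Mbit/s>   (hypothetical helper)
# Prints how long, in microseconds, the backlog takes to serialize onto the
# wire. 1 Mbit/s = 1 bit per microsecond, so time_us = bytes * 8 / rate.
bql_drain_us() {
    awk -v bytes="$1" -v mbit="$2" 'BEGIN { printf "%.1f\n", bytes * 8 / mbit }'
}

bql_drain_us 44000 1000    # ~44k at 1 Gbit, GSO/GRO off    → 352.0
bql_drain_us 150000 1000   # ~150k at 1 Gbit, GSO/GRO on    → 1200.0
bql_drain_us 150000 100    # same backlog at 100 Mbit       → 12000.0
bql_drain_us 150000 10     # same backlog at 10 Mbit        → 120000.0
```

So ~150k of backlog is about 1.2 ms at 1 Gbit; if a backlog of that size persisted at 100 Mbit or 10 Mbit it would be about 12 ms or 120 ms, which illustrates why GRO's side effects are more visible at low physical rates. BQL adjusts its limit dynamically, so treat these numbers as illustrations, not measurements.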

CAKE typically ended up with a larger BQL backlog than fq_codel.

> I am under the impression that most 2.5 Gbit Ethernet chips expose one tx/rx queue pair per core.

The igc driver that my i226 NICs use has a hardcoded max of 4 queues. That's why you see the softirqs in my graph only going to 4 cores.
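One way to check how many hardware tx queues a driver actually exposes is to count the `tx-*` directories under `/sys/class/net/<interface>/queues/`; `ethtool -l <interface>` reports channel counts on drivers that support it. The sketch below does the sysfs count; the function name and the optional second argument (an alternate sysfs root, handy for testing) are hypothetical.

```bash
#!/bin/bash
# count_tx_queues <interface> [sysfs net root]   (hypothetical helper)
# Counts the tx-N queue directories the kernel created for the interface.
count_tx_queues() {
    local dev=$1 root=${2:-/sys/class/net}
    local n=0 q
    for q in "$root/$dev"/queues/tx-*; do
        # an unmatched glob stays literal, so check that the directory exists
        if [ -d "$q" ]; then
            n=$((n + 1))
        fi
    done
    echo "$n"
}
```

On the i226/igc box described above, `count_tx_queues enp1s0` (placeholder interface name) should print 4 if the driver limit is as stated.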

> I would be interested in a performance comparison for both fq_codel and cake running as the native qdisc in the hw mq situation.

You'd like me to run the same test, but use fq_codel instead, correct?

Yes, with fq_codel (and then cake) attached to the 4 hardware queues, not shaped.

It does appear that BQL is fully supported by this driver.

https://github.com/ffainelli/bqlmon can be used to monitor bql interactions.
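The per-queue BQL state is exposed in sysfs (`limit` and `inflight` under `queues/tx-N/byte_queue_limits/`), so a quick snapshot is possible without building anything. A minimal sketch; the function name and the optional sysfs-root argument are hypothetical.

```bash
#!/bin/bash
# bql_report <interface> [sysfs net root]   (hypothetical helper)
# Prints the current BQL limit and in-flight bytes for each tx queue.
bql_report() {
    local dev=$1 root=${2:-/sys/class/net}
    local q bql
    for q in "$root/$dev"/queues/tx-*; do
        bql=$q/byte_queue_limits
        [ -d "$bql" ] || continue   # unmatched glob, or a queue without BQL
        printf '%s limit=%s inflight=%s\n' \
            "${q##*/}" "$(cat "$bql/limit")" "$(cat "$bql/inflight")"
    done
}
```

Running it repeatedly during a test gives only a coarse view; bqlmon remains the better tool for continuous monitoring.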

Can you give me an example of how to configure what you are asking for? I don't quite understand how you want it configured.

```bash
sysctl -w net.core.default_qdisc=fq_codel   # or cake
tc qdisc replace dev your_device root cake  # or fq_codel; installs a root qdisc so the delete below has something to remove
tc qdisc del dev your_device root           # will then reset that device to this new default
tc -s qdisc show dev your_device            # should show mq with 4 fq_codels
```
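Those four steps can be wrapped into a helper that takes the interface and the qdisc as arguments. The `set -x` trace in the test output below suggests that the script used there, start_shaping2.sh, does essentially this; the version here is a reconstruction, not that script. The function name and the `TC`/`SYSCTL` overrides are hypothetical, added so the sequence can be dry-run.

```bash
#!/bin/bash
# set_native_qdisc <interface> <fq_codel|cake>   (hypothetical helper)
# Makes <qdisc> the system default, then re-creates the interface's root so a
# multiqueue NIC gets mq with one <qdisc> instance per hardware tx queue.
# Note: the sysctl also affects any other interface that later gets a default qdisc.
set_native_qdisc() {
    local dev=$1 qdisc=$2
    local tc=${TC:-tc} sysctl=${SYSCTL:-sysctl}   # set both to echo for a dry run
    case $qdisc in
        fq_codel|cake) ;;
        *) echo "usage: set_native_qdisc <interface> <fq_codel|cake>" >&2; return 1 ;;
    esac
    "$sysctl" -w net.core.default_qdisc="$qdisc" || return
    # install a root qdisc so the delete below has something to remove
    "$tc" qdisc replace dev "$dev" root "$qdisc" || return
    # deleting the root falls back to the default: mq plus per-queue $qdisc
    "$tc" qdisc del dev "$dev" root || return
    "$tc" -s qdisc show dev "$dev"
}
```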

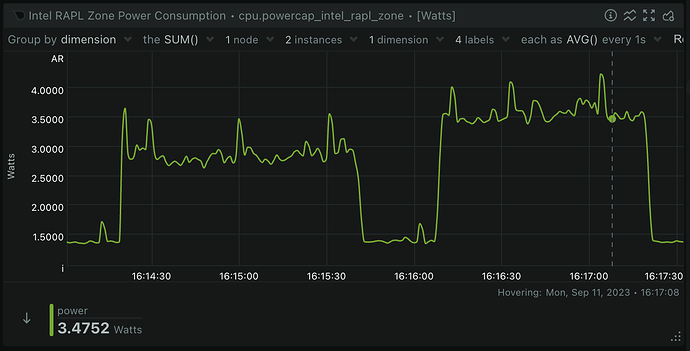

cake eats watts

Roughly 20% more. Could you post the output of tc -s qdisc, please? I wonder which cake options are in play (probably the defaults, but it would be nice to confirm).

Thanks for your detailed statistics. I was concerned that newer CPUs with a mix of performance cores (P-cores) and efficiency cores (E-cores), like the i5-1235U with its two P-cores and a maximum turbo power of 55 W, would draw far too much electricity. Reviews like https://www.notebookcheck.net/Intel-Core-i5-1235U-Processor-Benchmarks-and-Specs.589637.0.html had put me off too. Electricity is currently very expensive in Germany: 10 W drawn 24/7 costs ~35 € per year at 40 cents per kWh.
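The arithmetic behind that estimate: 10 W × 24 h × 365 days = 87.6 kWh per year, and 87.6 kWh × 0.40 €/kWh ≈ 35 €. A tiny helper (hypothetical name) for trying other wattages or tariffs:

```bash
#!/bin/bash
# annual_cost <continuous draw in watts> <price per kWh>   (hypothetical helper)
# Energy per year = W * 24 h * 365 d / 1000 (kWh); cost = kWh * price.
annual_cost() {
    awk -v w="$1" -v p="$2" 'BEGIN { printf "%.2f\n", w * 24 * 365 / 1000 * p }'
}

annual_cost 10 0.40   # → 35.04
```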

```
root@router:~# ./start_shaping2.sh test fq_codel
+ ul_if=test
+ qdisc=fq_codel
+ sysctl -w net.core.default_qdisc=fq_codel
net.core.default_qdisc = fq_codel
+ tc qdisc replace dev test root fq_codel
+ tc qdisc del dev test root
+ tc -s qdisc show dev test
qdisc mq 0: root
 Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
qdisc fq_codel 0: parent :4 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
  maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc fq_codel 0: parent :3 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
  maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc fq_codel 0: parent :2 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
  maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc fq_codel 0: parent :1 limit 10240p flows 1024 quantum 1514 target 5ms interval 100ms memory_limit 32Mb ecn drop_batch 64
 Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
  maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0
  new_flows_len 0 old_flows_len 0

root@router:~# ./start_shaping2.sh test cake
+ ul_if=test
+ qdisc=cake
+ sysctl -w net.core.default_qdisc=cake
net.core.default_qdisc = cake
+ tc qdisc replace dev test root cake
+ tc qdisc del dev test root
+ tc -s qdisc show dev test
qdisc mq 0: root
 Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
qdisc cake 0: parent :4 bandwidth unlimited diffserv3 triple-isolate nonat nowash no-ack-filter split-gso rtt 100ms raw overhead 0
 Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
 memory used: 0b of 15140Kb
 capacity estimate: 0bit
 min/max network layer size: 65535 / 0
 min/max overhead-adjusted size: 65535 / 0
 average network hdr offset: 0

                       Bulk  Best Effort        Voice
  thresh               0bit         0bit         0bit
  target                5ms          5ms          5ms
  interval            100ms        100ms        100ms
  pk_delay              0us          0us          0us
  av_delay              0us          0us          0us
  sp_delay              0us          0us          0us
  backlog                0b           0b           0b
  pkts                    0            0            0
  bytes                   0            0            0
  way_inds                0            0            0
  way_miss                0            0            0
  way_cols                0            0            0
  drops                   0            0            0
  marks                   0            0            0
  ack_drop                0            0            0
  sp_flows                0            0            0
  bk_flows                0            0            0
  un_flows                0            0            0
  max_len                 0            0            0
  quantum              1514         1514         1514

qdisc cake 0: parent :3 bandwidth unlimited diffserv3 triple-isolate nonat nowash no-ack-filter split-gso rtt 100ms raw overhead 0
 Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
 memory used: 0b of 15140Kb
 capacity estimate: 0bit
 min/max network layer size: 65535 / 0
 min/max overhead-adjusted size: 65535 / 0
 average network hdr offset: 0

                       Bulk  Best Effort        Voice
  thresh               0bit         0bit         0bit
  target                5ms          5ms          5ms
  interval            100ms        100ms        100ms
  pk_delay              0us          0us          0us
  av_delay              0us          0us          0us
  sp_delay              0us          0us          0us
  backlog                0b           0b           0b
  pkts                    0            0            0
  bytes                   0            0            0
  way_inds                0            0            0
  way_miss                0            0            0
  way_cols                0            0            0
  drops                   0            0            0
  marks                   0            0            0
  ack_drop                0            0            0
  sp_flows                0            0            0
  bk_flows                0            0            0
  un_flows                0            0            0
  max_len                 0            0            0
  quantum              1514         1514         1514

qdisc cake 0: parent :2 bandwidth unlimited diffserv3 triple-isolate nonat nowash no-ack-filter split-gso rtt 100ms raw overhead 0
 Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
 memory used: 0b of 15140Kb
 capacity estimate: 0bit
 min/max network layer size: 65535 / 0
 min/max overhead-adjusted size: 65535 / 0
 average network hdr offset: 0

                       Bulk  Best Effort        Voice
  thresh               0bit         0bit         0bit
  target                5ms          5ms          5ms
  interval            100ms        100ms        100ms
  pk_delay              0us          0us          0us
  av_delay              0us          0us          0us
  sp_delay              0us          0us          0us
  backlog                0b           0b           0b
  pkts                    0            0            0
  bytes                   0            0            0
  way_inds                0            0            0
  way_miss                0            0            0
  way_cols                0            0            0
  drops                   0            0            0
  marks                   0            0            0
  ack_drop                0            0            0
  sp_flows                0            0            0
  bk_flows                0            0            0
  un_flows                0            0            0
  max_len                 0            0            0
  quantum              1514         1514         1514

qdisc cake 0: parent :1 bandwidth unlimited diffserv3 triple-isolate nonat nowash no-ack-filter split-gso rtt 100ms raw overhead 0
 Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
 memory used: 0b of 15140Kb
 capacity estimate: 0bit
 min/max network layer size: 65535 / 0
 min/max overhead-adjusted size: 65535 / 0
 average network hdr offset: 0

                       Bulk  Best Effort        Voice
  thresh               0bit         0bit         0bit
  target                5ms          5ms          5ms
  interval            100ms        100ms        100ms
  pk_delay              0us          0us          0us
  av_delay              0us          0us          0us
  sp_delay              0us          0us          0us
  backlog                0b           0b           0b
  pkts                    0            0            0
  bytes                   0            0            0
  way_inds                0            0            0
  way_miss                0            0            0
  way_cols                0            0            0
  drops                   0            0            0
  marks                   0            0            0
  ack_drop                0            0            0
  sp_flows                0            0            0
  bk_flows                0            0            0
  un_flows                0            0            0
  max_len                 0            0            0
  quantum              1514         1514         1514
```

Indeed, the cake defaults; thanks for documenting this! So here cake does considerably more work than fq_codel (whether this additional work is actually useful here is a different question ;))

I would have liked a tc -s qdisc show after the tests completed, or during them. The same goes for a bqlmon result.

I agree: getting a tc -s qdisc pair tightly "sandwiching" the test would be great.
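A minimal sketch of such a sandwich: snapshot `tc -s qdisc` immediately before and after the load test, so the counters bracket only the test. The function name, the `.before`/`.after` file naming and the `TC` override are hypothetical; the test command is whatever produced the graphs.

```bash
#!/bin/bash
# qdisc_sandwich <interface> <output prefix> <test command> [args...]
# (hypothetical helper) Writes <prefix>.before and <prefix>.after with
# `tc -s qdisc` output taken right before and right after the test command,
# and returns the test command's exit status.
qdisc_sandwich() {
    local dev=$1 prefix=$2 rc=0
    local tc=${TC:-tc}   # TC=echo for a dry run
    shift 2
    "$tc" -s qdisc show dev "$dev" > "$prefix.before" || return
    "$@" || rc=$?
    "$tc" -s qdisc show dev "$dev" > "$prefix.after" || return
    return "$rc"
}
```

For example, `qdisc_sandwich enp1s0 cake-run1 flent rrul -H <server>`, where the interface and server are placeholders; running bqlmon alongside would cover the BQL side.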