Ingress traffic isn't being shaped at all: SQM doesn't limit the download bandwidth, and I get bufferbloat.

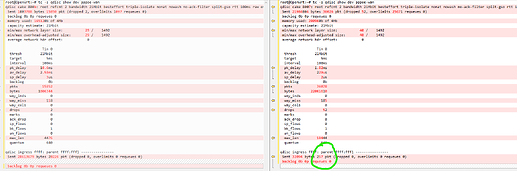

@brada4 Here are the results with software offloading enabled on both the old and the new firmware.

On Old:

```
root@OpenWrt:~# tc -s qdisc show dev pppoe-wan
qdisc cake 800b: root refcnt 2 bandwidth 21Mbit besteffort triple-isolate nonat nowash no-ack-filter split-gso rtt 100ms raw overhead 0
 Sent 1803788 bytes 15850 pkt (dropped 2, overlimits 1097 requeues 0)
 backlog 0b 0p requeues 0
 memory used: 165120b of 4Mb
 capacity estimate: 21Mbit
 min/max network layer size: 29 / 1492
 min/max overhead-adjusted size: 29 / 1492
 average network hdr offset: 0

                  Tin 0
  thresh         21Mbit
  target            5ms
  interval        100ms
  pk_delay       10.6ms
  av_delay       2.58ms
  sp_delay          3us
  backlog            0b
  pkts            15852
  bytes         1806344
  way_inds            0
  way_miss          118
  way_cols            0
  drops               2
  marks               0
  ack_drop            0
  sp_flows            0
  bk_flows            1
  un_flows            0
  max_len          4476
  quantum           640

qdisc ingress ffff: parent ffff:fff1 ----------------
 Sent 28613679 bytes 20226 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
```

On New:

```
root@OpenWrt:~# tc -s qdisc show dev pppoe-wan
qdisc cake 8007: root refcnt 2 bandwidth 21Mbit besteffort triple-isolate nonat nowash no-ack-filter split-gso rtt 100ms raw overhead 0
 Sent 21094224 bytes 24769 pkt (dropped 52, overlimits 25476 requeues 0)
 backlog 0b 0p requeues 0
 memory used: 200960b of 4Mb
 capacity estimate: 21Mbit
 min/max network layer size: 40 / 1492
 min/max overhead-adjusted size: 40 / 1492
 average network hdr offset: 0

                  Tin 0
  thresh         21Mbit
  target            5ms
  interval        100ms
  pk_delay       4.85ms
  av_delay       3.82ms
  sp_delay          2us
  backlog            0b
  pkts            24821
  bytes        21171030
  way_inds            0
  way_miss           71
  way_cols            0
  drops              52
  marks               0
  ack_drop            0
  sp_flows            1
  bk_flows            1
  un_flows            0
  max_len         10444
  quantum           640

qdisc ingress ffff: parent ffff:fff1 ----------------
 Sent 9611 bytes 96 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
```

And why is the number of packets sent so low here?
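One way to put numbers on that question is to pull the `Sent` counters out of the two `tc -s qdisc` dumps and compare them per qdisc. SQM's download shaping hangs off this ingress qdisc (sqm-scripts typically redirects it to an IFB device), so its counters show how much download traffic actually reached it. The sketch below is a minimal parser written against the output format shown in this thread; `sent_counters` is a hypothetical helper, not part of tc or sqm-scripts. Keep in mind the counters accumulate from the moment each qdisc was created, so the two dumps only compare cleanly if they cover similar test runs.

```python
import re

# "qdisc <kind> <handle> ..." starts a new qdisc section in `tc -s qdisc show`.
QDISC_RE = re.compile(r"^qdisc (\S+) (\S+)")
# "Sent <bytes> bytes <pkts> pkt (dropped <n>, overlimits <n> requeues <n>)"
SENT_RE = re.compile(r"^Sent (\d+) bytes (\d+) pkt \(dropped (\d+), overlimits (\d+)")


def sent_counters(tc_output: str) -> dict:
    """Map 'kind handle' -> (bytes, packets, dropped, overlimits) per qdisc."""
    counters = {}
    current = None
    for raw in tc_output.splitlines():
        line = raw.strip()
        header = QDISC_RE.match(line)
        if header:
            current = f"{header.group(1)} {header.group(2)}"
            continue
        sent = SENT_RE.match(line)
        # Only the first "Sent" line after a header belongs to that qdisc.
        if sent and current is not None and current not in counters:
            counters[current] = tuple(int(value) for value in sent.groups())
    return counters


# Ingress-qdisc excerpts copied from the two dumps in this thread.
old_fw = """qdisc ingress ffff: parent ffff:fff1 ----------------
 Sent 28613679 bytes 20226 pkt (dropped 0, overlimits 0 requeues 0)"""
new_fw = """qdisc ingress ffff: parent ffff:fff1 ----------------
 Sent 9611 bytes 96 pkt (dropped 0, overlimits 0 requeues 0)"""

old_bytes, old_pkts, _, _ = sent_counters(old_fw)["ingress ffff:"]
new_bytes, new_pkts, _, _ = sent_counters(new_fw)["ingress ffff:"]
print(old_pkts, new_pkts)  # 20226 96
```

96 packets on the new firmware versus 20226 on the old one is consistent with (though not proof of) most download packets no longer passing through the pppoe-wan ingress path.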

Is the output of `nft list flowtables` the same on both versions?

@AlanDias17 Do you even need offloading on a Raspberry Pi 4 Model B? It has four cores, which are fast enough for most SQM/QoS/routing processing. I would recommend configuring irqbalance properly (https://openwrt.org/docs/guide-user/services/irqbalance) and forgetting about offloading.

Given my current internet speed, I don't need this feature. However, users with connections of around 1 Gbps will definitely benefit from it. I'm currently debugging why it's not working in the newer firmware.

Sorry for the delay; I had to reinstall everything.

```
root@OpenWrt:~# nft list flowtables
table inet fw4 {
	flowtable ft {
		hook ingress priority filter
		devices = { eth0, eth1, phy0-ap0, pppoe-wan }
		flags offload
		counter
	}
}
```

(`flags offload` is displayed when hardware offloading is also ticked.)
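If you want to check this from a script rather than by eye, a small parser over the `nft list flowtables` text is enough. This is a sketch that assumes the layout shown above (one `devices = { ... }` line per flowtable, and a `flags` line that contains `offload` when hardware offload is requested); `flowtable_devices` and `has_offload_flag` are hypothetical helper names.

```python
import re
from typing import List


def flowtable_devices(nft_output: str) -> List[str]:
    """Return the device names from the first 'devices = { ... }' line."""
    match = re.search(r"devices\s*=\s*\{([^}]*)\}", nft_output)
    if match is None:
        return []
    return [name.strip() for name in match.group(1).split(",") if name.strip()]


def has_offload_flag(nft_output: str) -> bool:
    """True if a flowtable 'flags' line mentions 'offload' (hardware offload)."""
    return re.search(r"^[ \t]*flags\b.*\boffload\b", nft_output, re.MULTILINE) is not None


# Copied from the listing above (indentation normalised to spaces).
sample = """table inet fw4 {
    flowtable ft {
        hook ingress priority filter
        devices = { eth0, eth1, phy0-ap0, pppoe-wan }
        flags offload
        counter
    }
}"""

print(flowtable_devices(sample))  # ['eth0', 'eth1', 'phy0-ap0', 'pppoe-wan']
print(has_offload_flag(sample))   # True
```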

1 Gbps should work fine with this model, even with SQM running (as long as you don't abuse the hell out of it with lots of complex rules).
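To put "1 Gbps" into per-packet terms, some rough arithmetic helps. This is a back-of-the-envelope sketch, not a benchmark: it assumes every packet is a full-size 1492-byte IP packet (the `max network layer size` in the tc output above, i.e. a 1500-byte Ethernet MTU minus 8 bytes of PPPoE/PPP header) and ignores framing overhead. Real traffic mixes in small packets such as TCP ACKs, which pushes the packet rate well above this figure.

```python
# Assumptions: a fully loaded 1 Gbit/s link, every packet a full-size
# 1492-byte IP packet, framing/PPPoE overhead ignored.
LINK_BITS_PER_SECOND = 1_000_000_000
PACKET_BYTES = 1492


def packets_per_second(link_bps: float, packet_bytes: int) -> float:
    """Packets per second needed to fill the link with packets of this size."""
    return link_bps / (packet_bytes * 8)


pps = packets_per_second(LINK_BITS_PER_SECOND, PACKET_BYTES)
budget_us = 1e6 / pps  # time per packet if a single core handled all of them
print(f"{pps:,.0f} packets/s, {budget_us:.2f} us per packet")
# 83,780 packets/s, 11.94 us per packet
```

Spreading that work over the Pi's four cores (which is what a sensible irqbalance setup aims for) is what makes the per-packet budget comfortable.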

https://openwrt.org/docs/guide-user/network/traffic-shaping/sqm

SQM is incompatible with software/hardware flow offloading which bypasses part of the network stack. Be sure to uncheck those features in LuCI → Firewall to use SQM.

Offloading will always be inherently in conflict with traffic shapers and schedulers. How is that software supposed to act on packets that might not even be visible to it?

I wouldn't call this a bug. In fact, if SQM was working with offloading before, that is what I would call either a bug or a very specific side effect of how your SQM setup or some of your driver code operates.

TL;DR: SQM is not guaranteed at all to work with offloading, and if it works now, it might not work in the future. It should not be considered a bug, though, unless offloading is eventually developed to somehow fully support higher-layer packet management.

If you replace your fw4.uc with this one, https://github.com/openwrt/firewall4/blob/master/root/usr/share/ucode/fw4.uc, it will exclude physical interfaces from the flow table.

I understand, but I want to point out that the author may have changed the code in a way that broke offloading with SQM for ingress. It still works fine for egress. For now, I'll downgrade to 23.05.3 until we figure this out.

Maybe offloading didn't work for Archer devices, but based on the results I've posted above, it works in my case, right? The snapshot build sounds promising from your links. I'll give it a try tomorrow. Thank you!

I don't have a router set up for PPPoE, but my guess is that it "worked" with some caveats, like not properly (or at all) offloading frames.

As for those firewall4 files, they require no compilation or packaging, so a snapshot build is not required. Just replace them in your /usr/share folder, run `service firewall restart`, and check `nft list ruleset`.

brada4

August 3, 2024, 4:36am

Who is the author? The upstream Linux devs who fixed PPPoE offload after three years of it being buggy? "No flow offload with a qdisc" is broadly documented, even next to the checkbox.

I installed the snapshot; it didn't help. I replaced the file as you said; that didn't do the trick either. Offloading itself always works, though.

```
root@OpenWrt:~# nft list ruleset
table inet fw4 {
	flowtable ft {
		hook ingress priority filter
		devices = { eth0, eth1, pppoe-wan }
		flags offload
		counter
	}

	chain input {
		type filter hook input priority filter; policy drop;
		iif "lo" accept comment "!fw4: Accept traffic from loopback"
		ct state vmap { established : accept, related : accept } comment "!fw4: Handle inbound flows"
		tcp flags syn / fin,syn,rst,ack jump syn_flood comment "!fw4: Rate limit TCP syn packets"
		iifname "br-lan" jump input_lan comment "!fw4: Handle lan IPv4/IPv6 input traffic"
		iifname "pppoe-wan" jump input_wan comment "!fw4: Handle wan IPv4/IPv6 input traffic"
		jump handle_reject
	}

	chain forward {
		type filter hook forward priority filter; policy drop;
		meta l4proto { tcp, udp } flow add @ft
		ct state vmap { established : accept, related : accept } comment "!fw4: Handle forwarded flows"
		iifname "br-lan" jump forward_lan comment "!fw4: Handle lan IPv4/IPv6 forward traffic"
		iifname "pppoe-wan" jump forward_wan comment "!fw4: Handle wan IPv4/IPv6 forward traffic"
		jump upnp_forward comment "Hook into miniupnpd forwarding chain"
		jump handle_reject
	}

	chain output {
		type filter hook output priority filter; policy accept;
		oif "lo" accept comment "!fw4: Accept traffic towards loopback"
		ct state vmap { established : accept, related : accept } comment "!fw4: Handle outbound flows"
		oifname "br-lan" jump output_lan comment "!fw4: Handle lan IPv4/IPv6 output traffic"
		oifname "pppoe-wan" jump output_wan comment "!fw4: Handle wan IPv4/IPv6 output traffic"
	}

	chain prerouting {
		type filter hook prerouting priority filter; policy accept;
		iifname "br-lan" jump helper_lan comment "!fw4: Handle lan IPv4/IPv6 helper assignment"
	}

	chain handle_reject {
		meta l4proto tcp reject with tcp reset comment "!fw4: Reject TCP traffic"
		reject comment "!fw4: Reject any other traffic"
	}

	chain syn_flood {
		limit rate 25/second burst 50 packets return comment "!fw4: Accept SYN packets below rate-limit"
		drop comment "!fw4: Drop excess packets"
	}

	chain input_lan {
		jump accept_from_lan
	}

	chain output_lan {
		jump accept_to_lan
	}

	chain forward_lan {
		jump accept_to_wan comment "!fw4: Accept lan to wan forwarding"
		jump accept_to_lan
	}

	chain helper_lan {
	}

	chain accept_from_lan {
		iifname "br-lan" counter packets 251 bytes 28131 accept comment "!fw4: accept lan IPv4/IPv6 traffic"
	}

	chain accept_to_lan {
		oifname "br-lan" counter packets 42 bytes 5286 accept comment "!fw4: accept lan IPv4/IPv6 traffic"
	}

	chain input_wan {
		meta nfproto ipv4 udp dport 68 counter packets 0 bytes 0 accept comment "!fw4: Allow-DHCP-Renew"
		icmp type echo-request counter packets 0 bytes 0 accept comment "!fw4: Allow-Ping"
		meta nfproto ipv4 meta l4proto igmp counter packets 0 bytes 0 accept comment "!fw4: Allow-IGMP"
		meta nfproto ipv6 udp dport 546 counter packets 0 bytes 0 accept comment "!fw4: Allow-DHCPv6"
		ip6 saddr fe80::/10 icmpv6 type . icmpv6 code { mld-listener-query . no-route, mld-listener-report . no-route, mld-listener-done . no-route, mld2-listener-report . no-route } counter packets 0 bytes 0 accept comment "!fw4: Allow-MLD"
		icmpv6 type { destination-unreachable, time-exceeded, echo-request, echo-reply, nd-router-solicit, nd-router-advert } limit rate 1000/second burst 5 packets counter packets 0 bytes 0 accept comment "!fw4: Allow-ICMPv6-Input"
		icmpv6 type . icmpv6 code { packet-too-big . no-route, parameter-problem . no-route, nd-neighbor-solicit . no-route, nd-neighbor-advert . no-route, parameter-problem . admin-prohibited } limit rate 1000/second burst 5 packets counter packets 0 bytes 0 accept comment "!fw4: Allow-ICMPv6-Input"
		jump reject_from_wan
	}

	chain output_wan {
		jump accept_to_wan
	}

	chain forward_wan {
		icmpv6 type { destination-unreachable, time-exceeded, echo-request, echo-reply } limit rate 1000/second burst 5 packets counter packets 0 bytes 0 accept comment "!fw4: Allow-ICMPv6-Forward"
		icmpv6 type . icmpv6 code { packet-too-big . no-route, parameter-problem . no-route, parameter-problem . admin-prohibited } limit rate 1000/second burst 5 packets counter packets 0 bytes 0 accept comment "!fw4: Allow-ICMPv6-Forward"
		meta l4proto esp counter packets 0 bytes 0 jump accept_to_lan comment "!fw4: Allow-IPSec-ESP"
		udp dport 500 counter packets 0 bytes 0 jump accept_to_lan comment "!fw4: Allow-ISAKMP"
		jump reject_to_wan
	}

	chain accept_to_wan {
		meta nfproto ipv4 oifname "pppoe-wan" ct state invalid counter packets 8 bytes 320 drop comment "!fw4: Prevent NAT leakage"
		oifname "pppoe-wan" counter packets 309 bytes 74299 accept comment "!fw4: accept wan IPv4/IPv6 traffic"
	}

	chain reject_from_wan {
		iifname "pppoe-wan" counter packets 14 bytes 1105 jump handle_reject comment "!fw4: reject wan IPv4/IPv6 traffic"
	}

	chain reject_to_wan {
		oifname "pppoe-wan" counter packets 0 bytes 0 jump handle_reject comment "!fw4: reject wan IPv4/IPv6 traffic"
	}

	chain dstnat {
		type nat hook prerouting priority dstnat; policy accept;
		jump upnp_prerouting comment "Hook into miniupnpd prerouting chain"
	}

	chain srcnat {
		type nat hook postrouting priority srcnat; policy accept;
		oifname "pppoe-wan" jump srcnat_wan comment "!fw4: Handle wan IPv4/IPv6 srcnat traffic"
		jump upnp_postrouting comment "Hook into miniupnpd postrouting chain"
	}

	chain srcnat_wan {
		meta nfproto ipv4 masquerade comment "!fw4: Masquerade IPv4 wan traffic"
	}

	chain raw_prerouting {
		type filter hook prerouting priority raw; policy accept;
	}

	chain raw_output {
		type filter hook output priority raw; policy accept;
	}

	chain mangle_prerouting {
		type filter hook prerouting priority mangle; policy accept;
	}

	chain mangle_postrouting {
		type filter hook postrouting priority mangle; policy accept;
		oifname "pppoe-wan" tcp flags syn / fin,syn,rst tcp option maxseg size set rt mtu comment "!fw4: Zone wan IPv4/IPv6 egress MTU fixing"
	}

	chain mangle_input {
		type filter hook input priority mangle; policy accept;
	}

	chain mangle_output {
		type route hook output priority mangle; policy accept;
	}

	chain mangle_forward {
		type filter hook forward priority mangle; policy accept;
		iifname "pppoe-wan" tcp flags syn / fin,syn,rst tcp option maxseg size set rt mtu comment "!fw4: Zone wan IPv4/IPv6 ingress MTU fixing"
	}

	chain upnp_forward {
	}

	chain upnp_prerouting {
	}

	chain upnp_postrouting {
	}
}
```
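The two `mangle_*` rules near the end of this ruleset clamp the MSS of TCP SYN packets to the route MTU (`tcp option maxseg size set rt mtu`). As far as we understand nftables' `rt mtu` in this context, it yields the route MTU minus the IP and TCP header sizes. The sketch below just does that arithmetic for the 1492-byte PPPoE MTU, assuming headers without options; it is an illustration, not nftables' own code.

```python
# PPPoE adds 8 bytes (6-byte PPPoE header + 2-byte PPP protocol field),
# so a 1500-byte Ethernet MTU leaves 1492 bytes for the IP packet.
ETHERNET_MTU = 1500
PPPOE_OVERHEAD = 8
IPV4_HEADER = 20  # assumes no IP options
IPV6_HEADER = 40  # assumes no extension headers
TCP_HEADER = 20   # assumes no TCP options


def clamped_mss(mtu: int, ip_header: int) -> int:
    """Largest TCP payload per segment that fits in one packet of size mtu."""
    return mtu - ip_header - TCP_HEADER


pppoe_mtu = ETHERNET_MTU - PPPOE_OVERHEAD
print(pppoe_mtu)                            # 1492
print(clamped_mss(pppoe_mtu, IPV4_HEADER))  # 1452
print(clamped_mss(pppoe_mtu, IPV6_HEADER))  # 1432
```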

brada4

August 3, 2024, 9:51am

I don't understand your obsession with hardware offload. The file-replacement fix I mentioned only works with software offload.

I am confused; I thought the Raspberry Pi 4B doesn't even have hardware offload for NAT or PPPoE...

brada4

August 3, 2024, 9:54am

Yep, it just falls back to software offload with a different set of devices.
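Comparing the two flowtable device lists posted in this thread makes the "different set of devices" concrete. The sketch below diffs them with plain set operations; the lists are copied from the posts, while treating `eth0` and `eth1` as the physical ports is an assumption about this particular Raspberry Pi setup, and `compare_devices` is a hypothetical helper.

```python
# Device lists copied from the two flowtable listings in this thread
# (both showed "flags offload").
first_listing = {"eth0", "eth1", "phy0-ap0", "pppoe-wan"}
after_fw4_swap = {"eth0", "eth1", "pppoe-wan"}  # snapshot + replaced fw4.uc

# Assumption: eth0/eth1 are the physical Ethernet ports on this router.
physical_ports = {"eth0", "eth1"}


def compare_devices(before: set, after: set, physical: set) -> dict:
    """Summarise which flowtable members changed and which physical ports remain."""
    return {
        "removed": sorted(before - after),
        "added": sorted(after - before),
        "physical_still_listed": sorted(after & physical),
    }


print(compare_devices(first_listing, after_fw4_swap, physical_ports))
# {'removed': ['phy0-ap0'], 'added': [], 'physical_still_listed': ['eth0', 'eth1']}
```

Only the Wi-Fi interface dropped out; eth0 and eth1 are still flowtable members while the hardware-offload flag is set.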

@moeller0, I'm not talking about hardware offload at all! Software offload works on the RPi4 along with SQM. It was fine before the firmware upgrade. However, after the upgrade, SQM on the PPPoE interface no longer works when software offload is enabled. This wasn't the case previously.

I'm pointing this out because there might be a bug. Is there an error in the recent code? Can it be patched? And why does this issue affect only ingress traffic and not egress?

brada4

August 3, 2024, 12:34pm

Software offload never worked with PPPoE, and as a consequence every packet went through the slow path, including the qdisc.

Oh damn, then I don't understand why I get this result:

```
root@OpenWrt:~# conntrack -L -u offload
tcp 6 src=2407:8700:0:5070:f9ec:eaff:f748:520b dst=2620:1ec:bdf::68 sport=60857 dport=443 packets=13 bytes=4035 src=2620:1ec:bdf::68 dst=2407:8700:0:5070:f9ec:eaff:f748:520b sport=443 dport=60857 packets=16 bytes=9153 [OFFLOAD] mark=0 use=2
tcp 6 src=192.168.1.100 dst=172.105.109.165 sport=61783 dport=4222 packets=118 bytes=18069 src=172.105.109.165 dst=100.64.55.195 sport=4222 dport=61783 packets=118 bytes=5152 [OFFLOAD] mark=0 use=2
tcp 6 src=192.168.1.100 dst=151.101.194.133 sport=60854 dport=443 packets=14 bytes=3123 src=151.101.194.133 dst=100.64.55.195 sport=443 dport=60854 packets=26 bytes=24252 [OFFLOAD] mark=0 use=2
tcp 6 src=192.168.1.100 dst=151.101.194.133 sport=60852 dport=443 packets=11 bytes=2982 src=151.101.194.133 dst=100.64.55.195 sport=443 dport=60852 packets=15 bytes=6240 [OFFLOAD] mark=0 use=2
tcp 6 src=192.168.1.100 dst=151.101.1.194 sport=60884 dport=443 packets=229 bytes=74878 src=151.101.1.194 dst=100.64.55.195 sport=443 dport=60884 packets=232 bytes=110526 [OFFLOAD] mark=0 use=2
tcp 6 src=192.168.1.100 dst=20.212.88.117 sport=60531 dport=443 packets=35 bytes=6998 src=20.212.88.117 dst=100.64.55.195 sport=443 dport=60531 packets=46 bytes=9042 [OFFLOAD] mark=0 use=2
udp 17 src=192.168.1.101 dst=35.190.43.134 sport=57445 dport=443 packets=206 bytes=105850 src=35.190.43.134 dst=100.64.55.195 sport=443 dport=57445 packets=170 bytes=35241 [OFFLOAD] mark=0 use=2
tcp 6 src=192.168.1.100 dst=204.79.197.203 sport=60831 dport=443 packets=78 bytes=27432 src=204.79.197.203 dst=100.64.55.195 sport=443 dport=60831 packets=143 bytes=157796 [OFFLOAD] mark=0 use=2
tcp 6 src=2407:8700:0:5070:f9ec:eaff:f748:520b dst=2606:4700::6812:b2b sport=60848 dport=443 packets=11 bytes=1562 src=2606:4700::6812:b2b dst=2407:8700:0:5070:f9ec:eaff:f748:520b sport=443 dport=60848 packets=12 bytes=6060 [OFFLOAD] mark=0 use=2
tcp 6 src=2407:8700:0:5070:f9ec:eaff:f748:520b dst=2606:4700::6812:1f02 sport=60773 dport=443 packets=33 bytes=24068 src=2606:4700::6812:1f02 dst=2407:8700:0:5070:f9ec:eaff:f748:520b sport=443 dport=60773 packets=48 bytes=11622 [OFFLOAD] mark=0 use=2
tcp 6 src=192.168.1.105 dst=15.164.43.102 sport=49153 dport=8883 packets=802 bytes=181487 src=15.164.43.102 dst=100.64.55.195 sport=8883 dport=49153 packets=681 bytes=50094 [OFFLOAD] mark=0 use=2
tcp 6 src=2407:8700:0:5070:f9ec:eaff:f748:520b dst=2606:4700:3030::6815:30c7 sport=60931 dport=443 packets=7 bytes=854 src=2606:4700:3030::6815:30c7 dst=2407:8700:0:5070:f9ec:eaff:f748:520b sport=443 dport=60931 packets=7 bytes=4098 [OFFLOAD] mark=0 use=2
tcp 6 src=192.168.1.100 dst=185.199.110.153 sport=60807 dport=443 packets=13 bytes=1418 src=185.199.110.153 dst=100.64.55.195 sport=443 dport=60807 packets=15 bytes=4832 [OFFLOAD] mark=0 use=2
tcp 6 src=192.168.1.100 dst=151.101.1.194 sport=60882 dport=443 packets=235 bytes=77149 src=151.101.1.194 dst=100.64.55.195 sport=443 dport=60882 packets=236 bytes=113680 [OFFLOAD] mark=0 use=2
tcp 6 src=192.168.1.100 dst=151.101.2.133 sport=60856 dport=443 packets=11 bytes=3048 src=151.101.2.133 dst=100.64.55.195 sport=443 dport=60856 packets=14 bytes=4719 [OFFLOAD] mark=0 use=2
tcp 6 src=192.168.1.100 dst=157.90.91.74 sport=60578 dport=443 packets=42 bytes=3765 src=157.90.91.74 dst=100.64.55.195 sport=443 dport=60578 packets=51 bytes=14801 [OFFLOAD] mark=0 use=2
tcp 6 src=192.168.1.108 dst=20.157.85.165 sport=58376 dport=1883 packets=139 bytes=11612 src=20.157.85.165 dst=100.64.55.195 sport=1883 dport=58376 packets=126 bytes=6720 [OFFLOAD] mark=0 use=3
tcp 6 src=192.168.1.101 dst=64.233.170.188 sport=39948 dport=5228 packets=497 bytes=31085 src=64.233.170.188 dst=100.64.55.195 sport=5228 dport=39948 packets=537 bytes=465795 [OFFLOAD] mark=0 use=2
tcp 6 src=2407:8700:0:5070:f9ec:eaff:f748:520b dst=2606:4700::6812:a2b sport=60929 dport=443 packets=10 bytes=1538 src=2606:4700::6812:a2b dst=2407:8700:0:5070:f9ec:eaff:f748:520b sport=443 dport=60929 packets=12 bytes=5570 [OFFLOAD] mark=0 use=2
```
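A quick way to gauge how much traffic belongs to offloaded flows is to sum the reply-direction counters of the `[OFFLOAD]` entries: for connections initiated from the LAN, the reply direction is the download side that the ingress shaper is supposed to see. This sketch assumes conntrack accounting is enabled (otherwise the `packets=`/`bytes=` fields are absent) and that each line lists the original direction before the reply direction, as in the output above; `offload_totals` is a hypothetical helper.

```python
import re
from typing import Tuple

# Each conntrack line carries two counter pairs: original direction first,
# reply direction second (only present when conntrack accounting is on).
COUNTER_RE = re.compile(r"packets=(\d+) bytes=(\d+)")


def offload_totals(conntrack_output: str) -> Tuple[int, int, int]:
    """Return (flows, reply_packets, reply_bytes) summed over [OFFLOAD] lines."""
    flows = reply_packets = reply_bytes = 0
    for line in conntrack_output.splitlines():
        if "[OFFLOAD]" not in line:
            continue
        counters = COUNTER_RE.findall(line)
        if len(counters) < 2:
            continue  # no accounting data on this line
        flows += 1
        reply_packets += int(counters[1][0])
        reply_bytes += int(counters[1][1])
    return flows, reply_packets, reply_bytes


# Two entries copied from the listing above.
sample = """\
tcp 6 src=192.168.1.100 dst=172.105.109.165 sport=61783 dport=4222 packets=118 bytes=18069 src=172.105.109.165 dst=100.64.55.195 sport=4222 dport=61783 packets=118 bytes=5152 [OFFLOAD] mark=0 use=2
tcp 6 src=192.168.1.101 dst=64.233.170.188 sport=39948 dport=5228 packets=497 bytes=31085 src=64.233.170.188 dst=100.64.55.195 sport=5228 dport=39948 packets=537 bytes=465795 [OFFLOAD] mark=0 use=2
"""
print(offload_totals(sample))  # (2, 655, 470947)
```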

brada4

August 3, 2024, 1:07pm

Because, in fact, the offload states used to be established and torn down between packets. It was never steady fast-path forwarding of all packets.
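The difference between the two behaviours can be illustrated with a toy model of a single flow. It is not kernel code: `entry_lifetime` is a made-up knob for how many fast-path packets a flowtable entry survives before being torn down. The model also simplifies setup by letting one slow-path packet (re)create the entry, whereas the real `flow add @ft` rule only offloads a connection once conntrack considers it established, so in practice a few packets take the slow path first.

```python
from typing import Optional


def count_slow_path(packets: int, entry_lifetime: Optional[int]) -> int:
    """Count packets of one flow that take the slow path, i.e. reach the qdisc.

    entry_lifetime: fast-path packets a flowtable entry carries before it is
    torn down; None means the entry persists once created.
    """
    slow = 0
    budget = 0          # fast-path packets the current entry can still carry
    has_entry = False
    for _ in range(packets):
        if has_entry and (entry_lifetime is None or budget > 0):
            if entry_lifetime is not None:
                budget -= 1
            continue    # fast path: the packet never reaches the shaper
        slow += 1       # slow path: full stack, including the qdisc
        has_entry = True                # the forward chain re-adds the flow
        budget = entry_lifetime or 0
    return slow


print(count_slow_path(1000, entry_lifetime=0))     # 1000: torn down between packets
print(count_slow_path(1000, entry_lifetime=None))  # 1: steady fast path after setup
```

With entries torn down between packets, the shaper still sees every packet, so SQM appears to work; once entries persist, only the setup packets are shaped.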