Hey,

I was perfectly happy with SQM until "today", when I noticed that even a slow download (half of my connection speed) causes Stadia to stutter.

I'm running OpenWrt 19.07.4 on a ZyXEL NBG6617.

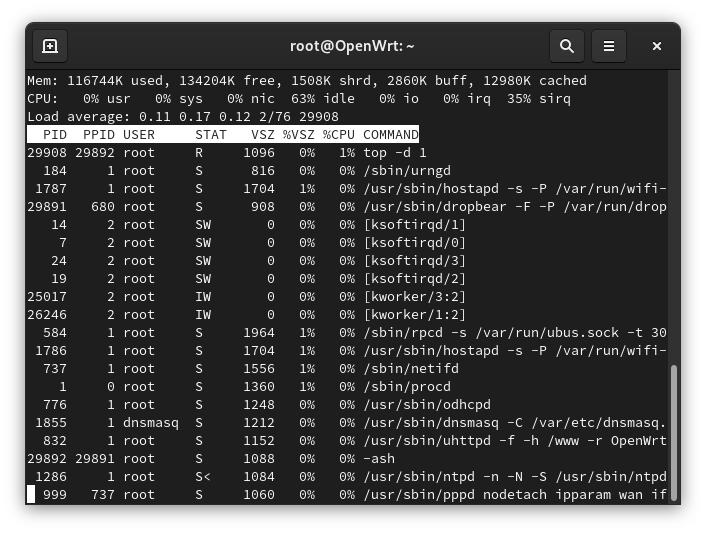

Here's some info:

From the PPPoE log:

```
Mon Sep 7 02:36:13 2020 daemon.info pppd[8047]: Remote message: SRU=41296#SRD=109974#
```

SQM config (`/etc/config/sqm`):

```
config queue 'eth1'
	option interface 'pppoe-wan'
	option debug_logging '0'
	option verbosity '5'
	option qdisc 'cake'
	option script 'piece_of_cake.qos'
	option linklayer 'ethernet'
	option overhead '34'
	option qdisc_advanced '1'
	option squash_dscp '1'
	option squash_ingress '1'
	option ingress_ecn 'ECN'
	option egress_ecn 'NOECN'
	option qdisc_really_really_advanced '1'
	option enabled '1'
	option download '98976'
	option upload '40883'
	option iqdisc_opts 'diffserv4 nat dual-dsthost ingress'
	option eqdisc_opts 'diffserv4 nat dual-srchost'
```
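For comparison with the sync rates in the pppd log line, here is a quick Python check of how close the configured shaper rates are to sync. It assumes `SRU`/`SRD` are the upstream/downstream sync rates in kbit/s; that is my reading of the ISP's remote message, the log itself doesn't spell it out.

```python
# Assumption: SRU/SRD in the pppd "Remote message" are upstream/downstream
# sync rates in kbit/s.
SYNC_UP_KBIT = 41296
SYNC_DOWN_KBIT = 109974

# Shaper rates from /etc/config/sqm (option upload / option download).
SHAPER_UP_KBIT = 40883
SHAPER_DOWN_KBIT = 98976


def percent_of_sync(shaper_kbit: int, sync_kbit: int) -> float:
    """Return the shaper rate as a percentage of the sync rate."""
    return 100.0 * shaper_kbit / sync_kbit


if __name__ == "__main__":
    up = percent_of_sync(SHAPER_UP_KBIT, SYNC_UP_KBIT)
    down = percent_of_sync(SHAPER_DOWN_KBIT, SYNC_DOWN_KBIT)
    print(f"upload:   {up:.1f}% of sync")
    print(f"download: {down:.1f}% of sync")
```

With these numbers the upload shaper sits at about 99 % of sync and the download shaper at about 90 %.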

Active qdiscs:

```
qdisc noqueue 0: dev lo root refcnt 2
qdisc mq 0: dev eth0 root
qdisc fq_codel 0: dev eth0 parent :4 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
qdisc fq_codel 0: dev eth0 parent :3 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
qdisc fq_codel 0: dev eth0 parent :2 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
qdisc fq_codel 0: dev eth0 parent :1 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
qdisc mq 0: dev eth1 root
qdisc fq_codel 0: dev eth1 parent :4 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
qdisc fq_codel 0: dev eth1 parent :3 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
qdisc fq_codel 0: dev eth1 parent :2 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
qdisc fq_codel 0: dev eth1 parent :1 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
qdisc noqueue 0: dev br-lan root refcnt 2
qdisc noqueue 0: dev eth1.7 root refcnt 2
qdisc cake 8031: dev pppoe-wan root refcnt 2 bandwidth 40883Kbit diffserv4 dual-srchost nat nowash no-ack-filter split-gso rtt 100.0ms noatm overhead 34
qdisc ingress ffff: dev pppoe-wan parent ffff:fff1 ----------------
qdisc cake 8032: dev ifb4pppoe-wan root refcnt 2 bandwidth 98976Kbit diffserv4 dual-dsthost nat wash ingress no-ack-filter split-gso rtt 100.0ms noatm overhead 34
```
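`overhead 34` makes cake charge 34 extra bytes per packet against the shaper, which is why the cake statistics report the `min/max overhead-adjusted size` as exactly 34 bytes more than the network-layer sizes (62/1526 vs 28/1492). As a rough Python sketch of what that costs, here is a best-case TCP goodput estimate at the two shaper rates. It assumes full 1492-byte IPv4 packets with 20-byte IP and 20-byte TCP headers and no options; those header sizes are my assumption, the stats don't show them.

```python
# Values from the cake config/stats in this post.
OVERHEAD_BYTES = 34        # option overhead '34'
MAX_NETWORK_BYTES = 1492   # max network layer size reported by cake

# Assumptions for the estimate: plain IPv4 + TCP headers, no options.
IPV4_HEADER_BYTES = 20
TCP_HEADER_BYTES = 20


def adjusted_size(network_bytes: int, overhead: int = OVERHEAD_BYTES) -> int:
    """Size cake accounts against the shaper: network-layer size + overhead."""
    return network_bytes + overhead


def tcp_goodput_kbit(shaper_kbit: float, network_bytes: int = MAX_NETWORK_BYTES) -> float:
    """Best-case TCP payload rate if every packet is full-size."""
    payload = network_bytes - IPV4_HEADER_BYTES - TCP_HEADER_BYTES
    return shaper_kbit * payload / adjusted_size(network_bytes)


if __name__ == "__main__":
    print(adjusted_size(28), adjusted_size(1492))
    print(f"upload goodput   ~{tcp_goodput_kbit(40883):.0f} kbit/s")
    print(f"download goodput ~{tcp_goodput_kbit(98976):.0f} kbit/s")
```

Treat the result as a best-case ceiling for full-size packets, not a prediction.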

Output of `tc -s qdisc`:

```
qdisc noqueue 0: dev lo root refcnt 2
 Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
qdisc mq 0: dev eth0 root
 Sent 3989931935710 bytes 3210730344 pkt (dropped 159, overlimits 0 requeues 3573)
 backlog 0b 0p requeues 3573
qdisc fq_codel 0: dev eth0 parent :4 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
 Sent 75129428 bytes 405240 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
  maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc fq_codel 0: dev eth0 parent :3 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
 Sent 1828256686075 bytes 1408559667 pkt (dropped 158, overlimits 0 requeues 2734)
 backlog 0b 0p requeues 2734
  maxpacket 1514 drop_overlimit 0 new_flow_count 2160 ecn_mark 0
  new_flows_len 1 old_flows_len 16
qdisc fq_codel 0: dev eth0 parent :2 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
 Sent 1144271842886 bytes 966054880 pkt (dropped 0, overlimits 0 requeues 529)
 backlog 0b 0p requeues 529
  maxpacket 3028 drop_overlimit 0 new_flow_count 1502 ecn_mark 0
  new_flows_len 0 old_flows_len 2
qdisc fq_codel 0: dev eth0 parent :1 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
 Sent 1017328277321 bytes 835710557 pkt (dropped 1, overlimits 0 requeues 310)
 backlog 0b 0p requeues 310
  maxpacket 1514 drop_overlimit 0 new_flow_count 1779 ecn_mark 0
  new_flows_len 1 old_flows_len 24
qdisc mq 0: dev eth1 root
 Sent 217896645257 bytes 1719160849 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
qdisc fq_codel 0: dev eth1 parent :4 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
 Sent 35257641 bytes 380436 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
  maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc fq_codel 0: dev eth1 parent :3 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
 Sent 50978630783 bytes 383593218 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
  maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc fq_codel 0: dev eth1 parent :2 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
 Sent 58714865001 bytes 474800081 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
  maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0
  new_flows_len 0 old_flows_len 0
qdisc fq_codel 0: dev eth1 parent :1 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
 Sent 108167891832 bytes 860387114 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
  maxpacket 164 drop_overlimit 0 new_flow_count 2 ecn_mark 0
  new_flows_len 1 old_flows_len 0
qdisc noqueue 0: dev br-lan root refcnt 2
 Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
qdisc noqueue 0: dev eth1.7 root refcnt 2
 Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
```

Upload (egress) cake on `pppoe-wan`:

```
qdisc cake 8031: dev pppoe-wan root refcnt 2 bandwidth 40883Kbit diffserv4 dual-srchost nat nowash no-ack-filter split-gso rtt 100.0ms noatm overhead 34
 Sent 1359784600 bytes 13624863 pkt (dropped 217, overlimits 168124 requeues 0)
 backlog 0b 0p requeues 0
 memory used: 155008b of 4Mb
 capacity estimate: 40883Kbit
 min/max network layer size: 28 / 1492
 min/max overhead-adjusted size: 62 / 1526
 average network hdr offset: 0

                   Bulk  Best Effort        Video        Voice
  thresh       2555Kbit    40883Kbit    20441Kbit    10220Kbit
  target          7.1ms        5.0ms        5.0ms        5.0ms
  interval      102.1ms      100.0ms      100.0ms      100.0ms
  pk_delay          0us        687us        193us        1.6ms
  av_delay          0us        112us         87us         76us
  sp_delay          0us         16us         20us         15us
  backlog            0b           0b           0b           0b
  pkts                0     13623219          414         1447
  bytes               0   1359733177        31464       269511
  way_inds            0       218604            0            0
  way_miss            0       104327          238          268
  way_cols            0            0            0            0
  drops               0          217            0            0
  marks               0            0            0            0
  ack_drop            0            0            0            0
  sp_flows            0            2            1            1
  bk_flows            0            1            0            0
  un_flows            0            0            0            0
  max_len             0        24820           76          576
  quantum           300         1247          623          311
```
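The `thresh` and `quantum` rows are derived from the shaper bandwidth rather than set separately. The Python sketch below reproduces both rows for this egress instance and for the ingress instance further down. It assumes diffserv4 sets the Bulk, Best Effort, Video and Voice thresholds at 1/16, 1, 1/2 and 1/4 of the shaper rate, and that the per-flow quantum is the tin rate in bytes/s shifted right by 12, clamped to 300..1514 bytes. Both rules are my reading of the Linux `sch_cake` source, so treat them as assumptions; they do reproduce every value in this output.

```python
# Assumption (my reading of the Linux sch_cake source, not shown in this post):
# diffserv4 tin thresholds are the shaper rate shifted right by these amounts.
TIN_SHIFTS = {"Bulk": 4, "Best Effort": 0, "Video": 1, "Voice": 2}


def tin_table(bandwidth_kbit: int) -> dict[str, tuple[int, int]]:
    """Return {tin: (thresh_kbit, quantum_bytes)} for one diffserv4 cake instance."""
    rate_bytes = bandwidth_kbit * 1000 // 8  # shaper rate in bytes/s
    table = {}
    for tin, shift in TIN_SHIFTS.items():
        tin_rate = rate_bytes >> shift                 # tin threshold in bytes/s
        thresh_kbit = tin_rate * 8 // 1000             # back to kbit/s, truncated
        quantum = max(min(tin_rate >> 12, 1514), 300)  # assumed clamp: 300..1514 bytes
        table[tin] = (thresh_kbit, quantum)
    return table


if __name__ == "__main__":
    for direction, bandwidth in (("egress", 40883), ("ingress", 98976)):
        print(direction)
        for tin, (thresh, quantum) in tin_table(bandwidth).items():
            print(f"  {tin:<12} thresh {thresh}Kbit  quantum {quantum}")
```

The clamp explains why Bulk sits at the 300-byte floor in both directions and why ingress Best Effort is capped at 1514.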

Download (ingress) side, shaped by cake on `ifb4pppoe-wan`:

```
qdisc ingress ffff: dev pppoe-wan parent ffff:fff1 ----------------
 Sent 37365882268 bytes 31369039 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
qdisc cake 8032: dev ifb4pppoe-wan root refcnt 2 bandwidth 98976Kbit diffserv4 dual-dsthost nat wash ingress no-ack-filter split-gso rtt 100.0ms noatm overhead 34
 Sent 37321282381 bytes 31337775 pkt (dropped 31264, overlimits 15642863 requeues 0)
 backlog 0b 0p requeues 0
 memory used: 2112Kb of 4948800b
 capacity estimate: 98976Kbit
 min/max network layer size: 28 / 1492
 min/max overhead-adjusted size: 62 / 1526
 average network hdr offset: 0

                   Bulk  Best Effort        Video        Voice
  thresh       6186Kbit    98976Kbit    49488Kbit    24744Kbit
  target          5.0ms        5.0ms        5.0ms        5.0ms
  interval      100.0ms      100.0ms      100.0ms      100.0ms
  pk_delay          0us        1.2ms          0us        4.7ms
  av_delay          0us        150us          0us        243us
  sp_delay          0us         21us          0us         23us
  backlog            0b           0b           0b           0b
  pkts                0     31358309            0        10730
  bytes               0  37362815869            0      3066399
  way_inds            0       328716            0            0
  way_miss            0       115424            0           78
  way_cols            0            0            0            0
  drops               0        31264            0            0
  marks               0            2            0            0
  ack_drop            0            0            0            0
  sp_flows            0            1            0            1
  bk_flows            0            1            0            0
  un_flows            0            0            0            0
  max_len             0         1492            0         1383
  quantum           300         1514         1510          755
```
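To put the drop counters in proportion, this Python sketch parses the two cake `Sent` lines and reports drops as a share of packets sent. The regex is written against the exact line format in this output; treat it as an assumption for other `tc` versions.

```python
import re

# "Sent" lines copied from the two cake instances in this post.
SENT_LINES = {
    "egress cake (pppoe-wan)": "Sent 1359784600 bytes 13624863 pkt (dropped 217, overlimits 168124 requeues 0)",
    "ingress cake (ifb4pppoe-wan)": "Sent 37321282381 bytes 31337775 pkt (dropped 31264, overlimits 15642863 requeues 0)",
}

SENT_RE = re.compile(
    r"Sent (?P<bytes>\d+) bytes (?P<pkts>\d+) pkt "
    r"\(dropped (?P<dropped>\d+), overlimits (?P<overlimits>\d+) requeues (?P<requeues>\d+)\)"
)


def parse_sent(line: str) -> dict[str, int]:
    """Parse one tc 'Sent ...' statistics line into integer counters."""
    m = SENT_RE.search(line)
    if m is None:
        raise ValueError(f"not a tc 'Sent' line: {line!r}")
    return {key: int(value) for key, value in m.groupdict().items()}


def drop_percent(stats: dict[str, int]) -> float:
    """Dropped packets as a percentage of packets sent."""
    return 100.0 * stats["dropped"] / stats["pkts"] if stats["pkts"] else 0.0


if __name__ == "__main__":
    for name, line in SENT_LINES.items():
        s = parse_sent(line)
        print(f"{name}: {s['dropped']} drops in {s['pkts']} pkts = {drop_percent(s):.4f}%")
```

On these counters the ingress instance dropped roughly 0.1 % of packets and the egress instance far less.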