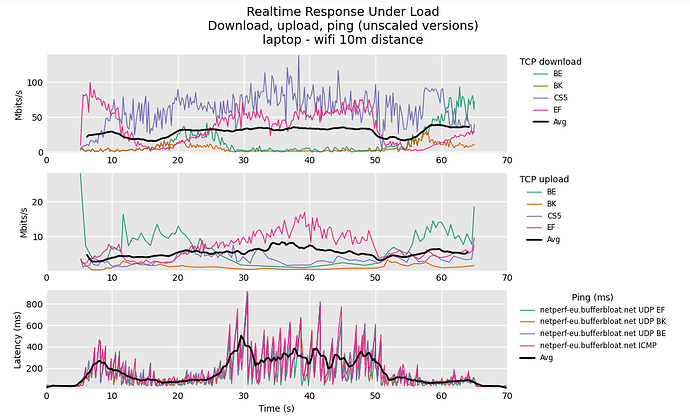

I recently switched from rrul over to rrul_var, so I can easily change the number of flows as well as the DSCPs per flow:

echo "IPv4" ; date ; ping -c 10 netperf-eu.bufferbloat.net ; ./run-flent --ipv4 -l 60 -H netperf-eu.bufferbloat.net rrul_var --remote-metadata=root@192.168.42.1 --test-parameter=cpu_stats_hosts=root@192.168.42.1 --socket-stats --step-size=.05 --test-parameter bidir_streams=8 --test-parameter markings=CS0,CS1,CS2,CS3,CS4,CS5,CS6,CS7 --test-parameter ping_hosts=1.1.1.1 -D . -t IPv4_DUT_2_netperf-eu.bufferbloat.net --log-file

ping -c 10 netperf-eu.bufferbloat.net:

This just collects unloaded RTT data to give an idea of what to expect.

-l 60:

Run for a minute. IMHO running tests longer than the typical 10-20 seconds can be quite revealing, but when using infrastructure paid for by others I think it is courteous not to go overboard here.

--test-parameter=cpu_stats_hosts=root@192.168.42.1:

This will get CPU usage information from the router, useful to diagnose CPU overload, although it currently only reports total CPU usage, which for multicore routers is less useful.

--step-size=.05:

Record a data point every 0.05 seconds. If the rate is high enough, this finer resolution allows nicer plots.

--socket-stats:

This only works if flent is run under Linux, but it records relevant per-flow/connection information such as the sRTT for TCP flows. That can be quite interesting, as it gives an idea about intra-flow or self-congestion.

--test-parameter bidir_streams=8:

What it says: the number of flows per direction.

--test-parameter markings=CS0,CS1,CS2,CS3,CS4,CS5,CS6,CS7:

Set the DSCP marking for each flow. Either use the few known symbolic names or use numbers. The numbers are decimal TOS values, so if you work from decimal DSCP numbers, just multiply by 4 to make up for the 2-bit difference in length.

markings=0,32,64,96,128,160,192,224 should be equivalent to markings=CS0,CS1,CS2,CS3,CS4,CS5,CS6,CS7
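The multiply-by-4 rule can be sketched as a small shell helper. This is only an illustration: `dscp_to_markings` is a made-up name and not part of flent. It takes decimal DSCP values and prints the comma-separated decimal TOS list to put after `markings=`.

```shell
#!/bin/sh
# Hypothetical helper (not part of flent): turn decimal DSCP values
# into the comma-separated decimal TOS list used for markings=...
dscp_to_markings() {
    out=""
    for dscp in "$@"; do
        # DSCP is the upper 6 bits of the 8-bit TOS byte,
        # so shifting left by 2 bits is the same as multiplying by 4.
        tos=$(( dscp * 4 ))
        # Append with a comma separator, except before the first entry.
        out="${out:+$out,}$tos"
    done
    printf '%s\n' "$out"
}

# CS0..CS7 correspond to DSCP 0, 8, 16, ..., 56
dscp_to_markings 0 8 16 24 32 40 48 56
# prints 0,32,64,96,128,160,192,224
```

The result can then be passed as `--test-parameter markings=$(dscp_to_markings 0 8 16 24 32 40 48 56)`, with one entry per flow.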

--test-parameter ping_hosts=1.1.1.1:

Also ping this specific host, which can be useful for reference.

--remote-metadata=root@192.168.42.1:

192.168.42.1 is my router running sqm. This collects the tc -s qdisc output from before and after the test. Replace it with the IP of your own router.

--log-file:

Also create a log file for the flent run, which can be useful for debugging.
