I went and read a couple of your posts on the subject. I think that as more people move to x86_64 platforms, these issues will come up more often and dissatisfaction with network performance will come to the fore, since the default OS and hardware configuration is largely optimized for use cases where the connection terminates on the host, not for a router use case.

This thread was originally posted 3 years ago, when I had the previous iteration of my hardware platform, which used igb drivers and Intel i354 controllers. When upgrading, I chose a more capable platform with network hardware (Intel X553) that uses ixgbe drivers. On that hardware, misconfiguration magnifies the poor-performance problem by literally an order of magnitude.

There was quite a steep learning curve involved in optimizing for the router use-case, as documentation about how everything functions is hard to find and somewhat arcane.

So, in the interests of anyone landing on this thread seeking info, here's a more detailed description of what I needed to do to optimize ixgbe on OpenWrt.

As an aside, I think that when choosing an x86_64 platform, people need to consider the network interface hardware and driver functionality as carefully as they do the CPU and core count. igb interfaces have only two RX/TX RSS queues, which means that the interrupts are only going to be processed on two physical cores, no matter how high the core count of the CPU. For this reason, desktop x86_64 platforms are a bit of a mismatch for a router use case, since a lot of them use igb drivers or less capable network hardware.

With ixgbe-capable X553 hardware, there are up to 63 combined channels:

    root@openwrt:~# ethtool -l eth0
    Channel parameters for eth0:
    Pre-set maximums:
    RX: 0
    TX: 0
    Other: 1
    Combined: 63
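
If you'd rather read these values from a script than by eye, something like the following might do. It's only a sketch: `combined_channels` is a made-up helper name, and it assumes the `ethtool -l` layout shown in this post (a "Pre-set maximums" section, optionally followed by "Current hardware settings").

```shell
# combined_channels: read `ethtool -l` output on stdin and print the
# "Combined" value from each section as "max=N current=M".
# (Hypothetical helper; the name is not part of ethtool.)
combined_channels() {
    awk '
        /^Pre-set maximums:/          { section = "max" }
        /^Current hardware settings:/ { section = "cur" }
        $1 == "Combined:"             { val[section] = $2 }
        END { printf "max=%s current=%s\n", val["max"], val["cur"] }
    '
}

# Usage on a live system (eth0 is the interface used in this post):
#   ethtool -l eth0 | combined_channels
```

If the output has no "Current hardware settings" section, the `current=` part is simply left empty.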

However, this in itself poses a challenge: left at the defaults, the out-of-the-box experience is not satisfactory, with heavy spikes in individual core usage.

ixgbe / X553 has Intel Flow Director enabled out of the box, which is really only suitable for connections terminating on the host. It's designed to match flows to the cores where the packet-consuming process is running, which is mostly irrelevant in a router (unless you have some user-space daemon doing packet captures, and even then it's a blunt instrument). Intel Flow Director overrides RSS and will cause any manual tuning to be ignored.

So, firstly, turn it off:

    root@openwrt:~# ethtool --features eth0 ntuple off
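
It's worth confirming the change actually took. `ethtool -k` (lower-case k) lists the feature flags, and the one to look at is `ntuple-filters`. A small sketch, where `ntuple_state` is a hypothetical helper name of my own:

```shell
# ntuple_state: read `ethtool -k` output on stdin and print the state
# ("on" or "off") of the ntuple-filters feature.
# (Hypothetical helper; only the ethtool -k output format is relied on.)
ntuple_state() {
    awk '$1 == "ntuple-filters:" { print $2; exit }'
}

# Usage on a live system:
#   ethtool -k eth0 | ntuple_state
```

If it still prints `on`, the setting didn't apply and any RSS tuning that follows is likely to be ignored.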

Then the RX/TX RSS channels need to be set to the number of physical cores in the CPU:

    root@openwrt:~# ethtool -L eth0 combined 8
    root@openwrt:~# ethtool -l eth0
    Channel parameters for eth0:
    Pre-set maximums:
    RX: 0
    TX: 0
    Other: 1
    Combined: 63
    Current hardware settings:
    RX: 0
    TX: 0
    Other: 1
    Combined: 8
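
For anyone unsure what number to pass to `combined`: on a CPU with hyper-threading, the logical CPU count is higher than the physical core count. A rough sketch for counting physical cores follows. It assumes `/proc/cpuinfo` exposes the `physical id` and `core id` fields (it does on x86_64, as far as I know), and `physical_cores` is a made-up helper name.

```shell
# physical_cores: read /proc/cpuinfo-style text on stdin and print the
# number of unique (physical id, core id) pairs, i.e. physical cores.
# (Hypothetical helper; assumes x86-style cpuinfo fields.)
physical_cores() {
    awk -F': *' '
        /^physical id/ { pkg = $2 }
        /^core id/     { seen[pkg "/" $2] = 1 }
        END { n = 0; for (k in seen) n++; print n }
    '
}

# Usage on a live system:
#   ethtool -L eth0 combined "$(physical_cores < /proc/cpuinfo)"
```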

    root@openwrt:~# ethtool -x eth0
    RX flow hash indirection table for eth0 with 8 RX ring(s):
    0: 0 1 2 3 4 5 6 7
    8: 0 1 2 3 4 5 6 7
    16: 0 1 2 3 4 5 6 7
    24: 0 1 2 3 4 5 6 7
    32: 0 1 2 3 4 5 6 7
    40: 0 1 2 3 4 5 6 7
    48: 0 1 2 3 4 5 6 7
    56: 0 1 2 3 4 5 6 7
    64: 0 1 2 3 4 5 6 7
    72: 0 1 2 3 4 5 6 7
    80: 0 1 2 3 4 5 6 7
    88: 0 1 2 3 4 5 6 7
    96: 0 1 2 3 4 5 6 7
    104: 0 1 2 3 4 5 6 7
    112: 0 1 2 3 4 5 6 7
    120: 0 1 2 3 4 5 6 7
    128: 0 1 2 3 4 5 6 7
    136: 0 1 2 3 4 5 6 7
    144: 0 1 2 3 4 5 6 7
    152: 0 1 2 3 4 5 6 7
    160: 0 1 2 3 4 5 6 7
    168: 0 1 2 3 4 5 6 7
    176: 0 1 2 3 4 5 6 7
    184: 0 1 2 3 4 5 6 7
    192: 0 1 2 3 4 5 6 7
    200: 0 1 2 3 4 5 6 7
    208: 0 1 2 3 4 5 6 7
    216: 0 1 2 3 4 5 6 7
    224: 0 1 2 3 4 5 6 7
    232: 0 1 2 3 4 5 6 7
    240: 0 1 2 3 4 5 6 7
    248: 0 1 2 3 4 5 6 7
    256: 0 1 2 3 4 5 6 7
    264: 0 1 2 3 4 5 6 7
    272: 0 1 2 3 4 5 6 7
    280: 0 1 2 3 4 5 6 7
    288: 0 1 2 3 4 5 6 7
    296: 0 1 2 3 4 5 6 7
    304: 0 1 2 3 4 5 6 7
    312: 0 1 2 3 4 5 6 7
    320: 0 1 2 3 4 5 6 7
    328: 0 1 2 3 4 5 6 7
    336: 0 1 2 3 4 5 6 7
    344: 0 1 2 3 4 5 6 7
    352: 0 1 2 3 4 5 6 7
    360: 0 1 2 3 4 5 6 7
    368: 0 1 2 3 4 5 6 7
    376: 0 1 2 3 4 5 6 7
    384: 0 1 2 3 4 5 6 7
    392: 0 1 2 3 4 5 6 7
    400: 0 1 2 3 4 5 6 7
    408: 0 1 2 3 4 5 6 7
    416: 0 1 2 3 4 5 6 7
    424: 0 1 2 3 4 5 6 7
    432: 0 1 2 3 4 5 6 7
    440: 0 1 2 3 4 5 6 7
    448: 0 1 2 3 4 5 6 7
    456: 0 1 2 3 4 5 6 7
    464: 0 1 2 3 4 5 6 7
    472: 0 1 2 3 4 5 6 7
    480: 0 1 2 3 4 5 6 7
    488: 0 1 2 3 4 5 6 7
    496: 0 1 2 3 4 5 6 7
    504: 0 1 2 3 4 5 6 7
    RSS hash key:
    dd:55:0f:b5:5f:6b:23:dc:e4:58:7c:ce:24:78:79:5a:58:56:39:da:a1:cd:fe:67:76:9b:97:f6:1e:63:23:1f:96:62:05:43:5c:88:5e:c3
    RSS hash function:
    toeplitz: on
    xor: off
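
Scanning a 512-entry table by eye gets tedious, so here is a sketch that tallies how many indirection-table entries point at each queue. `rss_spread` is a hypothetical helper name, and it only assumes the row format shown above (an index followed by a colon, then queue numbers).

```shell
# rss_spread: read `ethtool -x` output on stdin and print one line per
# RX queue in the form "<queue> <number of table entries>".
# (Hypothetical helper; relies only on the "N: q q q ..." row layout.)
rss_spread() {
    awk '
        $1 ~ /^[0-9]+:$/ { for (i = 2; i <= NF; i++) count[$i]++ }
        END { for (q in count) printf "%d %d\n", q, count[q] }
    ' | sort -n
}

# Usage on a live system:
#   ethtool -x eth0 | rss_spread
```

For the table above (64 rows of 8 entries), each of the 8 queues should come out with 64 entries, which is the even spread you want.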

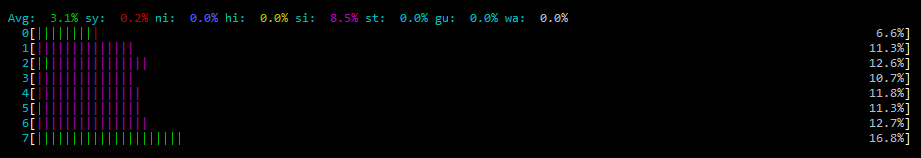

Only once that's done can interrupt affinities be tuned using the set_irq_affinity script I posted earlier in the thread, resulting in the nice even distribution of interrupts across cores seen in the htop screenshot above. This configuration workflow is a must if using SQM; otherwise you may as well opt for a CPU with only one or two cores.

    IFACE  CORE  MASK -> FILE
    =========================
    eth0   1     2    -> /proc/irq/47/smp_affinity
    eth0   2     4    -> /proc/irq/48/smp_affinity
    eth0   3     8    -> /proc/irq/49/smp_affinity
    eth0   4     10   -> /proc/irq/50/smp_affinity
    eth0   5     20   -> /proc/irq/51/smp_affinity
    eth0   6     40   -> /proc/irq/52/smp_affinity
    eth0   1     2    -> /proc/irq/53/smp_affinity
    eth0   2     4    -> /proc/irq/77/smp_affinity
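
In case the MASK column looks cryptic: it is a hexadecimal bitmask with one bit set per allowed core, so core N maps to 1 shifted left by N. The sketch below only illustrates that arithmetic. It is not the set_irq_affinity script itself, and `core_mask` is a made-up name.

```shell
# core_mask: print the hex smp_affinity mask that selects a single core.
# Core 0 -> 1, core 1 -> 2, core 4 -> 10, core 6 -> 40 (all hex).
# (Hypothetical helper for illustration only.)
core_mask() {
    printf '%x\n' "$((1 << $1))"
}

# Usage on a live system, pinning IRQ 50 to core 4 as in the table:
#   core_mask 4 > /proc/irq/50/smp_affinity
```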