{"kernel":"5.4.50","hostname":"OpenWrt","system":"ARMv7 Processor rev 1 (v7l)","model":"Turris Omnia","board_name":"cznic,turris-omnia","release":{"distribution":"OpenWrt","version":"SNAPSHOT","revision":"r13719-66e04abbb6","target":"mvebu/cortexa9","description":"OpenWrt SNAPSHOT r13719-66e04abbb6"}}

With DSA it seems redundant to create a kernel soft bridge for the LAN ports, since the switch chip fabric already bridges those ports in hardware.

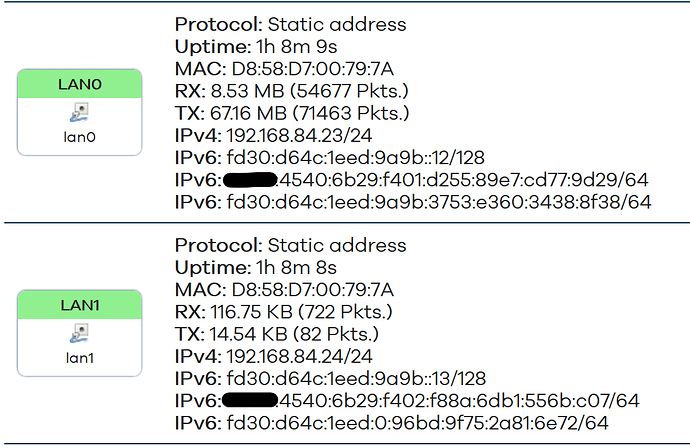

That said, does netifd have a way to place and manage multiple DSA LAN ports in the same DHCP subnet without creating a soft bridge? For example:

    config interface 'lan'
        list ifname 'lan0'
        list ifname 'lan1'
        list ifname 'lan2'

What do you mean by a (different) subnet? In this case, on IPv4, the LAN ports and the clients connected to them are all within 192.168.84.0/24; one just needs to be diligent with the pool range for each port to prevent clashes.
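To illustrate the "prevent clashing" part, here is a minimal Python sketch that checks whether a set of per-port pool ranges stays inside 192.168.84.0/24 and does not overlap. The port names come from the example config above; the pool boundaries and the `check_pools` helper are made up for illustration and say nothing about how netifd or the DHCP server would actually be configured.

```python
import ipaddress

# The LAN network mentioned in the post.
LAN = ipaddress.ip_network("192.168.84.0/24")

# Hypothetical per-port pools (first, last address); values are illustrative only.
POOLS = {
    "lan0": ("192.168.84.10", "192.168.84.79"),
    "lan1": ("192.168.84.80", "192.168.84.149"),
    "lan2": ("192.168.84.150", "192.168.84.219"),
}


def check_pools(network, pools):
    """Return a list of problems: pools outside the network or overlapping each other."""
    problems = []
    ranges = []
    for port, (first, last) in pools.items():
        lo, hi = ipaddress.ip_address(first), ipaddress.ip_address(last)
        if lo > hi:
            problems.append(f"{port}: start is after end")
            continue
        if lo not in network or hi not in network:
            problems.append(f"{port}: outside {network}")
        ranges.append((lo, hi, port))

    # Sort by start address and compare each pool with the furthest end seen so far.
    ranges.sort()
    prev_hi, prev_port = None, None
    for lo, hi, port in ranges:
        if prev_hi is not None and lo <= prev_hi:
            problems.append(f"{prev_port} and {port} overlap")
        if prev_hi is None or hi > prev_hi:
            prev_hi, prev_port = hi, port
    return problems


if __name__ == "__main__":
    issues = check_pools(LAN, POOLS)
    print("no clashes" if not issues else "\n".join(issues))
```

An empty result only means the ranges are disjoint and inside the /24; it does not by itself make the ports behave as one L2 segment.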
