I built it a few days ago with no issues, but I didn't have a chance to load it until now. Initial observations are below; again, no obvious issues. I can let it run for a day or so if that helps.

```
r7500v2 # cat /etc/openwrt_release | grep DESCRIPTION
DISTRIB_DESCRIPTION='OpenWrt SNAPSHOT r13429+594-e238c85e571f'
r7500v2 # uptime
09:02:19 up 13 min, load average: 0.05, 0.08, 0.08
r7500v2 # cat /sys/devices/system/cpu/cpufreq/policy0/stats/trans_table
   From  :    To
         :    384000    600000    800000   1000000   1200000   1400000
   384000:         0      6868       124        34        15         9
   600000:      6958         0       400        23        16        19
   800000:        50       479         0        13        12         8
  1000000:        19        36        18         0        10        18
  1200000:         9        13         9         9         0        27
  1400000:        14        21        10        22        14         0
r7500v2 # uptime
09:02:54 up 13 min, load average: 0.10, 0.09, 0.08
r7500v2 # cat /sys/devices/system/cpu/cpufreq/policy0/stats/trans_table
   From  :    To
         :    384000    600000    800000   1000000   1200000   1400000
   384000:         0      7314       125        34        15         9
   600000:      7404         0       409        23        16        19
   800000:        51       488         0        13        12         8
  1000000:        19        36        18         0        10        18
  1200000:         9        13         9         9         0        27
  1400000:        14        21        10        22        14         0
r7500v2 # uptime
09:03:54 up 14 min, load average: 0.08, 0.08, 0.08
r7500v2 # cat /sys/devices/system/cpu/cpufreq/policy0/stats/trans_table
   From  :    To
         :    384000    600000    800000   1000000   1200000   1400000
   384000:         0      7895       127        34        15         9
   600000:      7986         0       409        23        16        19
   800000:        52       489         0        13        12         8
  1000000:        19        36        18         0        10        18
  1200000:         9        13         9         9         0        27
  1400000:        14        21        10        22        14         0
r7500v2 #
```
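Comparing snapshots like these by eye is error-prone. Below is a minimal Python sketch, not part of any OpenWrt tooling, that parses the `trans_table` text shown above and subtracts one snapshot from another. It assumes the layout printed here (a `From : To` banner, a row of target frequencies in kHz, then one row per source frequency); `parse_trans_table` and `delta` are hypothetical helper names.

```python
from typing import Dict, Tuple

# (from_khz, to_khz) -> cumulative number of transitions since boot
Table = Dict[Tuple[int, int], int]


def parse_trans_table(text: str) -> Table:
    """Parse the contents of .../cpufreq/policyN/stats/trans_table."""
    rows = [line.strip() for line in text.splitlines() if line.strip()]
    # rows[0] is the "From : To" banner; rows[1] lists the target frequencies.
    targets = [int(f) for f in rows[1].lstrip(":").split()]
    table: Table = {}
    for row in rows[2:]:
        source, counts = row.split(":", 1)
        for target, count in zip(targets, counts.split()):
            table[(int(source), target)] = int(count)
    return table


def delta(before: Table, after: Table) -> Table:
    """Transitions that happened between two snapshots of the same policy."""
    return {key: after[key] - before.get(key, 0) for key in after}


if __name__ == "__main__":
    # First three rows/columns of the 09:02:19 and 09:02:54 snapshots above.
    snap1 = """From : To
    : 384000 600000 800000
    384000: 0 6868 124
    600000: 6958 0 400
    800000: 50 479 0"""
    snap2 = """From : To
    : 384000 600000 800000
    384000: 0 7314 125
    600000: 7404 0 409
    800000: 51 488 0"""
    changes = delta(parse_trans_table(snap1), parse_trans_table(snap2))
    for (src, dst), n in sorted(changes.items()):
        if n:
            print(f"{src} -> {dst}: {n}")
```

Fed those two snapshots, it reports 446 transitions in each direction between 384 MHz and 600 MHz during that interval.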

wired

August 5, 2020, 2:14pm

2386

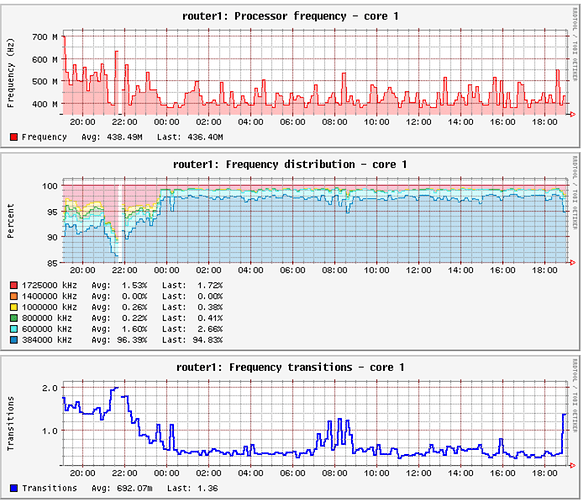

I think this might be because your build sets /sys/devices/system/cpu/cpufreq/ondemand/up_threshold to 60. That setting makes the governor ramp the frequency straight to the maximum once the load exceeds 60; it is not the load at which it steps up to the next frequency, but the load at which it jumps all the way up. If you set it to something higher, like 75-80, you should see the intermediate frequencies being used.
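A simplified Python model of that behaviour, assuming the decision rule of the mainline Linux `ondemand` governor as I understand it: if the load over a sampling period exceeds `up_threshold`, the target is the policy maximum; otherwise the target is proportional to load between the minimum and maximum frequency and is then snapped to a table entry (modelled here as the closest one). It ignores `sampling_down_factor`, `powersave_bias` and other tunables, and `ondemand_target` is a hypothetical name, so treat it as an illustration rather than the kernel code.

```python
from typing import Sequence


def ondemand_target(load: int, freqs_khz: Sequence[int], up_threshold: int) -> int:
    """Simplified model of one ondemand decision.

    load is the CPU load in percent (0-100) over the last sampling period.
    Ignores sampling_down_factor, powersave_bias and io_is_busy.
    """
    min_f, max_f = min(freqs_khz), max(freqs_khz)
    if load > up_threshold:
        # Above the threshold: jump straight to the maximum, skipping all
        # intermediate frequencies.
        return max_f
    # Below it: request a frequency proportional to load over the full range,
    requested = min_f + load * (max_f - min_f) // 100
    # then snap to the closest table entry (assumption; ties go to the lower
    # frequency because min() keeps the first match in ascending order).
    return min(sorted(freqs_khz), key=lambda f: abs(f - requested))


if __name__ == "__main__":
    r7500v2 = [384000, 600000, 800000, 1000000, 1200000, 1400000]
    for threshold in (60, 80):
        print(threshold, [ondemand_target(load, r7500v2, threshold) for load in (20, 50, 70)])
```

With the r7500v2 table it prints `60 [600000, 800000, 1400000]` and `80 [600000, 800000, 1000000]`: at 70% load a threshold of 60 sends the CPU straight to 1.4 GHz, while a threshold of 80 keeps it at an intermediate step.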

You can also cat /sys/devices/system/cpu/cpufreq/policy*/stats/time_in_state to see how much time the CPU spends at each freq.
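For reference, a small Python sketch that turns `time_in_state` into percentages. It assumes the usual format of one `<frequency> <time>` pair per line, with time in USER_HZ ticks (normally 10 ms); since only ratios are computed, the tick length does not matter. `time_share` is a hypothetical helper and the sample numbers are made up.

```python
from typing import Dict


def time_share(text: str) -> Dict[int, float]:
    """Fraction of time spent at each frequency, from policyN/stats/time_in_state.

    Each line is "<freq_khz> <time>"; time is in USER_HZ ticks (usually 10 ms),
    but only ratios are returned, so the unit cancels out.
    """
    pairs = []
    for line in text.splitlines():
        if line.strip():
            freq, ticks = line.split()
            pairs.append((int(freq), int(ticks)))
    total = sum(ticks for _, ticks in pairs)
    if total == 0:
        return {}
    return {freq: ticks / total for freq, ticks in pairs}


if __name__ == "__main__":
    # Made-up numbers for illustration, not readings from the routers in this thread.
    sample = "384000 6000\n600000 3000\n1400000 1000\n"
    for freq, share in time_share(sample).items():
        print(f"{freq / 1000:>6.0f} MHz {share:6.1%}")
```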

FWIW, on mine I'm happy with the performance governor, always at 1.7 GHz. When I toy with ondemand or schedutil, I set the minimum frequency to 800 MHz anyway; I don't see a benefit in using anything lower and having these constant transitions.
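A frequency floor like that is set through each policy's `scaling_min_freq` file in sysfs. Here is a minimal Python sketch, assuming Python is available on the device (it usually is not on a stock OpenWrt image, where writing the value with `echo` does the same job) and that the cpufreq driver exposes `scaling_available_frequencies`; `set_min_freq` is a hypothetical helper.

```python
import glob
import os
from typing import List


def set_min_freq(khz: int, root: str = "/sys/devices/system/cpu/cpufreq") -> List[str]:
    """Set scaling_min_freq to khz on every cpufreq policy under root.

    Needs root on a real device. All policies are checked before anything is
    written, so an unsupported value leaves every policy untouched.
    """
    policies = sorted(glob.glob(os.path.join(root, "policy*")))
    for policy in policies:
        with open(os.path.join(policy, "scaling_available_frequencies")) as f:
            available = {int(x) for x in f.read().split()}
        if khz not in available:
            raise ValueError(f"{khz} kHz is not offered by {policy}")
    for policy in policies:
        with open(os.path.join(policy, "scaling_min_freq"), "w") as f:
            f.write(str(khz))
    return policies
```

On the router, `set_min_freq(800000)` would apply the 800 MHz floor to every policy.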


hnyman

August 5, 2020, 2:40pm

2387

That is also visible in LuCI stats.

wired

August 5, 2020, 3:58pm

2388

Isn't that just collectd sampling periodically (every 30 s, at least with my config) and displaying whatever frequency it happens to see when it takes a sample? If so (though you may be talking about something else in LuCI that I haven't discovered), the stats in sysfs are a lot more accurate.
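The sampling concern can be illustrated with a toy Python model: a made-up frequency timeline with short bursts, polled every 30 seconds. It assumes the poller only records the instantaneous frequency at each sample, which is the behaviour described in the question above, not something verified against collectd's cpufreq plugin. `freq_at`, `poll` and `changes` are hypothetical helpers.

```python
from typing import List, Tuple

# Hypothetical frequency timeline: (start_second, freq_khz), sorted by time.
Timeline = List[Tuple[int, int]]


def freq_at(timeline: Timeline, t: int) -> int:
    """Frequency in effect at time t."""
    current = timeline[0][1]
    for start, freq in timeline:
        if start > t:
            break
        current = freq
    return current


def poll(timeline: Timeline, period: int, duration: int) -> List[int]:
    """What a periodic sampler (one reading every `period` seconds) would record."""
    return [freq_at(timeline, t) for t in range(0, duration, period)]


def changes(values: List[int]) -> int:
    """Number of adjacent pairs that differ, i.e. visible transitions."""
    return sum(1 for a, b in zip(values, values[1:]) if a != b)


if __name__ == "__main__":
    # Made-up: short bursts that all fall between the 30 s samples.
    timeline = [(0, 384000), (5, 1400000), (7, 384000), (40, 1400000),
                (41, 384000), (65, 600000), (66, 384000)]
    print("real transitions:", changes([f for _, f in timeline]))
    print("seen by 30 s polling:", changes(poll(timeline, 30, 90)))
```

Here the timeline has six transitions, but all three 30 s samples read 384000 kHz, so the poller sees none; cumulative counters such as `trans_table` and `time_in_state` do not have this blind spot.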

hnyman

August 5, 2020, 4:07pm

2389

I am.

Just enable "Extra items" for cpufreq in the config.

Ps.

committed 05:47PM - 30 Jul 19 UTC

> Collectd 5.9.0 changed the data structure of the cpufreq plugin:
> CPU cores are now handled as separate plugin instances.
>
> There are also new data items per core:
>
> * time spent at each frequency
> * amount of frequency transitions
>
> Enable these new data items, but initially hide them behind
> a new config option "ExtraItems" (default: disabled), as
> the amount of graphs in multi-core systems could be rather large.
>
> Note that the frequencies are not (yet) sorted, so the
> information value of the time-spent graph is semi-random.
>
> Signed-off-by: Hannu Nyman <hannu.nyman@iki.fi>

Pps.


I just noticed this post to @slh. I didn't add your qcom-krait-cache entry to the dtsi. Let me know if you want it, but it will take a few more days because of the near-constant internet demand in my household.

Also, "ipq806x: use qcom-ipq8064.dtsi from upstream" was merged a few hours ago. Regardless, it shouldn't be difficult to make the dtsi addition if you want it.

FWIW:

```
r7500v2 # uptime
20:27:38 up 11:38, load average: 0.06, 0.08, 0.08
r7500v2 # cat /sys/devices/system/cpu/cpufreq/policy0/stats/trans_table
   From  :    To
         :    384000    600000    800000   1000000   1200000   1400000
   384000:         0    341976      2837       574       268        90
   600000:    343336         0     12073       292       267       222
   800000:      1783     13179         0       178        98       202
  1000000:       295       536       226         0       109       104
  1200000:       174       253       150       151         0        95
  1400000:       158       246       153        75        81         0
```

This seems excessive compared to my results above without the patch. I can't answer your question about how much is too much (the answer is likely a somewhat arbitrary judgement call by the ipq806x designers), but I wouldn't want such an increase without knowing the benefit.

Ansuel

August 6, 2020, 10:58am

2391

The fact is that without the DTS change the driver isn't even used, so it's all normal? (Also note that you need to remove the cpufreq patch that adds cache scaling support.)

Hmm, the test without the change had less than one transition from 384 to 600 per second (measured over only 116 seconds). The test with the change had more than 8 transitions from 384 to 600 per second (measured over ~22 hr). So something does seem to be different.

But if I didn't implement the change correctly, this result may not be that useful, not to mention the differences in CPU usage between the two measuring periods.

I'm going back to "normal" for now.

```
r7500v2 # uptime
07:04:00 up 22:14, load average: 0.16, 0.15, 0.14
r7500v2 # cat /sys/devices/system/cpu/cpufreq/policy0/stats/trans_table
   From  :    To
         :    384000    600000    800000   1000000   1200000   1400000
   384000:         0    662301      9875      1040       472       207
   600000:    666303         0     19473       458       469       353
   800000:      6399     23016         0       249       144       359
  1000000:       579       882       317         0       162       152
  1200000:       324       427       243       236         0        98
  1400000:       291       430       258       109        81         0
```
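To turn cumulative counters like these into a rate, divide by the time since boot. A minimal Python sketch, assuming the counters started at zero at boot; `hhmm_seconds`, `uptime_seconds` and `boot_average_rate` are hypothetical helpers. Note that the `up HH:MM` field of `uptime` only has minute resolution, whereas the first field of `/proc/uptime` gives seconds.

```python
def uptime_seconds(proc_uptime: str) -> float:
    """Seconds since boot: the first field of /proc/uptime."""
    return float(proc_uptime.split()[0])


def hhmm_seconds(hhmm: str) -> int:
    """Seconds for the 'up HH:MM' field printed by uptime (minute resolution)."""
    hours, minutes = hhmm.split(":")
    return int(hours) * 3600 + int(minutes) * 60


def boot_average_rate(count: int, seconds: float) -> float:
    """Average events per second for a counter that started at zero at boot."""
    return count / seconds


if __name__ == "__main__":
    # 384000 -> 600000 count and 'up 22:14' from the trans_table above.
    rate = boot_average_rate(662301, hhmm_seconds("22:14"))
    print(f"{rate:.2f} transitions/s")
```

For the 384 to 600 MHz count of 662301 at `up 22:14` it prints `8.27 transitions/s`, an average over the whole uptime, so busy and idle periods are blended together.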

slh

August 6, 2020, 10:24pm

2393

I've just done a new build for my nbg6817, based on the DTS changes above:

```diff
--- a/target/linux/ipq806x/config-5.4
+++ b/target/linux/ipq806x/config-5.4
@@ -525,3 +525,4 @@ CONFIG_ZLIB_DEFLATE=y
 CONFIG_ZLIB_INFLATE=y
 CONFIG_ZSTD_COMPRESS=y
 CONFIG_ZSTD_DECOMPRESS=y
+CONFIG_ARM_QCOM_KRAIT_CACHE=y
--- /dev/null
+++ b/target/linux/ipq806x/patches-5.4/999-qcom-cache-scaling.patch
@@ -0,0 +1,245 @@
[…]
--- a/target/linux/ipq806x/patches-5.4/083-ipq8064-dtsi-additions.patch
+++ b/target/linux/ipq806x/patches-5.4/083-ipq8064-dtsi-additions.patch
@@ -26,7 +26,7 @@
  };
  cpu1: cpu@1 {
-@@ -38,11 +50,458 @@
+@@ -38,11 +50,469 @@
  next-level-cache = <&L2>;
  qcom,acc = <&acc1>;
  qcom,saw = <&saw1>;
@@ -66,6 +66,17 @@
 + };
 + };
 +
++ qcom-krait-cache {
++ compatible = "qcom,krait-cache";
++ clocks = <&kraitcc 4>;
++ clock-names = "l2";
++ voltage-tolerance = <5>;
++ l2-rates = <384000000 1000000000 1200000000>;
++ l2-cpufreq = <384000 600000 1200000>;
++ l2-volt = <1100000 1100000 1150000>;
++ l2-supply = <&smb208_s1a>;
++ };
++
 + opp_table0: opp_table0 {
 + compatible = "operating-points-v2-qcom-cpu";
 + nvmem-cells = <&speedbin_efuse>;
```

```
root@nbg6817:~# uptime
22:21:58 up 41 min, load average: 0.02, 0.08, 0.08
root@nbg6817:~# zcat /proc/config.gz | grep CONFIG_ARM_QCOM_KRAIT_CACHE
CONFIG_ARM_QCOM_KRAIT_CACHE=y
root@nbg6817:~# find /sys/firmware/devicetree/base/qcom-krait-cache/ -exec grep -H . {} \;
/sys/firmware/devicetree/base/qcom-krait-cache/compatible:qcom,krait-cache
/sys/firmware/devicetree/base/qcom-krait-cache/clocks:
/sys/firmware/devicetree/base/qcom-krait-cache/clocks:
/sys/firmware/devicetree/base/qcom-krait-cache/l2-volt:��
/sys/firmware/devicetree/base/qcom-krait-cache/l2-volt:��
/sys/firmware/devicetree/base/qcom-krait-cache/l2-volt:�0
/sys/firmware/devicetree/base/qcom-krait-cache/clock-names:l2
/sys/firmware/devicetree/base/qcom-krait-cache/voltage-tolerance:
/sys/firmware/devicetree/base/qcom-krait-cache/l2-cpufreq:�
/sys/firmware/devicetree/base/qcom-krait-cache/l2-cpufreq: '�
/sys/firmware/devicetree/base/qcom-krait-cache/l2-cpufreq:O�
/sys/firmware/devicetree/base/qcom-krait-cache/l2-rates:�`
/sys/firmware/devicetree/base/qcom-krait-cache/l2-rates:;��
/sys/firmware/devicetree/base/qcom-krait-cache/l2-rates:G��
/sys/firmware/devicetree/base/qcom-krait-cache/l2-supply:
/sys/firmware/devicetree/base/qcom-krait-cache/name:qcom-krait-cache
root@nbg6817:~# cat /sys/devices/system/cpu/cpufreq/policy0/stats/trans_table
   From  :    To
         :    384000    600000    800000   1000000   1400000   1725000
   384000:         0      3794       800       246       178       732
   600000:      3828         0       855       240       174      1631
   800000:       809       952         0      1556       357      1278
  1000000:       295       229      1597         0      1139       360
  1400000:       430       144       365      1155         0       419
  1725000:       389      1609      1334       423       665         0
root@nbg6817:~# cat /sys/devices/system/cpu/cpufreq/policy1/stats/trans_table
   From  :    To
         :    384000    600000    800000   1000000   1400000   1725000
   384000:         0      6290       848       718       394       548
   600000:      6430         0      1214       240       180       943
   800000:       844      1321         0      1183       298      1021
  1000000:       665       298      1151         0      1703       350
  1400000:       554       158       373      1635         0       375
  1725000:       304       941      1081       391       520         0
```

I'll update the stats above after a ~day's worth of uptime.
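The `grep` output above is unreadable because device-tree integer properties are stored as raw big-endian 32-bit cells, not text. A minimal Python sketch for decoding them, assuming the standard flattened device-tree encoding; `decode_u32_cells` and `read_cells` are hypothetical helpers, and the units in the printout (kHz for `l2-cpufreq`, Hz for `l2-rates`, µV for `l2-volt`) are inferred from the values rather than taken from the driver.

```python
import struct
from typing import List


def decode_u32_cells(raw: bytes) -> List[int]:
    """Decode a device-tree integer property: an array of big-endian 32-bit cells."""
    if len(raw) % 4:
        raise ValueError("property length is not a multiple of 4 bytes")
    return list(struct.unpack(f">{len(raw) // 4}I", raw))


def read_cells(path: str) -> List[int]:
    """Read and decode one property file under /sys/firmware/devicetree/base."""
    with open(path, "rb") as f:
        return decode_u32_cells(f.read())


if __name__ == "__main__":
    # Same values as the qcom-krait-cache node in the patch above, packed the
    # way they are stored in the device tree.
    rates = decode_u32_cells(struct.pack(">3I", 384000000, 1000000000, 1200000000))
    cpufreq = decode_u32_cells(struct.pack(">3I", 384000, 600000, 1200000))
    volts = decode_u32_cells(struct.pack(">3I", 1100000, 1100000, 1150000))
    for cpu_khz, l2_hz, uv in zip(cpufreq, rates, volts):
        print(f"CPU {cpu_khz // 1000} MHz | L2 {l2_hz // 1000000} MHz | {uv / 1e6:.3f} V")
```

On the device, `read_cells("/sys/firmware/devicetree/base/qcom-krait-cache/l2-rates")` should return `[384000000, 1000000000, 1200000000]` if the node was built from the patch above.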


Ansuel

August 6, 2020, 10:40pm

2394

Thanks a lot for the testing; let's see if the results are similar.

RobertP

August 7, 2020, 6:30pm

2395

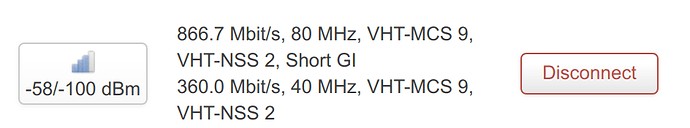

I'm wondering whether anyone has succeeded in connecting clients to an R7800 using a 160 MHz channel width.

```
# iw list
...
Wiphy phy0
    max # scan SSIDs: 16
    max scan IEs length: 199 bytes
    max # sched scan SSIDs: 0
    max # match sets: 0
    max # scan plans: 1
    max scan plan interval: -1
    max scan plan iterations: 0
    Retry short limit: 7
    Retry long limit: 4
    Coverage class: 0 (up to 0m)
    Device supports AP-side u-APSD.
    Available Antennas: TX 0xf RX 0xf
    Configured Antennas: TX 0xf RX 0xf
    Supported interface modes:
         * IBSS
         * managed
         * AP
         * AP/VLAN
         * monitor
         * mesh point
         * P2P-client
         * P2P-GO
         * P2P-device
    Band 2:
        Capabilities: 0x19ef
            RX LDPC
            HT20/HT40
            SM Power Save disabled
            RX HT20 SGI
            RX HT40 SGI
            TX STBC
            RX STBC 1-stream
            Max AMSDU length: 7935 bytes
            DSSS/CCK HT40
        Maximum RX AMPDU length 65535 bytes (exponent: 0x003)
        Minimum RX AMPDU time spacing: 8 usec (0x06)
        HT TX/RX MCS rate indexes supported: 0-31
        VHT Capabilities (0x339b79fa):
            Max MPDU length: 11454
            Supported Channel Width: 160 MHz, 80+80 MHz
            RX LDPC
            short GI (80 MHz)
            short GI (160/80+80 MHz)
            TX STBC
            SU Beamformer
            SU Beamformee
            MU Beamformer
            MU Beamformee
            RX antenna pattern consistency
            TX antenna pattern consistency
        VHT RX MCS set:
            1 streams: MCS 0-9
            2 streams: MCS 0-9
            3 streams: MCS 0-9
            4 streams: MCS 0-9
            5 streams: not supported
            6 streams: not supported
            7 streams: not supported
            8 streams: not supported
        VHT RX highest supported: 1560 Mbps
        VHT TX MCS set:
            1 streams: MCS 0-9
            2 streams: MCS 0-9
            3 streams: MCS 0-9
            4 streams: MCS 0-9
            5 streams: not supported
            6 streams: not supported
            7 streams: not supported
            8 streams: not supported
        VHT TX highest supported: 1560 Mbps
        Frequencies:
            * 5180 MHz [36] (23.0 dBm)
            * 5200 MHz [40] (23.0 dBm)
            * 5220 MHz [44] (23.0 dBm)
            * 5240 MHz [48] (23.0 dBm)
            * 5260 MHz [52] (20.0 dBm) (radar detection)
            * 5280 MHz [56] (20.0 dBm) (radar detection)
            * 5300 MHz [60] (20.0 dBm) (radar detection)
            * 5320 MHz [64] (20.0 dBm) (radar detection)
            * 5500 MHz [100] (26.0 dBm) (radar detection)
            * 5520 MHz [104] (26.0 dBm) (radar detection)
            * 5540 MHz [108] (26.0 dBm) (radar detection)
            * 5560 MHz [112] (26.0 dBm) (radar detection)
            * 5580 MHz [116] (26.0 dBm) (radar detection)
            * 5600 MHz [120] (26.0 dBm) (radar detection)
            * 5620 MHz [124] (26.0 dBm) (radar detection)
            * 5640 MHz [128] (26.0 dBm) (radar detection)
            * 5660 MHz [132] (26.0 dBm) (radar detection)
            * 5680 MHz [136] (26.0 dBm) (radar detection)
            * 5700 MHz [140] (26.0 dBm) (radar detection)
            * 5720 MHz [144] (disabled)
            * 5745 MHz [149] (13.0 dBm)
            * 5765 MHz [153] (13.0 dBm)
            * 5785 MHz [157] (13.0 dBm)
            * 5805 MHz [161] (13.0 dBm)
            * 5825 MHz [165] (13.0 dBm)
            * 5845 MHz [169] (13.0 dBm)
            * 5865 MHz [173] (13.0 dBm)
    valid interface combinations:
         * #{ managed } <= 16, #{ AP, mesh point } <= 16, #{ IBSS } <= 1,
           total <= 16, #channels <= 1, STA/AP BI must match, radar detect widths: { 20 MHz (no HT), 20 MHz, 40 MHz, 80 MHz, 80+80 MHz, 160 MHz }
    HT Capability overrides:
         * MCS: ff ff ff ff ff ff ff ff ff ff
         * maximum A-MSDU length
         * supported channel width
         * short GI for 40 MHz
         * max A-MPDU length exponent
         * min MPDU start spacing
    Supported extended features:
        * [ VHT_IBSS ]: VHT-IBSS
        * [ RRM ]: RRM
        * [ SET_SCAN_DWELL ]: scan dwell setting
        * [ CQM_RSSI_LIST ]: multiple CQM_RSSI_THOLD records
        * [ CONTROL_PORT_OVER_NL80211 ]: control port over nl80211
        * [ TXQS ]: FQ-CoDel-enabled intermediate TXQs
        * [ AIRTIME_FAIRNESS ]: airtime fairness scheduling
```

I tested connections from two different laptops (Windows and Linux, with two different Wi-Fi cards that should both support 160 MHz channels), but I can only get 80 MHz.

Could anyone advise whether this is achievable?
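One thing that can at least be checked from the output above is whether the channel list leaves room for 160 MHz at all. Below is a small Python sketch that checks which of the two 802.11ac 160 MHz blocks (center channels 50 and 114) are fully enabled in the `Frequencies:` list and whether they include channels marked `(radar detection)`. It does not explain why clients settle on 80 MHz; `usable_160` and `needs_dfs` are hypothetical helpers, and the channel sets are transcribed by hand from the `iw list` output.

```python
from typing import Dict, List, Set, Tuple

# 802.11ac 160 MHz channels in the 5 GHz band: center channel -> 20 MHz sub-channels.
BLOCKS_160: Dict[int, List[int]] = {
    50: list(range(36, 65, 4)),     # 36..64
    114: list(range(100, 129, 4)),  # 100..128
}


def usable_160(enabled: Set[int]) -> List[Tuple[int, int]]:
    """(center channel, center MHz) for every 160 MHz block whose sub-channels are all enabled."""
    return [(center, 5000 + 5 * center)
            for center, subs in BLOCKS_160.items()
            if all(ch in enabled for ch in subs)]


def needs_dfs(center: int, radar: Set[int]) -> bool:
    """True if any sub-channel of the block is a radar-detection channel."""
    return any(ch in radar for ch in BLOCKS_160[center])


if __name__ == "__main__":
    # Transcribed from the 'Frequencies:' list above (channel 144 is disabled).
    enabled = set(range(36, 65, 4)) | set(range(100, 141, 4)) | set(range(149, 174, 4))
    radar = set(range(52, 65, 4)) | set(range(100, 141, 4))
    for center, mhz in usable_160(enabled):
        print(f"channel {center} ({mhz} MHz), DFS required: {needs_dfs(center, radar)}")
```

It lists both blocks, 50 (5250 MHz) and 114 (5570 MHz), and both contain radar-detection channels, so on this radio any 160 MHz channel involves DFS.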

slh

August 7, 2020, 8:01pm

2396

```
root@nbg6817:~# uptime
20:00:10 up 16:05, load average: 0.37, 0.11, 0.03
root@nbg6817:~# cat /sys/devices/system/cpu/cpufreq/policy0/stats/trans_table
   From  :    To
         :    384000    600000    800000   1000000   1400000   1725000
   384000:         0    100767     14267      6630      3881     17421
   600000:     96405         0     14036     11440      5275     43313
   800000:     12909      8554         0     13942     11487     39141
  1000000:     10236      5917      4802         0     23132     11508
  1400000:     15152      8247      9797     11426         0     13900
  1725000:      8264     46984     43131     12157     14747         0
root@nbg6817:~# cat /sys/devices/system/cpu/cpufreq/policy1/stats/trans_table
   From  :    To
         :    384000    600000    800000   1000000   1400000   1725000
   384000:         0    193676     27599     17748      8264      6928
   600000:    193266         0     16720     10752      4049     11138
   800000:     24972     11958         0     11421      8056     12357
  1000000:     18716      7047      2916         0     17134     10309
  1400000:     12510      8574      6008      7143         0     17803
  1725000:      4751     14670     15521      9058     14535         0
```

That was 2-3 hours of fairly active usage; the rest of the time was mostly idle background traffic.

Ansuel

August 7, 2020, 8:14pm

2397

OK, the driver seems to be working fine. The results more or less match the data without the driver active, so my problem was related to something else.


facboy

August 10, 2020, 10:04am

2398

Is anybody running the IPQ8065 switch with the DSA driver? I keep getting messages in the log that lan1 is down, even though nothing is connected to it. Lately the WAN port has started logging the same message sporadically, which does interrupt the pppoe-wan link.

Ansuel

August 10, 2020, 12:02pm

2401

(Not related to @facboy's problem.)

Can someone test this commit?

openwrt:master ← Ansuel:upstream-patch

opened 01:59PM - 08 Aug 20 UTC

(One way to test this would be to check whether the Wi-Fi speed is similar to an image without it, and whether any packets are lost.)

facboy

August 11, 2020, 5:18pm

2402

Well, I don't know if it's related, but I've tried this patch and now my link is dropping every 5 minutes. I think I'm going to give up on DSA; it's clearly too buggy.

Ansuel

August 11, 2020, 5:30pm

2403

These patches don't touch any part of the Ethernet driver.

facboy

August 11, 2020, 6:31pm

2404

Yes, it's very hot here; maybe something is overheating.