@romanovj in my case I see no difference in speed after running `ethtool -K wan rx-gro-list off` (the interface is called wan1 on my router).

It can still benefit from hardware aes-ctr + software ghash.

OpenVPN DCO supports only AES-GCM and ChaCha20-Poly1305.

You should know how GCM works in Linux: it reuses the aes-ctr algorithm (which eip93 already supports) for the encryption itself, then uses ghash to generate the auth tag (which can only be done in software).
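To make the "GCM is CTR plus GHASH" point concrete, here is a minimal userspace sketch of how the CTR input blocks are laid out for a 96-bit nonce (the 12-byte nonce ovpn-dco builds from the packet ID and nonce tail). The helper names are hypothetical and this is not the kernel's code; it only illustrates the standard GCM layout, where counter 1 is reserved for masking the GHASH result and payload blocks start at counter 2.

```c
#include <stdint.h>
#include <string.h>

#define GCM_IV_SIZE    12  /* 96-bit nonce */
#define AES_BLOCK_SIZE 16

/* Build the 16-byte CTR input block for a 96-bit GCM nonce:
 * the nonce (12 bytes) followed by a 32-bit big-endian block counter.
 * Hypothetical helper, for illustration only. */
static void gcm_ctr_block(const uint8_t iv[GCM_IV_SIZE], uint32_t counter,
                          uint8_t out[AES_BLOCK_SIZE])
{
    memcpy(out, iv, GCM_IV_SIZE);
    out[12] = (uint8_t)(counter >> 24);
    out[13] = (uint8_t)(counter >> 16);
    out[14] = (uint8_t)(counter >> 8);
    out[15] = (uint8_t)counter;
}

/* Counter value for the i-th payload block (i starts at 0).
 * Counter 1 is reserved: its keystream block masks the GHASH output
 * to form the auth tag, so payload blocks start at counter 2. */
static uint32_t gcm_payload_counter(uint32_t block_index)
{
    return block_index + 2;
}
```

The AES-CTR part (encrypting these blocks and XORing with the payload) is what a CTR-capable engine can take over; the GHASH over the associated data and ciphertext is the part that stays on the CPU.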

That roughly matches cryptsetup, which runs on all cores: 10 MB/s on the CPUs (23.05) and 30-some MB/s with eip93 (24.10.0). These figures are in bytes, not bits.

eip197 was not the bottleneck here; it was how ovpn-dco handles async crypto requests. Currently it always waits for the previous request to complete before handling the next one, which is very inefficient.
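A rough way to see why waiting for each request hurts: the sketch below is an idealized timing model, not a measurement. The function names and the notion of a fixed per-request submit cost and engine latency are assumptions made for illustration, and the pipelined case assumes the engine can overlap requests so that only the last request's latency is exposed.

```c
#include <stdint.h>

/* Idealized model, all inputs hypothetical:
 *   submit_us  - CPU time to build and submit one request
 *   latency_us - time until the engine reports completion */

/* Synchronous style: wait for each request before submitting the next,
 * so every packet pays the full engine latency. */
static uint64_t serialized_total_us(uint64_t n, uint64_t submit_us,
                                    uint64_t latency_us)
{
    return n * (submit_us + latency_us);
}

/* Asynchronous style: keep submitting and handle completions in a
 * callback. Assumes the engine overlaps requests, so only the last
 * latency is not hidden. */
static uint64_t pipelined_total_us(uint64_t n, uint64_t submit_us,
                                   uint64_t latency_us)
{
    if (n == 0)
        return 0;
    return n * submit_us + latency_us;
}
```

With made-up values of 2 µs to submit and 20 µs of engine latency, 1000 packets take 22000 µs when serialized and 2020 µs when pipelined in this model. Real engines have queue limits, so the actual gain will be smaller.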

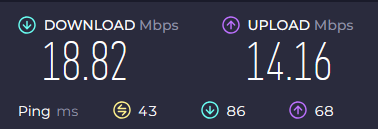

Stock firmware (ER605v2) + OpenVPN

OpenWrt 24.10 + OpenVPN + DCO patch (ER605v2)

The difference is quite impressive.

Ahh ok, cryptsetup in this case is different code.

Is it hard to fix it?

The refactored code is being sent upstream

https://lore.kernel.org/netdev/20250211-b4-ovpn-v19-0-86d5daf2a47a@openvpn.net/

And I have a fix already, it also does out-of-place crypto:

patch

--- a/drivers/net/ovpn-dco/crypto_aead.c

+++ b/drivers/net/ovpn-dco/crypto_aead.c

@@ -17,23 +17,34 @@

#include <linux/skbuff.h>

#include <linux/printk.h>

-#define AUTH_TAG_SIZE 16

+void ovpn_decrypt_async_cb(struct crypto_async_request *areq, int ret);

+void ovpn_encrypt_async_cb(struct crypto_async_request *areq, int ret);

-static int ovpn_aead_encap_overhead(const struct ovpn_crypto_key_slot *ks)

+/* like aead_request_alloc, but allocates extra space for scatterlist[nfrags + 2] */

+static __always_inline struct aead_request *

+ovpn_aead_request_alloc(struct crypto_aead *tfm, int nfrags, gfp_t gfp)

{

- return OVPN_OP_SIZE_V2 + /* OP header size */

- 4 + /* Packet ID */

- crypto_aead_authsize(ks->encrypt); /* Auth Tag */

+ struct aead_request *req;

+

+ req = kmalloc(ALIGN(sizeof(*req) + crypto_aead_reqsize(tfm), __alignof__(struct scatterlist)) +

+ sizeof(struct scatterlist) * (nfrags + 2), gfp);

+

+ if (likely(req))

+ aead_request_set_tfm(req, tfm);

+

+ return req;

+}

+

+static struct scatterlist *ovpn_aead_request_to_sg(struct aead_request *req, struct crypto_aead *tfm)

+{

+ return (void *)req + ALIGN(sizeof(*req) + crypto_aead_reqsize(tfm), __alignof__(struct scatterlist));

}

int ovpn_aead_encrypt(struct ovpn_crypto_key_slot *ks, struct sk_buff *skb, u32 peer_id)

{

- const unsigned int tag_size = crypto_aead_authsize(ks->encrypt);

- const unsigned int head_size = ovpn_aead_encap_overhead(ks);

- struct scatterlist sg[MAX_SKB_FRAGS + 2];

- DECLARE_CRYPTO_WAIT(wait);

+ struct scatterlist *sg = NULL, *dsg;

struct aead_request *req;

- struct sk_buff *trailer;

+ struct sk_buff *nskb, *frag_skb;

u8 iv[NONCE_SIZE];

int nfrags, ret;

u32 pktid, op;

@@ -45,30 +56,25 @@ int ovpn_aead_encrypt(struct ovpn_crypto

* IV head]

*/

- /* check that there's enough headroom in the skb for packet

- * encapsulation, after adding network header and encryption overhead

- */

- if (unlikely(skb_cow_head(skb, OVPN_HEAD_ROOM + head_size)))

- return -ENOBUFS;

-

/* get number of skb frags and ensure that packet data is writable */

- nfrags = skb_cow_data(skb, 0, &trailer);

- if (unlikely(nfrags < 0))

- return nfrags;

+ nfrags = skb_shinfo(skb)->nr_frags + 1;

+ skb_walk_frags(skb, frag_skb)

+ nfrags += skb_shinfo(frag_skb)->nr_frags + 1;

- if (unlikely(nfrags + 2 > ARRAY_SIZE(sg)))

+ if (unlikely(nfrags > MAX_SKB_FRAGS))

return -ENOSPC;

- req = aead_request_alloc(ks->encrypt, GFP_KERNEL);

+ req = ovpn_aead_request_alloc(ks->encrypt, nfrags, GFP_KERNEL);

if (unlikely(!req))

return -ENOMEM;

+ sg = ovpn_aead_request_to_sg(req, ks->encrypt);

/* sg table:

* 0: op, wire nonce (AD, len=OVPN_OP_SIZE_V2+NONCE_WIRE_SIZE),

* 1, 2, 3, ..., n: payload,

* n+1: auth_tag (len=tag_size)

*/

- sg_init_table(sg, nfrags + 2);

+ sg_init_table(sg, nfrags + 1);

/* build scatterlist to encrypt packet payload */

ret = skb_to_sgvec_nomark(skb, sg + 1, 0, skb->len);

@@ -77,10 +83,6 @@ int ovpn_aead_encrypt(struct ovpn_crypto

goto free_req;

}

- /* append auth_tag onto scatterlist */

- __skb_push(skb, tag_size);

- sg_set_buf(sg + nfrags + 1, skb->data, tag_size);

-

/* obtain packet ID, which is used both as a first

* 4 bytes of nonce and last 4 bytes of associated data.

*/

@@ -88,35 +90,50 @@ int ovpn_aead_encrypt(struct ovpn_crypto

if (unlikely(ret < 0))

goto free_req;

+ nskb = __alloc_skb(skb->len + NET_IP_ALIGN + LL_MAX_HEADER + 32, GFP_KERNEL, 0, NUMA_NO_NODE);

+ if (unlikely(!nskb)) {

+ ret = -ENOMEM;

+ goto free_req;

+ }

+

+ skb_reserve(nskb, NET_IP_ALIGN + LL_MAX_HEADER);

+ dsg = (struct scatterlist *)nskb->cb;

+ sg_init_table(dsg, 2);

+ sg_set_buf(dsg + 1, __skb_put(nskb, skb->len), SKB_DATA_ALIGN(skb->len + AUTH_TAG_SIZE));

+ sg_set_buf(dsg, __skb_push(nskb, AUTH_TAG_SIZE + NONCE_WIRE_SIZE + OVPN_OP_SIZE_V2), NONCE_WIRE_SIZE + OVPN_OP_SIZE_V2);

+ OVPN_ASYNC_SKB_CB(skb)->nskb = nskb;

+

/* concat 4 bytes packet id and 8 bytes nonce tail into 12 bytes nonce */

ovpn_pktid_aead_write(pktid, &ks->nonce_tail_xmit, iv);

/* make space for packet id and push it to the front */

- __skb_push(skb, NONCE_WIRE_SIZE);

- memcpy(skb->data, iv, NONCE_WIRE_SIZE);

+ memcpy(nskb->data + OVPN_OP_SIZE_V2, iv, NONCE_WIRE_SIZE);

/* add packet op as head of additional data */

op = ovpn_opcode_compose(OVPN_DATA_V2, ks->key_id, peer_id);

- __skb_push(skb, OVPN_OP_SIZE_V2);

BUILD_BUG_ON(sizeof(op) != OVPN_OP_SIZE_V2);

- *((__force __be32 *)skb->data) = htonl(op);

+ *((__force __be32 *)nskb->data) = htonl(op);

/* AEAD Additional data */

- sg_set_buf(sg, skb->data, OVPN_OP_SIZE_V2 + NONCE_WIRE_SIZE);

+ sg_set_buf(sg, nskb->data, OVPN_OP_SIZE_V2 + NONCE_WIRE_SIZE);

/* setup async crypto operation */

aead_request_set_tfm(req, ks->encrypt);

aead_request_set_callback(req, CRYPTO_TFM_REQ_MAY_BACKLOG |

CRYPTO_TFM_REQ_MAY_SLEEP,

- crypto_req_done, &wait);

- aead_request_set_crypt(req, sg, sg, skb->len - head_size, iv);

+ ovpn_encrypt_async_cb, skb);

+ aead_request_set_crypt(req, sg, dsg, skb->len, iv);

aead_request_set_ad(req, OVPN_OP_SIZE_V2 + NONCE_WIRE_SIZE);

/* encrypt it */

- ret = crypto_wait_req(crypto_aead_encrypt(req), &wait);

- if (ret < 0)

+ ret = crypto_aead_encrypt(req);

+ if (ret == -EINPROGRESS) {

+ return ret;

+ }

+ if (ret < 0) {

net_err_ratelimited("%s: encrypt failed: %d\n", __func__, ret);

-

+ kfree_skb(nskb);

+ }

free_req:

aead_request_free(req);

return ret;

@@ -124,14 +141,13 @@ free_req:

int ovpn_aead_decrypt(struct ovpn_crypto_key_slot *ks, struct sk_buff *skb)

{

- const unsigned int tag_size = crypto_aead_authsize(ks->decrypt);

- struct scatterlist sg[MAX_SKB_FRAGS + 2];

+ const unsigned int tag_size = AUTH_TAG_SIZE;

+ struct sk_buff *nskb = NULL, *frag_skb;

+ struct scatterlist *sg = NULL, *dsg = NULL;

int ret, payload_len, nfrags;

u8 *sg_data, iv[NONCE_SIZE];

unsigned int payload_offset;

- DECLARE_CRYPTO_WAIT(wait);

struct aead_request *req;

- struct sk_buff *trailer;

unsigned int sg_len;

__be32 *pid;

@@ -149,17 +165,18 @@ int ovpn_aead_decrypt(struct ovpn_crypto

return -ENODATA;

/* get number of skb frags and ensure that packet data is writable */

- nfrags = skb_cow_data(skb, 0, &trailer);

- if (unlikely(nfrags < 0))

- return nfrags;

+ nfrags = skb_shinfo(skb)->nr_frags + 1;

+ skb_walk_frags(skb, frag_skb)

+ nfrags += skb_shinfo(frag_skb)->nr_frags + 1;

- if (unlikely(nfrags + 2 > ARRAY_SIZE(sg)))

+ if (unlikely(nfrags > MAX_SKB_FRAGS))

return -ENOSPC;

- req = aead_request_alloc(ks->decrypt, GFP_KERNEL);

+ req = ovpn_aead_request_alloc(ks->decrypt, nfrags, GFP_KERNEL);

if (unlikely(!req))

return -ENOMEM;

+ sg = ovpn_aead_request_to_sg(req, ks->decrypt);

/* sg table:

* 0: op, wire nonce (AD, len=OVPN_OP_SIZE_V2+NONCE_WIRE_SIZE),

* 1, 2, 3, ..., n: payload,

@@ -179,6 +196,17 @@ int ovpn_aead_decrypt(struct ovpn_crypto

goto free_req;

}

+ nskb = __alloc_skb(payload_len + NET_IP_ALIGN + LL_MAX_HEADER + 32, GFP_KERNEL, 0, NUMA_NO_NODE);

+ if (!nskb)

+ return -ENOMEM;

+

+ skb_reserve(nskb, NET_IP_ALIGN + LL_MAX_HEADER);

+ dsg = (struct scatterlist *)nskb->cb;

+ sg_init_table(dsg, 2);

+ sg_set_buf(dsg, skb->data, NONCE_WIRE_SIZE + OVPN_OP_SIZE_V2);

+ sg_set_buf(dsg + 1, __skb_put(nskb, payload_len), SKB_DATA_ALIGN(payload_len));

+ OVPN_ASYNC_SKB_CB(skb)->nskb = nskb;

+

/* append auth_tag onto scatterlist */

sg_set_buf(sg + nfrags + 1, skb->data + sg_len, tag_size);

@@ -191,15 +219,19 @@ int ovpn_aead_decrypt(struct ovpn_crypto

aead_request_set_tfm(req, ks->decrypt);

aead_request_set_callback(req, CRYPTO_TFM_REQ_MAY_BACKLOG |

CRYPTO_TFM_REQ_MAY_SLEEP,

- crypto_req_done, &wait);

- aead_request_set_crypt(req, sg, sg, payload_len + tag_size, iv);

+ ovpn_decrypt_async_cb, skb);

+ aead_request_set_crypt(req, sg, dsg, payload_len + tag_size, iv);

aead_request_set_ad(req, NONCE_WIRE_SIZE + OVPN_OP_SIZE_V2);

/* decrypt it */

- ret = crypto_wait_req(crypto_aead_decrypt(req), &wait);

+ ret = crypto_aead_decrypt(req);

+ if (ret == -EINPROGRESS) {

+ return ret;

+ }

if (ret < 0) {

net_err_ratelimited("%s: decrypt failed: %d\n", __func__, ret);

+ kfree_skb(nskb);

goto free_req;

}

@@ -209,9 +241,6 @@ int ovpn_aead_decrypt(struct ovpn_crypto

if (unlikely(ret < 0))

goto free_req;

- /* point to encapsulated IP packet */

- __skb_pull(skb, payload_offset);

-

free_req:

aead_request_free(req);

return ret;

--- a/drivers/net/ovpn-dco/crypto_aead.h

+++ b/drivers/net/ovpn-dco/crypto_aead.h

@@ -12,6 +12,8 @@

#include "crypto.h"

+#define AUTH_TAG_SIZE 16

+

#include <asm/types.h>

#include <linux/skbuff.h>

--- a/drivers/net/ovpn-dco/main.c

+++ b/drivers/net/ovpn-dco/main.c

@@ -128,7 +128,7 @@ static void ovpn_setup(struct net_device

max(sizeof(struct ipv6hdr), sizeof(struct iphdr));

netdev_features_t feat = NETIF_F_SG | NETIF_F_LLTX |

- NETIF_F_HW_CSUM | NETIF_F_RXCSUM | NETIF_F_GSO |

+ NETIF_F_RXCSUM | NETIF_F_GSO |

NETIF_F_GSO_SOFTWARE | NETIF_F_HIGHDMA;

dev->ethtool_ops = &ovpn_ethtool_ops;

--- a/drivers/net/ovpn-dco/ovpn.c

+++ b/drivers/net/ovpn-dco/ovpn.c

@@ -24,6 +24,7 @@

#include <linux/workqueue.h>

#include <net/gso.h>

#include <uapi/linux/if_ether.h>

+#include <crypto/aead.h>

static const unsigned char ovpn_keepalive_message[] = {

0x2a, 0x18, 0x7b, 0xf3, 0x64, 0x1e, 0xb4, 0xcb,

@@ -68,7 +69,7 @@ int ovpn_struct_init(struct net_device *

spin_lock_init(&ovpn->peers.lock);

ovpn->crypto_wq = alloc_workqueue("ovpn-crypto-wq-%s",

- WQ_CPU_INTENSIVE | WQ_MEM_RECLAIM, 0,

+ WQ_UNBOUND | WQ_MEM_RECLAIM, 0,

dev->name);

if (!ovpn->crypto_wq)

return -ENOMEM;

@@ -112,6 +113,7 @@ static void tun_netdev_write(struct ovpn

skb_set_queue_mapping(skb, 0);

skb_scrub_packet(skb, true);

+ skb_reset_mac_header(skb);

skb_reset_network_header(skb);

skb_reset_transport_header(skb);

skb_probe_transport_header(skb);

@@ -123,7 +125,7 @@ static void tun_netdev_write(struct ovpn

dev_sw_netstats_rx_add(peer->ovpn->dev, skb->len);

/* cause packet to be "received" by tun interface */

- napi_gro_receive(&peer->napi, skb);

+ netif_receive_skb(skb);

}

int ovpn_napi_poll(struct napi_struct *napi, int budget)

@@ -170,36 +172,15 @@ int ovpn_recv(struct ovpn_struct *ovpn,

return 0;

}

-static int ovpn_decrypt_one(struct ovpn_peer *peer, struct sk_buff *skb)

+static int ovpn_decrypt_done(struct ovpn_crypto_key_slot *ks, struct ovpn_peer *peer, struct sk_buff *skb)

{

struct ovpn_peer *allowed_peer = NULL;

- struct ovpn_crypto_key_slot *ks;

__be16 proto;

- int ret = -1;

- u8 key_id;

-

- ovpn_peer_stats_increment_rx(&peer->link_stats, skb->len);

-

- /* get the key slot matching the key Id in the received packet */

- key_id = ovpn_key_id_from_skb(skb);

- ks = ovpn_crypto_key_id_to_slot(&peer->crypto, key_id);

- if (unlikely(!ks)) {

- net_info_ratelimited("%s: no available key for peer %u, key-id: %u\n", __func__,

- peer->id, key_id);

- goto drop;

- }

-

- /* decrypt */

- ret = ovpn_aead_decrypt(ks, skb);

+ int ret;

+ prefetch(skb->data);

ovpn_crypto_key_slot_put(ks);

- if (unlikely(ret < 0)) {

- net_err_ratelimited("%s: error during decryption for peer %u, key-id %u: %d\n",

- __func__, peer->id, key_id, ret);

- goto drop;

- }

-

/* note event of authenticated packet received for keepalive */

ovpn_peer_keepalive_recv_reset(peer);

@@ -257,6 +238,46 @@ drop:

return ret;

}

+static int ovpn_decrypt_one(struct ovpn_peer *peer, struct sk_buff *skb)

+{

+ struct ovpn_crypto_key_slot *ks;

+ int ret = -1;

+ u8 key_id;

+

+ ovpn_peer_stats_increment_rx(&peer->link_stats, skb->len);

+

+ /* get the key slot matching the key Id in the received packet */

+ key_id = ovpn_key_id_from_skb(skb);

+ ks = ovpn_crypto_key_id_to_slot(&peer->crypto, key_id);

+ if (unlikely(!ks)) {

+ net_info_ratelimited("%s: no available key for peer %u, key-id: %u\n", __func__,

+ peer->id, key_id);

+ goto drop;

+ }

+

+ /* decrypt */

+ OVPN_ASYNC_SKB_CB(skb)->ks = ks;

+ OVPN_ASYNC_SKB_CB(skb)->peer = peer;

+ ret = ovpn_aead_decrypt(ks, skb);

+ if (ret == -EINPROGRESS)

+ return ret; /* not an error */

+ if (!ret) {

+ struct sk_buff *nskb = OVPN_ASYNC_SKB_CB(skb)->nskb;

+ kfree_skb(skb);

+ return ovpn_decrypt_done(ks, peer, nskb);

+ }

+ if (ret) {

+ net_err_ratelimited("%s: error during decryption for peer %u, key-id %u: %d\n",

+ __func__, peer->id, key_id, ret);

+ goto drop;

+ }

+drop:

+ if (unlikely(ret < 0))

+ kfree_skb(skb);

+

+ return ret;

+}

+

/* pick packet from RX queue, decrypt and forward it to the tun device */

void ovpn_decrypt_work(struct work_struct *work)

{

@@ -280,29 +301,71 @@ void ovpn_decrypt_work(struct work_struc

ovpn_peer_put(peer);

}

-static bool ovpn_encrypt_one(struct ovpn_peer *peer, struct sk_buff *skb)

+void ovpn_decrypt_async_cb(struct crypto_async_request *areq, int ret)

+{

+ struct aead_request *req = container_of(areq, struct aead_request, base);

+ struct sk_buff *skb = areq->data;

+ struct ovpn_peer *peer = OVPN_ASYNC_SKB_CB(skb)->peer;

+ struct ovpn_crypto_key_slot *ks = OVPN_ASYNC_SKB_CB(skb)->ks;

+ struct sk_buff *nskb = OVPN_ASYNC_SKB_CB(skb)->nskb;

+ const unsigned int tag_size = AUTH_TAG_SIZE;

+ unsigned int payload_offset;

+ int payload_len;

+ __be32 *pid;

+

+ aead_request_free(req);

+

+ if (unlikely(ret)) {

+ net_err_ratelimited("%s: decrypt failed: %d\n", __func__, ret);

+ kfree_skb(nskb);

+ goto out;

+ }

+

+ payload_offset = OVPN_OP_SIZE_V2 + NONCE_WIRE_SIZE + tag_size;

+ payload_len = skb->len - payload_offset;

+

+ /* sanity check on packet size, payload size must be >= 0 */

+ if (unlikely(payload_len < 0)){

+ ret = -EINVAL;

+ goto out;

+ }

+

+ /* PID sits after the op */

+ pid = (__force __be32 *)(skb->data + OVPN_OP_SIZE_V2);

+ ret = ovpn_pktid_recv(&ks->pid_recv, ntohl(*pid), 0);

+ if (unlikely(ret < 0))

+ goto out;

+

+ ret = ovpn_decrypt_done(ks, peer, nskb);

+ if (ret == 0) {

+ napi_schedule(&peer->napi);

+ }

+out:

+ kfree_skb(skb);

+}

+

+static int ovpn_encrypt_one(struct ovpn_peer *peer, struct sk_buff *skb)

{

struct ovpn_crypto_key_slot *ks;

- bool success = false;

- int ret;

+ int ret = -1;

/* get primary key to be used for encrypting data */

ks = ovpn_crypto_key_slot_primary(&peer->crypto);

if (unlikely(!ks)) {

net_warn_ratelimited("%s: error while retrieving primary key slot\n", __func__);

- return false;

- }

-

- if (unlikely(skb->ip_summed == CHECKSUM_PARTIAL &&

- skb_checksum_help(skb))) {

- net_err_ratelimited("%s: cannot compute checksum for outgoing packet\n", __func__);

- goto err;

+ return ret;

}

ovpn_peer_stats_increment_tx(&peer->vpn_stats, skb->len);

/* encrypt */

+ OVPN_ASYNC_SKB_CB(skb)->ks = ks;

+ OVPN_ASYNC_SKB_CB(skb)->peer = peer;

ret = ovpn_aead_encrypt(ks, skb, peer->id);

+ if (ret == -EINPROGRESS) {

+ /* not an error */

+ return ret;

+ }

if (unlikely(ret < 0)) {

/* if we ran out of IVs we must kill the key as it can't be used anymore */

if (ret == -ERANGE) {

@@ -316,12 +379,38 @@ static bool ovpn_encrypt_one(struct ovpn

goto err;

}

- success = true;

-

ovpn_peer_stats_increment_tx(&peer->link_stats, skb->len);

err:

ovpn_crypto_key_slot_put(ks);

- return success;

+ return ret;

+}

+

+static void ovpn_encrypt_done(struct ovpn_peer *peer, struct sk_buff *skb)

+{

+ struct sk_buff *nskb = OVPN_ASYNC_SKB_CB(skb)->nskb;

+ skb_mark_not_on_list(skb);

+

+ kfree_skb(skb);

+ skb = nskb;

+ /* copy tag from ctext tail back to ctext head */

+ memcpy(skb->data + 8, skb_tail_pointer(skb), AUTH_TAG_SIZE);

+ ovpn_peer_stats_increment_tx(&peer->link_stats, skb->len);

+ switch (peer->sock->sock->sk->sk_protocol)

+ {

+ case IPPROTO_UDP:

+ ovpn_udp_send_skb(peer->ovpn, peer, skb);

+ break;

+ case IPPROTO_TCP:

+ ovpn_tcp_send_skb(peer, skb);

+ break;

+ default:

+ /* no transport configured yet */

+ consume_skb(skb);

+ break;

+ }

+

+ /* note event of authenticated packet xmit for keepalive */

+ ovpn_peer_keepalive_xmit_reset(peer);

}

/* Process packets in TX queue in a transport-specific way.

@@ -333,6 +422,7 @@ void ovpn_encrypt_work(struct work_struc

{

struct sk_buff *skb, *curr, *next;

struct ovpn_peer *peer;

+ int ret;

peer = container_of(work, struct ovpn_peer, encrypt_work);

while ((skb = ptr_ring_consume_bh(&peer->tx_ring))) {

@@ -340,38 +430,13 @@ void ovpn_encrypt_work(struct work_struc

* independently

*/

skb_list_walk_safe(skb, curr, next) {

- /* if one segment fails encryption, we drop the entire

- * packet, because it does not really make sense to send

- * only part of it at this point

- */

- if (unlikely(!ovpn_encrypt_one(peer, curr))) {

- kfree_skb_list(skb);

- skb = NULL;

- break;

- }

- }

-

- /* successful encryption */

- if (skb) {

- skb_list_walk_safe(skb, curr, next) {

- skb_mark_not_on_list(curr);

-

- switch (peer->sock->sock->sk->sk_protocol) {

- case IPPROTO_UDP:

- ovpn_udp_send_skb(peer->ovpn, peer, curr);

- break;

- case IPPROTO_TCP:

- ovpn_tcp_send_skb(peer, curr);

- break;

- default:

- /* no transport configured yet */

- consume_skb(skb);

- break;

- }

- }

-

- /* note event of authenticated packet xmit for keepalive */

- ovpn_peer_keepalive_xmit_reset(peer);

+ ret = ovpn_encrypt_one(peer, curr);

+ if (ret == 0)

+ ovpn_encrypt_done(peer, curr);

+ else if (ret == -EINPROGRESS)

+ continue;

+ else

+ kfree_skb(skb);

}

/* give a chance to be rescheduled if needed */

@@ -380,6 +445,22 @@ void ovpn_encrypt_work(struct work_struc

ovpn_peer_put(peer);

}

+void ovpn_encrypt_async_cb(struct crypto_async_request *areq, int ret)

+{

+ struct aead_request *req = container_of(areq, struct aead_request, base);

+ struct sk_buff *skb = areq->data;

+ struct ovpn_peer *peer = OVPN_ASYNC_SKB_CB(skb)->peer;

+ struct ovpn_crypto_key_slot *ks = OVPN_ASYNC_SKB_CB(skb)->ks;

+

+ aead_request_free(req);

+

+ if (likely(!ret)) {

+ ovpn_encrypt_done(peer, skb);

+ }

+

+ ovpn_crypto_key_slot_put(ks);

+}

+

/* Put skb into TX queue and schedule a consumer */

static void ovpn_queue_skb(struct ovpn_struct *ovpn, struct sk_buff *skb, struct ovpn_peer *peer)

{

--- a/drivers/net/ovpn-dco/pktid.c

+++ b/drivers/net/ovpn-dco/pktid.c

@@ -32,7 +32,7 @@ int ovpn_pktid_recv(struct ovpn_pktid_re

const unsigned long now = jiffies;

int ret;

- spin_lock(&pr->lock);

+ spin_lock_bh(&pr->lock);

/* expire backtracks at or below pr->id after PKTID_RECV_EXPIRE time */

if (unlikely(time_after_eq(now, pr->expire)))

@@ -122,6 +122,6 @@ int ovpn_pktid_recv(struct ovpn_pktid_re

pr->expire = now + PKTID_RECV_EXPIRE;

ret = 0;

out:

- spin_unlock(&pr->lock);

+ spin_unlock_bh(&pr->lock);

return ret;

}

--- a/drivers/net/ovpn-dco/skb.h

+++ b/drivers/net/ovpn-dco/skb.h

@@ -17,15 +17,13 @@

#include <linux/socket.h>

#include <linux/types.h>

-#define OVPN_SKB_CB(skb) ((struct ovpn_skb_cb *)&((skb)->cb))

-struct ovpn_skb_cb {

- union {

- struct in_addr ipv4;

- struct in6_addr ipv6;

- } local;

- sa_family_t sa_fam;

+struct ovpn_async_skb_cb {

+ struct ovpn_peer *peer;

+ struct ovpn_crypto_key_slot *ks;

+ struct sk_buff *nskb;

};

+#define OVPN_ASYNC_SKB_CB(skb) ((struct ovpn_async_skb_cb *)&((skb)->cb))

/* Return IP protocol version from skb header.

* Return 0 if protocol is not IPv4/IPv6 or cannot be read.

--- a/drivers/net/ovpn-dco/tcp.c

+++ b/drivers/net/ovpn-dco/tcp.c

@@ -26,7 +26,6 @@ static int ovpn_tcp_read_sock(read_descr

{

struct sock *sk = desc->arg.data;

struct ovpn_socket *sock;

- struct ovpn_skb_cb *cb;

struct ovpn_peer *peer;

size_t chunk, copied = 0;

int status;

@@ -93,10 +92,6 @@ static int ovpn_tcp_read_sock(read_descr

if (peer->tcp.offset != peer->tcp.data_len)

goto next_read;

- /* do not perform IP caching for TCP connections */

- cb = OVPN_SKB_CB(peer->tcp.skb);

- cb->sa_fam = AF_UNSPEC;

-

/* At this point we know the packet is from a configured peer.

* DATA_V2 packets are handled in kernel space, the rest goes to user space.

*

openwrt-23 (CONFIG_KERNEL_WERROR=y, gcc-12) - build OK

openwrt-24 (CONFIG_KERNEL_WERROR=y, gcc-13) - error

./ovpn-dco-20240408/drivers/net/ovpn-dco/crypto_aead.c: In function 'ovpn_aead_encrypt':

../ovpn-dco-20240408/drivers/net/ovpn-dco/crypto_aead.c:124:35: error: passing argument 3 of 'aead_request_set_callback' from incompatible pointer type [-Werror=incompatible-pointer-types]

124 | ovpn_encrypt_async_cb, skb);

| ^~~~~~~~~~~~~~~~~~~~~

| |

| void (*)(struct crypto_async_request *, int)

In file included from ../ovpn-dco-20240408/drivers/net/ovpn-dco/crypto_aead.c:16:

./include/crypto/aead.h:489:66: note: expected 'crypto_completion_t' {aka 'void (*)(void *, int)'} but argument is of type 'void (*)(struct crypto_async_request *, int)'

489 | crypto_completion_t compl,

| ~~~~~~~~~~~~~~~~~~~~^~~~~

../ovpn-dco-20240408/drivers/net/ovpn-dco/crypto_aead.c: In function 'ovpn_aead_decrypt':

../ovpn-dco-20240408/drivers/net/ovpn-dco/crypto_aead.c:222:35: error: passing argument 3 of 'aead_request_set_callback' from incompatible pointer type [-Werror=incompatible-pointer-types]

222 | ovpn_decrypt_async_cb, skb);

| ^~~~~~~~~~~~~~~~~~~~~

| |

| void (*)(struct crypto_async_request *, int)

./include/crypto/aead.h:489:66: note: expected 'crypto_completion_t' {aka 'void (*)(void *, int)'} but argument is of type 'void (*)(struct crypto_async_request *, int)'

489 | crypto_completion_t compl,

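That is caused by a kernel API change: as the compiler note shows, the completion callback type is now `void (*)(void *, int)` instead of taking a `struct crypto_async_request *`. I'll port it to 24 later.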
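For anyone attempting the port, here is a small userspace model of what the newer callback shape implies. It is only a sketch: `struct pkt_ctx`, `encrypt_done_cb` and `fake_engine_complete` are hypothetical names, and the typedef merely mirrors the signature quoted in the compiler note. The key consequence is that the callback receives only the `data` pointer, so the request can no longer be recovered with `container_of()` on the first argument and has to be reachable from the context instead.

```c
#include <stddef.h>

/* Userspace model of the newer callback type from the compiler note:
 * 'void (*)(void *, int)'. */
typedef void (*crypto_completion_t)(void *data, int err);

/* Hypothetical per-packet context; in the patch this role is played by
 * the skb together with OVPN_ASYNC_SKB_CB(skb). */
struct pkt_ctx {
    void *req;        /* request to free on completion; must be stored here */
    int   completed;
    int   status;
};

/* New-style callback: 'data' is whatever was passed as the last argument
 * when the callback was registered; the request is not handed in. */
static void encrypt_done_cb(void *data, int err)
{
    struct pkt_ctx *ctx = data;

    ctx->req = NULL;      /* stands in for freeing the stored request */
    ctx->completed = 1;
    ctx->status = err;
}

/* Model of the crypto engine invoking the completion. */
static void fake_engine_complete(crypto_completion_t cb, void *data, int err)
{
    cb(data, err);
}
```

In the real driver this would presumably mean adding a request pointer to `struct ovpn_async_skb_cb` (or similar) and changing both callbacks to take `void *data`; that part is an assumption about how the port could look, not what the patch currently does.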

Did you test it? I get this crash:

[ 63.879519] Unable to handle kernel read from unreadable memory at virtual address 00000000400d1cc0

[ 64.165079] CPU: 1 PID: 1099 Comm: irq/20-10320000 Not tainted 5.15.167 #0

[ 64.171939] Hardware name: Routerich AX3000 (DT)

[ 64.176541] pstate: 80400005 (Nzcv daif +PAN -UAO -TCO -DIT -SSBS BTYPE=--)

[ 64.183488] pc : dst_release+0x20/0xb4

[ 64.187233] lr : skb_scrub_packet+0xdc/0xf0

[ 64.191405] sp : ffffffc00910bab0

[ 64.194705] x29: ffffffc00910bab0 x28: 0000000000000000 x27: 0000000000000040

[ 64.201828] x26: 0000000000000011 x25: ffffffc008c34440 x24: 0000000000000000

[ 64.208951] x23: 00000000010fa8c0 x22: 00000000020fa8c0 x21: ffffff8006920000

[ 64.216073] x20: 0000000000000000 x19: 00000000400d1c80 x18: 0000000000000070

[ 64.223196] x17: 0000000000000000 x16: 0000000000000000 x15: 0000000000000080

[ 64.230318] x14: 0000000000000001 x13: 000000000000aa04 x12: 0000000000000680

[ 64.237440] x11: ffffff8002236e2c x10: 000000000000006c x9 : ffffff80000d1c00

[ 64.244563] x8 : 0000000000000060 x7 : 0000000000000040 x6 : 0000000000000000

[ 64.251685] x5 : 0000000000000011 x4 : 00000000020fa8c0 x3 : 00000000010fa8c0

[ 64.258808] x2 : 00000000400d1cc0 x1 : 0000000000000060 x0 : 0000000000000001

[ 64.265930] Call trace:

[ 64.268365] dst_release+0x20/0xb4

[ 64.271756] skb_scrub_packet+0xdc/0xf0

[ 64.275580] iptunnel_xmit+0x78/0x240

[ 64.279230] udp_tunnel_xmit_skb+0xd4/0xf4 [udp_tunnel]

[ 64.284489] ovpn_udp_send_skb+0x264/0x43c [ovpn_dco_v2]

[ 64.289802] ovpn_crypto_key_slots_swap+0xe0/0x890 [ovpn_dco_v2]

[ 64.295806] ovpn_encrypt_async_cb+0x30/0x9c [ovpn_dco_v2]

[ 64.301289] 0xffffffc000bec86c

[ 64.304427] irq_thread_fn+0x28/0x90

[ 64.307992] irq_thread+0x10c/0x210

[ 64.311468] kthread+0x11c/0x130

[ 64.314684] ret_from_fork+0x10/0x20

[ 64.318251] Code: aa0003f3 91010262 52800020 f9800051 (885f7c54)

[ 64.324330] ---[ end trace 73a91d01e401e679 ]---

[ 64.333855] Kernel panic - not syncing: Oops: Fatal exception in interrupt

[ 64.340717] SMP: stopping secondary CPUs

[ 64.344628] Kernel Offset: disabled

[ 64.348103] CPU features: 0x0,00000000,20000802

[ 64.352620] Memory Limit: none

[ 64.360476] Rebooting in 3 seconds..

I only tested it on OpenWrt 22, on different hardware. I think there is a bug in the out-of-place crypto ops, which were meant to work around the alignment requirement of the hardware crypto engine.
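One thing that may be worth checking (this is a guess, not a confirmed diagnosis): the patch places a two-entry scatterlist in `nskb->cb`, and `cb` is 48 bytes in mainline. The sketch below models the size of `struct scatterlist` in userspace under two assumed configurations; the struct layouts are approximations of the kernel's definition and the names are hypothetical. On a 64-bit kernel with `CONFIG_NEED_SG_DMA_LENGTH`, two entries would not fit, and writing them would spill into the fields that follow `cb` in `struct sk_buff`, which would be consistent with a crash in `dst_release()`.

```c
#include <stddef.h>
#include <stdint.h>

#define SKB_CB_SIZE 48  /* size of sk_buff::cb in mainline kernels */

/* Model of struct scatterlist on a 64-bit kernel with
 * CONFIG_NEED_SG_DMA_LENGTH (assumption; check your .config). */
struct sg_model_64 {
    uint64_t page_link;
    uint32_t offset;
    uint32_t length;
    uint64_t dma_address;
    uint32_t dma_length;
};

/* Model for a 32-bit kernel with 32-bit DMA addresses and no dma_length. */
struct sg_model_32 {
    uint32_t page_link;
    uint32_t offset;
    uint32_t length;
    uint32_t dma_address;
};

/* Returns non-zero if 'entries' scatterlist entries fit into skb->cb. */
static int sg_entries_fit_in_cb(size_t entries, size_t entry_size)
{
    return entries * entry_size <= SKB_CB_SIZE;
}
```

In the driver itself, a compile-time guard such as `BUILD_BUG_ON(2 * sizeof(struct scatterlist) > sizeof_field(struct sk_buff, cb));` next to the `dsg` assignment would catch this at build time if the hypothesis holds.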

I created a new topic. Please continue the discussion there.

OK, so the VPN part is more or less solved, but what about the overclock (OC)? How could I modify the firmware, without rebuilding it myself, so that the CPU runs at 1.2 GHz? As for the U-Boot approach, I still haven't figured out how to use it. I can't build OpenWrt right now because I no longer have a Linux machine and I'm kind of past my buildbot days (I barely have any time lately), but I would really like to have this OC.

Thanks!

Is there really nothing to OC this mainstream CPU? :D

I think I looked into this at some point, but the code has changed since 5.15, so the old method wouldn't work.

File: drivers/clk/ralink/clk-mt7621.c

Search for mt7621_cpu_recalc_rate.

Fake patch (modify the file manually or create a patch file yourself):

     static unsigned long mt7621_cpu_recalc_rate(struct clk_hw *hw,
                                                 unsigned long xtal_clk)
     {
         static const u32 prediv_tbl[] = { 0, 1, 2, 2 };
         struct mt7621_clk *clk = to_mt7621_clk(hw);
         struct regmap *sysc = clk->priv->sysc;
         struct regmap *memc = clk->priv->memc;
         u32 clkcfg, clk_sel, curclk, ffiv, ffrac;
    -    u32 pll, prediv, fbdiv;
    +    u32 pll, prediv, fbdiv, i;
         unsigned long cpu_clk;
     
         regmap_read(sysc, SYSC_REG_CLKCFG0, &clkcfg);
         clk_sel = FIELD_GET(CPU_CLK_SEL_MASK, clkcfg);
     
         regmap_read(sysc, SYSC_REG_CUR_CLK_STS, &curclk);
         ffiv = FIELD_GET(CUR_CPU_FDIV_MASK, curclk);
         ffrac = FIELD_GET(CUR_CPU_FFRAC_MASK, curclk);
     
         switch (clk_sel) {
         case 0:
             cpu_clk = 500000000;
             break;
         case 1:
             regmap_read(memc, MEMC_REG_CPU_PLL, &pll);
    +        pll &= ~(0x7ff);
    +        pll |= (0x362);
    +        regmap_write(memc, MEMC_REG_CPU_PLL, pll);
    +        for(i=0;i<1024;i++);
             fbdiv = FIELD_GET(CPU_PLL_FBDIV_MASK, pll);
             prediv = FIELD_GET(CPU_PLL_PREDIV_MASK, pll);
             cpu_clk = ((fbdiv + 1) * xtal_clk) >> prediv_tbl[prediv];
             break;

The frequency is set here:

pll |= (0x362);

0x312 = 1000 MHz

0x362 = 1100 MHz

0x3B2 = 1200 MHz

1200 MHz is probably too much; I didn't test it.
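The three values follow a simple pattern, which the sketch below reproduces. It assumes the feedback divider occupies bits 10:4 of the register (as `CPU_PLL_FBDIV_MASK` appears to in the mainline driver) and an effective reference of 20 MHz after the pre-divider; the 20 MHz figure is inferred from 0x312 giving 1000 MHz, not read from hardware. The helper names are hypothetical.

```c
#include <stdint.h>

/* Field position assumed from the clk-mt7621 driver: FBDIV in bits 10:4. */
#define CPU_PLL_FBDIV_SHIFT 4
#define CPU_PLL_FBDIV_BITS  0x7fu  /* 7 bits, applied after the shift */

/* Effective reference after the pre-divider, in MHz. Assumption inferred
 * from 0x312 -> 1000 MHz; boards with a different crystal will differ. */
#define REF_MHZ 20u

/* Decode the low PLL bits written by the fake patch into a CPU clock. */
static uint32_t pll_low_bits_to_mhz(uint32_t pll_low)
{
    uint32_t fbdiv = (pll_low >> CPU_PLL_FBDIV_SHIFT) & CPU_PLL_FBDIV_BITS;

    return (fbdiv + 1) * REF_MHZ;
}

/* Inverse: build the low bits for a target clock, keeping the low nibble
 * (0x2 in all values quoted in the thread) unchanged.
 * 'mhz' must be a multiple of REF_MHZ and at least REF_MHZ. */
static uint32_t mhz_to_pll_low_bits(uint32_t mhz, uint32_t low_nibble)
{
    uint32_t fbdiv = mhz / REF_MHZ - 1;

    return (fbdiv << CPU_PLL_FBDIV_SHIFT) | (low_nibble & 0xfu);
}
```

For example, `pll_low_bits_to_mhz(0x362)` gives 1100 and `mhz_to_pll_low_bits(1200, 0x2)` gives 0x3B2, matching the table. Since the patch only clears bits 10:0, the pre-divider field is left as the bootloader set it.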

Benchmark:

iperf3 -s -D;

iperf3 -c 192.168.1.10 -R -t 20

stock: 630 Mbit/s

1000 MHz: 665 Mbit/s

1100 MHz: 700 Mbit/s