By default, only /etc/sysctl.conf (and not /etc/sysctl.d/*) is copied over during system upgrades, so I would still go with /etc/sysctl.conf.

I dug a bit into nf_conntrack_max. The kernel formulas in net/netfilter/nf_conntrack_core.c are (pseudo-code):

- if the `hashsize` module parameter is not provided (and it is not in 21.02 as far as I can find)
  - nf_conntrack_htable_size = min(256k, max(1k, ram_size_in_bytes / 16k / sizeof(struct *)))
  - nf_conntrack_max = 1 * nf_conntrack_htable_size
- if `hashsize` is provided
  - nf_conntrack_htable_size = hashsize
  - nf_conntrack_max = 8 * nf_conntrack_htable_size
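To make the pseudo-code above easier to play with, here is a minimal Python sketch of it. It only mirrors the simplified formulas as written in this post, not the actual kernel source; the function name `conntrack_defaults` and its parameters are made up for illustration.

```python
from typing import Optional, Tuple

KIB = 1024


def conntrack_defaults(ram_bytes: int, pointer_size: int,
                       hashsize: Optional[int] = None) -> Tuple[int, int]:
    """Return (nf_conntrack_htable_size, nf_conntrack_max) per the pseudo-code above.

    Hypothetical helper: it follows the simplified formulas from this post only.
    """
    if hashsize is None:
        # No hashsize module parameter: derive the size from RAM,
        # then clamp it to the [1k, 256k] range.
        htable_size = min(256 * KIB,
                          max(1 * KIB, ram_bytes // (16 * KIB) // pointer_size))
        max_factor = 1
    else:
        # hashsize given: use it as-is, with the factor of 8.
        htable_size = hashsize
        max_factor = 8
    return htable_size, max_factor * htable_size


# 256 MB of RAM, 32-bit pointers (4 bytes), no hashsize parameter
print(conntrack_defaults(256 * KIB * KIB, 4))  # (4096, 4096)
```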

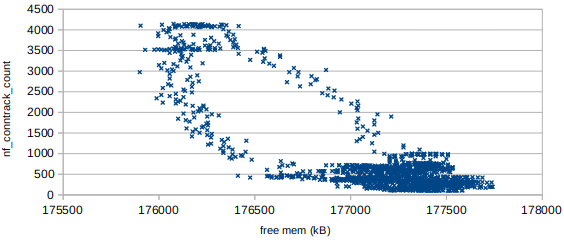

So, on a 256 MB, 32-bit device, nf_conntrack_max = nf_conntrack_htable_size = 256M/16k/4 = 4096. That is 4 times less than with the OpenWrt-provided override. And indeed:

`# sysctl net.netfilter.nf_conntrack_buckets`

net.netfilter.nf_conntrack_buckets = 4096

Some more notes: the kernel documentation and comments mention that with nf_conntrack_htable_size == nf_conntrack_max, the average number of entries per hash table slot will be 2 when the table is full, because each connection needs 2 entries (one per direction). With OpenWrt's default, in the case of my device, nf_conntrack_max = nf_conntrack_htable_size * 4, so an average of 8 entries per slot. I have not benchmarked anything, but to me this means that OpenWrt should rather consider increasing net.netfilter.nf_conntrack_buckets to the same value as nf_conntrack_max. And maybe increase nf_conntrack_max in the process.
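The per-slot arithmetic can be written down as a tiny sketch. The helper name `avg_entries_per_slot` is hypothetical, and it assumes a completely full conntrack table with entries spread evenly over the buckets; the 16384 below is simply 4 * 4096, the ratio on my device.

```python
def avg_entries_per_slot(conntrack_max: int, buckets: int) -> float:
    """Average hash chain length when the conntrack table is full."""
    # Each tracked connection is inserted twice:
    # once for the original direction, once for the reply direction.
    return 2 * conntrack_max / buckets


print(avg_entries_per_slot(4096, 4096))   # 2.0 (kernel default on this device)
print(avg_entries_per_slot(16384, 4096))  # 8.0 (nf_conntrack_max = 4 * buckets)
```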

Going further, my feeling is that the kernel should maybe expose the 16k divisor applied to total RAM size: this would allow keeping the in-kernel formula while expressing that this distribution is geared towards handling a large number of connections rather than leaving a lot of free RAM for userland programs (which is my interpretation of the default 16k).
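To illustrate what such a knob would do, here is a rough sketch that treats the divisor as a parameter. No such tunable exists in the kernel as far as I know; `htable_size_for_divisor` and its `divisor` argument are purely hypothetical, and the formula is the simplified one from this post.

```python
KIB = 1024


def htable_size_for_divisor(ram_bytes: int, divisor: int,
                            pointer_size: int = 4) -> int:
    """Hash table size if the 16k divisor were tunable (hypothetical)."""
    # Same clamping to [1k, 256k] as in the simplified formula.
    return min(256 * KIB, max(1 * KIB, ram_bytes // divisor // pointer_size))


ram = 256 * KIB * KIB  # 256 MB device with 32-bit pointers
for divisor_kib in (16, 8, 4):
    size = htable_size_for_divisor(ram, divisor_kib * KIB)
    print(f"divisor={divisor_kib}k -> htable_size={size}")
# divisor=16k -> htable_size=4096
# divisor=8k -> htable_size=8192
# divisor=4k -> htable_size=16384
```

On this device, a 4k divisor would give a table of 16384 slots, i.e. the same 4x factor as the current override, but with the hash table grown to match.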