The performance governor is already set, and intel_pstate is in use (active mode). Setting it to disable or passive on the kernel command line makes no meaningful difference to performance. Toggling C-states on or off makes no meaningful change either; it's hard to test because each change requires a reboot, but the difference seems to be within the margin of error.

I don't think that's the case here. In my longer reply, I also showed that putting a lot of artificial load on CPU0 increases my Internet throughput almost as much as enabling SQM does.

It has nothing to do with traffic shaping itself: when I tested this machine with OPNsense, there was no traffic shaping at all and I could still hit 4.4 Gbps.

The "buffers too small" problem that SQM can alleviate arises when there's a shallow-buffered switch downstream; pacing packets avoids overflowing that buffer. But since no shaping is involved here, this doesn't appear to be the same issue.

Since adding CPU load helps, my guess is that the CPU simply takes too long to wake up from idle and start NAPI processing whenever a packet interrupt arrives, which causes RX drops. You can check whether this is the case by looking at the ethtool stats (ethtool -S $IFACE) for the rx_queue_X_drops counters. If those are non-zero, the NIC RX queue is indeed overflowing, and you can try increasing the ring size with ethtool -G to see if that helps. ethtool -g shows the maximum size the NIC supports.
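Counter names in ethtool -S output vary between drivers, so rather than looking for one exact name, a small filter can list every non-zero counter whose name looks drop-related. This is a minimal sketch: the function name nonzero_drop_counters and the name pattern (drop, miss, fifo, no_buf) are my own assumptions, not something ethtool defines.

```shell
# Hypothetical helper: print every non-zero counter from `ethtool -S` output
# whose name looks drop-related. The name pattern is an assumption; adjust it
# to whatever counters your driver actually exposes.
nonzero_drop_counters() {
    awk -F: '
        NF == 2 {
            name = $1
            gsub(/[ \t]/, "", name)   # strip the indentation ethtool adds
            val = $2 + 0              # awk ignores the leading space when converting
            if (name ~ /drop|miss|fifo|no_buf/ && val > 0)
                print name ": " val
        }'
}

# Usage (run before and after a speed test and compare):
#   ethtool -S eth0 | nonzero_drop_counters
```

No output means none of the matching counters are non-zero; a counter that grows during the test is the interesting one.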

Another knob to try along these lines is the maximum NAPI budget. See the section on increasing the time SoftIRQs can run on the CPU in this tuning guide.
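The kernel knobs for this are the net.core.netdev_budget (maximum packets processed in one NAPI polling cycle) and net.core.netdev_budget_usecs (maximum microseconds per cycle) sysctls. If the third column of /proc/net/softnet_stat (time_squeeze) keeps growing, the softirq is running out of budget or time with work still pending. A minimal sketch; the helper name and the SYSCTL_DIR override are hypothetical, and the 600/4000 values in the usage comment are only an example.

```shell
# Hypothetical helper: show, and optionally set, the NAPI budget sysctls.
# Writing /proc/sys/net/core/<name> is equivalent to
# `sysctl -w net.core.<name>=<value>`; SYSCTL_DIR exists only so the
# function can be pointed at a scratch directory for testing.
napi_budget() {
    dir=${SYSCTL_DIR:-/proc/sys/net/core}
    if [ "$#" -eq 2 ]; then
        echo "$1" > "$dir/netdev_budget" || return 1
        echo "$2" > "$dir/netdev_budget_usecs" || return 1
    fi
    echo "netdev_budget=$(cat "$dir/netdev_budget")"
    echo "netdev_budget_usecs=$(cat "$dir/netdev_budget_usecs")"
}

# Usage (as root; example values only, and the change
# does not survive a reboot):
#   napi_budget            # show current values
#   napi_budget 600 4000   # packets per poll cycle, microseconds per cycle
```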

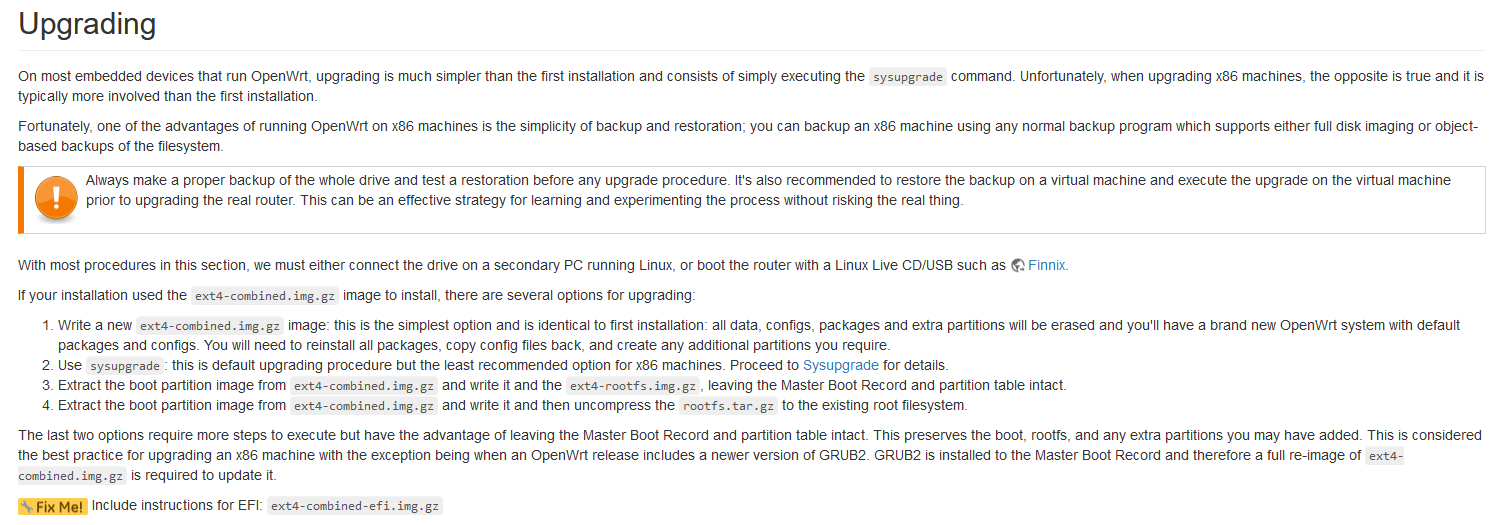

I had problems with my x86 box the other day. It turns out that if you upgrade the system the wrong way, it might still work, but it will start behaving abnormally in various ways. My box was also having connection-speed issues, and they were entirely due to a messed-up OpenWrt install that still worked, albeit at a completely unacceptable level. The only way to fix it was to reflash the system from scratch with the latest stable release.

After that, the system worked flawlessly again. I highly recommend doing the same and seeing whether it addresses your issue. Refrain from updating packages or applying a sysupgrade, since that method of updating doesn't work properly on x86 boxes. Instead, back up your configuration to a USB drive and fully reflash the system once there are enough updates to warrant the upgrade. Once the new image is flashed and you've reinstalled all the packages you want, simply restore the configuration backup.

https://openwrt.org/docs/guide-user/installation/openwrt_x86

Can you tell us more about what went wrong? I've been updating x86 devices with sysupgrade for years, almost daily, and have had no issues at all.

Sure thing. My box just started acting abnormally. You would turn options off but they would stay on; when you turned them on, they would actually be off. You would enter a value for the SQM scripts and it would not use the right number of zeros when actually applying the script, even though the LuCI UI showed the number you had entered. Just weird stuff.

When you sysupgrade x86 boxes, especially if you use packages beyond those included in the stock images, things just start to get weird. They still work, just not as intended or expected.

In my case, it started applying SQM to connections I didn't want it on and applying incorrect values to the SQM scripts. Instead of applying the SQM values to the gigabit WAN line, it applied them to the LAN. Just weird, messed-up stuff.

Once I wiped the drive on that machine and reflashed an image to it, everything worked fine and as expected. Then I reread the documentation and saw the line saying they don't recommend upgrading via sysupgrade because weird issues can appear, and I can confirm that's 100% accurate. Listen to the documentation. Lol.

I checked with ethtool, and none of my ixgbe (10GbE) interfaces report rx_queue_X_drops at all. My igc (2.5GbE) interfaces do report it, but it's 0 because they're unused. I tried the advice of increasing the ring size, which, following the Red Hat docs, I believe would be ethtool -G eth0 rx 8192 (and the same for eth1, my WAN), but that didn't improve my throughput.
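ethtool -G should refuse a ring size above the maximum that ethtool -g reports, so it's worth confirming that the 8192 request was actually applied rather than assuming it was. A minimal sketch that extracts the RX maximum so the request can be sized to what the NIC accepts; the helper name is hypothetical, and it assumes the usual "Pre-set maximums:" / "Current hardware settings:" layout of ethtool -g output.

```shell
# Hypothetical helper: read the RX ring maximum from `ethtool -g` output
# (the "RX:" line under "Pre-set maximums:").
max_rx_ring() {
    awk '
        /^Pre-set maximums/            { in_max = 1; next }
        /^Current hardware settings/   { in_max = 0 }
        in_max && $1 == "RX:"          { print $2; exit }'
}

# Usage (eth0/eth1 as in the post above):
#   for dev in eth0 eth1; do
#       max=$(ethtool -g "$dev" | max_rx_ring)
#       ethtool -G "$dev" rx "$max"
#       ethtool -g "$dev"    # check that "Current hardware settings" changed
#   done
```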

I also followed the advice to increase the net.core.netdev_max_backlog sysctl. The command listed there didn't work for me, and I don't understand why, so I just blindly kept doubling the value all the way up to 32000 without seeing any improvement, then reverted it to the default (1000).

root@OpenWrt:~# awk '{for (i=1; i<=NF; i++) printf strtonum("0x" $i) (i==NF?"\n":" ")}' /proc/net/softnet_stat | column -t

-ash: column: not found

awk: cmd. line:1: Call to undefined function

I also followed the next piece of advice on the page (34.1.3), and I don't see dropped packets there either.
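The awk error above comes from strtonum(), which is a GNU awk extension that BusyBox awk doesn't implement, and column isn't installed on this box (hence the -ash error). A minimal sketch of the same hex-to-decimal conversion using only the shell's printf, which treats a 0x prefix as hexadecimal. The helper name is hypothetical, and it prints only the first three columns: packets processed, packets dropped (mainly because the per-CPU backlog exceeded netdev_max_backlog), and time_squeeze. If the dropped column stays at zero, raising netdev_max_backlog wouldn't be expected to change anything.

```shell
# Hypothetical helper: print the first three columns of /proc/net/softnet_stat
# in decimal, without gawk's strtonum() or `column`.
softnet_decimal() {
    file=${1:-/proc/net/softnet_stat}
    row=0
    # "rest" swallows the remaining columns of each row.
    while read -r processed dropped squeezed rest; do
        # Each row is normally one CPU (row N = CPU N when all CPUs are online).
        printf 'cpu%d processed=%d dropped=%d time_squeeze=%d\n' \
            "$row" "0x$processed" "0x$dropped" "0x$squeezed"
        row=$((row + 1))
    done < "$file"
}

# Usage:
#   softnet_decimal
```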

I don't think so; in my initial testing I got ~2 Gbps uplink speeds after a fresh install of OpenWrt, with nothing configured other than the PPPoE connection. I've done that on 23.05 as well as on snapshots from the same day as the OP here.

I have another update: testing throughput directly from the OpenWrt machine, I consistently get 4.5+ Gbps without SQM or any other nonsense. So only other devices experience the slowdown, not the router itself. Yet I can get 10 Gbps from a device on the same network to the router via iperf3. I don't get it. Also, iperf3 uses only 35% of a single CPU core to saturate the entire 10 GbE NIC, so I genuinely don't understand what's going on here. Do I need some kind of CPU load to make Internet connections faster for the other local clients on the network?

Never ever run iperf3 or similar speed-testing tools on the same device you're testing. This should be emphasized in bold, capital letters in the OpenWrt documentation.

Well, if you have CPU to spare, why not? It also depends on what you're testing: NAT and routing versus LAN or Wi-Fi.

Well, no!

It always has a bigger impact than you might imagine, especially on embedded devices. So, don’t let it become a bad habit.

It's not an embedded device; it's an x86 machine with 8 CPU cores, and the single core iperf3 loads doesn't come close to being maxed out, even when I saturate the entire NIC.

Sure, there might be some impact from context switching between iperf and all the other processes running on the device under test. However, I find it often doesn't matter when just testing a 1 Gbps Ethernet link or, say, 80 MHz-wide AX Wi-Fi. If none of your CPU cores is maxed out, there shouldn't be any significant difference.

However, when looking at SQM, NAT or routing performance, it only makes sense to run iperf on devices other than the one under test, which is the case here.

It doesn’t really matter, since the test traffic then ends up in user space instead of just being routed between the interfaces. If you really want to know what’s going on, use the actual interfaces and run iperf on a separate server. Get rid of queues, firewall, NAT and anything else that interferes with nftables’ default flow, and use Linux kernel profiling tools that are actually intended for this kind of troubleshooting. Always start by checking and pre-testing your own test environment to make sure everything works well before diving into other tests.

Here are a few common issues and bottlenecks to look out for: a poorly functioning test environment, MTU mismatches, flapping ports, a poorly configured firewall and NAT, hardware offloading that isn’t working and leads to excessive interrupts that can overwhelm the CPU (check the interrupt rate, coalescing/balancing and receive-side scaling), incorrectly configured ring buffers and zero-copy issues, hidden nftables hooks causing interference, starved kernel network buffers, or simply buggy NIC drivers.
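For the interrupt rate and balancing items, one quick check is whether a NIC's queue interrupts are spread over several CPUs or all land on one. A minimal sketch that sums the /proc/interrupts counts per CPU for every IRQ named after an interface; the helper name is hypothetical, and it assumes the driver names its IRQs after the interface (e.g. eth0-TxRx-0), which is common but not universal. Running it twice a few seconds apart during a speed test gives a rough per-CPU interrupt rate.

```shell
# Hypothetical helper: total /proc/interrupts counts per CPU for every IRQ
# named after an interface (exactly "eth0" or starting with "eth0-").
irq_per_cpu() {
    iface=$1
    file=${2:-/proc/interrupts}
    awk -v ifc="$iface" '
        NR == 1 { ncpu = NF; next }           # header row: CPU0 CPU1 ...
        $NF == ifc || index($NF, ifc "-") == 1 {
            # fields 2..ncpu+1 are the per-CPU counts for this IRQ
            for (i = 1; i <= ncpu; i++) sum[i] += $(i + 1)
        }
        END { for (i = 1; i <= ncpu; i++) printf "CPU%d %d\n", i - 1, sum[i] }
    ' "$file"
}

# Usage: irq_per_cpu eth0; sleep 10; irq_per_cpu eth0
```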

Or you can keep doing trial-and-error...

Hi @yuv420p10le, do you have flow control enabled?

You can check with ethtool -a <interface>.

tl;dr: I had a similar issue, but only with upload (upload was more or less fine when SQM was enabled). After enabling flow control:

ethtool -A <interface> rx on tx on; ip link set <interface> down; sleep 2; ip link set <interface> up

and disabling SQM, the problem disappeared.
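Before changing anything, it can help to see whether pause frames are already on. A minimal sketch that summarizes ethtool -a output as rx=/tx= values; the helper name is hypothetical, and it only reads the plain "RX:" and "TX:" lines, ignoring any extra negotiated-state lines newer ethtool versions may print.

```shell
# Hypothetical helper: summarize the pause (flow control) state from
# `ethtool -a` output as "rx=<on|off> tx=<on|off>".
pause_status() {
    awk -F: '
        { val = $2; gsub(/[ \t]/, "", val) }
        $1 == "RX" { rx = val }
        $1 == "TX" { tx = val }
        END { printf "rx=%s tx=%s\n", rx, tx }'
}

# Usage (replace eth1 with your interface):
#   if [ "$(ethtool -a eth1 | pause_status)" != "rx=on tx=on" ]; then
#       ethtool -A eth1 rx on tx on
#   fi
```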