Speeds are fine. Test with the powerline adapter; WiFi is trash for gaming.

@EXREYFOX how does it work for you?

At the moment I'm playing BF1 on PS4 with Auto (GAMEUP\GAMEDOWN).

This screenshot was taken with no other traffic on the router, just the PS4.

I was confused, but when I clicked through it made sense. That looks outstandingly good: very constant latency, zero packet loss, etc.

BF games have this graph, which is very good for checking whether QoS and SQM are working.

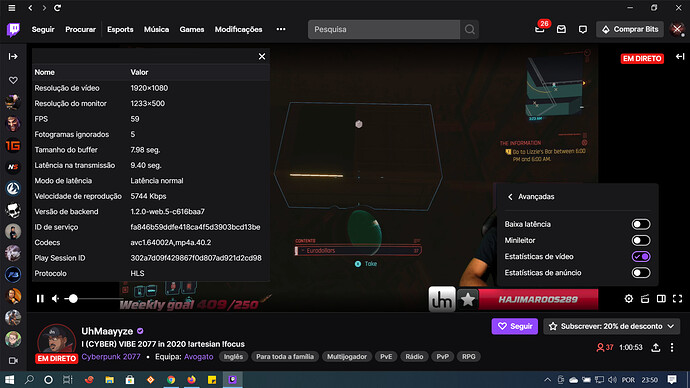

Now I'm playing the same game on a 60 Hz server while watching a stream on a PC (not the gaming PC) at 1080p/60fps.

Stream data:

I will test other games that have some kind of latency graph (like Fortnite or Apex Legends).

CoD games are not good for testing: trash netcode and lag compensation.

Can you send a bufferbloat test so I can see it, please? Thanks. I've been playing for 1 hour and it's horrible at my home; I don't understand.

In Battlefield I get the red circle icon for latency and packet loss.

I'm sorry to say that the script is not well developed for ADSL connections at the moment. If you have recent gameplay footage I would like to see it. In all games my character has become slow, and web browsing is slow too, or maybe it's just my impression.

Juju, the best thing would be to get a packet capture from eth0 during gameplay. PM me a link.

Use the command `tcpdump -i eth0 -w /tmp/capture.pcap`.
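To look at packet timing in such a capture without extra libraries, here is a minimal Python sketch that reads the arrival timestamp of every packet once the file has been copied off the router. It assumes the file is in the classic libpcap format (what `tcpdump -w` normally writes) rather than pcapng; `parse_pcap_timestamps` and `read_pcap_timestamps` are hypothetical helper names.

```python
import struct

# Classic libpcap magic numbers: microsecond and nanosecond timestamp variants.
MAGIC_USEC = 0xA1B2C3D4
MAGIC_NSEC = 0xA1B23C4D
GLOBAL_HEADER_LEN = 24
RECORD_HEADER_LEN = 16


def parse_pcap_timestamps(data):
    """Return packet arrival times (seconds, as floats) from classic pcap bytes."""
    if len(data) < GLOBAL_HEADER_LEN:
        raise ValueError("too short for a pcap global header")
    # The magic number reveals both the byte order and the timestamp resolution.
    for endian in ("<", ">"):
        (magic,) = struct.unpack(endian + "I", data[:4])
        if magic in (MAGIC_USEC, MAGIC_NSEC):
            break
    else:
        raise ValueError("not a classic pcap file (pcapng is not handled)")
    divisor = 1e6 if magic == MAGIC_USEC else 1e9

    times = []
    offset = GLOBAL_HEADER_LEN
    while offset + RECORD_HEADER_LEN <= len(data):
        ts_sec, ts_frac, incl_len, _orig_len = struct.unpack(
            endian + "IIII", data[offset:offset + RECORD_HEADER_LEN]
        )
        end = offset + RECORD_HEADER_LEN + incl_len
        if end > len(data):
            break  # truncated final record, e.g. capture interrupted mid-write
        times.append(ts_sec + ts_frac / divisor)
        offset = end  # jump past the record header and the captured bytes
    return times


def read_pcap_timestamps(path):
    """Read a classic .pcap file from disk and return its packet timestamps."""
    with open(path, "rb") as f:
        return parse_pcap_timestamps(f.read())
```

Usage: `times = read_pcap_timestamps("capture.pcap")`. It does no protocol filtering, so to look at the game traffic alone it is easiest to capture only that flow in the first place.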

Your game has zero packet loss and a variation in packet arrival of 1.1 ms, even while a second PC streams 1080p/60Hz.
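A figure like that 1.1 ms variation can be approximated from the arrival timestamps of the game's packets. The sketch below uses the standard deviation of the gaps between consecutive packets as the measure; that choice is an assumption, since the post does not say which statistic was used, and `interarrival_stats` is a hypothetical helper. It expects timestamps in seconds for a single flow.

```python
import statistics


def interarrival_stats(times):
    """Return (mean_gap_ms, stdev_gap_ms) for consecutive packet arrivals.

    The standard deviation of the gaps is used as a simple stand-in for
    "variation in packet arrival" (an assumption, not the thread's stated method).
    """
    if len(times) < 3:
        raise ValueError("need at least three timestamps to get two gaps")
    ordered = sorted(times)
    gaps_ms = [(b - a) * 1000.0 for a, b in zip(ordered, ordered[1:])]
    return statistics.mean(gaps_ms), statistics.stdev(gaps_ms)


if __name__ == "__main__":
    # Made-up arrivals roughly 60 times per second (about 16.7 ms apart).
    mean_ms, sd_ms = interarrival_stats([0.0, 0.016, 0.034, 0.050])
    print(f"mean gap {mean_ms:.2f} ms, variation {sd_ms:.2f} ms")
```

A perfectly regular 60 Hz stream would show a standard deviation near zero; queueing in the router shows up as larger spread in the gaps.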

This is outstanding

What download speed do you get in a speed test?

Because he has VDSL2, I think; with ADSL we're the ones with the problem...

Knomax has almost the same ADSL connection as you.

Yes, I know, but I don't know if it works at his home.

I bet I know what is wrong. At these low speeds, staying connected to LuCI uses up all your bandwidth!!! YOU CANNOT stay connected to the router web interface. SSH should be OK, though.

This is something I need to add in version 1.1.0 or so: we need a special queue for the router's own traffic. Only people with very slow connections have this issue, but LuCI's auto-updates can easily generate bursts of traffic that exceed the download bandwidth. It ruins the connection.
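To see why link speed matters so much here: a burst that arrives faster than the shaped rate has to drain through the queue, adding roughly its size divided by the rate in delay for everything behind it. A minimal sketch; the 100 kB burst size is a made-up illustration, not a measured LuCI figure, and `burst_delay_ms` is a hypothetical helper.

```python
def burst_delay_ms(burst_bytes, rate_bits_per_s):
    """Extra queueing delay (ms) while a burst drains through a link at the given rate."""
    return burst_bytes * 8 * 1000.0 / rate_bits_per_s


if __name__ == "__main__":
    # Hypothetical 100 kB burst at a slow and a faster shaped rate.
    for rate in (1_000_000, 16_000_000):
        print(f"{rate / 1e6:g} Mbit/s: {burst_delay_ms(100_000, rate):.0f} ms")
```

At 1 Mbit/s that burst holds the queue for about 800 ms, versus about 50 ms at 16 Mbit/s, which is why the same page refresh can be harmless on a fast line and ruinous on a slow one.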

I just wanted to ask: is the script working here? I am using cake on WAN and LAN, and to me it looks like it is, but I'm pretty sure it isn't, as it isn't showing on the Status > Firewall screen.

```
root@OpenWrt:~# tc -s qdisc
qdisc noqueue 0: dev lo root refcnt 2
Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
backlog 0b 0p requeues 0
qdisc fq_codel 0: dev eth0 root refcnt 2 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
Sent 56718888 bytes 103183 pkt (dropped 0, overlimits 0 requeues 5)
backlog 0b 0p requeues 5
maxpacket 1514 drop_overlimit 0 new_flow_count 7 ecn_mark 0
new_flows_len 0 old_flows_len 0
qdisc cake 801e: dev eth0.1 root refcnt 2 bandwidth 16Mbit besteffort triple-isolate nonat nowash no-ack-filter split-gso rtt 100.0ms noatm overhead 26
Sent 5577630 bytes 18179 pkt (dropped 0, overlimits 198 requeues 0)
backlog 0b 0p requeues 0
memory used: 7936b of 4Mb
capacity estimate: 16Mbit
min/max network layer size: 28 / 1500
min/max overhead-adjusted size: 54 / 1526
average network hdr offset: 14
Tin 0
thresh 16Mbit
target 5.0ms
interval 100.0ms
pk_delay 18us
av_delay 13us
sp_delay 11us
backlog 0b
pkts 18179
bytes 5577630
way_inds 0
way_miss 54
way_cols 0
drops 0
marks 0
ack_drop 0
sp_flows 1
bk_flows 1
un_flows 0
max_len 1514
quantum 488
qdisc cake 801b: dev eth0.2 root refcnt 2 bandwidth 1Mbit besteffort triple-isolate nonat nowash no-ack-filter split-gso rtt 100.0ms noatm overhead 26
Sent 3332732 bytes 16843 pkt (dropped 0, overlimits 1017 requeues 0)
backlog 0b 0p requeues 0
memory used: 15872b of 4Mb
capacity estimate: 1Mbit
min/max network layer size: 28 / 1500
min/max overhead-adjusted size: 54 / 1526
average network hdr offset: 14
Tin 0
thresh 1Mbit
target 18.2ms
interval 113.2ms
pk_delay 17us
av_delay 14us
sp_delay 12us
backlog 0b
pkts 16843
bytes 3332732
way_inds 0
way_miss 88
way_cols 0
drops 0
marks 0
ack_drop 0
sp_flows 0
bk_flows 1
un_flows 0
max_len 1514
quantum 300
qdisc noqueue 0: dev wlan1 root refcnt 2
Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
backlog 0b 0p requeues 0
root@OpenWrt:~#
```
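The two cake instances above report different `target`, `interval` and `quantum` values even though neither sets a target and both use `rtt 100.0ms`. The sketch below reproduces those numbers under the assumption that cake tunes them from the shaped rate the way the Linux `sch_cake` source does: the target is raised to 1.5× the time needed to send one full frame when that exceeds the 5 ms default, and the quantum is derived from the byte rate and clamped to 300..1514. The 1514-byte frame size (1500-byte MTU plus 14-byte Ethernet header) is also an assumption, and `cake_tin_params` is a hypothetical helper, not cake's code.

```python
def cake_tin_params(bandwidth_bit_s, frame_bytes=1514, target_ms=5.0, rtt_ms=100.0):
    """Approximate cake's per-tin (target_ms, interval_ms, quantum) for a shaped rate.

    Assumed tuning (modelled on Linux sch_cake):
      target   = max(1.5 * time to send one frame, default target)
      interval = max(rtt + target - default target, 2 * target)
      quantum  = clamp((rate in bytes/s) >> 12, 300, 1514)
    """
    frame_time_ms = frame_bytes * 8 / bandwidth_bit_s * 1000.0
    target = max(1.5 * frame_time_ms, target_ms)
    interval = max(rtt_ms + target - target_ms, 2 * target)
    quantum = max(min((bandwidth_bit_s // 8) >> 12, 1514), 300)
    return target, interval, quantum


if __name__ == "__main__":
    for dev, rate in (("eth0.1", 16_000_000), ("eth0.2", 1_000_000)):
        t, i, q = cake_tin_params(rate)
        print(f"{dev}: target {t:.1f}ms interval {i:.1f}ms quantum {q}")
```

For eth0.1 at 16Mbit this gives 5.0 ms / 100.0 ms / 488, and for eth0.2 at 1Mbit about 18.2 ms / 113.2 ms / 300, matching the output: at 1 Mbit a single full-size frame already takes about 12 ms to send, so a 5 ms target would be unreachable.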

For the script to work properly you have to disable SQM.
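One quick way to confirm SQM is really off is to check which qdisc sits at the root of each interface in `tc -s qdisc`. In this thread the first output has cake at the root of eth0.1 and eth0.2, while the later one has hfsc at the root of eth0.1. A minimal Python sketch that extracts this from the command's text output; treating a root cake qdisc as "SQM still active" is an assumption that fits this setup, not a general rule, and the function names are hypothetical.

```python
import re

# Matches lines such as "qdisc cake 801e: dev eth0.1 root refcnt 2 ..."
ROOT_QDISC = re.compile(r"^qdisc (\S+) (\S+) dev (\S+) root\b")


def root_qdiscs(tc_output):
    """Map each device to the kind of its root qdisc, from `tc -s qdisc` text."""
    roots = {}
    for line in tc_output.splitlines():
        m = ROOT_QDISC.match(line.strip())
        if m:
            kind, _handle, dev = m.groups()
            roots[dev] = kind
    return roots


def cake_root_devices(tc_output):
    """Devices whose root qdisc is cake (in this setup: SQM is still attached)."""
    return sorted(dev for dev, kind in root_qdiscs(tc_output).items() if kind == "cake")
```

Child qdiscs (lines with `parent` instead of `root`) are ignored, so an fq_codel leaf under hfsc does not hide the hfsc root.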

How so? Unless the shaper also affects internal traffic, connecting to the router's GUI should not cause any noticeable network load. (That said, LuCI can be CPU-intensive, but at the low WAN rates discussed in this thread most routers, even ones from 10 years ago, should be plenty fast to handle LuCI and the shaper without missing a beat.)

The bandwidth & load from an open normal LuCI settings page is negligible.

LuCI consumes the most bandwidth and CPU if you keep a heavy, automatically refreshing page open, e.g. the realtime conntrack graph with DNS resolution of connections enabled.

SQM is disabled, @Hudra.

LuCI was not open during the test, @dlakelan.

```
root@OpenWrt:~# tcpdump -i eth0 -w /tmp/capture.pcap
tcpdump: listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes
^C9109 packets captured
9143 packets received by filter
0 packets dropped by kernel
root@OpenWrt:~# tcpdump -i eth0 -w /tmp/capture.pcap
tcpdump: listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes
^C65349 packets captured
65495 packets received by filter
0 packets dropped by kernel
root@OpenWrt:~#
```

Is this correct?

```
root@OpenWrt:~# tc -s qdisc
qdisc noqueue 0: dev lo root refcnt 2
Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
backlog 0b 0p requeues 0
qdisc fq_codel 0: dev eth0 root refcnt 2 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
Sent 51722115 bytes 88106 pkt (dropped 0, overlimits 0 requeues 26)
backlog 0b 0p requeues 26
maxpacket 3028 drop_overlimit 0 new_flow_count 1667 ecn_mark 0
new_flows_len 0 old_flows_len 0
qdisc hfsc 1: dev eth0.1 root refcnt 2 default 13
Sent 46813332 bytes 44084 pkt (dropped 4624, overlimits 39932 requeues 0)
backlog 0b 0p requeues 0
qdisc fq_codel 800b: dev eth0.1 parent 1:13 limit 10240p flows 1024 quantum 3000 target 9.0ms interval 104.0ms memory_limit 140000b ecn
Sent 45141413 bytes 41479 pkt (dropped 4066, overlimits 0 requeues 0)
backlog 0b 0p requeues 0
maxpacket 4450 drop_overlimit 455 new_flow_count 6173 ecn_mark 0 drop_overmemory 455
new_flows_len 1 old_flows_len 1
qdisc fq_codel 800d: dev eth0.1 parent 1:15 limit 10240p flows 1024 quantum 3000 target 9.0ms interval 104.0ms memory_limit 140000b ecn
Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
backlog 0b 0p requeues 0
maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0
new_flows_len 0 old_flows_len 0
Segmentation fault
root@OpenWrt:~#
```
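The `Sent ... (dropped ...)` counters above can be turned into a drop percentage for a quick comparison between qdiscs. This sketch treats sent plus dropped packets as the number offered to the qdisc, which ignores anything still sitting in the backlog (0 in this output); `drop_percent` is a hypothetical helper.

```python
import re

# Matches tc statistics lines such as
# "Sent 46813332 bytes 44084 pkt (dropped 4624, overlimits 39932 requeues 0)"
SENT_LINE = re.compile(r"Sent (\d+) bytes (\d+) pkt \(dropped (\d+),")


def drop_percent(stats_line):
    """Percentage of packets dropped, counting sent + dropped as packets offered."""
    m = SENT_LINE.search(stats_line)
    if not m:
        raise ValueError("not a tc 'Sent ... pkt (dropped ...' line")
    sent_pkts, dropped = int(m.group(2)), int(m.group(3))
    offered = sent_pkts + dropped
    return 100.0 * dropped / offered if offered else 0.0
```

For the hfsc root on eth0.1 this comes to roughly 9.5% of packets dropped since the qdisc was created (the counters are cumulative).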