Hi all,

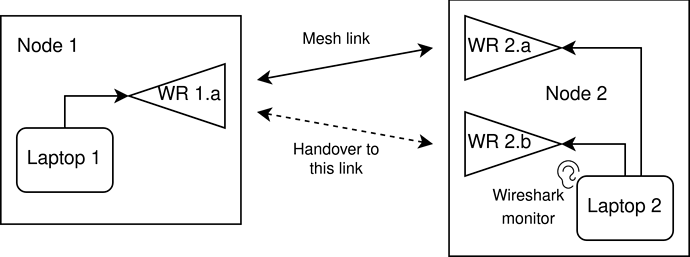

I am currently working on my bachelor's thesis, for which I need to design a handover from one 802.11s connection to another between two nodes, as shown in the figure above.

Context:

- Node 1 has one laptop and one wireless router WR 1.a

- Node 2 has one laptop and two wireless routers: WR 2.a and WR 2.b

- Node 2 has two WRs so that it can use whichever one has the strongest link to Node 1

- On laptop 2 Wireshark is active in wireless monitor mode

- Each WR runs OpenWrt; laptops run Linux. All devices have static IPs and DHCP disabled.

- All radios are statically configured to have a mesh interface and to be in the same MBSS

- Transparent L2 connection between the laptops.

- Handover is required from WR 2.a to WR 2.b

- Existing situation: connection from WR 1.a to WR 2.a

- Desired situation: connection from WR 1.a to WR 2.b

- The laptops should notice the handover as little as possible (packet loss, delay, etc.) while transferring data.

All WRs run OpenWrt 21.02.3 with iw-full, kmod-ath9k and wpad-mesh-wolfssl, installed using the Image Builder. /etc/config/network is the default (with the radio enabled).

/etc/config/wireless:

    config wifi-device 'radio0'
        option type 'mac80211'
        option path 'pci0000:00/0000:00:0e.0'
        option channel '36'
        option band '5g'
        option htmode 'HT20'
        option disabled '0'

    config wifi-iface 'mesh0'
        option device 'radio0'
        option network 'lan'
        option mode 'mesh'
        option ssid 'MaMaNet'
        option mesh_id 'MaMaNet'
        option encryption 'none'
        option disabled '0'

ifconfig output (truncated with ..., showing only the relevant interfaces):

    br-lan    Link encap:Ethernet  HWaddr 00:0D:B9:39:7B:10
              inet addr:192.168.1.7  Bcast:192.168.1.255  Mask:255.255.255.0
              ...
              UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
              ...

    wlan0     Link encap:Ethernet  HWaddr 04:F0:21:17:7B:7E
              ...
              UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
              ...

With WR 2.b powered off, the mesh network works and I can ping all devices from both laptops. To measure the mesh link setup time, I make WR 2.a leave the mesh and then rejoin it, and use pings to measure how long it takes before the connection is usable.
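A rough sketch of how this measurement can be scripted on WR 2.a. It is an illustration under assumptions, not necessarily the exact procedure: it assumes the leave/join is done directly with iw, that channel 36 from the config above is given as 5180 MHz HT20, and PEER_IP is a hypothetical address for the other laptop. Timing resolution is one second, which is coarse but enough for delays in the 10-30 second range.

```shell
#!/bin/sh
# Sketch: time from mesh join until the first successful ping.
# IFACE and MESH_ID come from the config above; PEER_IP is hypothetical.
IFACE="${IFACE:-wlan0}"
MESH_ID="${MESH_ID:-MaMaNet}"
PEER_IP="${PEER_IP:-192.168.1.10}"   # hypothetical address of the far laptop

# wait_until CMD [ARGS...]
# Retry CMD once per second until it succeeds, then print the elapsed seconds.
wait_until() {
    start=$(date +%s)
    until "$@" >/dev/null 2>&1; do
        sleep 1
    done
    end=$(date +%s)
    echo $((end - start))
}

# measure_join
# Leave the mesh, rejoin it, and print the seconds until PEER_IP answers a ping.
measure_join() {
    iw dev "$IFACE" mesh leave
    # Channel 36 is 5180 MHz; HT20 matches the htmode in /etc/config/wireless.
    iw dev "$IFACE" mesh join "$MESH_ID" freq 5180 HT20
    wait_until ping -c 1 -W 1 "$PEER_IP"
}
```

Calling measure_join prints the number of seconds between the join command and the first ping reply. Comparing that number with the roughly 100 ms peering time seen in Wireshark shows how much of the delay happens after peering has completed.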

Questions:

- Why does ifconfig report wlan0 while the configured interface is called mesh0?

- After WR 2.a joins the mesh, it takes 10-30 seconds before the first ping (laptop to laptop) succeeds. Why does it take so long? (Wireshark shows that the Mesh Peering sequence takes about 100 ms.)

- To facilitate the handover, we want to disable automatic peering and only enable or disable specific peerings when needed. We did the following:

  - WR 1.a is powered on and we run: iw dev wlan0 set mesh_param mesh_auto_open_plinks=0. The setting is confirmed with iw dev wlan0 get mesh_param mesh_auto_open_plinks.

  - WR 2.a is then powered on and connects as usual. We expected WR 2.a not to connect to WR 1.a, or to be blocked. How is this possible?
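For context, this is roughly what we intend to do once automatic peering is off. It is a minimal sketch, assuming that peer links can be controlled per station with iw's plink_action (open or block); the helper names and the MAC arguments are hypothetical, and whether this works alongside wpad-mesh is part of what we are unsure about.

```shell
#!/bin/sh
# Sketch: manual peer link control for the handover on WR 1.a.
# set_plink and handover are hypothetical helper names.

# set_plink IFACE PEER_MAC ACTION
# ACTION must be "open" or "block"; anything else is rejected with status 2.
set_plink() {
    case "$3" in
        open|block) ;;
        *) echo "usage: set_plink IFACE PEER_MAC open|block" >&2; return 2 ;;
    esac
    iw dev "$1" station set "$2" plink_action "$3"
}

# handover IFACE OLD_MAC NEW_MAC
# Make-before-break: open the link to the new peer first,
# and only block the old peer if that command succeeded.
handover() {
    set_plink "$1" "$3" open &&
    set_plink "$1" "$2" block
}
```

On WR 1.a this would be called as handover wlan0 followed by the wlan0 MAC addresses of WR 2.a and WR 2.b. The resulting peer link state can be checked with iw dev wlan0 station dump.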

Thank you for your support!