I keep bringing up the Arslan/McKeown paper: if we signaled the maximum queue occupancy (in, say, 4 bits) along a path and returned that information to the sender, the sender might be able to estimate after how many more doublings the queue would overflow and simply exit slow start smoothly, before dumping too much data into the network. Now, that would not be 100% perfect (after all, flows other than the current one also affect the rate of change of queue occupancy at the bottleneck), but I bet it would be a "good enough" improvement over the status quo to go for it....
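To make that idea a bit more concrete, here is a minimal Python sketch under loudly stated assumptions. A hypothetical 4-bit field carries the maximum queue occupancy seen along the path as a fraction of that queue's buffer (code/15), and the receiver echoes it back. While slow start doubles cwnd every RTT, the backlog at the fullest queue grows at least 2x per RTT (if backlog = cwnd - BDP, the next backlog is 2*cwnd - BDP = 2*backlog + BDP), so log2(15/code) is an upper bound on the doublings left before overflow. The names `doublings_until_overflow` and `should_exit_slow_start` are made up for illustration; this is not the Arslan/McKeown mechanism itself.

```python
import math

FIELD_BITS = 4
MAX_CODE = (1 << FIELD_BITS) - 1  # 15: largest value a 4-bit field can carry


def doublings_until_overflow(code: int) -> float:
    """Upper bound on slow-start doublings left before the fullest queue overflows.

    Hypothetical encoding: code / MAX_CODE is the occupied fraction of the
    bottleneck buffer. Because the backlog at least doubles per RTT during
    slow start, the true number of remaining doublings can only be smaller.
    """
    if not 0 <= code <= MAX_CODE:
        raise ValueError(f"code must fit in {FIELD_BITS} bits")
    if code == 0:
        return math.inf  # no standing queue observed yet, keep doubling
    occupied_fraction = code / MAX_CODE
    return math.log2(1.0 / occupied_fraction)


def should_exit_slow_start(code: int, margin: float = 1.0) -> bool:
    """Leave slow start once at most `margin` doublings are estimated to remain."""
    return doublings_until_overflow(code) <= margin


if __name__ == "__main__":
    for code in (0, 1, 4, 8, 15):
        print(code, f"{doublings_until_overflow(code):.2f}", should_exit_slow_start(code))
```

With `margin=1.0`, a reported code of 8 (a bit over half the buffer) already triggers the exit, while a code of 4 still allows one more doubling; other flows changing the queue between reports are exactly the "not 100% perfect" part mentioned above.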

It certainly would beat L4S' harebrained idea of bitbanging the buffer occupancy out as a one-bit signal, as that requires no packet loss and a longer integration time than a per-packet queue occupancy field... I'll add that L4S' idea of how to solve this issue essentially was to double down on packet-pair measurements, which we already know do not work well over the existing internet; color me not surprised that there apparently was zero news about "paced chirping" since the thesis looking at it and a few resulting papers came out...
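Some rough arithmetic on the integration-time point, as a Python sketch. Assume, purely for illustration (this is not how any specific AQM marks), that a one-bit marker CE-marks each packet independently with probability equal to the queue occupancy p. The receiver's estimate marks/N then has a standard error of sqrt(p(1-p)/N), so matching the resolution of a 4-bit field (16 levels) to within half a step needs N >= p(1-p)*(2*15)^2, i.e. up to 225 packets at p = 0.5, versus a single packet for an explicit per-packet field. Lost packets also take their marks with them. Function names are hypothetical.

```python
import math
import random


def packets_needed(p: float, levels: int = 16) -> int:
    """Packets needed so the standard error of the CE-mark fraction is at most
    half of one quantization step of a `levels`-level explicit field.

    Assumes independent Bernoulli marking with probability p (illustrative only).
    """
    steps = levels - 1
    # sqrt(p(1-p)/N) <= 1 / (2 * steps)  <=>  N >= p(1-p) * (2 * steps)**2
    return math.ceil(p * (1 - p) * (2 * steps) ** 2)


def estimate_occupancy(p: float, n: int, rng: random.Random) -> float:
    """Receiver-side estimate of p from n packets under one-bit Bernoulli marking."""
    marks = sum(1 for _ in range(n) if rng.random() < p)
    return marks / n


if __name__ == "__main__":
    rng = random.Random(42)
    for p in (0.1, 0.5, 0.9):
        n = packets_needed(p)
        print(f"p={p}: ~{n} packets, one estimate: {estimate_occupancy(p, n, rng):.3f}")
```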

I pointed at their more recent work in that Juniper piece, as well as their methods on GitHub. There was also a really good conversation with Dave Reed about aiming for zero queuing in general with early signalling, which tosses a LOT of my prior thinking out with the bathwater, on my LinkedIn also, over here:

I hate how fragmented the conversation is. I am inclined to re-re-re resume work on "bobbie".

QUIC is a place where we could play with that concept...

Not that it matters, but I am not sure the otherwise great minds behind QUIC are open to such ideas (1). Also, this needs information from the network, which we will not be getting any time soon, maybe after L4S has crashed and burned (or simply whimpered out)...

(1) They seem to have aligned fully behind the "measure queueing delay directly" approach (2), so I am not sure whether they would be willing to contemplate different sources of information.

(2) I am not opposed to doing that per se (heck, that is the not-so-secret sauce behind codel and its derivatives (3)), except that for slow start it doesn't seem to work out.

(3) Except that, unlike the end-points, the AQM has veridical information about its actual queueing delay, a point Arslan/McKeown make much better than I can. The end-points suffer from having only a somewhat noisy delay measurement, where total delay depends not only on the bottleneck's queueing delay but also on things like transient "jitter" affecting transmission order on non-bottleneck links (4).

(4) As Jonathan once reminded me, our typical links are really either at 100% or 0% load, so it is all about the duty cycle: if a packet reaches an on-average non-congested node while another packet is still being transmitted, it will need to wait for that transmission to finish, so every node introduces its own variable part to the end-to-end delay. Good luck teasing these apart to deduce the bottleneck's true queueing delay without additional information... (I bet there are plenty of paths where just treating all delay changes as queueing-induced will work out fine, but I am not convinced that these balance out the paths where this is wrong.)
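A toy Python simulation of footnote (4), with made-up numbers. The bottleneck's queueing delay is held constant; each non-bottleneck hop is either idle or busy (busy with probability equal to its utilization) when our packet arrives, and if busy the packet waits for the rest of the ongoing transmission, modeled as uniform over one packet's serialization time. The endpoint only sees the sum, so the measured delay varies even though the true bottleneck queue never changes. Hop parameters and function names are hypothetical.

```python
import random
import statistics


def one_way_delay_ms(bottleneck_queue_ms, hops, rng):
    """Total delay seen by the endpoint for one packet.

    hops: list of (utilization, serialization_ms) for non-bottleneck links.
    Each link is either transmitting (probability = utilization) or idle.
    """
    delay = bottleneck_queue_ms
    for utilization, serialization_ms in hops:
        if rng.random() < utilization:
            # Link busy: wait for the remainder of the packet already on the wire.
            delay += rng.uniform(0.0, serialization_ms)
    return delay


if __name__ == "__main__":
    rng = random.Random(7)
    # Hypothetical path: 5 lightly loaded 100 Mbit/s hops (1500 B takes 0.12 ms)
    # plus one 50%-busy WiFi-like hop where a transmission may take ~2 ms.
    hops = [(0.3, 0.12)] * 5 + [(0.5, 2.0)]
    samples = [one_way_delay_ms(5.0, hops, rng) for _ in range(10_000)]
    print(f"true bottleneck queue: 5.00 ms, measured min/mean/max: "
          f"{min(samples):.2f}/{statistics.mean(samples):.2f}/{max(samples):.2f} ms, "
          f"stdev {statistics.stdev(samples):.2f} ms")
```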

I had a quick look again at one of the chirping papers and I think at least naive but fast implementations of these ideas are incompatible with the existing internet.... just look at likely bottlenecks like WiFi or a DSL link: both tend to batch packets, which at the egress side might continue along their path not with the shortest packet spacing expected from the link capacity, but with a packet spacing dependent on the speed of the egress interface... this seems generally not ideal. I very much appreciate that BBR, even though it focuses on induced delay, did not go down that path but instead tries to saturate a path and measure how/if additional rate increases increase the delay; this approach is pretty much immune to the problems that packet-pair measurements bring. I am sure one can cleverly try to counter aggregating physical layers somehow, but likely involving some sort of averaging and hence compromising on the "fast" part... and fast is not that negotiable either: if I detect the carrying capacity only after having admitted too much in-flight data, I am no better off than simply doubling the congestion window every RTT until seeing the first drop feedback...
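The batching problem can be shown with two lines of arithmetic. A packet-pair (or chirp) estimator infers capacity as packet size divided by the spacing the packets had when they left the bottleneck. If a batching MAC (the WiFi/DSL case above) holds packets and releases them back to back onto a faster egress interface, the receiver sees the egress spacing instead, and the estimate turns into the egress rate. A minimal Python sketch with hypothetical link speeds and a deliberately simplified batching model:

```python
PACKET_BITS = 1500 * 8  # one full-size Ethernet payload


def packet_pair_estimate_bps(bottleneck_bps: float, egress_bps: float, batched: bool) -> float:
    """Capacity a receiver would infer from the arrival spacing of two back-to-back packets."""
    # Leaving the bottleneck, the pair is spaced by one serialization time at the bottleneck rate.
    gap_s = PACKET_BITS / bottleneck_bps
    if batched:
        # Hypothetical batching MAC: it queues the pair and re-sends it back to back,
        # so the spacing is set by the (faster) egress interface instead.
        gap_s = PACKET_BITS / egress_bps
    return PACKET_BITS / gap_s


if __name__ == "__main__":
    for batched in (False, True):
        est = packet_pair_estimate_bps(20e6, 1e9, batched)
        print(f"batched={batched}: estimated {est / 1e6:.0f} Mbit/s (true bottleneck: 20 Mbit/s)")
```

Within a batch every pair is biased the same way; only the gaps between batches carry the real rate, which is why a fix likely needs averaging across batches and hence gives up some of the "fast".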

I do not doubt that paced chirping works like a bat out of hell in a well-controlled lab environment free of problematic link types.

BTW, https://www.duo.uio.no/bitstream/handle/10852/63604/main.pdf?sequence=1&isAllowed=y openly admits that

- Bursty link MACs (Medium Access Controllers) can eliminate the individual rates of the packets in a chirp. Dealing with this situation is future work.

In short, paced chirping ATM (for more than 10 years) is known not to be fit for general internet deployment...

Thx for the steer to that thesis... a little fq here would help!

And probably more cow-bells...

I fail to see how continuing on a packet pair method is anything but an academic exercise:

'Assume packet-pair measurements would work robustly and reliably, could we use that to improve slow-start'

with 'now discuss why your solution will not work well over the existing internet' part of the assignment missing.

Or put differently: given the known problems of packet-pair measurements, any 'solution' employing them should contain an explicit empirical section on how the solution deals with the problem under realistic conditions....

Punting this to future work might be fine for academia, but for a deployable solution not so much... then again, this is by the same jokers who are about to deploy L4S in spite of similarly severe issues with lab versus existing internet*.

*) I am joking; L4S' issues are part of its reason to exist: it is an indirect play to give an advantage to short-RTT paths to make ISP-owned data centers more attractive... note this is pure subjective speculation.

How young you are to have achieved such cynicism!

I happen to agree that L4S is a play to make the ISP owned DC more attractive. Every design decision is made to make it hard to exit their DC. Which, I think/hope, will result in ... no uptake. And we can put this part of our lives down and go on FQ-ing all the non-cable technologies.

This many months later, are you, @moeller0, and @f.b.ex-turristech still of the mindset that L4S is largely unattractive and destined to fizzle out?

The reason I ask is that I find myself sitting here reading up on enabling L4S bits and looking at the theoretical results, getting excited. But then I'm left wondering if I'm chasing the wrong thing.

If not L4S, what else does one chase after in 2024 to make a better internet?

Personally yes, I am. IMHO L4S is "too little, too late". (Or phrased differently L4S over-promises but under-delivers). There is however quite some push behind it and you know what they say about what is required to make pigs fly...

The problem I see is that without proper preemptive scheduling, L4S will only ever work as well as its weakest link, because the default L4S scheduler is cooperative* within both classes, combined with a priority scheduler that prioritizes ECT(1) traffic over the rest. Once you jettison that misdesign and switch over to a flow-queueing scheduler, you have solved most of L4S' issues, but then you have also realized most of L4S' gain even for non-ECT(1) traffic.
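A toy Python illustration of why the scheduler matters. Take a snapshot in which one capacity-seeking (or simply misbehaving) flow has 100 packets queued and one sparse real-time flow has a single packet. In a shared FIFO queue (each of DualQ's two queues is a FIFO) the sparse packet waits behind the whole burst; with per-flow round robin (a simplified stand-in for the flow queueing in fq_codel/cake, not their actual DRR and sparse-flow logic) it waits for at most one packet per competing flow. Equal packet sizes and the snapshot model are simplifying assumptions.

```python
from collections import deque


def fifo_order(arrivals):
    """Shared FIFO: packets leave in arrival order, whatever flow they belong to."""
    return list(arrivals)


def round_robin_order(arrivals):
    """Per-flow queues served one packet at a time in turn (equal-size packets assumed)."""
    queues = {}
    for flow, seq in arrivals:
        queues.setdefault(flow, deque()).append((flow, seq))
    order = []
    while queues:
        for flow in list(queues):  # iterate over a copy so emptied flows can be removed
            order.append(queues[flow].popleft())
            if not queues[flow]:
                del queues[flow]
    return order


if __name__ == "__main__":
    arrivals = [("bulk", i) for i in range(100)] + [("voip", 0)]
    for name, schedule in (("FIFO", fifo_order), ("round robin", round_robin_order)):
        wait = schedule(arrivals).index(("voip", 0))
        print(f"{name}: voip packet waits behind {wait} packets")
```

The same logic applies to a single misbehaving flow in a cooperative shared queue: everyone behind it pays, whereas flow queueing confines the damage to the offending flow.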

The thing is, most latency-sensitive traffic is not infinitely capacity seeking and most truly capacity seeking traffic is not all that latency sensitive; L4S' unique claim however is that it can also gracefully serve such unicorn applications that are both greedy and real-time. You might understand this better if you look at the contrived examples they bring for why L4S is needed:

a) a real-time high-definition video application where users can instantaneously switch between cameras (without lag!). This is far less attractive than it sounds: for professional sports broadcasts, experts watch multiple feeds in parallel to decide which feed to broadcast with a few seconds of delay, so the resulting broadcast has some sort of coherence/narrative/logic in itself. I am not sure that end-users, without the benefit of being able to preview the individual feeds for a bit, will end up being all that interested in having access to them.

b) a remote desktop application that was so badly designed and coded that it is unlikely it would have been any more usable had the original team used L4S... (remote desktop is clearly a latency-sensitive application, and that needs to inform the whole design and implementation process; just enabling L4S and assuming that will fix all warts is quite optimistic). That bad remote desktop thing came out when there already were superior solutions available that worked reasonably well across the Atlantic...

BUT, just because I think L4S is generally not worth it does not mean:

a) that this assessment is correct

b) and even stipulating generally L4S might not cut it, it might well be helpful for your own use-cases

=> so if you feel up to it, just go and try yourself?

Honestly, I have no idea, but then I do not do "internet-stuff" for a living ;).

*) Cooperative scheduling/cooperative multitasking used to be normal for operating systems like DOS, Windows 3 and classic Mac OS, but essentially all mainstream OSes switched to preemptive scheduling. Similar to packet scheduling, in theory cooperative scheduling can be better than strict preemptive scheduling; however, for this to happen ALL producers of scheduling entities (packets or load) need to, well, cooperate, and a single misbehaving entity can ruin it for all...

P.S.: Then there are also the clear side effects of L4S that will increase the RTT bias of TCP and will make it easy for short-RTT traffic to ride roughshod over long-RTT traffic.

Hey, thanks for asking. L$S (pun intended) is destined to happen, I'm afraid, no matter the questions and Red Team findings. There is too much power at the IETF held by certain companies, so it's a done deal.

My suggestion to you, as well as to anyone else here, would be to take a look at CAKEMQ, @dtaht et al.'s effort; that's definitely worth a look.

Also, LibreQoS: an open-source QoE middlebox for ISPs that's currently helping a few hundred ISPs worldwide. It is another of Dave's efforts to fix bufferbloat, and I'm also part of it.

L4S' Jason Livingood (Comcast) is willing to fund an effort to add L4S support to CAKE and FQ-CoDel, so feel free to reach out to him: https://x.com/jlivingood/status/1734299833433821599?s=20

Very cool! I will add this to my weekend reading list.

I've had my eye on this as well for some time--pretty neat looking! Is it safe to assume it does not really have a place in a personal (home) configuration? In other words, it's really only beneficial to an entity such as an ISP?

In its current state, yes, it's targeted at ISPs; however, there are some university campuses "playing" with it already, and we will share the case studies when available. Also, we are contemplating rescaling it for a Raspberry Pi-ish type of device, as well as testing it with 100-400Gb appliances. The best way to keep an eye on the news is to join our Matrix channel: https://app.element.io/#/room/#libreqos:matrix.org

Quick note: fq_codel already has this:

ecn | noecn
has the same semantics as codel and can be used to mark packets instead of dropping them. If ecn has been enabled, noecn can be used to turn it off and vice-a-versa. Unlike codel, ecn is turned on by default.

ce_threshold
sets a threshold above which all packets are marked with ECN Congestion Experienced. This is useful for DCTCP-style congestion control algorithms that require marking at very shallow queueing thresholds.

ce_threshold_selector
sets a filter so that the ce_threshold feature is applied to only a subset of the traffic seen by the qdisc. If set, the MASK value will be applied as a bitwise AND to the diffserv/ECN byte of the IP header, and only if the result of this masking equals VALUE, will the ce_threshold logic be applied to the packet.

This essentially allows requesting a differential CE-marking strategy for ECT(1) compared to ECT(0). It is a harsh step marking function, not a ramp, but it should allow for first L4S experiments... (one clear advantage over L4S' DualQ is that it offers a decent scheduler instead of the "hopes and prayers" coupling "design" in DualQ)
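A Python sketch of the per-packet decision the man page text describes, assuming a selector VALUE of 0x1 with MASK 0x3 (so only ECT(1) packets match) and treating ce_threshold as a threshold on queue sojourn time. It models only the extra step marking; regular CoDel marking/dropping still applies to all traffic and is not modeled. Function names are made up for illustration rather than taken from the kernel, and the exact tc argument syntax should be checked in the tc-fq_codel man page.

```python
# ECN codepoints in the two low-order bits of the IPv4 TOS / IPv6 traffic class byte (RFC 3168)
NOT_ECT, ECT_1, ECT_0, CE = 0b00, 0b01, 0b10, 0b11


def selector_matches(dsfield: int, value: int, mask: int) -> bool:
    """ce_threshold_selector semantics: apply MASK as a bitwise AND, compare with VALUE."""
    return (dsfield & mask) == value


def apply_ce_threshold(dsfield: int, sojourn_ms: float, ce_threshold_ms: float,
                       value: int = ECT_1, mask: int = 0b11) -> int:
    """Return the (possibly CE-marked) DS field byte for one dequeued packet.

    Step function: every selected packet whose sojourn time exceeds the
    threshold is marked CE, none below it; there is no ramp.
    """
    if selector_matches(dsfield, value, mask) and sojourn_ms > ce_threshold_ms:
        return dsfield | CE  # setting both ECN bits yields CE; DSCP bits are untouched
    return dsfield


if __name__ == "__main__":
    dscp_ef = 46 << 2  # DSCP EF in the upper six bits, just to show it survives
    for ecn, label in ((ECT_1, "ECT(1)"), (ECT_0, "ECT(0)")):
        for sojourn in (0.5, 2.0):
            out = apply_ce_threshold(dscp_ef | ecn, sojourn, ce_threshold_ms=1.0)
            print(f"{label}, sojourn {sojourn} ms -> ECN bits {out & 0b11:02b}")
```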

You still need L4S-enabled end points to actually be able to test that...

L4S is not all about a scheduler. I think you know this. It is a signal - for both endpoints, that queuing is occurring, so endpoints throttle what they send. Dropping packets as part of a congestion strategy is not great in the long term when endpoints react more aggressively. This whole strategy is borne of the understanding that you cannot really do much with packets already in flight, but applications can adjust themselves to available bandwidth, and routers can queue latency insensitive data.

As others here have noted: L4S is coming.

Look, I have seen how L4S was turned into an RFC, and I can tell it did not reach that status by technical excellence, but rather by excellent gamesmanship around the flawed IETF process.

I just note that you restate its claims without checking whether it actually delivers on its promises in a robust and reliable fashion (spoiler: it does not).

That said, higher temporal fidelity in congestion signaling is not a bad idea, but what L4S made out of that premise is IMHO simply underwhelming.

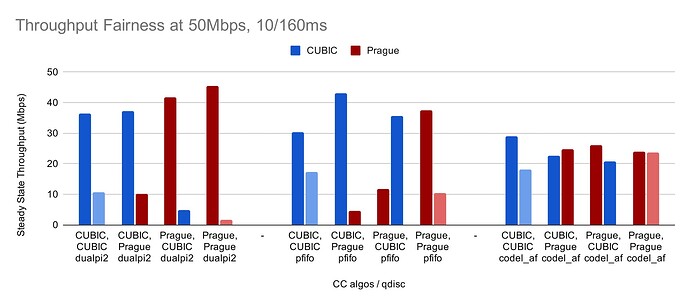

That seems settled, the bigger question is, will it stay. And for the latter I have my doubts, based on L4S not meeting its promises as documented here:

This concisely shows:

DualQ has higher RTT Bias than FIFO or an approximate fairness version of codel

This RTT bias is even more pronounced for two Prague/L$S flows than for two CUBIC flows

TCP Prague shows even more RTT bias than CUBIC when the bottleneck is a simple FIFO

Lastly, a better scheduler (here an approximate flow scheduler) can salvage a lot of these issues.
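For readers unfamiliar with the term: RTT bias means that at a shared bottleneck a flow with a shorter RTT gets a larger share of capacity. The plots above are measurements; what follows is only a toy Python model of classic Reno-style AIMD (not TCP Prague, not DualQ). It assumes both flows grow cwnd by one packet per their own RTT, both halve in the same instant whenever their combined rate exceeds capacity, and queueing delay is ignored. In that model the long-run throughput ratio approaches the square of the RTT ratio.

```python
def aimd_throughput_ratio(rtt_short_ms: float, rtt_long_ms: float,
                          capacity_pkts_per_ms: float, duration_ms: int = 60_000) -> float:
    """Throughput of the short-RTT flow divided by that of the long-RTT flow.

    Toy model: 1 ms time steps, additive increase of one packet per RTT,
    synchronized halving when the summed sending rate exceeds capacity.
    """
    rtts = (rtt_short_ms, rtt_long_ms)
    cwnd = [1.0, 1.0]       # packets
    delivered = [0.0, 0.0]  # packets
    for _ in range(duration_ms):
        rates = [w / rtt for w, rtt in zip(cwnd, rtts)]  # packets per ms
        for i, rtt in enumerate(rtts):
            delivered[i] += rates[i]
            cwnd[i] += 1.0 / rtt  # +1 packet per own RTT
        if sum(rates) > capacity_pkts_per_ms:
            cwnd = [w / 2 for w in cwnd]  # both flows see a loss at the same time
    return delivered[0] / delivered[1]


if __name__ == "__main__":
    for long_rtt in (10, 20, 40, 80):
        ratio = aimd_throughput_ratio(10, long_rtt, capacity_pkts_per_ms=8.0)
        print(f"RTT 10 ms vs {long_rtt} ms: short flow gets {ratio:.1f}x the throughput")
```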

This data has been documented and made known during the RFCification process and yet L4S was ratified with the clearly under-performing DualQ as its reference AQM. Instead of fixing the documented issues, the L4S proponents simply doubled down and pushed that gunk through the IETF process.

... that Prague works as intended. It is not made to compete with other whatever those things are in the bar graphs; it is a replacement.

I get the feeling you really like L4S.

RFC are not set in stone. The standards language it is replacing/deprecating is more than twenty years old. If DualQ needs improvement, a new RFC will arrive.

End users rarely see the magic unicorn shit utopia that WiFi standards alliances promise. But it mostly works.

Let's wait and see how L4S performs in the real world.

Sorry, but you clearly either did not read the RFCs or, worse, did not understand them. Non-L4S TCPs are going to stay with us for a looooong time, so L4S specifically caters to them and claims:

a) https://datatracker.ietf.org/doc/html/rfc9331 : This Experimental specification defines the rules that L4S transports and network elements need to follow, with the intention that L4S flows neither harm each other's performance nor that of Classic traffic.

b) A.1.6. Reduce RTT Dependence

Description: A Scalable congestion control needs to reduce RTT bias as much as possible at least over the low-to-typical range of RTTs that will interact in the intended deployment scenario (see the precise normative requirement wording in Section 4.3, Paragraph 8, Item 4).

The plot above clearly shows:

a) L4S traffic doing harm to classic traffic

b) an increased RTT bias

This really is not rocket science: L4S falls way short of its promised behaviour, for both the reference AQM and the reference L4S protocol. This is crap, pushed through the IETF with a political agenda, not a technical one. I participate in that clusterfsck, so I know the shortcomings of the IETF process way too well.

I consider it to be a piece of garbage pushed through the IETF based on an ideological agenda that made it impossible to learn from what the state of the art has been for over a decade... The point is, if you use SQM today with either fq_codel or cake, enabling L4S will only mildly improve the fidelity of interactive internet use-cases. As I said above, too little, too late.

Not by itself; someone will need to write that and convince the IETF to touch that "molten core" ever again... The point is, the deficiencies in both the protocol and the AQM were documented before the RFCs were ratified, so why expect that this is going to change now?

Really, what is going to happen is that L4S will be hyped a bit longer as the coming solution to all latency woes, only to be silently replaced with the next big thing once everybody sees that this emperor has little to no clothes.

For all intents and purposes, the WiFi Alliance is a marketing organization where such shenanigans are not only expected but part of the reason it exists; the IETF, however, fancies itself an engineering organization where politics and horse-trading have no influence (and yet lacks a process that actually counteracts potential horse-trading, and lo and behold, it ratifies clunkers like the L4S drafts).

Well, no need to wait, just look at the L4S data from the relatively simple tests that I posted above (here is the link again to the full write-up: https://github.com/heistp/l4s-tests in case you did not notice). We already know it will not deliver on its promises, and it will be quite fickle while doing so. Going for a shared queue per traffic class means that a single misbehaving flow will affect a full class, and the asinine "coupling" between the queues also means that misbehaving traffic in one queue will affect traffic in the other class; heck, even simply having naturally bursty traffic in the C-queue already compromises throughput in the L-queue. This is neither robust nor reliable...
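To illustrate the coupling, here is a Python sketch of the DualPI2 coupling law as I read RFC 9332: a PI controller driven by the classic queue's delay produces a base probability p' (not modeled here); classic traffic is dropped or marked with p'^2, and the L queue is CE-marked with at least k*p' (k = 2 by default), even when the L queue itself is empty. The function names are mine, and the PI controller, its parameters and the native L-queue ramp are deliberately left out; check RFC 9332 for the normative details.

```python
K_DEFAULT = 2.0  # coupling factor; 2 is the default in RFC 9332 as I read it


def classic_prob(p_base: float) -> float:
    """Drop/mark probability applied to the classic (C) queue: p_C = p'^2."""
    return p_base ** 2


def coupled_l4s_prob(p_base: float, k: float = K_DEFAULT) -> float:
    """Coupled CE-marking probability pushed onto the L queue: p_CL = k * p'."""
    return min(1.0, k * p_base)


def l4s_mark_prob(p_base: float, native_l_prob: float, k: float = K_DEFAULT) -> float:
    """L-queue marking: the larger of its own (native) ramp and the coupled probability."""
    return max(native_l_prob, coupled_l4s_prob(p_base, k))


if __name__ == "__main__":
    # Hypothetical: bursty classic traffic drives the base probability up while the
    # L queue itself is empty (native ramp gives 0).
    for p_base in (0.0, 0.05, 0.1, 0.3):
        print(f"p'={p_base:.2f}: classic p_C={classic_prob(p_base):.4f}, "
              f"L-queue marking={l4s_mark_prob(p_base, native_l_prob=0.0):.2f}")
```

So with p' = 0.1, classic traffic sees about 1% drops while L4S flows, with nothing queued in the L queue, are marked about 20% of the time and back off accordingly; that is the path by which bursty C-queue traffic can cost L-queue throughput.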

Side-note: the core of the actual high-temporal fidelity congestion signaling was borrowed from data center tcp (DCTCP) however in actual data centers folks do not consider DCTCP as all that hot and continue to come up with better congestion signaling. Most of these have in common that they eschew the dctcp approach of rate coding the queue occupancy via CE marks distributed over all egressing packets (of wildly varying flows) and instead use explicit congestion magnitude signals per packet.
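The rate-coding approach referred to here boils down to DCTCP's estimator (RFC 8257): roughly once per window of data the sender updates alpha <- (1 - g)*alpha + g*M, where M is the fraction of acknowledged bytes that carried a congestion echo and g is suggested as 1/16, and on congestion it cuts cwnd to cwnd*(1 - alpha/2). A minimal Python sketch showing how many window updates alpha needs to track a step change in the marking level; the helper names are made up for illustration.

```python
G = 1.0 / 16  # EWMA gain; RFC 8257 suggests 1/16


def update_alpha(alpha: float, marked_bytes: float, acked_bytes: float, g: float = G) -> float:
    """One per-window update of DCTCP's congestion estimate alpha."""
    m = marked_bytes / acked_bytes if acked_bytes else 0.0  # fraction of CE-echoed bytes
    return (1 - g) * alpha + g * m


def reduce_cwnd(cwnd: float, alpha: float) -> float:
    """DCTCP-style multiplicative decrease, proportional to the estimated congestion extent."""
    return cwnd * (1 - alpha / 2)


def windows_to_track(step_fraction: float, tolerance: float = 0.1, g: float = G) -> int:
    """Windows (roughly RTTs) until alpha reaches (1 - tolerance) of a new marking fraction."""
    alpha, windows = 0.0, 0
    while alpha < (1 - tolerance) * step_fraction:
        alpha = update_alpha(alpha, step_fraction, 1.0, g)
        windows += 1
    return windows


if __name__ == "__main__":
    n = windows_to_track(0.5)
    print(f"alpha needs {n} windows to get within 10% of a new 50% marking level")
    print(f"cwnd of 100 packets after a cut at alpha=0.5: {reduce_cwnd(100, 0.5):.0f}")
```

An explicit per-packet magnitude field carries the current level in every packet instead, so there is no comparable EWMA lag to wait out.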

If L4S had not insisted upon redefining what a CE means (compared to RFC 3168), it could already have used a 1.5-bit magnitude signal (three code points)... water under the bridge.

Your point (I'm not arguing with you) is predicated on L4S flows harming other flows. Flows are oblivious to each other. It is not flows harming other flows; what is it instead? That's right: the scheduler.

One point of L4S is to reduce buffer sizes, thus q sizes, thus congestion.

L4S is not a panacea:

L4S is designed for incremental deployment. It is possible to deploy the L4S service at a bottleneck link alongside the existing best efforts service [DualPI2Linux] so that unmodified applications can start using it as soon as the sender's stack is updated.

Naturally. No need to keep something that does not work.

I've looked. And I see it working. I do not advocate that it is better or worse than anything else because I'm not comparing it. I see it working as intended.

If L4S turns out to be the clusterfuck you pronounce it to be, we can always ask the IEEE to step in and unclusterfuck it.

No, my point is L4S (the whole package so aqm/scheduler (DualQ) and protocol (e.g. TCP Prague)) falls well behind its promises to the point of not being worth deploying.

My short list included RTT bias, which shows up as lower-RTT Prague flows hurting higher-RTT Prague flows; the fact that you subsume this under "L4S flows hurting other flows" makes me believe I was not clear enough...

Yes and no: with two TCP Prague flows of different RTTs we see that DualQ and FIFO show increased RTT bias, while codel_af does not, which demonstrates that DualQ is just a shitty design (but we knew that). But the fact that Prague/Prague/pfifo shows much stronger RTT bias than CUBIC/CUBIC/pfifo (let alone CUBIC/Prague and Prague/CUBIC) shows that TCP Prague will not work well over the longer paths that do occur in the existing internet... which in turn IMHO makes the incremental deployment claims quite hollow...

Then respectfully you did not look close enough... it does not meet its own lofty promises.

Oha, two notes:

a) the IEEE will never step in to fix issues in IETF standards

b) neither will the IETF: their modus operandi is to simply ignore old RFCs that have no merit; only in extreme cases, and only if there is an urgent need, are old standards moved to historic status.

But same recommendation I gave @_FailSafe: just go and try it yourself, maybe it will work wonders on your use-cases...

For my use-cases it is unlikely* to help, as I am just fine with fq_codel/cake performance.... I happen to have no latency-sensitive traffic that is also truly capacity seeking, and hence proper scheduling solves my real-time issues quite well, and codel takes care of the capacity seeking flows equally well.

*) Without any L4S enabled endpoints in the internet it is going to be hard to empirically prove that, but I am fine with not proving that myself, occasionally an argument based on some lab testing and first principles is enough for me.

P.S.: Others however are running with it, there is interest from both Apple and Google, but I note that these tend to have data centers near users and hence will see the increased RTT bias of L4S not as a bug, but as a feature.