Looks like Google is not indexing the new wiki.

What makes you think so?

For example this page:

https://openwrt.org/docs/guide-quick-start/verify_firmware_checksum

Search result:

https://www.google.com/search?q=verify+site%3Aopenwrt.org

Strange issue...

Page created: 2017/08/19

Page last changed: 2018/10/15

Page is in the sitemap: Yes

Google search console is telling me:

- Page has been crawled (last time 14.09.2018), but has not been indexed yet.

- Crawling is permitted

- Indexing is permitted

I manually requested indexing now, but there are more pages like this which have been crawled, but are not indexed.

@thess @jow Any clue what might be going on here? Maybe google is not happy about the rate limiting?

It is certainly possible that rate limiting google slows down indexing.

then again, who is?

I suspect the issue also affects all new pages.

For instance:

https://openwrt.org/docs/guide-user/services/vpn/openvpn/dual_stack

At the same time, the namespace overview page is indexed and cached, and its cached version has an up-to-date link to the page above:

https://webcache.googleusercontent.com/search?q=cache:t8a9oD6ziEoJ:https://openwrt.org/docs/guide-user/services/vpn/openvpn/start

Partly true...

In this case, the page was created on 22/09/2018 and crawled by Google on 24/09/2018. DokuWiki has an anti-spam measure that keeps search engines from picking up new changes: it adds a "noindex" tag for 3 days after each edit. Since there were multiple edits, this "noindex" should have been present until 01/10/2018, and then again from 12/10 to 15/10/2018.
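To make the date arithmetic concrete, here is a minimal sketch. It assumes the 3-day delay quoted above and an inclusive end date; the helper names are made up for illustration, and the wiki's real setting may differ.

```python
from datetime import date, timedelta

# Delay quoted in this post; the wiki's actual configuration may differ.
NOINDEX_DAYS = 3


def noindex_until(edit_day: date, delay_days: int = NOINDEX_DAYS) -> date:
    """Last day on which a page edited on edit_day would still carry noindex."""
    return edit_day + timedelta(days=delay_days)


def was_noindex(crawl_day: date, edit_days: list[date],
                delay_days: int = NOINDEX_DAYS) -> bool:
    """True if crawl_day falls inside the noindex window of any edit."""
    return any(d <= crawl_day <= noindex_until(d, delay_days) for d in edit_days)


created = date(2018, 9, 22)
crawled = date(2018, 9, 24)
print(was_noindex(crawled, [created]))    # True
print(noindex_until(date(2018, 10, 12)))  # 2018-10-15
```

So the crawl on 24/09 landed inside the window opened by the page creation on 22/09, which would explain "crawled, but not indexed" for that visit.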

If you look at the headers today, you will find <meta name="robots" content="index,follow"/>.
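If you want to check this for more pages than you care to open by hand, something like the following could pull the robots directives out of a page's HTML. It is only a sketch using Python's standard `html.parser`; the class and function names are my own, and it looks only at the meta tag, not at any HTTP response headers.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives of every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        # Self-closing tags (<meta ... />) also end up here by default.
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            self.directives += [
                part.strip().lower() for part in content.split(",") if part.strip()
            ]


def robots_directives(html: str) -> list[str]:
    """Return the robots directives found in the given HTML text."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


print(robots_directives('<meta name="robots" content="index,follow"/>'))
# ['index', 'follow']
```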

As of today, nothing prevents Google from crawling and indexing this page... except the rate limiting. Trying to get this page manually indexed via the Search Console leads to "Server error (5xx)", although the actual status we sent was 429.
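For reference, 429 belongs to the 4xx class, not 5xx. A tiny sketch with Python's standard `http.HTTPStatus` shows the mismatch; `describe_status` is just an illustrative helper, and why the Search Console labels it as 5xx is not something I can confirm from here.

```python
from http import HTTPStatus


def describe_status(code: int) -> str:
    """Return the code, its standard reason phrase, and its status class."""
    status = HTTPStatus(code)
    return f"{code} {status.phrase} ({code // 100}xx)"


print(describe_status(429))  # 429 Too Many Requests (4xx)
print(describe_status(503))  # 503 Service Unavailable (5xx)
```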

@jow What do you think about temporarily lifting the rate limiting for Google for, let's say, 1 month? I suggest doing that on November 1st, so that the effects can be easily monitored via the stats.

Don't know much about Google, but

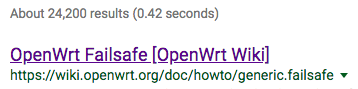

... gets a good result

Feel free to try.

I spent quite a lot of time following old instructions from the old wiki that Google showed me.

Maybe you can just disallow the whole old wiki in the robots.txt?

https://wiki.openwrt.org/robots.txt

Solutions for the old wiki have already been discussed.

All that is missing is some support to move wiki.openwrt.org -> oldwiki.openwrt.org

FYI - I have taken googlebot out of the rate limiting now.

In the short term, no significant effect on server load observed.

I'll keep an eye on this.
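For anyone wondering how such an exemption can be kept safe: a User-Agent string alone is easy to fake, so Google documents a reverse-then-forward DNS check for its crawlers. The sketch below shows that idea; it is not necessarily how the exemption was done here, the function names are made up, and the forward lookup used is IPv4-only.

```python
import socket

# Domains Google documents for its crawler hostnames.
GOOGLE_CRAWLER_DOMAINS = (".googlebot.com", ".google.com")


def is_google_hostname(hostname: str) -> bool:
    """True if the hostname sits under one of the crawler domains."""
    host = hostname.rstrip(".").lower()
    return host.endswith(GOOGLE_CRAWLER_DOMAINS)


def is_verified_googlebot(ip: str) -> bool:
    """Reverse-resolve the IP, check the domain, then forward-resolve to confirm."""
    try:
        hostname = socket.gethostbyaddr(ip)[0]
        if not is_google_hostname(hostname):
            return False
        # gethostbyname_ex only handles IPv4; IPv6 would need getaddrinfo.
        forward_ips = socket.gethostbyname_ex(hostname)[2]
    except OSError:
        # Covers failed reverse and forward lookups.
        return False
    return ip in forward_ips
```

Only clients that pass `is_verified_googlebot` would then skip the rate limit; everything else claiming to be Googlebot stays limited.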

FYI: This page is now listed on google.

Before closing the issue, let's see how long it takes to index some new pages.

I'm looking forward to the day that https://www.google.com/search?q=openwrt+failsafe+mode doesn't return

but instead, as its first result, gives

We can also forcibly redirect old pages to the new wiki on a case-by-case basis, such as for the failsafe instructions. You can ping @wigyori and kindly ask him to do so.

The only downside is that the old, redirected page isn't directly accessible anymore.

I have added a redirect from old to new failsafe page now.

I don't understand how google indexes webpages...

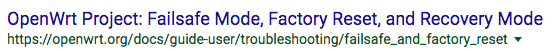

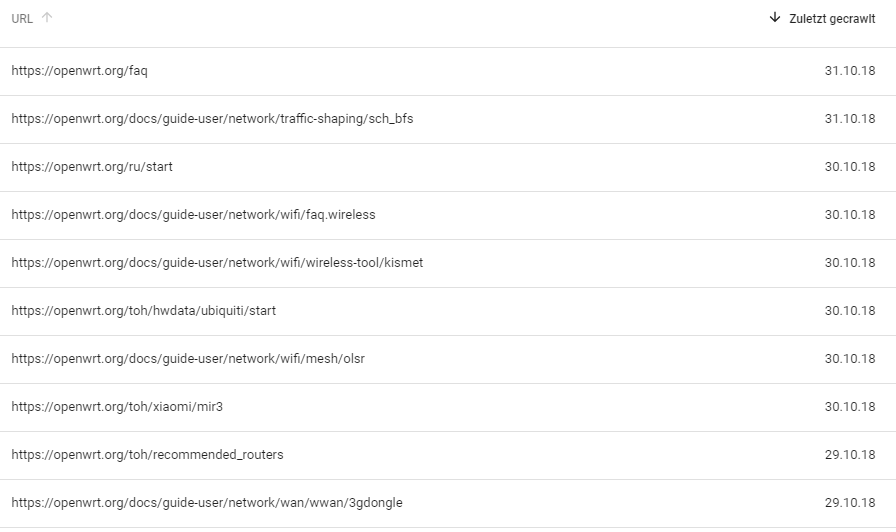

Issue: Google complains that certain URLs are blocked by robots.txt

Examples out of the search console:

This is the robots.txt:

Now where could Google see that e.g. https://openwrt.org/docs/guide-user/network/wifi/mesh/olsr is blocked by robots.txt? 99% of the URLs are not blocked in any way, but Google is of a different opinion...
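One way to cross-check such a claim locally is Python's standard `urllib.robotparser`. This is a sketch only: the rules below are hypothetical and NOT the real openwrt.org robots.txt, and Google's own parser may treat some patterns (wildcards, for instance) differently from Python's.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration, not the real openwrt.org robots.txt.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /_export/
"""


def is_allowed(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """True if the given robots.txt text lets the agent fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


url = "https://openwrt.org/docs/guide-user/network/wifi/mesh/olsr"
print(is_allowed(SAMPLE_ROBOTS_TXT, url))  # True
```

Feeding it the real robots.txt text and the URLs listed in the Search Console would show whether any rule actually matches them.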

I started a check of these URLs in the searchconsole some days ago, and today google comes back: Check failed. D'oh!

I really don't understand what google is doing here...

Google IS indexing openwrt.org, and in the meantime the statistics in the searchconsole improved a bit. Turns out that google is just incredibly slow with updating the search console.