I've been a "loyal" user of cake and piece_of_cake for several years.

However, testing fq_codel with simplest_tbf tonight may have changed that.

Testing was done on Waveform with an Archer C7 v2 running the OpenWrt 21.02.1 release.

The connection is 50/50 Mbps fiber.

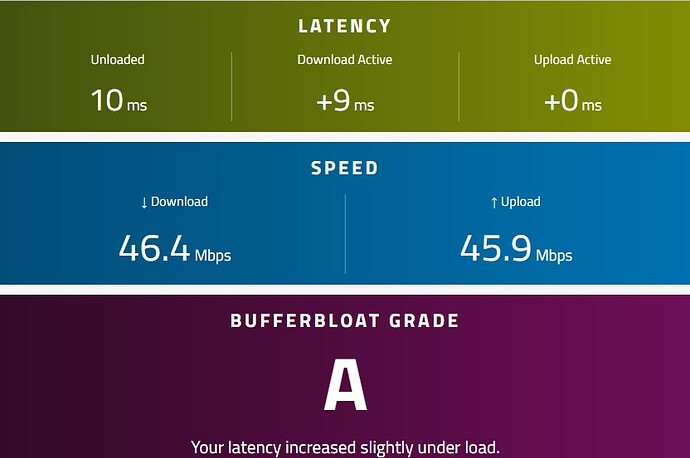

With cake and piece_of_cake, I was getting around 9 to 11 ms of latency on download and 0 ms on upload.

Speed was in the neighborhood of 44-45 Mbps for both download and upload.

So an "A" from Waveform...OK.

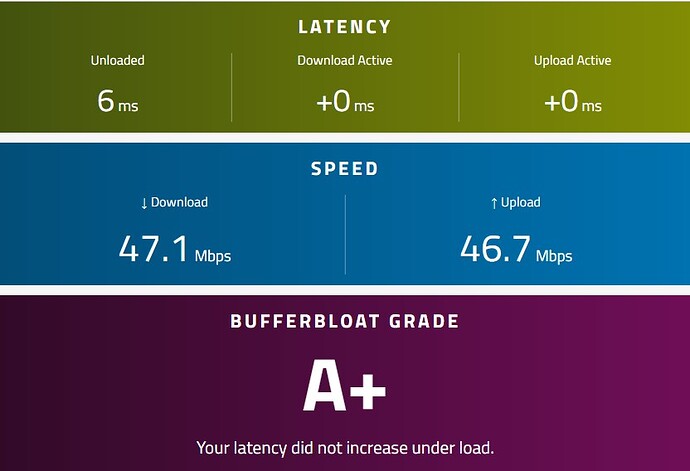

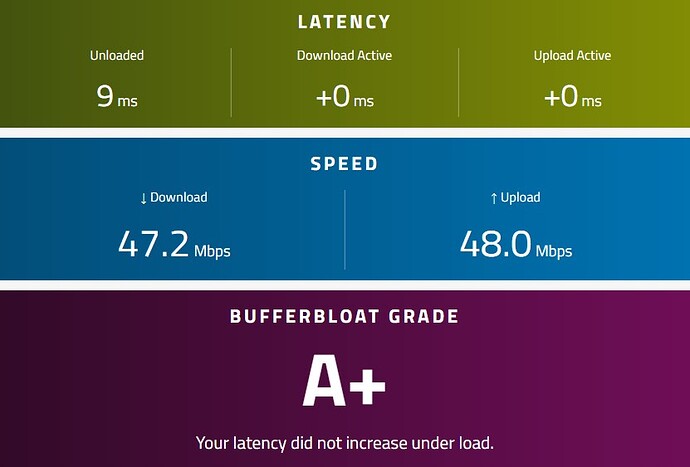

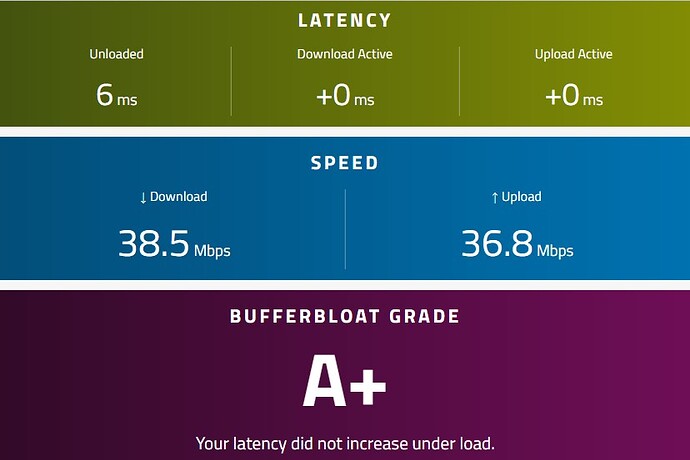

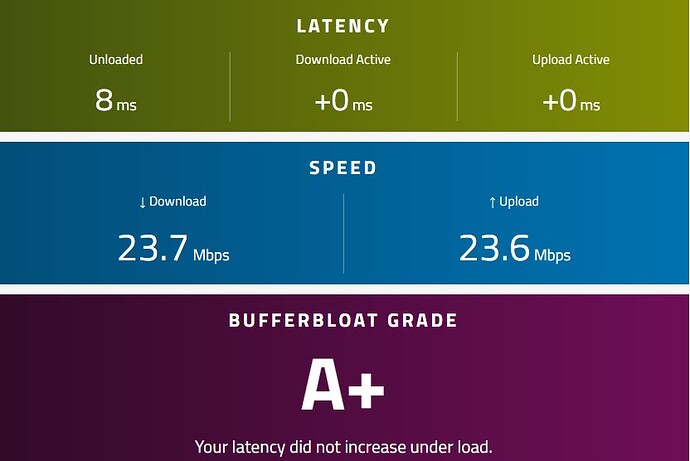

With fq_codel and simplest_tbf, latency on download dropped to 1 ms (and again 0 ms on upload).

Speed went up to 47 Mbps for both download and upload...and an "A+".

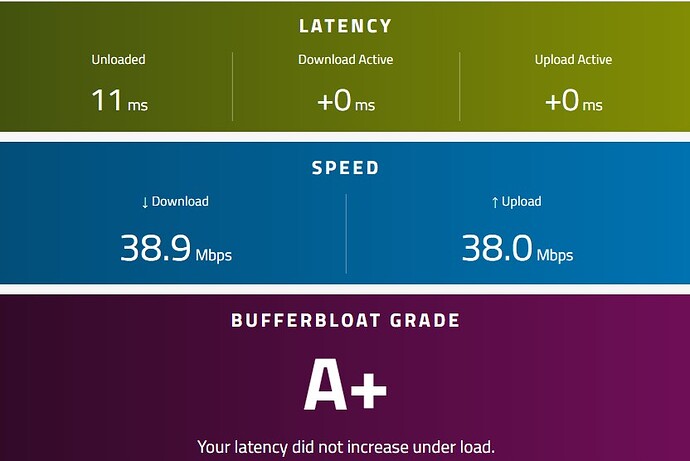

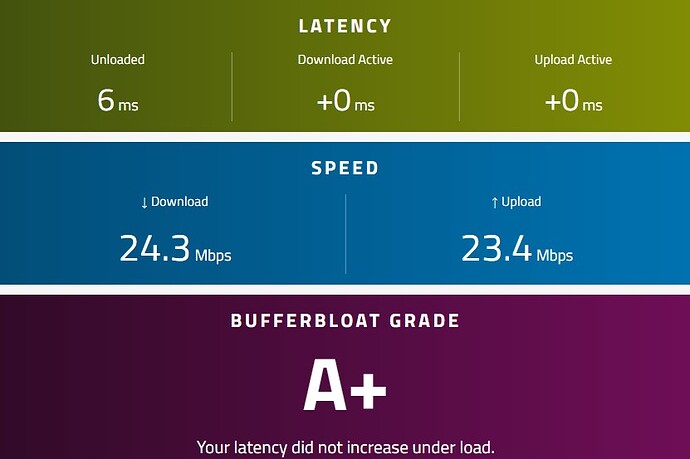

Similar results after several runs.

Almost spit coffee on my keyboard...

Egress and ingress are both shaped at 49 Mbps, and the per-packet overhead is set to 18 bytes.
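For a rough sanity check on the ~47 Mbps figure, here is a back-of-the-envelope estimate of TCP payload throughput through a 49 Mbps shaper. It is a simplified model, not how sqm-scripts actually accounts for packets: it assumes full-size 1500-byte IPv4 packets, that the shaper charges each packet 1500 + 18 bytes, and that IP/TCP headers take 52 bytes (40 bytes plus TCP timestamps) or 40 bytes (no TCP options). The function name is hypothetical.

```python
def estimated_goodput_mbps(shaped_rate_mbps: float, mtu: int = 1500,
                           overhead: int = 18, l3l4_headers: int = 52) -> float:
    """Rough TCP payload rate when every packet is charged mtu + overhead bytes.

    Simplified model (assumption): full-size packets only, no ACK traffic,
    and the shaper counts each packet as mtu + overhead bytes.
    """
    payload = mtu - l3l4_headers   # TCP payload bytes carried per full-size packet
    charged = mtu + overhead       # bytes the shaper counts per packet
    return shaped_rate_mbps * payload / charged


if __name__ == "__main__":
    # 49 Mbps shaper and 18-byte per-packet overhead, as in the settings above
    with_ts = estimated_goodput_mbps(49)                  # IPv4 + TCP with timestamps
    no_ts = estimated_goodput_mbps(49, l3l4_headers=40)   # IPv4 + TCP, no options
    print(f"{with_ts:.2f} Mbps (TCP timestamps), {no_ts:.2f} Mbps (no TCP options)")
```

Under these assumptions the estimate lands at roughly 46.7 to 47.1 Mbps, which is in the same range as the speeds Waveform reported.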

Not sure how this will work out in the long run, but I'll be interested to see what happens.