I have a minor firewall configuration issue that could turn into a security problem, so let's talk about it. I'm running an OpenWrt snapshot and using fw3. Let's start with my network configuration.

It's probably not relevant, but the system is x86_64.

LAN is 10.0.0.0/9 (address space 10.0.0.1-10.127.255.255).

Actually, the LAN itself is only 10.0.0.0/16 (10.0.0.1-10.0.255.255), but since I have a bridged VPN network whose outside hosts use 10.x.0.0/24 as their address space, 10.0.0.0/9 covers all of them. The test device where I see this "issue" has the address 10.95.0.1.
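As a quick sanity check on these ranges, here is a minimal POSIX shell sketch that tests whether an address falls inside a CIDR block. The helper names `ip_to_int` and `in_cidr` are my own and not part of OpenWrt or fw3; the addresses are the ones mentioned in this post.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1               # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed (exit status 0) when address $1 lies inside CIDR block $2.
in_cidr() {
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")      # part before the slash
    bits=${2#*/}                    # prefix length after the slash
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( addr & mask )) -eq $(( net & mask )) ]
}

# Test device, top of the /9, and the pod address from this post.
for ip in 10.95.0.1 10.127.255.255 10.129.0.2; do
    if in_cidr "$ip" 10.0.0.0/9; then
        echo "$ip is inside 10.0.0.0/9"
    else
        echo "$ip is outside 10.0.0.0/9"
    fi
done
```

The point of the check is that the test device (10.95.0.1) falls within 10.0.0.0/9 while the podman subnet (10.129.0.0/24) does not, so a rule matching `dest_ip 10.0.0.0/9` targets the LAN side only.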

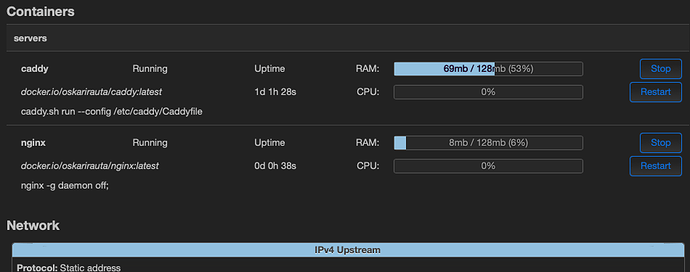

The issue involves containerised services: I have nginx running inside a podman container. CNI is configured to use 10.129.0.0/24 as its subnet, so my network configuration has:

    config interface 'podman'
            option proto 'none'
            option device 'cni-podman0'

and in my firewall configuration I have the following (unrelated parts cut out):

    config defaults
            option flow_offloading '1'
            option syn_flood '1'
            option input 'ACCEPT'
            option output 'ACCEPT'
            option forward 'REJECT'

    config zone
            option name 'lan'
            list network 'lan'
            option input 'ACCEPT'
            option output 'ACCEPT'
            option forward 'ACCEPT'

...

    config zone
            option name 'podman'
            list device 'cni-podman0'
            list subnet '10.129.0.0/24'
            option input 'REJECT'
            option output 'ACCEPT'
            option forward 'ACCEPT'
            option masq '0'
            option mtu_fix '1'

...

    config forwarding
            option src 'lan'
            option dest 'podman'

    config forwarding
            option src 'podman'
            option dest 'wan'

...

    config rule
            option name 'Reject-podman-to-lan'
            option src 'podman'
            option dest '*'
            option dest_ip '10.0.0.0/9'
            option proto 'tcp+udp'
            option target 'REJECT'

    config redirect
            option name 'Allow-HTTP'
            option src 'wan'
            option dest 'podman'
            option src_dport '80'
            option dest_ip '10.129.0.2'
            option dest_port '80'
            option proto 'tcp'
            option target 'DNAT'

    config redirect
            option name 'Allow-HTTPS'
            option src 'wan'
            option dest 'podman'
            option src_dport '443'
            option dest_ip '10.129.0.2'
            option dest_port '443'
            option proto 'tcp'
            option target 'DNAT'

ifconfig:

    cni-podman0 Link encap:Ethernet  HWaddr FE:7D:2E:77:DF:39
                inet addr:10.129.0.1  Bcast:10.129.0.255  Mask:255.255.255.0

...

    br-lan      Link encap:Ethernet  HWaddr 36:16:CD:39:4D:23
                inet addr:10.95.0.1  Bcast:10.95.0.255  Mask:255.255.255.0

...

firewall.user does not have any rules.

And then I have a pod that is set to use IP address 10.129.0.2.

With recent podman on OpenWrt, it is possible to keep podman's storage on a physical device instead of in RAM, so pods and containers can survive a reboot. When I don't use that setup, I have a kube file that builds the pod and its nginx container from rc.local.

As you can see from my firewall configuration, I want to block access from the container to ANY LAN IP address, and everything works as it should, with one exception: after building my service from the script in rc.local, I must also run /etc/init.d/firewall restart (maybe reload would be sufficient) to block access to the LAN from the containers. I have not tested this yet, but I am fairly sure I need to reload the firewall EVERY time after creating containers to keep this behaviour.
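For reference, the workaround described above looks roughly like the following rc.local fragment. This is only a sketch: the kube file path is a placeholder I made up for illustration, and `podman play kube` is what I assume the script uses to build the pod.

```shell
#!/bin/sh
# Sketch of an rc.local fragment: create the pod first, then refresh the firewall.

# Hypothetical location of the kube file; the real path is not given in this post.
KUBE_FILE="${KUBE_FILE:-/etc/podman/nginx-pod.yaml}"
# Firewall init script and action; "restart" is the action known to work here.
FIREWALL_INIT="${FIREWALL_INIT:-/etc/init.d/firewall}"
FIREWALL_ACTION="${FIREWALL_ACTION:-restart}"

start_pod_then_refresh_firewall() {
    # Stop here if the pod could not be created, leaving the firewall untouched.
    podman play kube "$KUBE_FILE" || return 1
    # Rebuild the rules now that the pod and its interface exist.
    "$FIREWALL_INIT" "$FIREWALL_ACTION"
}

# Only act where podman is installed, so the fragment is harmless elsewhere.
if command -v podman >/dev/null 2>&1; then
    start_pod_then_refresh_firewall
fi
```

Setting `FIREWALL_ACTION=reload` would be the way to try whether a reload is enough, which I have not verified.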

If I keep my containers on persistent storage, such as a disk, I do not need to restart the firewall.

That is the security concern I mentioned: I'd like to fix this so that nobody has to remember to restart the firewall to retain the podman -> lan blocking.

Here is what I think is happening: the firewall starts BEFORE podman. So even though cni-podman0 is declared in the network configuration and the zone is listed in the firewall configuration, the rules are ignored. What makes this strange is that the HTTP(S) redirection works anyway.
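One way to check the start-order guess is to compare the start priorities encoded in the `/etc/rc.d` link names (`S<NN><name>`). The sketch below uses a helper name of my own, `start_prio`. The `podman` init script name is an assumption, and as far as I know rc.local is run by the `done` init script, so that entry may be the relevant one when the pod is built from rc.local.

```shell
#!/bin/sh
# Directory holding the ordered start links (S<NN><name>) on OpenWrt.
RC_DIR="${RC_DIR:-/etc/rc.d}"

# Print the two-digit start priority of the init script named $1, if enabled.
start_prio() {
    for link in "$RC_DIR"/S[0-9][0-9]"$1"; do
        [ -e "$link" ] || continue      # an unmatched glob stays literal; skip it
        base=${link##*/}                # e.g. S19firewall
        prio=${base#S}                  # e.g. 19firewall
        echo "${prio%"$1"}"             # e.g. 19
    done
}

# "podman" is an assumed init script name; adjust to whatever starts the pods.
for svc in firewall podman "done"; do
    echo "$svc: $(start_prio "$svc")"
done
```

A lower number means an earlier start, so a firewall priority below that of whatever creates the containers would be consistent with the guess above. It would not by itself explain why the redirects still work.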

I have never been a firewall (iptables) expert, so these are only guesses about the cause. I am looking for experts who can tell me where my mistake is and how to fix it.

Hopefully we can stay on topic...