I made two changes to util-linux in that patch and the commit is for both (taskset and renice)

diff --git a/package/utils/util-linux/Makefile b/package/utils/util-linux/Makefile

index f4b870cc47..deccea7707 100644

--- a/package/utils/util-linux/Makefile

+++ b/package/utils/util-linux/Makefile

@@ -398,6 +398,15 @@ define Package/partx-utils/description

contains partx, addpart, delpart

endef

+define Package/renice

+$(call Package/util-linux/Default)

+ TITLE:=Alter the priority of running processes

+endef

+

+define Package/renice/description

+ Alter the priority of running processes

+endef

+

define Package/script-utils

$(call Package/util-linux/Default)

TITLE:=make and replay typescript of terminal session

@@ -443,6 +452,15 @@ define Package/swap-utils/description

contains: mkswap, swaplabel

endef

+define Package/taskset

+$(call Package/util-linux/Default)

+ TITLE:=Set or retrieve a task's CPU affinity

+endef

+

+define Package/taskset/description

+ Set or retrieve a task's CPU affinity

+endef

+

define Package/unshare

$(call Package/util-linux/Default)

TITLE:=unshare userspace tool

@@ -739,6 +757,11 @@ define Package/partx-utils/install

$(INSTALL_BIN) $(PKG_INSTALL_DIR)/usr/sbin/delpart $(1)/usr/sbin/

endef

+define Package/renice/install

+ $(INSTALL_DIR) $(1)/usr/bin

+ $(INSTALL_BIN) $(PKG_INSTALL_DIR)/usr/bin/renice $(1)/usr/bin/

+endef

+

define Package/script-utils/install

$(INSTALL_DIR) $(1)/usr/bin

$(INSTALL_BIN) $(PKG_INSTALL_DIR)/usr/bin/script $(1)/usr/bin/

@@ -761,6 +784,11 @@ define Package/swap-utils/install

$(INSTALL_BIN) $(PKG_INSTALL_DIR)/usr/sbin/swaplabel $(1)/usr/sbin/

endef

+define Package/taskset/install

+ $(INSTALL_DIR) $(1)/usr/bin

+ $(INSTALL_BIN) $(PKG_INSTALL_DIR)/usr/bin/taskset $(1)/usr/bin/

+endef

+

define Package/unshare/install

$(INSTALL_DIR) $(1)/usr/bin

$(INSTALL_BIN) $(PKG_INSTALL_DIR)/usr/bin/unshare $(1)/usr/bin/

@@ -823,10 +851,12 @@ $(eval $(call BuildPackage,nsenter))

$(eval $(call BuildPackage,prlimit))

$(eval $(call BuildPackage,rename))

$(eval $(call BuildPackage,partx-utils))

+$(eval $(call BuildPackage,renice))

$(eval $(call BuildPackage,script-utils))

$(eval $(call BuildPackage,setterm))

$(eval $(call BuildPackage,sfdisk))

$(eval $(call BuildPackage,swap-utils))

+$(eval $(call BuildPackage,taskset))

$(eval $(call BuildPackage,unshare))

$(eval $(call BuildPackage,uuidd))

$(eval $(call BuildPackage,uuidgen))

Successfully applied. Can you leave some documentation on how I'm supposed to configure such a patch?

google man taskset

It uses a bitmask with one bit per core, counting from bit 0. So if you want to run a process and pin it to a specific core, say the 7th of 8 (CPU 6 when counting from zero), you'd set bit 6, i.e. the binary value 0100 0000, which is 0x40 in hexadecimal.

Then you'd call

/usr/bin/taskset 0x40 /usr/bin/snort <snort-arguments>
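The mask arithmetic can be sketched in plain shell. This is only an illustration: `cpus_to_mask` is a made-up helper name, not part of taskset, and it assumes zero-based CPU numbers, which is how the bits in the mask are numbered.

```shell
# cpus_to_mask CPU...
# Print the hex affinity mask for one or more zero-based CPU indices.
# (Hypothetical helper, not part of util-linux.)
cpus_to_mask() {
    mask=0
    for cpu in "$@"; do
        # Set the bit that corresponds to this CPU.
        mask=$((mask | (1 << cpu)))
    done
    printf '0x%x\n' "$mask"
}

cpus_to_mask 6      # prints 0x40
cpus_to_mask 0 7    # prints 0x81
```

The result can be passed straight to taskset, for example `/usr/bin/taskset "$(cpus_to_mask 6)" /usr/bin/snort <snort-arguments>`.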

So I have edited my /etc/init.d/snort script so its procd command looks as follows

procd_open_instance

procd_set_param command /usr/bin/taskset ${affinity_mask}

procd_append_param command $PROG "-q" "-c" "$config_file" "--daq-dir" "/usr/lib/daq/" \

"-i" "$device" "-s" "-N" \

"$arguments" \

"--pid-path=${PID_PATH}" \

"--nolock-pidfile"

procd_set_param respawn

procd_close_instance

dl12345:

/etc/init.d/snort

So I have to do this for every task that runs on openwrt?

No, you'd only really want to do it for a couple of processes that are cpu intensive.

For example, on my 8-core, I have optimized irq affinity so that cores 1 - 6 run IRQs for network rx/tx interrupts while cores 0 and 7 don't.

Core 0 runs system timer tasks and other things.

On a gigabit flow, snort uses quite a bit of cpu, so I prefer to put it on a core that isn't processing interrupts. I pin my softflowd, which also uses a fair chunk of cpu on a gigabit flow, to core 0.

It really depends on what you're running and the right optimization will be different depending on the workload you're running.

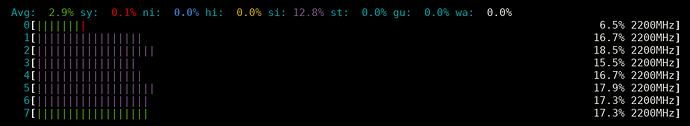

Here's a graphical indication of what happens when optimizing with both taskset and interrupt affinity. In this screenshot, I'm running an iperf3 at 940 Mbps through my router. You can see snort consuming cpu on core 7 and softflowd consuming cpu on core 0 (the bars are green because they're user processes).

Then cores 1-6 (which I've tuned by manually assigning interrupt affinities) are nicely balanced, processing the network interrupts for the flow (which is why the bars are purple). A lot of the cpu usage on the purple bars is because I'm running SQM layer cake on ingress, which is very cpu intensive.

Does that help?

hnyman

March 23, 2021, 6:28pm

26

You have to at least edit the irqbalance config file to set irqbalance enabled.

So I can use htop and then change the init for it to be on the other core?

hnyman

March 23, 2021, 6:34pm

29

Where all normal uci config files are...

/etc/config/irqbalance

htop doesn't really enter into it, except that I used it to show the cpu usage and then took a screenshot as an illustration.

To figure out what processes I need to change, is it not a valid strategy?

Sure, yeah - it's a very useful tool for that

dl12345:

So if you want to run a process and pin it to a specific core, say core 7 of 8, you'd specify core 7 as the binary value 0100 0000, which would be 0x40 in hexadecimal.

Then you'd call

/usr/bin/taskset 0x40 /usr/bin/snort <snort-arguments>

Could you also share how you distributed the IRQs for the ixgbe NIC driver, to spread them across cores 1-6?

This is the setting that yields the result shown in the htop screenshot above

This is very specific to the NIC. If you don't have the same NICs as me, this won't help you at all

<interface> <cpu core> <hex mask> <interrupt>

eth0 - my wan interface

eth0 1 0x02 -> /proc/irq/47/smp_affinity

eth0 2 0x04 -> /proc/irq/48/smp_affinity

eth0 3 0x08 -> /proc/irq/49/smp_affinity

eth0 4 0x10 -> /proc/irq/50/smp_affinity

eth0 5 0x20 -> /proc/irq/51/smp_affinity

eth0 6 0x40 -> /proc/irq/52/smp_affinity

eth0 1 0x02 -> /proc/irq/53/smp_affinity

eth0 2 0x04 -> /proc/irq/54/smp_affinity

eth3 - my lan interface

eth3 0 0x01 -> /proc/irq/74/smp_affinity

eth3 1 0x02 -> /proc/irq/75/smp_affinity

eth3 2 0x04 -> /proc/irq/76/smp_affinity

eth3 3 0x08 -> /proc/irq/77/smp_affinity

eth3 4 0x10 -> /proc/irq/78/smp_affinity

eth3 5 0x20 -> /proc/irq/79/smp_affinity

eth3 6 0x40 -> /proc/irq/80/smp_affinity

eth3 7 0x80 -> /proc/irq/81/smp_affinity
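The listing above could be applied with a small loop. This is a rough sketch, not the exact script used for the screenshots: `set_irq_affinity` and `IRQ_DIR` are hypothetical names, it assumes the IRQ numbers are consecutive as shown, and it writes the mask as bare hex digits, since a later reply in this thread reports `Invalid argument` when writing a value with the `0x` prefix.

```shell
# set_irq_affinity FIRST_IRQ CPU...
# Pins FIRST_IRQ to the first CPU, FIRST_IRQ+1 to the second, and so on.
# IRQ_DIR is a hypothetical override so the function can be tried on a
# scratch directory instead of the real /proc/irq.
set_irq_affinity() {
    irq="$1"
    shift
    for cpu in "$@"; do
        # One bit per zero-based CPU index, written as bare hex digits.
        printf '%x\n' "$((1 << cpu))" \
            > "${IRQ_DIR:-/proc/irq}/$irq/smp_affinity" || return 1
        irq=$((irq + 1))
    done
}

# Example for the table above (needs root on a real system):
#   set_irq_affinity 47 1 2 3 4 5 6 1 2    # eth0, IRQs 47-54
#   set_irq_affinity 74 0 1 2 3 4 5 6 7    # eth3, IRQs 74-81
```

The settings do not survive a reboot, so something like this would have to run at boot time, and irqbalance would need to be disabled so it does not overwrite the masks.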

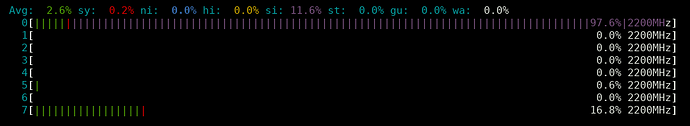

And to contrast this, the following settings yield the following core load with a 1Gbps download stream

<interface> <cpu core> <hex mask> <interrupt>

eth0 - my wan interface

eth0 0 0x01 -> /proc/irq/47/smp_affinity

eth0 1 0x02 -> /proc/irq/48/smp_affinity

eth0 2 0x04 -> /proc/irq/49/smp_affinity

eth0 3 0x08 -> /proc/irq/50/smp_affinity

eth0 4 0x10 -> /proc/irq/51/smp_affinity

eth0 5 0x20 -> /proc/irq/52/smp_affinity

eth0 6 0x40 -> /proc/irq/53/smp_affinity

eth0 7 0x80 -> /proc/irq/54/smp_affinity

eth3 - my lan interface

eth3 0 0x01 -> /proc/irq/74/smp_affinity

eth3 1 0x02 -> /proc/irq/75/smp_affinity

eth3 2 0x04 -> /proc/irq/76/smp_affinity

eth3 3 0x08 -> /proc/irq/77/smp_affinity

eth3 4 0x10 -> /proc/irq/78/smp_affinity

eth3 5 0x20 -> /proc/irq/79/smp_affinity

eth3 6 0x40 -> /proc/irq/80/smp_affinity

eth3 7 0x80 -> /proc/irq/81/smp_affinity

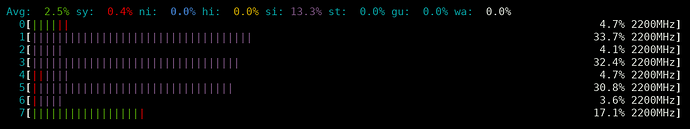

And these ones yield this result

<interface> <cpu core> <hex mask> <interrupt>

eth0 - my wan interface

eth0 1 0x02 -> /proc/irq/47/smp_affinity

eth0 2 0x04 -> /proc/irq/48/smp_affinity

eth0 3 0x08 -> /proc/irq/49/smp_affinity

eth0 4 0x10 -> /proc/irq/50/smp_affinity

eth0 5 0x20 -> /proc/irq/51/smp_affinity

eth0 6 0x40 -> /proc/irq/52/smp_affinity

eth0 1 0x02 -> /proc/irq/53/smp_affinity

eth0 2 0x04 -> /proc/irq/54/smp_affinity

eth3 - my lan interface

eth3 1 0x02 -> /proc/irq/74/smp_affinity

eth3 2 0x04 -> /proc/irq/75/smp_affinity

eth3 3 0x08 -> /proc/irq/76/smp_affinity

eth3 4 0x10 -> /proc/irq/77/smp_affinity

eth3 5 0x20 -> /proc/irq/78/smp_affinity

eth3 6 0x40 -> /proc/irq/79/smp_affinity

eth3 1 0x02 -> /proc/irq/80/smp_affinity

eth3 2 0x04 -> /proc/irq/81/smp_affinity

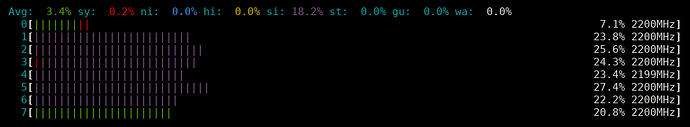

And this is 8 download and upload streams, all with DSCP marks corresponding to one of SQM's diffserv4 buckets, so it exercises the cpu more heavily. It uses the first optimized set of affinity masks I posted above.

So, IMHO, manual affinity configuration is always superior to irqbalance, as even a slight difference can result in a huge change to the way your cores are utilized in a multi-core system.

And it's all very specific to the NIC. It just requires a bit of experimentation to find the best set of affinity masks for your hardware.
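A first step in that experimentation is finding which IRQ numbers belong to a given interface or driver. Here is a minimal sketch that pulls them out of `/proc/interrupts`. `irqs_for` is a hypothetical helper name, and the optional second argument exists only so the function can be tried against a saved copy of the file.

```shell
# irqs_for NAME [FILE]
# Print the IRQ numbers of every line in /proc/interrupts (or FILE)
# whose text contains NAME, e.g. an interface or driver name.
irqs_for() {
    awk -v dev="$1" '
        # Only lines that start with a numeric IRQ such as "72:".
        $1 ~ /^[0-9]+:$/ && index($0, dev) {
            sub(/:$/, "", $1)
            print $1
        }
    ' "${2:-/proc/interrupts}"
}

# Example: irqs_for eth0
```

Each number it prints corresponds to a `/proc/irq/<number>/smp_affinity` file whose mask can then be adjusted.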

I can't use that. It seems like the ICU for core 2 on xrx200 is non-existent or not used.

CPU0 CPU1

7: 373508 365968 MIPS 7 timer

8: 5757 4202 MIPS 0 IPI call

9: 30815 231492 MIPS 1 IPI resched

22: 149245 0 icu 22 spi_rx

23: 52631 0 icu 23 spi_tx

24: 0 0 icu 24 spi_err

62: 0 0 icu 62 1e101000.usb, dwc2_hsotg:usb1

63: 61322 0 icu 63 mei_cpe

72: 1243041 0 icu 72 xrx200_net_rx

73: 2332056 0 icu 73 xrx200_net_tx

91: 0 0 icu 91 1e106000.usb, dwc2_hsotg:usb2

96: 3648074 0 icu 96 ptm_mailbox_isr

112: 300 0 icu 112 asc_tx

113: 0 0 icu 113 asc_rx

114: 0 0 icu 114 asc_err

126: 0 0 icu 126 gptu

127: 0 0 icu 127 gptu

128: 0 0 icu 128 gptu

129: 0 0 icu 129 gptu

130: 0 0 icu 130 gptu

131: 0 0 icu 131 gptu

144: 0 0 icu 144 ath9k

161: 0 0 icu 161 ifx_pcie_rc0

All traffic goes through core 0 for some reason. I will investigate; reply if you have ideas.

It does not look like it's unused. It looks like its affinity is set to core 0. Try

echo 0x02 > /proc/irq/73/smp_affinity

What does it do? This should set the affinity for net_tx to cpu 1
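To check which CPUs a mask actually selects, for example after reading `/proc/irq/73/smp_affinity` back, the hex value can be decoded into CPU numbers. This is a sketch with a hypothetical helper name (`mask_to_cpus`). It assumes the mask is a single hex word with no comma-separated groups, which should hold on small systems like a dual core.

```shell
# mask_to_cpus MASK
# Print the zero-based CPU numbers whose bits are set in MASK.
# MASK is hex, with or without a leading 0x.
mask_to_cpus() {
    hex="${1#0x}"
    val=$((0x$hex))
    cpu=0
    out=""
    while [ "$val" -gt 0 ]; do
        # Lowest bit set means this CPU is part of the mask.
        if [ $((val & 1)) -eq 1 ]; then
            out="${out:+$out }$cpu"
        fi
        val=$((val >> 1))
        cpu=$((cpu + 1))
    done
    echo "$out"
}

mask_to_cpus 0x02    # prints 1
mask_to_cpus 3       # prints 0 1
```

On a live system it could be used as `mask_to_cpus "$(cat /proc/irq/73/smp_affinity)"`.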

It crashes Ethernet communications and does not work. I needed to change 0x02 to 1 because it gave me "ash: write error: Invalid argument". The ICU does not get touched even under irqbalance.

Hello, how do I split the load 50/50 across both cores on a Belkin RT3200?

thanks