Definitely shouldn't happen but very possible. Still in experimental phase. Details appreciated if you can recreate.

Downloaded the latest script after posting, ran fine for a good while until it didn't:

# tail -f /tmp/sqm-autorate.log

0.00;sh: invalid number ''

0.00;

(standard_in) 2: syntax error

(standard_in) 1: syntax error

sh: 1: unknown operand

(standard_in) 1: syntax error

(standard_in) 1: syntax error

sh: 1: unknown operand

(standard_in) 1: syntax error

sh: 1: unknown operand

20211112T030454.076664808; 0.00; 0.01; 59.13; 20000.00; 5000.00;sh: invalid number ''

0.00;sh: invalid number ''

0.00;

(standard_in) 2: syntax error

(standard_in) 1: syntax error

sh: 1: unknown operand

(standard_in) 1: syntax error

(standard_in) 1: syntax error

sh: 1: unknown operand

(standard_in) 1: syntax error

sh: 1: unknown operand

20211112T030455.077655493; 0.00; 0.01; 54.94; 20000.00; 5000.00;sh: invalid number ''

0.00;sh: invalid number ''

0.00;

(standard_in) 2: syntax error

(standard_in) 1: syntax error

sh: 1: unknown operand

(standard_in) 1: syntax error

(standard_in) 1: syntax error

sh: 1: unknown operand

(standard_in) 1: syntax error

sh: 1: unknown operand

20211112T030456.112563950; 0.01; 0.02; 48.02; 20000.00; 5000.00;sh: invalid number ''

0.00;sh: invalid number ''

0.00;

(standard_in) 2: syntax error

(standard_in) 1: syntax error

sh: 1: unknown operand

Haven't looked at all into what's going wrong with it yet, I'll play with it over the weekend.

Mmmh, could that have been during an epoch without internet access? Because when no reflector can be reached the script breaks. I am working on a fix, but at the earliest tonight....

I had thought running with:

exec &> /tmp/sqm-autorate.log

would ensure it keeps going despite the errors?

As you indicated above it should just decay to minimum if it cannot reach.

But I suppose how to handle this properly will hinge upon the ultimate form of ping settled upon. And I am mindful of the hotly anticipated timing based directional ping.

It should. Here is my current half finished state https://github.com/moeller0/sqm-autorate/tree/more_changes of trying to improve the delay measurement robustness. But you are right the rate update code should special case empty RTT and simply decay towards the resting_rate/minimum... but the logging should learn to deal with empty RTT/baseline_RTT values as well. Please note that this is my play around branch with lots of gratuitous changes from playing around with things.
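A minimal sketch of that special case, in Python for brevity rather than shell. The names `next_rate`, `format_field` and `decay_factor` are made up for illustration and are not taken from the script. The idea is only that a missing RTT is handled explicitly: the rate relaxes toward the resting rate, and the logger prints a placeholder instead of passing an empty string to arithmetic.

```python
from typing import Optional


def next_rate(current_kbps: float, resting_kbps: float,
              delta_rtt_ms: Optional[float], decay_factor: float = 0.9) -> float:
    """Return the next shaper rate; names and defaults are illustrative only."""
    if delta_rtt_ms is None:
        # No reflector answered: keep only a fraction of the distance
        # between the current rate and the resting rate.
        return resting_kbps + (current_kbps - resting_kbps) * decay_factor
    # The normal load/delay based update would go here; omitted in this sketch.
    return current_kbps


def format_field(value: Optional[float]) -> str:
    """Format one log field, tolerating a missing measurement."""
    return "nan" if value is None else f"{value:.2f}"
```

Called once per cycle with `delta_rtt_ms=None`, the rate approaches `resting_kbps` geometrically and never produces an empty field in the log line.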

Nah, I am sure we can make this robust while still allowing different delay measurement methods. Why different ones? Well, ping with its "flaw" of only measuring RTT is in all likelihood going to be universally available; with ICMP timestamps I am not so sure, so this needs to be configurable (maybe even including an automatic fall-back).

I see this as something to tackle after the existing script has been robustified though. You are already getting a lot of publicity in the latency-focussed fora and mailing lists, so people will use/test this as is, and then let's make it worth their while (which in turn might get us better testing results, concerning the algorithm/concept instead of the implementation)?

Is the idea that prune reflectors (or perhaps rather 'validate reflectors'?) will work with an array with 0 or 1 for each reflector and if reflector bad it will temporarily set that value to 0 until timeout and then retry it again? Just to avoid killing off all reflectors once all have temporarily gone down. And then 'for each good reflector {ping ..}'?
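To make the question concrete, here is a rough sketch of that bookkeeping, assuming a per-reflector "retry at" time instead of a plain 0/1 flag. `ReflectorPool`, `retry_after_s` and the injectable `clock` are hypothetical names, not something from the script.

```python
import time
from typing import Callable, Dict, List


class ReflectorPool:
    """Track which reflectors are currently usable (hypothetical helper)."""

    def __init__(self, reflectors: List[str], retry_after_s: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self.retry_after_s = retry_after_s
        self.clock = clock
        # Time at which each reflector becomes eligible again; 0.0 means "good now".
        self.retry_at: Dict[str, float] = {r: 0.0 for r in reflectors}

    def mark_bad(self, reflector: str) -> None:
        """Sideline a reflector until the retry timeout has passed."""
        self.retry_at[reflector] = self.clock() + self.retry_after_s

    def good(self) -> List[str]:
        """Reflectors to ping this cycle; sidelined ones come back on their own."""
        now = self.clock()
        return [r for r, t in self.retry_at.items() if t <= now]
```

Because nothing is ever deleted from the pool, a short outage that takes down every reflector only empties `good()` until the timeout expires.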

The first idea was/is to test the static set of reflectors whether they work and remove (and report) those that do not answer at all, sort of to weed out impossible reflectors (each of which will cause get_RTT to run into one of the -w or -W timeouts of 1 second). One could periodically try to simply run the original reflector list through prune/validate to see whether some have come back, especially if all went missing... But that pruning/validation is potentially time consuming so I would not do this unless absolutely required?

Oh I see, an initial check before first run to verify that e.g. the user has not typed 8.8.X.5? Or 8.8.1.8 or something?

And then just have ping code such that each cycle if reflector doesn't respond it won't kill everything?

That saves periodically checking whether reflectors are good and working through an array of good reflectors.

Yes, or 1.2.3.4 which is a well-formed IP address which will not respond, or private IP addresses....
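A sketch of such a start-up check, written in Python for illustration. `validate_reflectors` and the `probe` callable are assumptions; `probe` stands in for whatever single-ping test the script would run, so nothing here touches the network.

```python
import ipaddress
from typing import Callable, Iterable, List, Tuple


def validate_reflectors(candidates: Iterable[str],
                        probe: Callable[[str], bool]) -> Tuple[List[str], List[str]]:
    """Split candidates into (usable, rejected).

    `probe` is a hypothetical callable returning True when a reflector
    answers, e.g. a wrapper around one ping with a 1 s timeout.
    """
    usable: List[str] = []
    rejected: List[str] = []
    for text in candidates:
        try:
            addr = ipaddress.ip_address(text)
        except ValueError:
            rejected.append(text)   # malformed, e.g. "8.8.X.5"
            continue
        if addr.is_private or not probe(text):
            rejected.append(text)   # private range, or well-formed but silent
        else:
            usable.append(text)
    return usable, rejected
```

The rejected list can be reported to the user once at start-up, so the per-cycle ping code never waits on addresses that cannot answer.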

But the same function can also be run (even in a loop) if we have no valid delay measurements. At that point cycle time becomes less of an issue: sure, we want the rate to decay to the minimum/resting_rate eventually, but whether a cycle takes 1 or 10 seconds will not matter too much....

Sidenote, such a function in theory could also be used to select the X best reflectors out of a much larger set... but let me first get the initial, simple, in-scope version to actually do something/do the desired action.
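For the "X best" idea, one possible reading is "lowest median RTT", which is purely an assumption here; the helper name `best_reflectors` is hypothetical as well.

```python
from statistics import median
from typing import Dict, List


def best_reflectors(rtt_samples_ms: Dict[str, List[float]], count: int) -> List[str]:
    """Return the `count` reflectors with the lowest median RTT.

    Reflectors without any sample are ignored.
    """
    measured = {r: median(s) for r, s in rtt_samples_ms.items() if s}
    return sorted(measured, key=measured.get)[:count]
```

Other criteria (lowest jitter, fewest timeouts) would slot in by changing the value stored in `measured`.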

Yes, I have recently become aware of a whole universe of "lint for shell scripts" tools:

- Use "$FOO" instead of $FOO (add double quotes to prevent globbing and word splitting)

- Use $(...) notation instead of legacy backticks `...`.

I had fun with www.shellcheck.net on one of my scripts.

I'm not certain. The connection does rarely fail for a few seconds (<30), so that could have something to do with it.

Testing the current version some more, it seems to keep running like it should. Starting a long-running speedtest does trigger it to change the bandwidth on the qdisc. So looks like it's just the output that breaks.

Please can you feed us with some data / graphs to show how it scales up and down on your connection Mr @Lochnair?

The funny thing is, I typically use curly braces for all variables, just here where it would matter I cheaped out

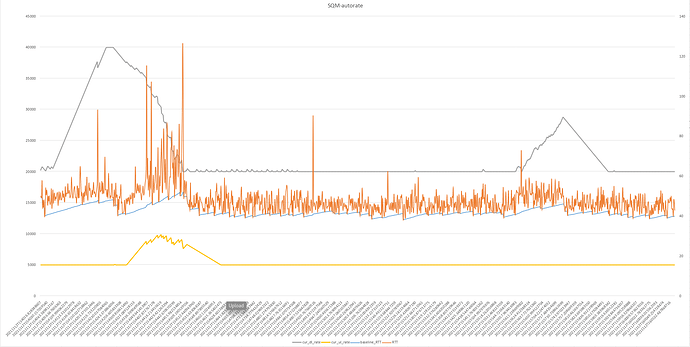

Tried to make something that looks alright, not used to making charts in OnlyOffice (or at all)

This is mostly without much traffic happening, but you can see the Fast.com test running pretty early there. I set it to run for ~2 minutes so it has good time to get the rates up.

Mmmh, if the blue line is the base-line, we are "learning" too fast when deltaRTT is large....
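One way to slow that down, sketched under the assumption that the baseline is an EWMA of the RTT samples: use a much smaller weight when a sample is above the baseline than when it is below. `update_baseline`, `alpha_up` and `alpha_down` are illustrative names and values, not the script's.

```python
def update_baseline(baseline_ms: float, rtt_ms: float,
                    alpha_up: float = 0.001, alpha_down: float = 0.9) -> float:
    """EWMA baseline that rises slowly and falls quickly (illustrative values)."""
    # Samples above the baseline are probably queueing delay, so trust them little;
    # samples below it are closer to the true path delay, so follow them fast.
    alpha = alpha_up if rtt_ms > baseline_ms else alpha_down
    return (1.0 - alpha) * baseline_ms + alpha * rtt_ms
```

With these values a 100 ms spike moves a 20 ms baseline by only 0.1 ms per sample, while a lower RTT pulls it down almost immediately.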

Looks OK but would be good to have more data with steady state when max capacity has been reached to see how it oscillates around max capacity. So if you set a couple of .ISO downloads (Linux MATE or something) going then you can see what happens there.

Thankfully I imagine for many connections maintaining the sustained heavy load well is not as important as scaling down during light / no-load times. Because for many connections most activity is surely light, latency sensitive traffic, and heavy, sustained downloads the exception.

For baseline RTT I am testing the 95th percentile (p95) with a minimum step of 1/3600. This sets the baseline higher than the mean/average, so it accommodates most 'normal' pings and allows real baseline fluctuations at a rate of 1 ms per hour.

So far, I think it works fine.

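class RunningPercentile:
    def __init__(self, x, step=1):
        # Start with a large step so the estimate can move quickly at first.
        self.step = max(x / 2.0, step)
        self.x = x
        # Weights for the p95 estimate: small moves down, large moves up.
        self.step_up = 1.0 - 0.95
        self.step_down = 0.95
        #print(x,self.step)

    def push(self, observation):
        if self.x > observation:
            # Observation below the estimate: lower the estimate a little.
            self.x -= self.step * self.step_up
        elif self.x < observation:
            # Observation above the estimate: raise the estimate a lot.
            self.x += self.step * self.step_down
        # Halve the step once the estimate is close to the observations.
        if abs(observation - self.x) < self.step:
            self.step /= 2.0
        # Never let the step shrink below 1/3600.
        if self.step <= 1.0 / 3600.0:
            self.step = 1.0 / 3600.0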

Mmmh, I am uncertain whether for a biased distribution like RTT (hard lower limit, soft upper limit) we can easily extract any percentile from our EWMA value...?

I had a look at your links, and I am still pondering how this works... The linked paper makes sense to me in that it basically tries to approximate the CDF (from which percentiles can be estimated) by taking a small number of sample points and interpolating intelligently between them to get decent estimates for those locations not stored. But IMHO even that requires some assumptions on the underlying distribution?

Other than that, I share your sentiment that we should be able to live with a very slow learning rate for higher baseline delay.

Also, shouldn't we look at the p5-ile instead? After all, the minimum seems to be the measure closest to what we are after: the true path delay.
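To get a feel for the p5 versus p95 question, here is a small experiment in the spirit of the estimator posted above, simplified to a fixed step and a configurable quantile `q`. `RunningQuantile` and the synthetic RTT model (a 20 ms floor plus an exponential tail) are assumptions for illustration, not measured data.

```python
import random


class RunningQuantile:
    """Streaming quantile estimate with a fixed step (simplified sketch)."""

    def __init__(self, start: float, q: float, step: float = 0.1):
        self.x = start
        self.q = q
        self.step = step

    def push(self, observation: float) -> None:
        # The up/down moves balance when a fraction q of samples lies below x.
        if observation > self.x:
            self.x += self.step * self.q
        elif observation < self.x:
            self.x -= self.step * (1.0 - self.q)


random.seed(1)
low = RunningQuantile(start=25.0, q=0.05)
high = RunningQuantile(start=25.0, q=0.95)
for _ in range(20000):
    # Synthetic RTT: hard lower limit at 20 ms, soft upper limit (long tail).
    rtt = 20.0 + random.expovariate(1.0 / 5.0)
    low.push(rtt)
    high.push(rtt)
print(f"p5 estimate {low.x:.1f} ms, p95 estimate {high.x:.1f} ms")
```

On a distribution shaped like this, the p5 estimate settles close to the floor while the p95 estimate sits well inside the tail, which is the trade-off being discussed.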

I've made some significant changes to my lua implementation over the last 2 days, simplified a lot of the statistics tracking. I have also returned the RTT tracking to the streaming median method as this seems to better cater for an LTE link with a variable ping.

Additionally @dlakelan I've taken inspiration from your work on the alternative HFSC qdisc design and incorporated an optional setting for the wanmonitor script to clamp/unclamp egress or ingress MSS to 540 when their rate falls below 3000kbps to keep jitter down.

This setting will not survive firewall reloads unless a supporting script is added to your firewall config to send a SIGUSR1 signal to the wanmonitor PID upon firewall reload, I will add a script to the wanmonitor repository for this purpose.
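The signalling pattern, sketched in Python for illustration only (wanmonitor itself is Lua, and none of these names come from it): the handler just records that a reload happened, and the main loop re-applies the clamp on its next pass. `state`, `on_firewall_reload` and `notify` are hypothetical.

```python
import os
import signal

# Shared flag; the main loop would check and clear it every cycle.
state = {"reapply_clamp": False}


def on_firewall_reload(signum, frame):
    """Only record the request; the main loop does the actual work."""
    state["reapply_clamp"] = True


signal.signal(signal.SIGUSR1, on_firewall_reload)


def notify(pid: int) -> None:
    """What a firewall-reload hook would do: signal the monitor process."""
    os.kill(pid, signal.SIGUSR1)
```

Keeping the handler this small avoids running firewall commands from inside a signal handler.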

I would appreciate it if people could validate these changes - on my end at least I'm seeing significant improvements in accuracy and reactivity (do note that the version on the repo over the last week would have been quite unstable due to experimentation so please take the latest version).

The service now tracks and uses just the following stats:

- Streaming median target ping (sampled from each interval's minimum)

- Maximum achieved rate (used in bandwidth increase calculation)

- Assured rate (used as the floor for bandwidth decreases)

- Current bandwidth utilisation

- Stable rate (derived from the last assured rate prior to the direction entering a latent state)

- This is used as the base factor prior to adjustment to determine decrease chance in each direction

@Lynx to your last question (appreciate it was a while back), the above combination of parameters avoids the need for ICMP timestamps when determining the direction of latency.

The significant reduction in statistic logic means that some of these methods might now be a better fit for re-implementation in shell if we wanted to incorporate into the SQM scripts - although shell probably still isn't the lightest of languages.

With all that said, I'm happy to keep maintaining the service in its standalone form as it currently performs more of a generalist 'wan monitoring' function, also handling events such as auto-reconnect.

In my mind at least this is useful since it would avoid having one service pinging remote hosts to adjust SQM, and then a separate service also pinging hosts to determine if the link is down etc.

With my architecture hat on, perhaps the pinging and line stats functionality should be broken into one purpose built microservice, and then separate SQM adjustment/connection handling processes could use those stats - conscious though that this would come with additional complexity and overhead.
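As a very rough picture of that split, here is an in-process stand-in for the "one measurement source, several consumers" idea. `LinkStats` and `StatsBus` are hypothetical, and a real implementation would need some form of IPC between separate processes, which is where the extra complexity and overhead would come from.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class LinkStats:
    rtt_ms: Optional[float]   # None when no reflector answered
    rx_kbps: float
    tx_kbps: float


class StatsBus:
    """Single measurement source fanned out to several consumers."""

    def __init__(self) -> None:
        self.subscribers: List[Callable[[LinkStats], None]] = []

    def subscribe(self, callback: Callable[[LinkStats], None]) -> None:
        self.subscribers.append(callback)

    def publish(self, stats: LinkStats) -> None:
        # Every consumer sees the same sample, so hosts are pinged only once.
        for callback in self.subscribers:
            callback(stats)
```

An SQM adjuster and a link-down detector would each call `subscribe()` once and then react to the same stream of samples.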