Hi

I have a couple of servers, plus some old desktop computers and micro PCs that I have turned into servers, which I will be using for various things. Some of the things I will be running are Nextcloud, a development web server, Plex, WireGuard and many more, but I would like to isolate them and I've been thinking about using DMZs to achieve this. I believe in OpenWrt and pfSense this is done by creating multiple interfaces and then defining firewall rules that allow only specific traffic, such as DHCP and DNS.
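
As a rough sketch of what I mean on the OpenWrt side, this is the kind of thing I'd expect to end up in /etc/config/firewall. The zone and network name `dmz` is a placeholder I've made up for illustration, not something from my current config, and I'm assuming a matching `dmz` interface already exists in /etc/config/network.

```
# Hypothetical isolated zone: nothing in it may reach the router or other zones by default
config zone
	option name 'dmz'
	list network 'dmz'
	option input 'REJECT'
	option output 'ACCEPT'
	option forward 'REJECT'

# Allow DNS queries from the zone to the router
config rule
	option name 'Allow-DMZ-DNS'
	option src 'dmz'
	option dest_port '53'
	option proto 'tcp udp'
	option target 'ACCEPT'

# Allow DHCP requests from the zone to the router
config rule
	option name 'Allow-DMZ-DHCP'
	option src 'dmz'
	option dest_port '67'
	option proto 'udp'
	option target 'ACCEPT'
```

With no `config forwarding` section for this zone, hosts in it should get an address and name resolution from the router but not be able to reach any other zone.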

My WRT1900ACSv2 is the centre of my network setup and should be capable of hosting multiple DMZs.

Below I've outlined what I intend to do with each server.

TrueNAS Server

My tower server has an HBA card for running ZFS in TrueNAS/FreeNAS. This server is purely a private LAN storage/backup system using nothing but SMB shares. As it is for private use only, it will stay on my existing private VLAN.

Proxmox Server

This is my most powerful server and the one with the most memory installed. It has Debian 11 Bullseye as the base OS with Proxmox 7 installed on top as the hypervisor for various virtual machines. At the moment I only power this server on as needed, because none of the things running on it need to be up 24/7, and I don't have the air conditioning, noise isolation or money to run it around the clock.

The VMs I will be running on this server are:

- Minecraft server
- NGINX RTMP streaming server with ffmpeg encoding
- An Ubuntu VM for compiling OpenWrt
- Large Plex server
- Windows Server for re-imaging Windows PCs

OMV Server

This also has Debian 11 Bullseye as the base OS, but with OpenMediaVault installed on top, which I will be using heavily with Docker. The things I will be running are:

- Home Assistant
- Nextcloud
- Small Plex server
- WireGuard
- SMB shares
- FTP server
- Pi-hole DNS
- DNSCrypt-Proxy

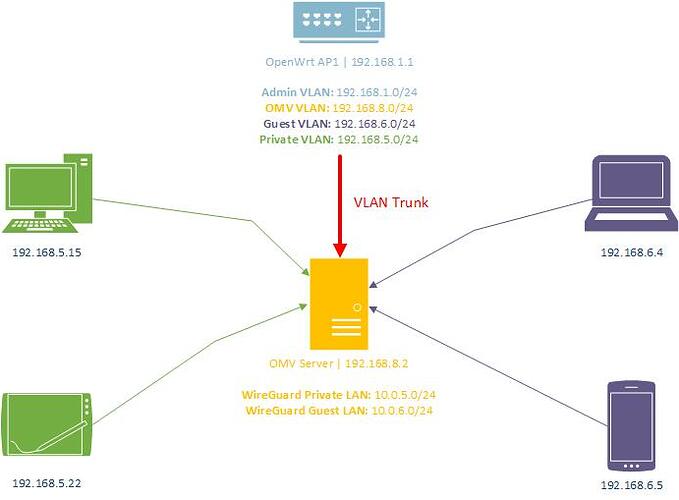

I am still considering running this server in its own DMZ/VLAN, as I would like to use OpenMediaVault for both private and guest users, such as friends and family members outside my household. I would essentially create two public shares: one for private LAN users connecting via my private VLAN/WireGuard, and the other for guest users connecting via the guest VLAN/WireGuard.

On OpenWrt I would simply need to create firewall rules to allow traffic from the private VLAN to the VLAN the OMV server sits on, plus equivalent rules so that guest VLAN users can reach the same OMV server. Each public Samba share would then use the `hosts allow` parameter so that only traffic originating from a specific subnet could connect.
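
Something along these lines is what I have in mind for /etc/config/firewall. The zone names `lan`, `guest` and `omv`, the address 192.168.30.10 and the choice to open only SMB (TCP 445) to guests are all assumptions for illustration, not values from my actual setup.

```
# Private VLAN may reach anything in the (hypothetical) OMV zone
config forwarding
	option src 'lan'
	option dest 'omv'

# Guest VLAN may only reach SMB on the OMV host (assumed address)
config rule
	option name 'Allow-Guest-SMB-to-OMV'
	option src 'guest'
	option dest 'omv'
	option dest_ip '192.168.30.10'
	option dest_port '445'
	option proto 'tcp'
	option target 'ACCEPT'
```

As far as I understand, the OpenWrt firewall is stateful, so replies from the OMV server should be allowed back without a matching rule in the other direction, and the OMV zone itself would get no forwarding towards `lan` or `guest`.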

My thinking is that placing the OMV server on either the private or the guest VLAN, without a network in the middle, would pose more of a security risk, as there would be a direct connection between the private and guest VLANs. Having the server in the middle on a third VLAN, hosting shares and WireGuard instances for both VLANs, seems like a safer approach. Correct me if I'm wrong.

Web Server

I'm hoping to run a development web server stack on Linux, and this is a computer I would like to fully isolate. Is there any risk in opening HTTP/HTTPS ports?

For the hypervisors and Docker hosts, such as my Proxmox and OMV servers, I would like to configure a VLAN trunk so that I can pass through the VLANs configured in OpenWrt and then assign those VLANs to the Docker containers and virtual machines running on these hosts.

However, these hypervisors will need to sit on a management VLAN of their own. My question is: can all the hypervisor servers sit on the same management VLAN, and would this be considered safe practice?

I have achieved this setup on my Debian/Proxmox server by creating a VLAN-aware bridge, creating a Proxmox management VLAN interface (vmbr0.8) and setting up a servers VLAN interface in OpenWrt. I've tagged all of the appropriate VLANs on the physical ports that the Proxmox server is connected to, and by using the VLAN-aware bridge in Proxmox I'm able to set the untagged VLAN for each VM and treat it as though it were on my private VLAN, for example.

Proxmox network configuration:

# network interface settings; autogenerated
# Please do NOT modify this file directly, unless you know what
# you're doing.
#
# If you want to manage parts of the network configuration manually,
# please utilize the 'source' or 'source-directory' directives to do
# so.
# PVE will preserve these directives, but will NOT read its network
# configuration from sourced files, so do not attempt to move any of
# the PVE managed interfaces into external files!

source /etc/network/interfaces.d/*

auto lo
iface lo inet loopback

auto eno1
iface eno1 inet manual

auto eno2
iface eno2 inet manual

auto bond0
iface bond0 inet manual
    bond-slaves eno1 eno2
    bond-miimon 100
    bond-mode 802.3ad
    bond-xmit-hash-policy layer2+3
    bond-downdelay 200
    bond-updelay 200
    bond-lacp-rate 1

auto vmbr0
iface vmbr0 inet manual
    bridge-ports bond0
    bridge-stp off
    bridge-fd 0
    bridge-vlan-aware yes
    bridge-vids 5-4094
    bridge-pvid 8

auto vmbr0.8
iface vmbr0.8 inet static
    address 192.168.8.122/24
    gateway 192.168.8.1