:bated breath:

Here are the eye-popping results of the tests, only minutes old. The only difference between these tests is the OpenWRT build.

Bravo. I can scarcely grasp the enormity of this accomplishment, Dave. Indeed, I can hardly even believe these results, but there they are. Sometimes a great notion, eh?

Steve

## BEFORE AQL, build r12234-7d7aa2fd92, ath10k (not ath10k-ct) ##

```
200416 21:27 /persist/home/srn root@nutkin# flent rrul -H rpc160
Started Flent 1.2.2 using Python 3.7.3.
Starting rrul test. Expected run time: 70 seconds.
Data file written to ./rrul-2020-04-16T212920.834707.flent.gz.

Summary of rrul test run at 2020-04-17 01:29:20.834707:

                         avg    median    # data pts
Ping (ms) ICMP    :  3266.06   2255.00 ms        195
Ping (ms) UDP BE  :   104.00      3.05 ms         83
Ping (ms) UDP BK  :    78.56      2.88 ms         68
Ping (ms) UDP EF  :    48.77     69.40 ms        267
Ping (ms) avg     :   874.35   1128.27 ms        348
TCP download BE   :     0.58      0.47 Mbits/s   126
TCP download BK   :     0.08      0.04 Mbits/s    59
TCP download CS5  :    19.31     19.29 Mbits/s   291
TCP download EF   :    20.78     20.52 Mbits/s   290
TCP download avg  :    10.19     12.62 Mbits/s   300
TCP download sum  :    40.75     41.03 Mbits/s   300
TCP totals        :    55.13     55.15 Mbits/s   302
TCP upload BE     :     0.03      0.20 Mbits/s     2
TCP upload BK     :     0.01      0.07 Mbits/s     1
TCP upload CS5    :     6.14      4.62 Mbits/s   142
TCP upload EF     :     8.20      8.39 Mbits/s   144
TCP upload avg    :     3.59      7.23 Mbits/s   301
TCP upload sum    :    14.38     14.52 Mbits/s   301

200416 21:31 /persist/home/srn root@nutkin# flent rrul_be -H rpc160
Started Flent 1.2.2 using Python 3.7.3.
Starting rrul_be test. Expected run time: 70 seconds.
Data file written to ./rrul_be-2020-04-16T213145.337942.flent.gz.

Summary of rrul_be test run at 2020-04-17 01:31:45.337942:

                         avg    median    # data pts
Ping (ms) ICMP    :   380.24    409.00 ms        290
Ping (ms) UDP BE1 :    47.59    198.41 ms        127
Ping (ms) UDP BE2 :    48.16    166.94 ms        121
Ping (ms) UDP BE3 :    47.44    105.24 ms        110
Ping (ms) avg     :   130.86    414.18 ms        351
TCP download BE   :    11.56      9.64 Mbits/s   179
TCP download BE2  :    11.54     10.54 Mbits/s   132
TCP download BE3  :     9.07      7.50 Mbits/s   155
TCP download BE4  :    12.85     13.30 Mbits/s   146
TCP download avg  :    11.25     11.75 Mbits/s   301
TCP download sum  :    45.02     45.53 Mbits/s   301
TCP totals        :    45.35     45.52 Mbits/s   302
TCP upload BE     :     0.07      0.08 Mbits/s    15
TCP upload BE2    :     0.09      0.12 Mbits/s    12
TCP upload BE3    :     0.09      0.11 Mbits/s    16
TCP upload BE4    :     0.08      0.10 Mbits/s    16
TCP upload avg    :     0.08      0.07 Mbits/s   149
TCP upload sum    :     0.33      0.09 Mbits/s   149
```

## AFTER AQL, build r12991-75ef28be59, ath10k (not ath10k-ct) ##

```
200417 12:08 /persist/home/srn root@nutkin# flent rrul -H rpc160
Started Flent 1.2.2 using Python 3.7.3.
Starting rrul test. Expected run time: 70 seconds.
Data file written to ./rrul-2020-04-17T120940.630122.flent.gz.

Summary of rrul test run at 2020-04-17 16:09:40.630122:

                         avg    median    # data pts
Ping (ms) ICMP    :    44.11     43.90 ms        349
Ping (ms) UDP BE  :    77.41     47.48 ms        300
Ping (ms) UDP BK  :    77.40     50.03 ms        303
Ping (ms) UDP EF  :    78.21     50.40 ms        308
Ping (ms) avg     :    69.28     50.64 ms        351
TCP download BE   :     8.64      7.46 Mbits/s   248
TCP download BK   :     9.02      7.60 Mbits/s   251
TCP download CS5  :     9.04      7.54 Mbits/s   252
TCP download EF   :     8.78      7.60 Mbits/s   245
TCP download avg  :     8.87      8.17 Mbits/s   301
TCP download sum  :    35.48     32.68 Mbits/s   301
TCP totals        :   104.89    107.37 Mbits/s   305
TCP upload BE     :    17.28     17.34 Mbits/s   185
TCP upload BK     :    17.21     17.06 Mbits/s   188
TCP upload CS5    :    16.27     17.58 Mbits/s   182
TCP upload EF     :    18.65     19.35 Mbits/s   189
TCP upload avg    :    17.35     18.17 Mbits/s   302
TCP upload sum    :    69.41     72.58 Mbits/s   302

200417 12:10 /persist/home/srn root@nutkin# flent rrul_be -H rpc160
Started Flent 1.2.2 using Python 3.7.3.
Starting rrul_be test. Expected run time: 70 seconds.
Data file written to ./rrul_be-2020-04-17T121114.896308.flent.gz.

Summary of rrul_be test run at 2020-04-17 16:11:14.896308:

                         avg    median    # data pts
Ping (ms) ICMP    :    41.30     43.10 ms        349
Ping (ms) UDP BE1 :    79.81     44.68 ms        313
Ping (ms) UDP BE2 :    80.77     45.60 ms        311
Ping (ms) UDP BE3 :    80.31     44.51 ms        312
Ping (ms) avg     :    70.55     47.27 ms        351
TCP download BE   :     9.28      8.06 Mbits/s   257
TCP download BE2  :    10.23      8.23 Mbits/s   261
TCP download BE3  :     9.68      8.13 Mbits/s   258
TCP download BE4  :     9.09      8.40 Mbits/s   257
TCP download avg  :     9.57      8.60 Mbits/s   301
TCP download sum  :    38.28     34.39 Mbits/s   301
TCP totals        :   108.46    109.56 Mbits/s   304
TCP upload BE     :    16.81     16.41 Mbits/s   185
TCP upload BE2    :    16.39     17.21 Mbits/s   185
TCP upload BE3    :    19.92     21.25 Mbits/s   183
TCP upload BE4    :    17.06     16.73 Mbits/s   191
TCP upload avg    :    17.55     18.55 Mbits/s   301
TCP upload sum    :    70.18     74.17 Mbits/s   301
```

I would be as happy as you are if I weren't painfully aware of the hundreds of millions of wifi APs shipped in the last four years that behave like your "before" result. And I think it's still possible to do a great deal better on the rrul_be test, but honestly I don't know enough about batman's encapsulation to tell.

Could you slam the associated `*.flent.gz` files up somewhere?

Also, could you run this test?

```
flent -H the_server_ip --step-size=.04 --socket-stats --te=upload_streams=16 tcp_nup
```

If you can get a packet capture of the "after" result (taken on the server), I'd love to see it:

```
tcpdump -i whatever_the_device_is -s 140 -n -w tcp_nup.cap
```

Note that if you run the capture on the AP, it will slow the AP down a lot and heisenbug the result; it's better to capture on the ultimate target.

Alas, I have to declare the test we are celebrating very flawed. I ran the "before AQL" test via my notebook's wifi interface but the "after AQL" test via wire.

I discovered this by looking at the `*.flent.gz` files (which are now available at rosepark dot us slash flentDotGzs1.tar).

To even things up, here are the much more comparable (and, by comparison at least, more believable) results I get by using the wifi interface again with the post-AQL build. I must go back, sigh, reload the old build, and run the pre-AQL test by wire.

Science means apples to apples, among other things.

```
200417 12:12 /persist/home/srn root@nutkin# flent rrul -H rpc160
Started Flent 1.2.2 using Python 3.7.3.
Starting rrul test. Expected run time: 70 seconds.
Data file written to ./rrul-2020-04-17T131955.475716.flent.gz.

Summary of rrul test run at 2020-04-17 17:19:55.475716:

                         avg    median    # data pts
Ping (ms) ICMP    :  2803.39   2622.00 ms        226
Ping (ms) UDP BE  :    99.09     16.87 ms         93
Ping (ms) UDP BK  :    85.10      2.73 ms         69
Ping (ms) UDP EF  :    45.00     74.43 ms        270
Ping (ms) avg     :   758.15   1239.33 ms        351
TCP download BE   :     0.81      0.64 Mbits/s   138
TCP download BK   :     0.07      0.04 Mbits/s    63
TCP download CS5  :    18.90     18.67 Mbits/s   286
TCP download EF   :    19.11     18.99 Mbits/s   287
TCP download avg  :     9.72     11.63 Mbits/s   301
TCP download sum  :    38.89     38.82 Mbits/s   301
TCP totals        :    53.73     53.94 Mbits/s   304
TCP upload BE     :     0.02      0.46 Mbits/s     1
TCP upload BK     :     0.01      0.03 Mbits/s     1
TCP upload CS5    :     8.30      8.96 Mbits/s   156
TCP upload EF     :     6.51      6.63 Mbits/s   162
TCP upload avg    :     3.71      7.49 Mbits/s   302
TCP upload sum    :    14.84     14.96 Mbits/s   302

200417 13:21 /persist/home/srn root@nutkin# flent rrul_be -H rpc160
Started Flent 1.2.2 using Python 3.7.3.
Starting rrul_be test. Expected run time: 70 seconds.
Data file written to ./rrul_be-2020-04-17T132127.818583.flent.gz.

Summary of rrul_be test run at 2020-04-17 17:21:27.818583:

                         avg    median    # data pts
Ping (ms) ICMP    :   376.73    429.00 ms        283
Ping (ms) UDP BE1 :    41.21    212.31 ms        107
Ping (ms) UDP BE2 :    51.99    131.07 ms        116
Ping (ms) UDP BE3 :    50.60    209.64 ms        117
Ping (ms) avg     :   130.13    434.71 ms        351
TCP download BE   :     9.58      8.58 Mbits/s   136
TCP download BE2  :    14.19     12.68 Mbits/s   162
TCP download BE3  :    11.76     11.59 Mbits/s   197
TCP download BE4  :    11.15     10.20 Mbits/s   183
TCP download avg  :    11.67     12.31 Mbits/s   301
TCP download sum  :    46.68     44.44 Mbits/s   301
TCP totals        :    47.01     44.24 Mbits/s   303
TCP upload BE     :     0.08      0.08 Mbits/s    16
TCP upload BE2    :     0.08      0.08 Mbits/s    17
TCP upload BE3    :     0.09      0.11 Mbits/s    14
TCP upload BE4    :     0.08      0.12 Mbits/s    12
TCP upload avg    :     0.08      0.07 Mbits/s   146
TCP upload sum    :     0.33      0.09 Mbits/s   146

200417 13:22 /persist/home/srn root@nutkin#
```

Using the wired interface of my notebook for both the before-AQL and after-AQL flent tests seems to indicate that after-AQL is actually not such a big advantage, if indeed any advantage at all.

I was hopeful; now I don't know what to think.

Steve

## BEFORE AQL, build r12234-7d7aa2fd92, ath10k (not ath10k-ct) ##

```
200417 14:54 /tmp root@nutkin# flent rrul -H rpc160
Started Flent 1.2.2 using Python 3.7.3.
Starting rrul test. Expected run time: 70 seconds.
Data file written to ./rrul-2020-04-17T145454.743300.flent.gz.

Summary of rrul test run at 2020-04-17 18:54:54.743300:

                         avg    median    # data pts
Ping (ms) ICMP    :    27.22     26.70 ms        349
Ping (ms) UDP BE  :    83.97     33.42 ms        309
Ping (ms) UDP BK  :    84.61     31.98 ms        311
Ping (ms) UDP EF  :    83.56     31.41 ms        311
Ping (ms) avg     :    69.84     34.18 ms        351
TCP download BE   :     7.84      6.72 Mbits/s   248
TCP download BK   :     8.01      6.88 Mbits/s   245
TCP download CS5  :     8.31      6.72 Mbits/s   248
TCP download EF   :     8.49      7.19 Mbits/s   245
TCP download avg  :     8.16      7.39 Mbits/s   301
TCP download sum  :    32.65     29.46 Mbits/s   301
TCP totals        :   116.70    118.72 Mbits/s   305
TCP upload BE     :    19.85     20.44 Mbits/s   175
TCP upload BK     :    22.81     22.92 Mbits/s   195
TCP upload CS5    :    19.86     19.43 Mbits/s   207
TCP upload EF     :    21.53     22.10 Mbits/s   194
TCP upload avg    :    21.01     21.96 Mbits/s   302
TCP upload sum    :    84.05     87.71 Mbits/s   302

200417 14:56 /tmp root@nutkin# flent rrul_be -H rpc160
Started Flent 1.2.2 using Python 3.7.3.
Starting rrul_be test. Expected run time: 70 seconds.
Data file written to ./rrul_be-2020-04-17T145629.817434.flent.gz.

Summary of rrul_be test run at 2020-04-17 18:56:29.817434:

                         avg    median    # data pts
Ping (ms) ICMP    :    24.44     25.10 ms        349
Ping (ms) UDP BE1 :    87.96     30.52 ms        316
Ping (ms) UDP BE2 :    88.17     30.55 ms        313
Ping (ms) UDP BE3 :    87.99     30.22 ms        315
Ping (ms) avg     :    72.14     31.99 ms        351
TCP download BE   :     7.56      6.70 Mbits/s   243
TCP download BE2  :     7.69      6.74 Mbits/s   244
TCP download BE3  :     7.54      6.48 Mbits/s   236
TCP download BE4  :     7.75      6.73 Mbits/s   241
TCP download avg  :     7.63      7.37 Mbits/s   301
TCP download sum  :    30.54     29.47 Mbits/s   301
TCP totals        :   116.69    118.76 Mbits/s   304
TCP upload BE     :    24.79     23.37 Mbits/s   196
TCP upload BE2    :    20.23     21.01 Mbits/s   206
TCP upload BE3    :    19.05     20.34 Mbits/s   203
TCP upload BE4    :    22.08     22.47 Mbits/s   217
TCP upload avg    :    21.54     22.17 Mbits/s   301
TCP upload sum    :    86.15     88.59 Mbits/s   301
```

## AFTER AQL, build r12991-75ef28be59, ath10k (not ath10k-ct) ##

```
200417 12:08 /persist/home/srn root@nutkin# flent rrul -H rpc160
Started Flent 1.2.2 using Python 3.7.3.
Starting rrul test. Expected run time: 70 seconds.
Data file written to ./rrul-2020-04-17T120940.630122.flent.gz.

Summary of rrul test run at 2020-04-17 16:09:40.630122:

                         avg    median    # data pts
Ping (ms) ICMP    :    44.11     43.90 ms        349
Ping (ms) UDP BE  :    77.41     47.48 ms        300
Ping (ms) UDP BK  :    77.40     50.03 ms        303
Ping (ms) UDP EF  :    78.21     50.40 ms        308
Ping (ms) avg     :    69.28     50.64 ms        351
TCP download BE   :     8.64      7.46 Mbits/s   248
TCP download BK   :     9.02      7.60 Mbits/s   251
TCP download CS5  :     9.04      7.54 Mbits/s   252
TCP download EF   :     8.78      7.60 Mbits/s   245
TCP download avg  :     8.87      8.17 Mbits/s   301
TCP download sum  :    35.48     32.68 Mbits/s   301
TCP totals        :   104.89    107.37 Mbits/s   305
TCP upload BE     :    17.28     17.34 Mbits/s   185
TCP upload BK     :    17.21     17.06 Mbits/s   188
TCP upload CS5    :    16.27     17.58 Mbits/s   182
TCP upload EF     :    18.65     19.35 Mbits/s   189
TCP upload avg    :    17.35     18.17 Mbits/s   302
TCP upload sum    :    69.41     72.58 Mbits/s   302

200417 12:10 /persist/home/srn root@nutkin# flent rrul_be -H rpc160
Started Flent 1.2.2 using Python 3.7.3.
Starting rrul_be test. Expected run time: 70 seconds.
Data file written to ./rrul_be-2020-04-17T121114.896308.flent.gz.

Summary of rrul_be test run at 2020-04-17 16:11:14.896308:

                         avg    median    # data pts
Ping (ms) ICMP    :    41.30     43.10 ms        349
Ping (ms) UDP BE1 :    79.81     44.68 ms        313
Ping (ms) UDP BE2 :    80.77     45.60 ms        311
Ping (ms) UDP BE3 :    80.31     44.51 ms        312
Ping (ms) avg     :    70.55     47.27 ms        351
TCP download BE   :     9.28      8.06 Mbits/s   257
TCP download BE2  :    10.23      8.23 Mbits/s   261
TCP download BE3  :     9.68      8.13 Mbits/s   258
TCP download BE4  :     9.09      8.40 Mbits/s   257
TCP download avg  :     9.57      8.60 Mbits/s   301
TCP download sum  :    38.28     34.39 Mbits/s   301
TCP totals        :   108.46    109.56 Mbits/s   304
TCP upload BE     :    16.81     16.41 Mbits/s   185
TCP upload BE2    :    16.39     17.21 Mbits/s   185
TCP upload BE3    :    19.92     21.25 Mbits/s   183
TCP upload BE4    :    17.06     16.73 Mbits/s   191
TCP upload avg    :    17.55     18.55 Mbits/s   301
TCP upload sum    :    70.18     74.17 Mbits/s   301
```
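To put rough numbers on the reading that, over wire, the after-AQL build is "not such a big advantage", the relative change of each median can be computed directly. A minimal sketch; the function name is illustrative, and the inputs in the example below are the rrul medians copied from the wired BEFORE and AFTER summaries above.

```c
/* Percent change from `before` to `after` (positive: `after` is larger).
 * For latency metrics larger is worse; for throughput larger is better. */
double pct_change(double before, double after)
{
    return (after - before) / before * 100.0;
}
```

For the wired rrul runs, the ICMP ping median going from 26.70 ms to 43.90 ms is about +64% (worse), and the TCP totals median going from 118.72 to 107.37 Mbit/s is about -10% (also worse), which is consistent with the post-AQL build being no improvement on this wired path.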

I don't know what to think either. What is your topology?

I take it laptop - wifi AP - wifi AP - server was the basic structure of the test. Certainly, going over wifi to the wifi AP will drive it harder to distraction; it's multiplexing more than one wifi client that really messes up wifi.

Looking over your two tarballs, the result you had there is really about what I expected from AQL (about 40-60 ms of latency on the rrul test), and the pre-AQL result is about what I expected too (2-3 s).

what is the laptop's wifi chip?


From lshw:

```
*-network
     description: Wireless interface
     product: Centrino Advanced-N 6205 [Taylor Peak]
     vendor: Intel Corporation
     physical id: 0
     bus info: pci@0000:03:00.0
     logical name: wlp3s0
     version: 34
     serial: 8c:70:5a:f8:c8:14
     width: 64 bits
     clock: 33MHz
     capabilities: pm msi pciexpress bus_master cap_list ethernet physical wireless
     configuration: broadcast=yes driver=iwlwifi driverversion=4.19.0-8-amd64 firmware=18.168.6.1 ip=192.168.159.190 latency=0 link=yes multicast=yes wireless=IEEE 802.11
     resources: irq:36 memory:f2500000-f2501fff
```

...but I was trying to limit the wifi exposure of the test to just the radios involved in batman-adv, so I tried to get that chip out of the circuit.

So it was laptop - GigE ethernet - batman - batman - GigE ethernet - server where you could discern no difference?

Looks to me like you've got an extra Gbit ethernet in your chain above. I would characterize it as:

laptop - 1Gbit ethernet - batmanrouter (- 5GHz radio -) batmanrouter

with netserver running right on the second batmanrouter. But maybe that's a distinction without a difference. Dave, I dunno how to read these tests, really; I'm just guessing. But the performance with the new build seemed (trivially) worse, as opposed to (much) better.

The problem I'm having in the field is with VOIP, which I gather is very sensitive to bufferbloat. That chain looks like this:

(internet actually around 120Mbit but claimed to be 1Gbit, ha ha) - batman (- 5GHz radio -) batman - 100Mbit ethernet - VOIP box - analog wiring - telephone.

Since no wifi is involved in that chain other than the batman radio, I was particularly interested in factoring all other wifi out of the test. The tests make me think the new build may not help me with the VOIP drop-out issue. The same VOIP arrangement with the same vendors (Obihai, Google Voice) works essentially perfectly when the chain is:

(internet actually around 120Mbit but claimed to be 1Gbit, ha ha) - 1Gbit router (irrelevantly running batman) - VOIP box - analog wiring - telephone.

(Right now I'm trying to work out a safe way to upgrade all 10 mesh routers without having to invade universally-quarantined homes here in New York. If I break the mesh, I will lose the access required to fix it. Still, I'd rather endure this safety-programming headache than spread or contract the virus.)

@dtaht I have been following your research and efforts for some time and I am in awe of the improvements you've introduced in the WiFi world. Thank you so much for continuing to develop and innovate!

I am the happy owner of a Netgear R7800 router, IMHO one of the finest out there. That said, I have moved my routing/firewall over to a pfSense box for reasons unrelated to the R7800's performance capabilities. But I still use my R7800 entirely as an AP at this point.

I have been running @hnyman's snapshot builds and have been blown away by the low WiFi latency with the 5.4 kernel and new ath10k-ct driver. Astonishing difference, really.

Now to my question for you: given that the R7800 has 512MB of RAM, is there still any advantage to be gained by using the kmod-ath10k-ct-smallbuffers module? Does it introduce any further latency improvements due to smaller buffers, even on devices that are not otherwise RAM-bound?

More public benchmarks comparing the two versions would be good. I'm happy to hear the 7800 is working well. I have one in my junk bin somewhere.


I'm weird: I go for low latency and don't care at all about peak throughput on a lab bench. (What kind of throughput are you getting?) Having less buffering always means less latency under load.
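The arithmetic behind "less buffering means less latency" is simple: a packet that arrives behind a backlog has to wait for that backlog to drain at the link rate. The sketch below assumes a single FIFO drained at a constant rate, which real wifi (per-station queues, varying rates, aggregation) only approximates; the function name and the example figures are hypothetical, not ath10k-ct or smallbuffers settings.

```c
#include <stdint.h>

/* Time (ms) for a newly arrived packet to reach the head of a FIFO that
 * already holds backlog_bytes, drained at a constant rate_bps (bits/s).
 * Simplification: one queue, fixed rate, no aggregation or retries. */
double queue_delay_ms(uint64_t backlog_bytes, double rate_bps)
{
    return (double)backlog_bytes * 8.0 * 1000.0 / rate_bps;
}
```

For example, `queue_delay_ms(1500000, 100e6)` is 120 ms, and halving the backlog halves the delay. The same 1.5 MB at 10 Mbit/s costs 1.2 s, which is why buffering sized for peak throughput hurts most when the link slows down.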

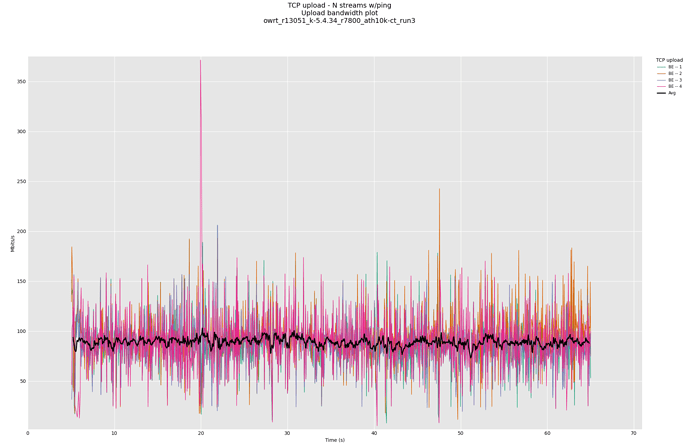

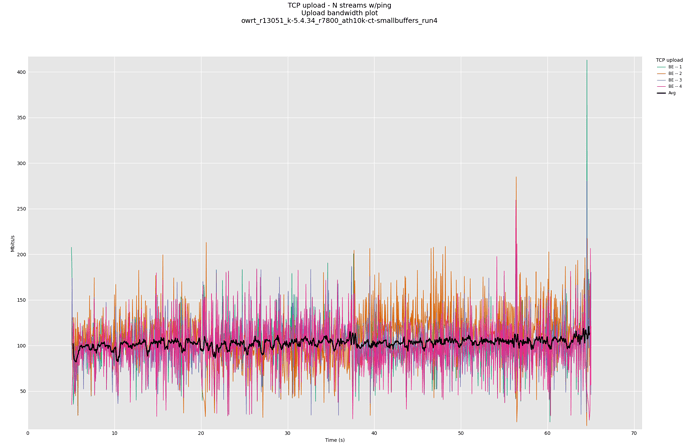

My big project at the moment is trying to get the CoDel target for TCP on the ath10k down to sanity, and to jiggle AQL, so I've been running tests like this:

```
flent -H the_server_box --socket-stats --te=upload_streams=4 --step-size=.04 -t 'whatever_is_under_test' tcp_nup
```

And... I'm running out of CPU on the low-end UAP mesh routers I'm using at 300+ Mbit with VHT80. Assuming this patchset works out, I'd love to see @hnyman give 'em a shot.

Running netperf on the router itself will generally A) invoke TSQ (TCP Small Queues) and automatically rate-limit, and B) run you out of CPU. You can beat A) by running more flows (16 or more), but that means you hit B) sooner.
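Points A) and B) can be made concrete with a rough model of TCP Small Queues: each socket may only have a bounded number of bytes queued below TCP (qdisc plus driver), roughly a small slice of time's worth of data at its pacing rate, with a floor of two packets and a ceiling from the `tcp_limit_output_bytes` sysctl. The sketch is loosely modeled on the kernel's `tcp_small_queue_check()`; the exact formula differs between kernel versions, so treat it as an assumption, and the function names and example numbers are hypothetical.

```c
#include <stdint.h>

/* Assumed, simplified TSQ cap: bytes one TCP socket may have queued below
 * TCP. pacing_rate_Bps is in bytes/s; a shift of 10 is roughly 1 ms of data. */
uint64_t tsq_limit_bytes(uint64_t pacing_rate_Bps, unsigned pacing_shift,
                         uint64_t skb_truesize, uint64_t limit_output_bytes)
{
    uint64_t limit = pacing_rate_Bps >> pacing_shift; /* ~2^-shift s of data */

    if (limit < 2 * skb_truesize)
        limit = 2 * skb_truesize;      /* always allow at least two packets */
    if (limit > limit_output_bytes)
        limit = limit_output_bytes;    /* sysctl ceiling */
    return limit;
}

/* Each bulk flow gets its own cap, so the aggregate backlog grows roughly
 * linearly with the number of flows: more flows can outrun TSQ's throttling. */
uint64_t aggregate_backlog_bytes(unsigned n_flows, uint64_t per_flow_limit)
{
    return (uint64_t)n_flows * per_flow_limit;
}
```

With a 12.5 MB/s (100 Mbit/s) pacing rate and a shift of 10, one flow may park about 12 KB below TCP; 16 flows park 16 times that, which is why more flows push past the self-limiting, at the price of more CPU on the box running netperf.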

A valid test is one of the customer environment: a real client (or set thereof) and real server(s).

Oh, good, the QCA9984 is the chipset in the Netgear. The QCA9988 is what I have tested. We had so many issues with the darn firmware, across so many chipset variants, that I'd given up on the ath10k entirely... I'm glad so many have persevered in fixing so many other bugs here (huge hat tip to Ben Greear), and I go back to asking what kind of throughput you are getting out of it...

Thanks for the quick reply! If you ever dig your R7800 out of your junk pile and need to find it a new home, let me know...

I'm in the process of building a stripped down build from the latest snapshot that will be bare-bones for my AP needs. I'll build for ath10k-ct and a separate build for ath10k-ct-smallbuffers and do some benchmarking with flent tomorrow AM. What a fantastic tool it is!

Looking forward to seeing the results in the morning and sharing with you.

I forgot a key parameter to flent above: also add --socket-stats to gather TCP info. Should be a nice day tomorrow.

Oops again. Testing is a can of worms. I'll do this over again ASAP, but not as soon as I'd like.

I have some results from both ath10k-ct and ath10k-ct-smallbuffers tests. What is the best way to get them to you?

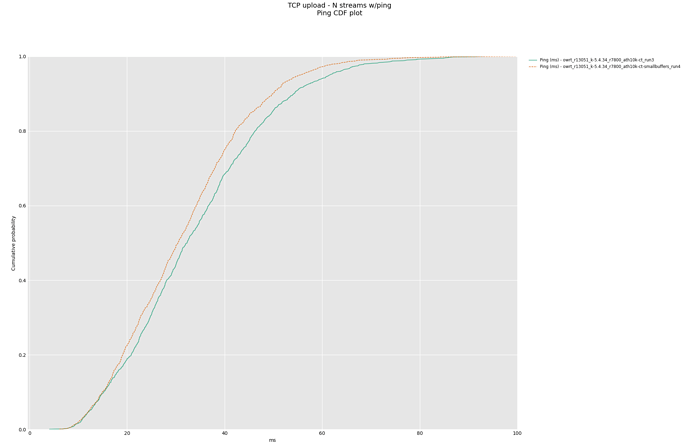

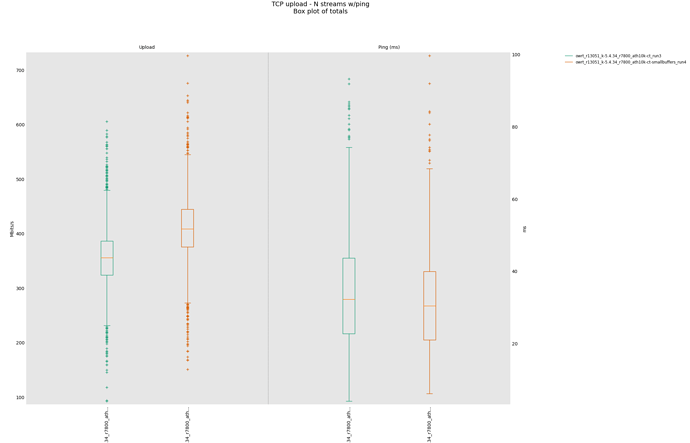

R7800 ath10k-ct:

R7800 ath10k-ct-smallbuffers:

Hahaha, you're showing that smallbuffers works mildly better, but not so much better that it couldn't just be noise between the two test results.

If you can tar up the flent.gz files and send them to dave dot taht at gmail dot com, I'd appreciate it.

If you have some captures, those are too big for email. Dropbox? scp to some site you control? I can give you an account on a bufferbloat.net server if you like; just give me a desired login and SSH key.

If you feel like patching yer OpenWrt kernel, you can patch the mac80211 backports in OpenWrt to reduce the CoDel target. Please note this is still the wrong patch for 2.4GHz, but... my guess is you'll see quite a reduction in TCP RTT at no cost in throughput (for this particular test). Put http://www.taht.net/~d/982-do-codel-right.patch into package/kernel/mac80211/patches/ath/982-do-codel-right.patch, which I think is the right thing and the right place.

```diff
diff --git a/net/mac80211/sta_info.c b/net/mac80211/sta_info.c
index c431722..92ba09b 100644
--- a/net/mac80211/sta_info.c
+++ b/net/mac80211/sta_info.c
@@ -476,10 +476,11 @@ struct sta_info *sta_info_alloc(struct ieee80211_sub_if_data *sdata,
 	sta->sta.max_rc_amsdu_len = IEEE80211_MAX_MPDU_LEN_HT_BA;
 
 	sta->cparams.ce_threshold = CODEL_DISABLED_THRESHOLD;
-	sta->cparams.target = MS2TIME(20);
+	sta->cparams.target = MS2TIME(5);
 	sta->cparams.interval = MS2TIME(100);
 	sta->cparams.ecn = true;
-
+	sta_dbg(sdata, "Codel target, interval %d, %d\n", sta->cparams.target,
+		sta->cparams.interval);
 	sta_dbg(sdata, "Allocated STA %pM\n", sta->sta.addr);
 
 	return sta;
@@ -2468,15 +2469,9 @@ static void sta_update_codel_params(struct sta_info *sta, u32 thr)
 	if (!sta->sdata->local->ops->wake_tx_queue)
 		return;
 
-	if (thr && thr < STA_SLOW_THRESHOLD * sta->local->num_sta) {
-		sta->cparams.target = MS2TIME(50);
-		sta->cparams.interval = MS2TIME(300);
-		sta->cparams.ecn = false;
-	} else {
-		sta->cparams.target = MS2TIME(20);
-		sta->cparams.interval = MS2TIME(100);
-		sta->cparams.ecn = true;
-	}
+	sta->cparams.target = MS2TIME(5);
+	sta->cparams.interval = MS2TIME(100);
+	sta->cparams.ecn = true;
 }
diff --git a/net/mac80211/tx.c b/net/mac80211/tx.c
index 535911b..ca50d0a 100644
--- a/net/mac80211/tx.c
+++ b/net/mac80211/tx.c
@@ -1551,7 +1551,7 @@ int ieee80211_txq_setup_flows(struct ieee80211_local *local)
 	codel_params_init(&local->cparams);
 	local->cparams.interval = MS2TIME(100);
-	local->cparams.target = MS2TIME(20);
+	local->cparams.target = MS2TIME(5);
 	local->cparams.ecn = true;
 	local->cvars = kcalloc(fq->flows_cnt, sizeof(local->cvars[0]),
```
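For readers wondering what the `target` and `interval` knobs in this patch control, here is a heavily simplified sketch of CoDel's dequeue-time decision, following the algorithm as published in RFC 8289 rather than mac80211's own CoDel code; the struct and function names are hypothetical. Once packets have waited in the queue longer than `target` for a full `interval`, CoDel starts dropping (or, with `ecn = true`, marking), and it spaces later drops by `interval / sqrt(count)` so they come closer together while the delay persists. Lowering `target` from 20 ms to 5 ms, as the patch does, makes it react to a standing queue a quarter as long. The sketch omits RFC 8289's refinements (reusing a recent `count`, ignoring queues smaller than one MTU).

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical, simplified CoDel state; all times in microseconds.
 * This is not mac80211's implementation, only the core idea. */
struct codel_sketch {
    uint64_t target_us;      /* acceptable standing-queue delay (5 ms in the patch) */
    uint64_t interval_us;    /* how long delay must persist before acting (100 ms) */
    uint64_t above_until_us; /* end of the grace interval; 0 = delay below target */
    uint64_t drop_next_us;   /* when the next drop/mark is due while dropping */
    uint32_t count;          /* drops/marks in the current dropping episode */
    bool dropping;
};

/* floor(sqrt(n)) by binary search, so the sketch needs no libm. */
static uint64_t isqrt_u64(uint64_t n)
{
    uint64_t lo = 0, hi = 1ULL << 32; /* invariant: lo*lo <= n < hi*hi */

    while (lo + 1 < hi) {
        uint64_t mid = lo + (hi - lo) / 2;
        if (mid <= n / mid)
            lo = mid;
        else
            hi = mid;
    }
    return lo;
}

/* Control law: next drop at t + interval / sqrt(count), computed as
 * sqrt(interval^2 / count) to stay in integer arithmetic. */
static uint64_t control_law(const struct codel_sketch *c, uint64_t t)
{
    return t + isqrt_u64(c->interval_us * c->interval_us / c->count);
}

/* At dequeue time now_us, decide whether a packet that spent sojourn_us
 * in the queue should be dropped (or ECN-marked). */
bool codel_should_drop(struct codel_sketch *c, uint64_t now_us,
                       uint64_t sojourn_us)
{
    if (sojourn_us < c->target_us) {
        /* Delay is acceptable: leave the dropping state, reset the clock. */
        c->above_until_us = 0;
        c->dropping = false;
        return false;
    }
    if (!c->dropping) {
        if (c->above_until_us == 0) {
            /* Delay just rose above target: start the grace interval. */
            c->above_until_us = now_us + c->interval_us;
            return false;
        }
        if (now_us < c->above_until_us)
            return false; /* not above target for a full interval yet */
        c->dropping = true;
        c->count = 1;
        c->drop_next_us = control_law(c, now_us);
        return true;
    }
    if (now_us >= c->drop_next_us) {
        /* Delay persists: drop again, sooner each time. */
        c->count++;
        c->drop_next_us = control_law(c, c->drop_next_us);
        return true;
    }
    return false;
}
```

With target = 5 ms and interval = 100 ms, a flow whose packets keep waiting 10 ms gets its first drop or mark 100 ms after the delay appears, the second 100 ms after that, and the third about 71 ms later; the moment the delay falls back under 5 ms, the state resets.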