+1; this will affect throughput though, given that WiFi has a relatively high temporal overhead per txop, no? I guess that just means that the optimization needs to look at both the number of txops available and the goodput/airtime ratio?

Heh. So we've taken this conversation from 3 things to six.

I too have seen mdns melt down a campus network. There was a project started long ago to make it possible for mdns users to switch to unicast. I don't know the current status or where the code is... I'll go look.

Yes, there is a marked decline in bulk throughput at a 3ms txop. However, short transactions and short flows do tons better, if you consider that each potential RTT is shorter in the slow start phase.

Via the author: (arguably if enough people care about improving mdns on this go-round, we should start a different bug!)

The dnssd-proxy code is here: https://github.com/Abhayakara/mdnsresponder

The dnssd-proxy code is where you’d start; what you’d need would be to run it on the router and have the router block forwarding of multicast. The discovery proxy would have to be discoverable as a browsing domain, per RFC 6763, and it would have to be possible for the router to do multicast and receive multicast replies. So there’s a bit of work to do to make this entirely work on a home router, but it’s totally doable.

To clarify, by “on the router,” I mean the WiFi access point. If there are multiple access points, you need each access point to have its own browsing domain, each of which appears in the list of legacy browsing domains for the subnet. Each access point would only multicast to the WiFi, not to ethernet. And in the case where you have an ethernet backbone that you also want to participate in discovery, you put a discovery proxy on ethernet as well.

NOTE: I wrote this before seeing @dtaht's post on dnssd-proxy so it's all really in response to

I'm not sure the solution to bad LAN design is to hack perfectly fine working multicast ideas and bork them so WiFi doesn't melt.

Honestly I think we need to move ASAP to ipv6 and use multicast a lot MORE, with proper igmp and MLD snooping in switches.

For example, LAN games should be multicast ipv6. IPTV should be multicast ipv6. http proxies should announce on multicast ipv6 (way way better than the stupid proxy autodiscovery mechanisms we have now). There should be some kind of multicast "super announcer" that can aggregate announcements into larger but fewer packets. A client should be able to send a request to ff02::abc:123 or something and get a list of all devices that have announced on multicast jammed into 1 or 2 packets.

Even $30 small business switches need to get MLD support. We live in a world where people are pissed if they get "only" 100 Mbps on WiFi, and yet by default APs allow data rates down to 1Mbps. To me out of the box 12Mbps should be the min rate, and there should be very clear sliders in the GUIs on APs which say "deployment density: low, medium, high, veryhigh" with low enabling 6Mbps, high setting 24 as the minimum, and very high setting 36.
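To put rough numbers on the slider idea, here is a minimal Python sketch. The low, high and very high values are the ones suggested above; the "medium" value of 12 Mbps is an assumption (borrowed from the proposed out-of-the-box default), and the names `MIN_RATE_MBPS` and `payload_airtime_us` are made up for illustration. The airtime figure counts payload bits only, ignoring preamble, ACKs and contention, so real frames take longer than this.

```python
# Hypothetical mapping from a "deployment density" slider to a minimum data rate.
MIN_RATE_MBPS = {
    "low": 6,
    "medium": 12,    # assumption: not stated above, taken from the proposed default
    "high": 24,
    "veryhigh": 36,
}


def payload_airtime_us(frame_bytes: int, rate_mbps: float) -> float:
    """Microseconds needed to send the payload bits alone at the given rate.

    1 Mbps is 1 bit per microsecond, so bits / Mbps gives microseconds.
    """
    return frame_bytes * 8 / rate_mbps


if __name__ == "__main__":
    for density, rate in MIN_RATE_MBPS.items():
        airtime = payload_airtime_us(1500, rate)
        print(f"{density:>8}: min {rate} Mbps, 1500 B payload ~ {airtime:.0f} us")
    # The legacy 1 Mbps floor, for comparison.
    print(f"  legacy: min 1 Mbps, 1500 B payload ~ {payload_airtime_us(1500, 1):.0f} us")
```

Even with overheads ignored, a single 1500-byte frame at 1 Mbps occupies the air twelve times longer than the same frame at 12 Mbps, which is the point of raising the floor.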

And the last hackathon has some improvements and openwrt packaging:

Still there should be another bug for this, I just need a good title.

MDNS MAKES YOUR WIFI NETWORK SUCK THIS IS THE FRIGGEN FIX.


@tohojo I'm going to nag a bit =) Any news on virtual airtime?

Yeah, sorta: it's not locking up any more, but it doesn't work either (as in, doesn't provide fairness). The good news is that I now have a test setup that can reproduce this, and I have some ideas that I need to try out. Will post an update as soon as I have something that (appears to be) working.

Pushed an update now to https://github.com/tohojo/openwrt/tree/virtual-airtime

It appears to provide fairness on ath9k most of the time, and on ath10k in cases where stations actually become backlogged (which, notably, doesn't include single TCP flows). And it doesn't lock up anymore.

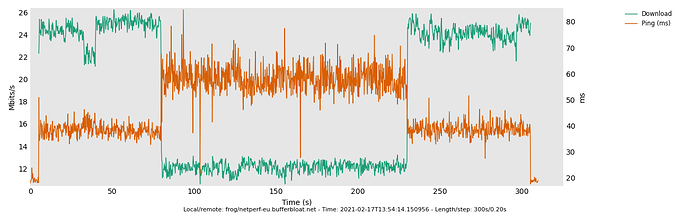

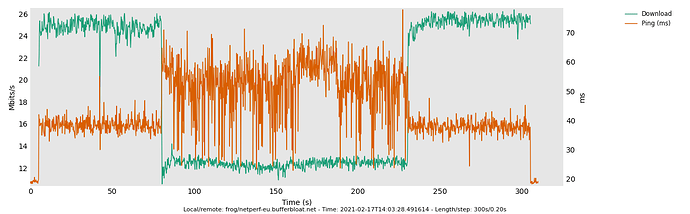

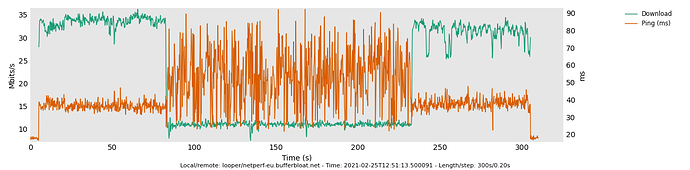

I did some tests with your virtual airtime patch to compare it to the DRR that's default in AQL right now. I found a place in my house where the signal level was low enough to cause the wireless connection to become the bottleneck, and I placed two laptops there that both ran a 4-stream TCP download. One laptop was set to run the test for 5 minutes while the other would start a 2.5 minute download in the middle of the 5 minute download of the first laptop. Here are the results:

First laptop (default AQL)

First laptop (virtual airtime AQL)

Second laptop (default AQL)

Second laptop (virtual airtime AQL)

I see some differences between the default AQL and virtual airtime AQL. Throughput for the first laptop is pretty much the same in both tests, but the ping has a different behaviour in the virtual airtime case. During the period when the second laptop is downloading the ping continually dips to lower values. I don't know if this is good or bad, but I guess less jitter would be better.

The throughput of the second laptop is more stable with virtual airtime, and the ping has the same features as in the tests of the first laptop. You can see that the ping also tends to drop to lower values in this case. During the default test it actually seems to do the opposite in that it occasionally jumps to higher values.

Hmm, that's interesting! I'm a bit wary of concluding anything on the basis of a single test, but at least it didn't blow up completely like before.

What firmware version did you use for the "before" test? The same build from master just without the virt-time patch, or is it also an older firmware?

Thanks for testing!

Both builds are based on OpenWrt master up to and including the following commit, and the virtual airtime build has your patch applied on top.

Right, great - just checking that they are really comparable results.

Looking at the graphs in a bit more detail, I'm not sure the scheduler actually succeeds in providing fairness. The throughput of the initial station drops to less than half, but some of that could be an efficiency drop due to worse aggregation. We'd need to grab the airtime data from the AP to get a better view of that; Flent can do this, but it doesn't work well over the same WiFi connection that you're measuring.

On the bright side, it doesn't perform worse either, so it doesn't appear to have broken anything. And the second laptop does appear to get better performance in the second run. I wonder how repeatable this is? Did you do just the one test or could you try repeating the whole thing and posting another set of graphs?

Are you sure about the lack of fairness? Looking at the results from the virtual airtime run, the first laptop initially has a throughput of ~25 Mbps, and when the second laptop starts its download the throughput is split between the two at ~12.5 Mbps each. The total throughput still remains ~25 Mbps. However, in the control test with default AQL there's something strange going on, with the second laptop jumping between ~12.5 and ~8.5 Mbps.

I only ran a single test. I need to figure out how to automate these things since the workload is quite high when you do these things manually. How can I get flent to look at airtime data from the AP?

Ohhhhh, right, I was looking at the wrong axis, silly me.

So this means that they both share pretty evenly, but considering that they are both similar devices at a similar distance from the AP, that doesn't have to be because the scheduler is working. One way to check whether it is, is to try to enforce an uneven distribution between the two by setting a station weight on one of them (on the AP):

`iw dev <devname> station set <MAC address> airtime_weight 512`

(the default weight is 256, so this should try to give one station twice the airtime of the other).

As for having Flent capture airtime statistics this is what I do:

`flent --test-parameter wifi_stats_hosts=root@10.42.3.19 --test-parameter wifi_stats_stations=ec:08:6b:e8:26:0a,dc:a6:32:cb:ea:49 --test-parameter wifi_stats_interfaces=wlp1s0 rtt_fair_var_up 10.44.1.102 10.44.1.146 -t "uneven weights ath9k no patch"`

where 10.42.3.19 is my AP, and of course the station MAC addresses and the interface name have to match what the AP uses. This will make Flent connect via SSH and poll the debugfs files to read the airtime as the test progresses. The -t argument makes a note of what it is that I'm running.

This also uses the rtt_fair_var_up test, which sends streams from the device running the test towards the stations. I.e., I run this on a machine that's on the other side of the AP, connected via Ethernet, and it will start flows towards the stations (the two IPs on 10.44.1). The clients should then be running a netserver instance to be able to serve as a test endpoint. Doing it this way saves some manual work, and some running to the clients to start tests.
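Since automating repeated runs came up, here is a small Python sketch that assembles the same Flent invocation from its parts. It only uses the options that appear in the command above; the helper name `build_flent_cmd` and its arguments are hypothetical, and the addresses are the example values from this thread, so substitute your own.

```python
import shlex


def build_flent_cmd(ap_ssh, station_macs, ap_iface, targets, title,
                    test="rtt_fair_var_up"):
    """Return the flent argv list for one airtime-capturing test run."""
    params = {
        "wifi_stats_hosts": ap_ssh,                     # SSH target for the AP
        "wifi_stats_stations": ",".join(station_macs),  # station MACs as seen by the AP
        "wifi_stats_interfaces": ap_iface,              # WiFi interface name on the AP
    }
    cmd = ["flent"]
    for key, value in params.items():
        cmd += ["--test-parameter", f"{key}={value}"]
    cmd.append(test)
    cmd += list(targets)      # one netserver endpoint per station
    cmd += ["-t", title]      # free-form note stored with the results
    return cmd


cmd = build_flent_cmd(
    ap_ssh="root@10.42.3.19",
    station_macs=["ec:08:6b:e8:26:0a", "dc:a6:32:cb:ea:49"],
    ap_iface="wlp1s0",
    targets=["10.44.1.102", "10.44.1.146"],
    title="uneven weights ath9k no patch",
)
print(shlex.join(cmd))
# To actually run it: subprocess.run(cmd, check=True)
```

Looping over a list of titles (say, one per repetition or per weight setting) and calling `subprocess.run` on each result would give repeated runs without the manual work.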

I note that I'm still trying to get on this myself...

So do we have to tune those airtime settings in snapshot? Or are the default values OK as well?

Does it only behave differently under congestion?

edit: does this also apply to ath9k on 2.4GHz?

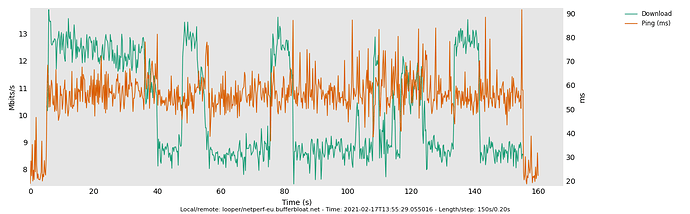

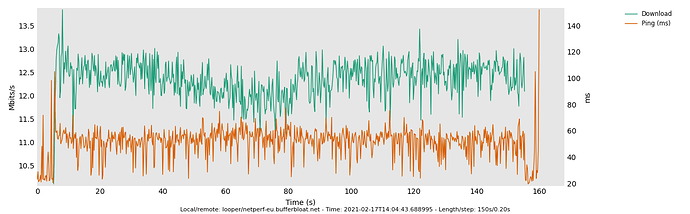

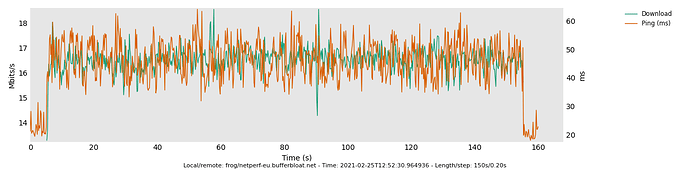

Unfortunately, I don't have enough clients to be able to run your suggested setup, but I have run another test. What I did was repeat the same test as in the previous case, but this time the laptop that starts downloading in the middle of the test of the other laptop has an airtime weight of 512. Here are the results:

First laptop (virtual airtime AQL)

Second laptop (virtual airtime AQL)

I don't quite know how the weights work, but if it's supposed to be the weight of the station divided by the total weight of all stations then it appears to be working. In this case we have a total weight of 512 + 256 = 768 which means that the second laptop should have ~2/3 of the bandwidth (and it does!).
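The arithmetic can be written out as a quick Python sketch, under the same assumption as above: each station's share is its weight divided by the sum of all weights. The function name and station labels are made up for illustration. Note that this is a share of airtime, and it only matters while both stations are backlogged; it translates into the same share of throughput only when both stations run at similar rates, as these two laptops do.

```python
from fractions import Fraction


def expected_airtime_shares(weights):
    """Map each station to weight / total weight (assumed weighting model)."""
    total = sum(weights.values())
    return {station: Fraction(weight, total) for station, weight in weights.items()}


# 256 is the default weight; the second laptop was set to 512 on the AP.
shares = expected_airtime_shares({"laptop1": 256, "laptop2": 512})
for station, share in shares.items():
    print(f"{station}: {share} of the airtime")
```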

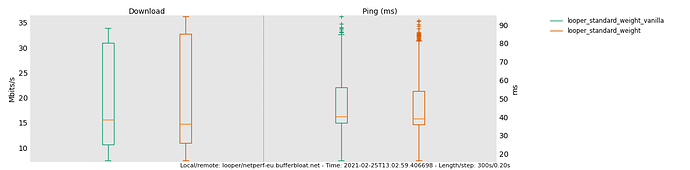

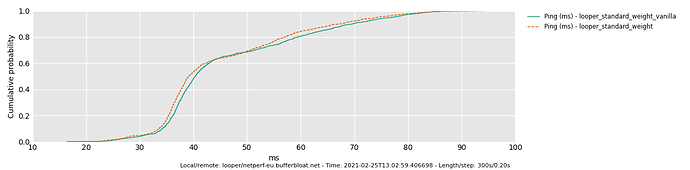

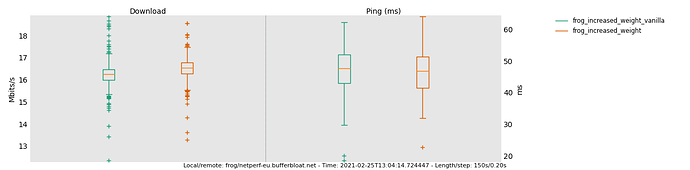

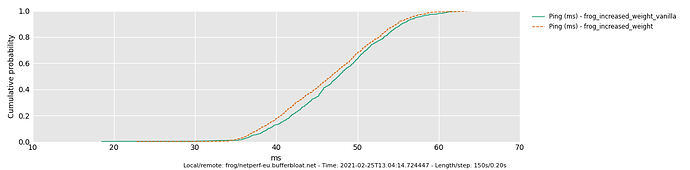

The test with the default DRR gives pretty much the same results. The box plots and CDF plots show that it's very similar (the vanilla suffix in the labels denotes the DRR test):

First laptop (box plot comparison between DRR and virtual airtime)

First laptop (CDF plot comparison between DRR and virtual airtime)

Second laptop (box plot comparison between DRR and virtual airtime)

Second laptop (CDF plot comparison between DRR and virtual airtime)

These results are based on single runs for each of the two cases, so take them for what they're worth.

The default is to give every station the same weight, which is probably what you want unless you have some specific requirements. See the Polifi paper for examples: https://arxiv.org/abs/1902.03439

Yes, it should.