I think there is one strange thing. As far as I can remeber the aql q depth was always round about 12000 for the iperf udp "flood" test. Now it is changing rapidly random betwenn 0 and 12000. Was something changed?

I've pushed the airtime fairness rework to master, along with some more improvements (commits 6d49a25804d78d639e08a67c86b26991ce6485d8 and 7bf5233a2a2b1c81fae2f3a85bb971ef2a7f9b27).

The quantum change in the last commit might help with cases where the AQL budget isn't fully utilized.

Is it possible that previously published fixes caused link errors due to missing definition in mac80211.h file?

I added patch (botton), and will tests.

--- a/include/net/mac80211.h

+++ b/include/net/mac80211.h

@@ -153,6 +153,7 @@

IEEE80211_AC_BE = 2,

IEEE80211_AC_BK = 3,

};

+#define IEEE80211_NUM_ACS 4

/**

* struct ieee80211_tx_queue_params - transmit queue configuration

In version OpenWrt 18.06 was a above definition, in 19.07 and later not was. This has been moved to the file linux/ieee80211.h. Definition IEEE80211_NUM_ACS is used in files cfg.c and main.c (information from patches), but to cfg.c file is included only linux/ieee80211.h and to file main.c is included only/net/mac80211.h. Sorry for not understanding the topic or thinking wrong, but I'm not a programmer, although I have some basics.

EDIT: First tests some very simmilar to my best from stable on 5.4.154 kernel (OpenWrt 21.02.1; NoTengoBattery v3.01 image)- link

EDIT2: Propably patches 336 and 337 they will also be helpful in some way...

Is without bug and better speed so far, ping is probably better for a small number of clients (I won't test it today because it's Sunday and there are only 3 devices connected).

I also encountered your errors on R7800 when running a 22.03 image from May 8 2022 (aka without any recent Felix' commits), with both AQL and ATF enabled.

dmesg | grep -i tx

[ 25.527953] debugfs: File 'c2c_tx' in directory 'stats' already present!

[ 2566.673679] ath10k_pci 0000:01:00.0: received unexpected tx_fetch_ind event: in push mode

[ 2566.673712] ath10k_pci 0000:01:00.0: received unexpected tx_fetch_ind event: in push mode

[ 2566.680934] ath10k_pci 0000:01:00.0: received unexpected tx_fetch_ind event: in push mode

[ 2566.689057] ath10k_pci 0000:01:00.0: received unexpected tx_fetch_ind event: in push mode

[ 2566.697231] ath10k_pci 0000:01:00.0: received unexpected tx_fetch_ind event: in push mode

[ 2566.705384] ath10k_pci 0000:01:00.0: received unexpected tx_fetch_ind event: in push mode

[ 2566.713473] ath10k_pci 0000:01:00.0: received unexpected tx_fetch_ind event: in push mode

[ 2566.721696] ath10k_pci 0000:01:00.0: received unexpected tx_fetch_ind event: in push mode

[ 2566.729846] ath10k_pci 0000:01:00.0: received unexpected tx_fetch_ind event: in push mode

[ 2566.738031] ath10k_pci 0000:01:00.0: received unexpected tx_fetch_ind event: in push mode

# uname -a

Linux 5.10.113 #0 SMP Sun May 8 13:53:12 2022 armv7l GNU/Linux

# cat /etc/os-release

NAME="OpenWrt"

VERSION="22.03-SNAPSHOT"

ID="openwrt"

ID_LIKE="lede openwrt"

PRETTY_NAME="OpenWrt 22.03-SNAPSHOT"

VERSION_ID="22.03-snapshot"

HOME_URL="https://openwrt.org/"

BUG_URL="https://bugs.openwrt.org/"

SUPPORT_URL="https://forum.openwrt.org/"

BUILD_ID="r19338+20-ae64d0624c"

OPENWRT_BOARD="ipq806x/generic"

OPENWRT_ARCH="arm_cortex-a15_neon-vfpv4"

OPENWRT_TAINTS="busybox"

OPENWRT_DEVICE_MANUFACTURER="OpenWrt"

OPENWRT_DEVICE_MANUFACTURER_URL="https://openwrt.org/"

OPENWRT_DEVICE_PRODUCT="Generic"

OPENWRT_DEVICE_REVISION="v0"

OPENWRT_RELEASE="OpenWrt 22.03-SNAPSHOT r19338+20-ae64d0624c"

Maybe problems with airtime fairness, pings, speed or other things are not so much problems with airtime, but with omissions or unnecessary corrections... maybe patches type link are not needed at all (clarity sometimes not indicated) and, for example, link- old junk from prehistory may be helpful in development. To solve the problem sometimes you have to dig deeper. I would like to use this second patch from the post ![]() - similar elements are in the

- similar elements are in the cfg.c file (IEEE80211_NUM_ACS) but I do not know if it makes sense to play.

Hi Dave,

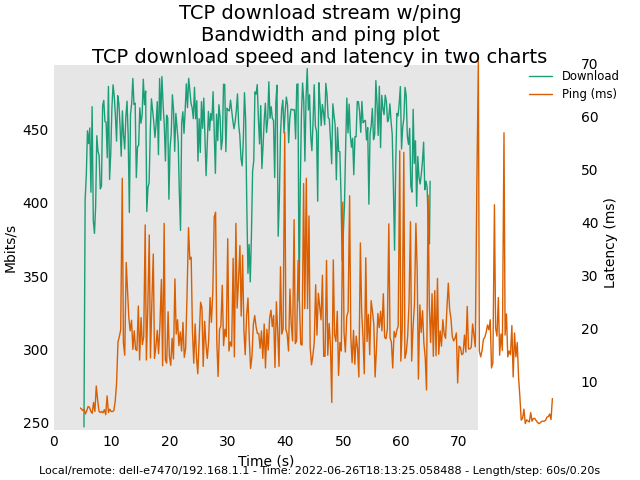

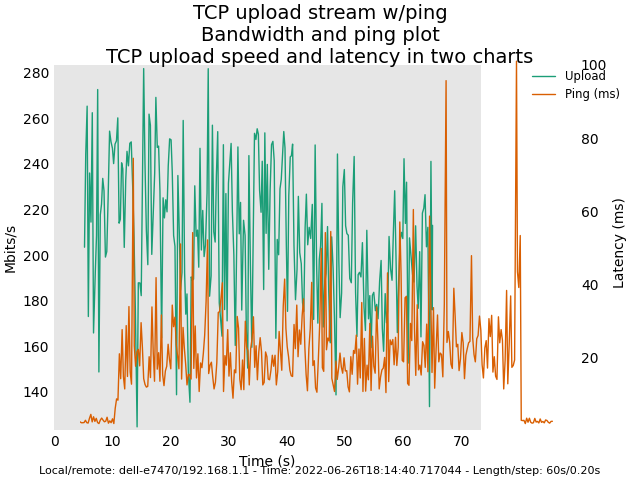

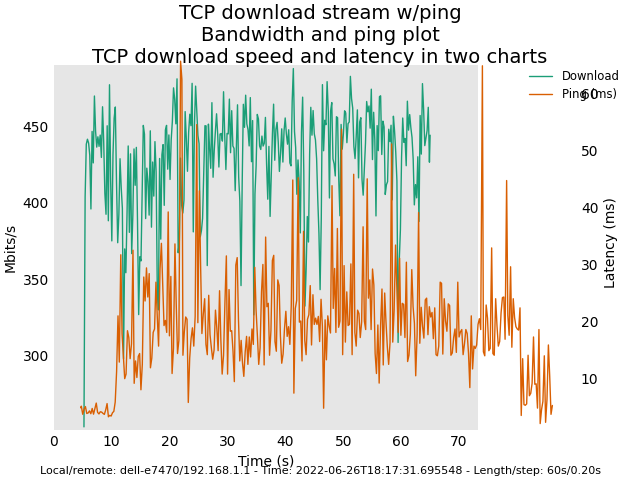

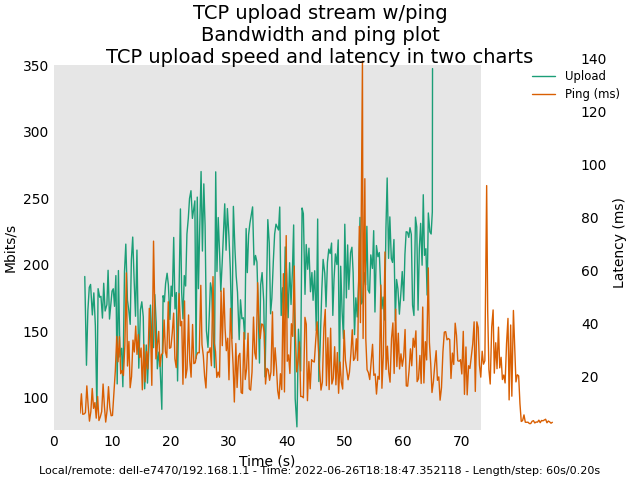

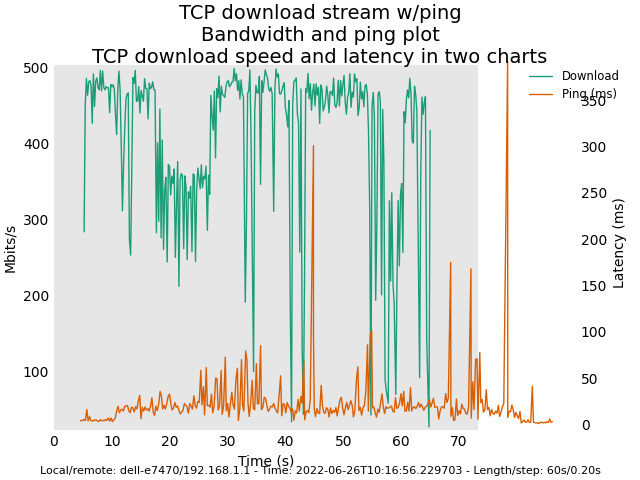

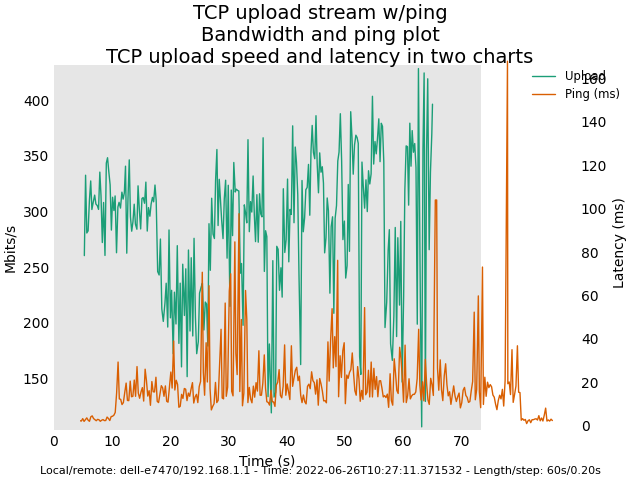

Here are some plots for you. Latency plots for original AQL / VTBA look smoother than the new AQL / RR. However, AQL / VTBA may exhibit extremely high latency in corner cases (e.g. clients leaving the WIFI network etc.). My tests for the new AQL/RR in the next several days is to observe whether the high latency problem would no longer exist in these corner cases.

There were 12 associated 5GHz clients. 3 of them were streaming Youtube and the rest was mostly idling.

For all these tests, I used vanilla ath10k instead of ath10k-ct.

Edit 1: Looking at the plots more carefully, it seems the latency for the previous AQL/VTBA may look smoother just because its latency scale range is bigger, so it flattens the fluctuations to some extent.

Edit 2: As we can see, the reason for the much bigger scale range for latency in the plots for the previous AQL/VTBA implementation is the random drastic spikes in latency during the test runs. These big spikes rendered the latency scale range quite larger in order to accommodate them in the plots. In other words, the previous AQL/VTBA may look superficially good but not necessarily better than the new AQL/RR. I will try much longer runs (each of the shown plots was about 70 seconds in length) to see if the large latency spike occurrences are more or less suppressed in the new AQL/RR.

Master snapshot (Sunday 26 2022) with Felix' commits (revert back to deficit round-robin scheduler)

======

1st try:

======

2nd try:

22.03 snapshot (May 8 2022) with default AQL/ATF enabled

======

1st try:

=======

2nd try:

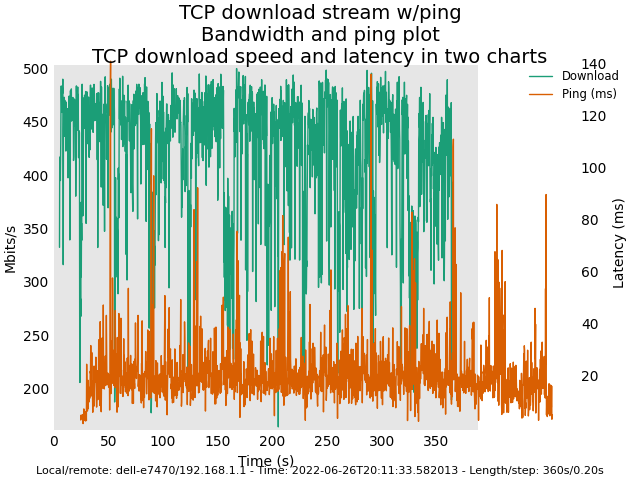

The following plots were from longer run times: 6 minutes per download and per upload.

Image: Master snapshot (Sunday 26 2022) with Felix' commits (revert back to deficit round-robin scheduler)

Hi Felix,

I have tried the master snapshot with all your most recent commits, and so far I have not observed any extreme latency (that might last a few minutes) when clients were leaving the WIFI network. I have no longer seen log messages similar to the following in such cases.

Wed Mar 9 09:45:51 2022 kern.warn kernel: [150007.211061] ath10k_pci 0000:01:00.0: failed to lookup txq for peer_id 16 tid 0

Wed Mar 9 09:45:51 2022 kern.warn kernel: [150007.218685] ath10k_pci 0000:01:00.0: failed to lookup txq for peer_id 16 tid 7

However, I have still seen some of the following log messages with the latest commits (also seen in past builds prior to your recent commits). Unfortunately, I have not yet been able to associate them to any particular event.

[14509.223048] ath10k_pci 0000:01:00.0: received unexpected tx_fetch_ind event: in push mode

[14509.223080] ath10k_pci 0000:01:00.0: received unexpected tx_fetch_ind event: in push mode

Thanks so much for all your great contributions to the betterment of FOSS.

Not answering on behalf of @nbd here, but the recent commits are all on the mac80211 driver code. The logs shown are from the ath10k driver. So the issues or logs thrown by ath10k will continue until that has been identified and fixed.

For me, my R7800 (running 21.02 and mainline ath10k driver) and my E8450 (running master) is not experiencing high latency often (still see them once in a while) but it is not any difference from when before the switch to VTBA scheduler, so it looks like the patch is working. I have yet to try the latest patches committed into master, only those in @nbd's staging tree. Since then, there's additional fixes commited into master with a later mt76 commit as well, so I'll be trying that with E8450 and my R7800 as well.

Thanks for testing. I have now backported the changes to the 22.03 branch as well, since it's an obvious improvement compared to the VTBA code.

thx for using flent!!

those look a great deal better, also showing a clear periodicity in the longer trace. It looks to me that a 200sec tests will suffice to show that. (as to why it is there, don't know - multicast? probe? other traffic? aqm acting up? rate controller? Gravitational flux?)

you can get finer granularity with --step-size=.05. It's helpful for comparison plots if you either scale them the same or put the two plots on the same graph with different names via -t the_test_conditions when you take them.

flent-tools running on the router (setup with ssh), can poll the aqm stats. @toke_dk ?

See how less noisy my data was 6 years ago?

Only had 2 machines at close range on that network. And that was only achieving 60Mbits. I am inclined to suggest lowering the max rate advertised to see if the perodicity remains. I started off this whole bug by only allowing mcs1 - mcs4 (I think!)

when I started this bug I was using -ct-shortbuffers

Continuing from AQL and the ath10k is lovely:

The speed also drops drastically as there are more clients - no matter if they are active or not ... Ping is smaller but not stable.

But this is not this... Six clients and block all in short time.

39.043137] ath10k_ahb: added a new wireless network interface: wlan0 [phy0]

[38781.533558] usb usb2-port1: Cannot enable. Maybe the USB cable is bad?

[78111.449574] ath10k_ahb a000000.wifi: Invalid peer id 283 peer stats buffer

[91846.621269] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 463 tid 0

[91846.621487] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 463 tid 0

[91846.627560] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 463 tid 0

[91846.634848] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 463 tid 0

[91846.642297] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 463 tid 4

[91846.649408] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 463 tid 4

[91846.656658] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 463 tid 4

[91846.663985] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 463 tid 4

[91846.671276] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 463 tid 0

[91846.678559] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 463 tid 0

[91916.808358] ath10k_warn: 102 callbacks suppressed

[91916.808521] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 287 tid 3

[91916.812232] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 287 tid 3

[91916.819333] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 287 tid 3

[91916.826620] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 287 tid 3

[91916.834339] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 287 tid 0

[91916.841251] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 287 tid 0

[91916.848540] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 287 tid 0

[91916.855757] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 287 tid 0

[91916.863122] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 287 tid 3

[91916.870410] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 287 tid 0

[92166.985614] ath10k_warn: 319 callbacks suppressed

[92166.985788] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 291 tid 0

[92166.989482] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 291 tid 0

[92166.996563] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 291 tid 0

[92167.003831] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 291 tid 0

[92167.011352] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 291 tid 4

[92167.018442] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 291 tid 4

[92167.025731] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 291 tid 4

[92167.033023] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 291 tid 4

[92167.040281] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 291 tid 0

[92167.047600] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 291 tid 0

[92247.485593] ath10k_warn: 110 callbacks suppressed

[92247.485661] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 296 tid 4

[92247.489474] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 296 tid 4

[92247.496707] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 296 tid 4

[92247.503944] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 296 tid 4

[92247.511703] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 296 tid 0

[92247.518463] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 296 tid 0

[92247.525853] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 296 tid 0

[92247.533109] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 296 tid 0

[92247.540333] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 296 tid 4

[92247.547691] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 296 tid 4

[92356.123155] ath10k_warn: 510 callbacks suppressed

[92356.123324] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 298 tid 0

[92356.127033] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 298 tid 0

[92356.134129] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 298 tid 0

[92356.141429] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 298 tid 0

[92356.149120] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 298 tid 0

[92356.155987] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 298 tid 0

[92356.163350] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 298 tid 0

[92356.170637] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 298 tid 0

[92356.177848] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 298 tid 0

[92356.185233] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 298 tid 0

[92418.158329] ath10k_warn: 82 callbacks suppressed

[92418.158497] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 299 tid 0

[92418.162199] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 299 tid 0

[92418.169221] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 299 tid 0

[92418.176509] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 299 tid 0

[92492.669923] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 300 tid 0

[92492.670144] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 300 tid 0

[92492.676213] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 300 tid 0

[92492.683725] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 300 tid 3

[92492.690832] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 300 tid 3

[92492.698125] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 300 tid 3

[92492.705344] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 300 tid 3

[92492.712706] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 300 tid 0

[92492.719994] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 300 tid 0

[92492.727276] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 300 tid 0

[92510.540171] ath10k_warn: 65 callbacks suppressed

[92510.540343] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 301 tid 0

[92510.544042] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 301 tid 0

[92510.551064] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 301 tid 0

[92510.558348] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 301 tid 0

[92510.566015] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 301 tid 0

[92510.572986] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 301 tid 0

[92510.580270] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 301 tid 0

[92510.587562] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 301 tid 0

[92510.594916] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 301 tid 0

[92510.602662] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 301 tid 0

[92576.659154] ath10k_warn: 43 callbacks suppressed

[92576.659321] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 302 tid 0

[92576.663020] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 302 tid 0

[92576.670044] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 302 tid 0

[92576.677336] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 302 tid 0

[92576.685038] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 302 tid 0

[92576.691962] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 302 tid 0

[92576.699252] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 302 tid 0

[92576.706452] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 302 tid 0

[92576.713721] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 302 tid 0

[92576.721031] ath10k_ahb a000000.wifi: failed to lookup txq for peer_id 302 tid 0

I will test @nbd patches new (from number 330 to 336 and 337) now...

I've always had the errors above on my R7800, my dmesg is full of them (on mainline driver and firmware, not ct). They seem to be harmless, but maybe they do point to issues that are not immediately noticeable.

ipq40xx (ea6350v3) may behave differently as ipq806x....

It throws errors and there is blocking of wireless users....

Brief testing of the new patches- it's better in terms of latency, but the speed is just as crappy

Hi @nbd, I noticed that the ATF/AQL codes manages the airtime by each station's Access Categories (AC) queues. Wouldn't this results in certain AC queues using excessive airtime? If a station has loads of packet in each of the 4 AC queues, would this result in one station using more than it's fair share of airtime?

I would think that airtime should be accounted for per station, regardless of AC and for each station, the packets in each of AC queues getting sent out by weighted priority in round-robin fashion, e.g. VO > VI > BE > BK, where each AC queue will at least get one of their packets sent when it's the station's turn to transmit, provided it has enough deficit.

Hope I'm making sense above.

Oh, you noticed that. I was always demonstrating how bad things could still get if you actually used all four queues, as part of the unfunded phase II of the make-wifi-fast project. There is another, more devastating queue, the multicast queue, which at the time had unlimited depth (it's been 6+ years, maybe this was addressed), where the standard had always said to rate limit and "spread out somehow".

What queue gets used is not as simple as weighting them via priority in sw, you have to have them "in the hardware" to win the airtime election ( https://en.wikipedia.org/wiki/IEEE_802.11e-2005 ) which is over a very precise 10us guard interval.

So great! to do this, we end up with 4 queues in hw, 4 ready to go, at a loss in "smartness" as to what to schedule next. A compromise might have been only filling two - at most - hw queues at a time.

In practice, until recently (see qosify), the other access classes were largely unused. Multicast was (and IMHO remains) the biggest problem backward compatible wifi has. I like to think that fq_codel derived, BE scheduling only, doing packet aggregation well, is the right approach for APs, but that clients could benefit from the VI class especially. We showed excellent VOIP MOS with just BE in "ending the anomaly".

This does not mean I'm still right, particularly for wifi-6. I still hath no knowledge of a working gang scheduler on that front tho.

Hmm ... wireless traffic is indeed a complicated subject.

From what I understand of the ath10k driver code, it looks like it will push frames to the firmware according to how mac80211 controls it via the transmission queues ATF/AQL algo. So if mac80211 pushes frames in order of the AC priority, I would think the ath10k firmware will similarly push it out in the same relative order. I'm just guessing here, as I have no idea how the firmware operates.

Edit: Re-reading what you wrote made me realised that it is more complicated than that. With a single AC, there may be multiple streams of traffic, where fq_codel will help. So in additional to ATF/AQL, there's also fq_codel to split packets into streams to ensure all streams have their fair share of traffic. This is indeed complicated.

FWIW, I do see a change with commit 6d49a25 (I still need to load an image the that includes the latest patch 7bf5233a "improve the quantum doohickey" to see if that affects me so don't jump on this).

The throughput streaming from the AP to a fast client is now almost half what I've observed before (~270 mbps comparted to ~500 mbps - even when it is the only client on the 5 GHz band btw). Interestingly, streaming from the same client to the AP is unchanged at about 500 mbps.

EDIT Restarting the client (it's a mac) fixed the slowdown. AP uptime is almost 5 days. Once I get an updated image on, I'll report back if this happens again.