Not sure. I like and use htop though?

As far as I know, htop doesn't monitor free /tmp space, so I just monitored it via the LuCI status page...
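To watch /tmp and RAM together from an SSH session instead of LuCI, something like the following might help. It is only a minimal sketch: the function name `tmp_and_mem_kb` is made up, and it assumes a Linux `/proc/meminfo` with a `MemAvailable` line and a `df` that accepts `-P` (POSIX one-line output). Both should hold for BusyBox on OpenWrt, but that is worth double-checking.

```sh
#!/bin/sh
# Hypothetical helper (not part of adblock-lean): print free /tmp space and
# available RAM, both in kB, on one line.
tmp_and_mem_kb()
{
	# df -P keeps each filesystem on one line; column 4 is "Available" in 1K blocks
	tmp_free=$(df -Pk /tmp | awk 'NR == 2 {print $4}')
	# MemAvailable in /proc/meminfo is already reported in kB
	mem_avail=$(awk '/^MemAvailable:/ {print $2}' /proc/meminfo)
	echo "tmp_free_kb=$tmp_free mem_available_kb=$mem_avail"
}
```

Running `while sleep 2; do tmp_and_mem_kb; done` in a second terminal while the blocklist is processed would log both values every two seconds.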

And the winner is...... echo awk

Same memory usage as awk alone (approx. 2.5 times the blocklist file size), but no increase at all in /tmp usage.

@a-z Please don't bother trying gawk inplace; it uses looooots of memory. Apologies.

Would you mind doing a real-world test of echo awk on 23.05.2 with both blocklists that caused you issues previously?

```sh
echo "$(awk '!seen[$0]++' /tmp/blocklist)" > /tmp/blocklist
```
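The reason this one-liner doesn't wipe the file is ordering: the `$(...)` substitution runs awk to completion and keeps its output in the shell's memory before the `>` redirection truncates the file. That would also explain why /tmp usage doesn't grow, since no second copy of the list is written there. Here it is wrapped in a hypothetical `dedup_in_place` function; a sketch of the idea, not adblock-lean's actual code.

```sh
#!/bin/sh
# Hypothetical wrapper around the "echo awk" one-liner.
dedup_in_place()
{
	# $(...) finishes first, so awk reads the complete file. By contrast,
	#   awk '!seen[$0]++' file > file
	# truncates the file before awk starts, so awk would read an empty file.
	# Caveat: $(...) drops trailing newlines and echo adds back exactly one;
	# some shells' echo also interprets backslashes, which domain lists don't contain.
	echo "$(awk '!seen[$0]++' "$1")" > "$1"
}
```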

I made the changes, but it hangs, and the echo change didn't even show up.

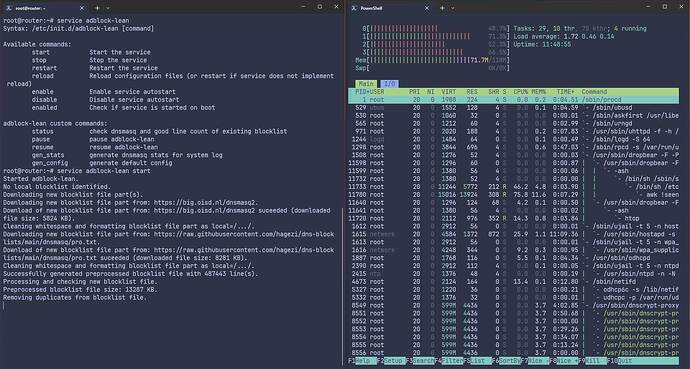

Using the normal script with the OISD and Hagezi Multi Pro lists, RAM usage didn't rise too much, but I don't think htop shows all of the memory use. All 4 cores were busy removing duplicates when it happened.

It's not a complete hang, because I still have internet; it's just that the terminals are stuck, and if I try to reach the router via the LuCI GUI at 192.168.1.1, the page is inaccessible.

After 5 to 7 minutes I was able to get into the shell and LuCI again, and the adblock-lean service is running.

I think the new version of OpenWrt consumes more hardware resources than the previous one, and that is why this router in particular struggles after downloading both lists, while deleting and cleaning the files.

Pity! I had hoped this would turn out to be our magic bullet.

I think it worked well, but in this particular case the recommendation would be to limit the blocklists. It works very well for me with Hagezi Light, which is less than 5 MB with 60k domains, or Hagezi Multi Pro, which is around 8 MB with 160k domains. I still have 20 MB free for other services. Honestly, it was a good idea to keep the lists open and optional.

Perhaps later the steps for organizing and joining multiple lists could be restructured, for example by splitting those processes up so that they don't consume all the resources of routers with limited hardware, even if that takes a little longer. But that would mean restructuring the script, and I don't see it as urgent or a priority.

Perhaps the README could be expanded to cover routers with limited hardware, e.g. suggesting smaller, lighter lists.

I'll also be running:

- WireGuard Client/Peer

- SQM for Guest Wifi

- DNS Encrypt to Hijack DNS to work with Adblock-Lean

- Travelmate

and I want to test them with Tailscale, which will consume more RAM.

Thanks @a-z for testing these options, even if they didn't work out. Good suggestion about adding, e.g., a recommended blocklist for low-RAM devices.

Hi, I just created an account to add some more options for these memory problems. I have some experience in shell scripting and wrote a very simple version of adblock-lean with minimal memory usage. To eliminate the temp files, I used pipes.

You can even pipe shell functions. All files written to /tmp are compressed. Unfortunately, dnsmasq can't read gz files and ignores named pipes, so at least the final config has to be stored there uncompressed.
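To illustrate piping shell functions: a function whose body reads stdin and writes stdout can sit in a pipeline just like an external filter. A minimal sketch with two made-up stages (`strip_comments`, `to_lower`) and a hypothetical `demo_pipeline` wrapper; the intermediate result only lands in the file gzip-compressed.

```sh
#!/bin/sh
# Hypothetical stages: each reads stdin and writes stdout
# grep exits 1 when nothing is left to print; treat only 2+ as an error
strip_comments() { grep -v '^#' || [ $? -eq 1 ]; }
to_lower() { tr 'A-Z' 'a-z'; }

# Functions chain with | exactly like commands; $1 is the compressed temp file
demo_pipeline()
{
	strip_comments | to_lower | gzip > "$1"
	# stream it back out, decompressing on the fly
	gunzip -c "$1"
}
```

For example, `printf '# comment\nAds.Example.COM\n' | demo_pipeline /tmp/demo.gz` prints `ads.example.com`.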

As written, it is quite a simple script, mostly without error handling. It's not meant for real use, just to give some ideas on how to solve those memory issues.

On my Archer C7 v4, with both default lists, it took about 100 seconds including download time.

Feel free to test it and send me feedback or questions. Cheers!

Script

```sh
#!/bin/sh

# Fail if any pipe member fails, not only if the last pipe member fails:
# ( false | true; echo $? ) # -> 0
# ( set -o pipefail; false | true; echo $? ) # -> 1
set -o pipefail

# working directory for the compressed intermediate files
mkdir -p /tmp/abt

exit_on_err()
{
	[ "$1" -ne 0 ] && { echo "ERROR $1: $2" >&2; exit "$1"; }
	echo "OK: $2" >&2
}

dl_url()
{
	# derive a file name from the URL by stripping URL punctuation
	fn=$(echo "${1}" | tr -d "[/:.?&=]")
	echo "start DL of $1" >&2
	# store download output to a compressed file (input capped at 10 MB)
	uclient-fetch "${1}" -O- --timeout=2 2> /tmp/abt/uclient-fetch_err | head -c 10m | gzip > "/tmp/abt/${fn}.new.gz"
	res=$?
	if [ $res -eq 0 ]
	then
		mv "/tmp/abt/${fn}.new.gz" "/tmp/abt/${fn}.gz"
		grep "^Download completed" /tmp/abt/uclient-fetch_err >&2
	else
		# log to stderr: stdout carries the list data through the pipeline
		echo "dl_url FAILED: $1" >&2
		rm -f "/tmp/abt/${fn}.new.gz"
		# fall back to the previous download if one exists
		[ -f "/tmp/abt/${fn}.gz" ] && res=0
	fi
	exit_on_err $res "dl_url $1"
	# download ok or backup file there: keep the file and send its contents to stdout
	gunzip -c "/tmp/abt/${fn}.gz"
}

dl_all_urls()
{
	echo "start DL LIST" >&2
	for url in https://big.oisd.nl/dnsmasq2 https://raw.githubusercontent.com/hagezi/dns-blocklists/main/dnsmasq/pro.txt
	do
		dl_url "$url"
	done
	echo "end DL LIST" >&2
}

clean_list()
{
	# keep only the domain from local=/domain/... or address=/domain/... lines, lowercased
	sed -n "s~^\(local\|address\)=/\([^/]*\)/.*~\2~p" | sed "y/ABCDEFGHIJKLMNOPQRSTUVWXYZ/abcdefghijklmnopqrstuvwxyz/"
}

remove_duplicate()
{
	echo "start dedup" >&2
	# print each line only the first time it is seen
	awk '!seen[$0]++'
	rc=$?
	echo "end dedup" >&2
	# return awk's status, not echo's, so pipefail can see a failure
	return $rc
}

remove_allow()
{
	echo "start remove allow" >&2
	if [ -f /tmp/abt/allowlist ]
	then
		# grep exits 1 when no lines are left; only 2+ is a real error
		grep -F -x -v -f /tmp/abt/allowlist || [ $? -eq 1 ]
	else
		cat
	fi
	rc=$?
	echo "end remove allow" >&2
	return $rc
}

add_blacklist()
{
	echo "start add block" >&2
	cat
	[ -f /tmp/abt/blocklist ] && cat /tmp/abt/blocklist
	echo "end add block" >&2
}

format_final_list()
{
	# local=/domain/ with no server: dnsmasq answers locally and never forwards
	# (local=/domain/# would mean "use the standard servers", i.e. no blocking)
	sed 's~^.*$~local=/&/~'
}

echo "start OVERALL" >&2
dl_all_urls | clean_list | remove_duplicate | remove_allow | add_blacklist | format_final_list | gzip > /tmp/abt/dnsmasq.new.gz
res=$?
if [ $res -eq 0 ]
then
	mv /tmp/abt/dnsmasq.new.gz /tmp/abt/dnsmasq.gz
	# dnsmasq can't read gzip files, so the final config is stored uncompressed
	gunzip -c /tmp/abt/dnsmasq.gz > /tmp/dnsmasq.d/adblock-lean
	/etc/init.d/dnsmasq restart
	echo "finished OVERALL with success" >&2
else
	rm -f /tmp/abt/dnsmasq.new.gz
	echo "finished OVERALL with ERROR $res" >&2
fi
exit $res
```

Super cool! I really like the way you pipe those functions together. Does working in the compressed space not eat up more CPU cycles? So for devices with more memory, the downside would be longer processing time?

That is a nifty idea! Did you by any chance check the total peak memory usage for both methods? It would be great to compare.

Hi, yes, but that is the idea: save some RAM at the cost of more CPU work. We could add a config option like "RAM_VS_CPU".

E.g. 100 means try hard to save RAM, and 0 means try hard to save CPU.

In each function, we could add different code paths depending on what is most important to the user, with the default set to save RAM...
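RAM_VS_CPU is only a proposal at this point, but here is a sketch of how one step, storing an intermediate list, could branch on it. The variable, the threshold of 50 and the helper names `store_tmp`/`load_tmp` are all hypothetical.

```sh
#!/bin/sh
# Hypothetical option: 100 = try hard to save RAM, 0 = try hard to save CPU
RAM_VS_CPU=${RAM_VS_CPU:-100}

# Write stdin to the intermediate file $1, compressed when saving RAM is preferred
store_tmp()
{
	if [ "$RAM_VS_CPU" -ge 50 ]
	then
		gzip > "$1.gz"
	else
		cat > "$1"
	fi
}

# Read back whichever form store_tmp wrote, using the same threshold
load_tmp()
{
	if [ "$RAM_VS_CPU" -ge 50 ]
	then
		gunzip -c "$1.gz"
	else
		cat "$1"
	fi
}
```

Deciding by the setting rather than by which file exists avoids picking up a stale file left over from a run with a different setting.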

So I need some more knowledge of how tmp files work. When a command like awk works on a temp file, doesn’t it work directly on that file rather than copying it into memory? If so, how does piping gunzip into awk save memory? I’m asking out of ignorance here.

So, currently, the line

```sh
awk '!seen[$0]++'
```

takes the most RAM, because it holds one uncompressed copy of the deduplicated list in RAM. I'm thinking of better solutions, but this could be tricky. The idea is that there is a lot of redundancy in, e.g.,

ad.abc.xyz.com

and ad.xyz.us

so we could store ad and xyz only once in memory. But to put things back together later, you need some extra information, and that costs RAM too...
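A rough awk sketch of that idea, purely to show the bookkeeping involved: each distinct label gets an id, and every domain is kept as its sequence of ids. The function name `intern_labels` is hypothetical, and this is not a claim that it saves memory in awk, where each id sequence is itself stored as a string.

```sh
#!/bin/sh
# Hypothetical sketch: store each distinct label once, domains as id sequences
intern_labels()
{
	awk '
	{
		n = split($0, parts, "[.]")
		enc = ""
		for (i = 1; i <= n; i++) {
			# first time this label appears: assign it the next id
			if (!(parts[i] in id)) { id[parts[i]] = ++nlabels; label[nlabels] = parts[i] }
			enc = enc (i > 1 ? " " : "") id[parts[i]]
		}
		# this id sequence is the extra info needed to rebuild the domain
		dom[NR] = enc
	}
	END {
		printf "%d domains, %d distinct labels\n", NR, nlabels
		# rebuild every domain from its label ids
		for (r = 1; r <= NR; r++) {
			n = split(dom[r], ids, " ")
			out = ""
			for (i = 1; i <= n; i++) out = out (i > 1 ? "." : "") label[ids[i]]
			print out
		}
	}'
}
```

With the two example domains as input, it reports `2 domains, 5 distinct labels` (ad, abc, xyz, com, us) and then prints both domains back unchanged.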

So I think not: awk reads a file, and it does not matter whether the file is on /tmp or not. It does its processing and writes its output; you redirect that to a tmp file again, but you could just as well store it on a USB stick...

As far as I know, /tmp lives in RAM and is limited by default to about 50% of it. Whatever /tmp doesn't use is available for running programs like awk etc...

Is zram-swap not an option?

The awk seen command uses the most memory of all the functions used here. I'm not sure a compressed file will actually reduce awk's memory usage for this particular function, but it is worth checking total peak memory use both ways.
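To compare peak memory on the router without extra packages, one option is to sample the kernel's VmHWM ("high water mark" of resident memory) from /proc while the command runs. A rough sketch: `peak_rss_kb` is a made-up name, it is Linux-only, it measures a single process rather than a whole pipeline, and `sleep 0.1` assumes your `sleep` accepts fractions (use `sleep 1` otherwise).

```sh
#!/bin/sh
# Hypothetical helper: run a command in the background and print its peak
# resident memory in kB, sampled from VmHWM in /proc/<pid>/status.
peak_rss_kb()
{
	"$@" > /dev/null &
	pid=$!
	peak=0
	# The loop ends when the status file is gone or has no VmHWM line,
	# which is the case once the process has exited (zombie or reaped).
	while v=$(awk '/^VmHWM:/ {print $2}' "/proc/$pid/status" 2>/dev/null) && [ -n "$v" ]
	do
		[ "$v" -gt "$peak" ] && peak=$v
		sleep 0.1
	done
	wait "$pid"
	echo "$peak"
}
```

For example, `peak_rss_kb awk '!seen[$0]++' /tmp/blocklist` reports awk's peak in kB. Because VmHWM is already a running maximum, polling only misses growth after the last sample. Wrapping a whole pipeline in `sh -c` would measure the shell, not awk.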

I believe awk builds an in-memory hash table of every distinct line it has seen, instead of doing straight byte comparisons, which is also why it's so much faster than pure byte-comparison methods.

I could try a sed method to remove duplicates, only for low-RAM devices, but I'd have to check whether it even saves memory first. And it will be slower than awk by quite a margin.

Things will get better with it, but with some more effort on the code, we could do even better than with zram.

seen is not a command, it is simply an array: each line is stored in the array, and if it was not already there, the line is printed. That is how this simple code works.

Instead of seen, you could also write "S" or "some_array".

Yes correct, seen just happens to be the commonly used variable name for this array and function.
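Spelled out, `awk '!seen[$0]++'` behaves like the longer program below, wrapped here in a hypothetical `dedup_verbose` function. The short form works because `seen[$0]++` returns the old count, which is 0 (false) the first time a line appears; `!` turns that into true, so awk's default action, printing the line, runs.

```sh
#!/bin/sh
# Hypothetical long-hand version of awk '!seen[$0]++'
dedup_verbose()
{
	awk '
	{
		# an element that was never set compares equal to 0
		if (seen[$0] == 0)
			print
		# count this occurrence so later copies are skipped
		seen[$0]++
	}'
}
```

`printf 'a\nb\na\nc\nb\n' | dedup_verbose` prints `a`, `b` and `c`, keeping the order of first appearance.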

Ah, I think I get the gunzip approach now. The point is that, by working with pipes, gunzip doesn't uncompress the whole thing at once; it uncompresses chunk by chunk as awk processes the data.
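In its simplest form the streaming approach looks like this (hypothetical `dedup_gz` helper): gunzip writes into the pipe and blocks whenever awk hasn't consumed it yet, so the uncompressed text is never written to /tmp. Note that awk's `seen` array still ends up holding every distinct line uncompressed, so only the input and output streams are chunked.

```sh
#!/bin/sh
# Hypothetical helper: dedup gzip file $1 into gzip file $2, streaming
dedup_gz()
{
	# only compressed data touches the filesystem; the text flows through pipes
	gunzip -c "$1" | awk '!seen[$0]++' | gzip > "$2"
}
```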

Yes. As far as I know, each process has a small input and output buffer (about 4 kB each), plus a pipe buffer inside the kernel (64 kB by default on Linux), so only around 70 kB is in flight per pipe at any time, however big the list is.