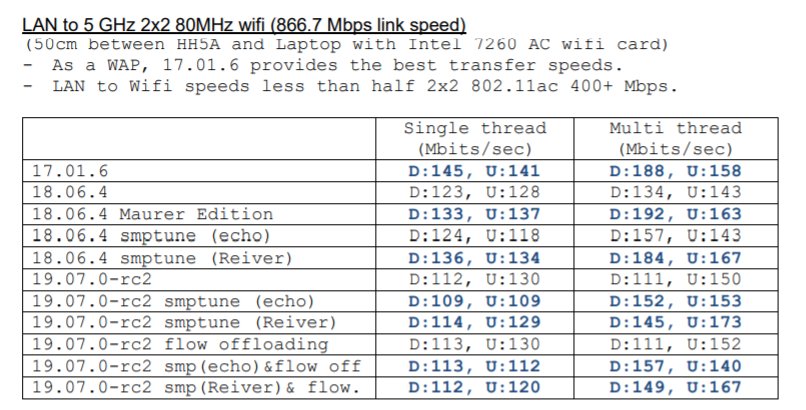

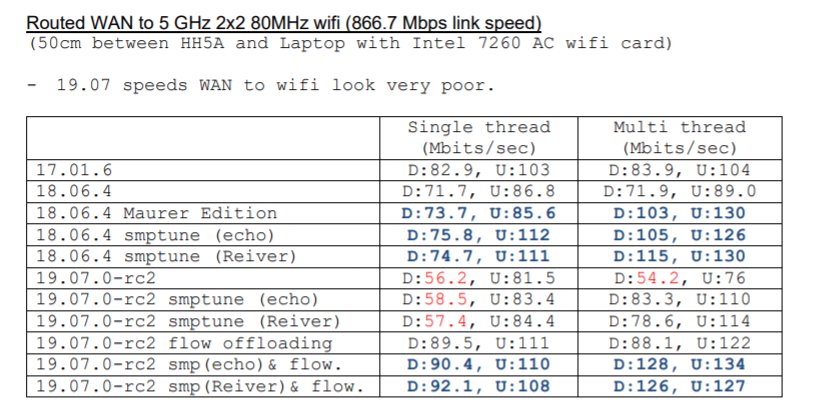

For anyone who is interested, I ran iperf3 against LEDE 17.01.6, 18.06.4 and 19.07.0-rc2, with and without software flow offloading and fixes for the 20-smp-tune script (20 line untested fix). The iperf3 endpoints were Windows 10 laptops. The server was connected to the red WAN port (static IP) or to a LAN port. The client was connected to a LAN port or to 5 GHz wifi.

Example iperf3 commands:

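Single thread, reverse (`-R`, server sends to client): `iperf3 -c 192.168.111.2 -t 10 -R`

Single thread, forward (client sends to server): `iperf3 -c 192.168.111.2 -t 10`

Multi thread (`-P 5`, five parallel streams), reverse: `iperf3 -c 192.168.111.2 -t 10 -P 5 -R`

Multi thread (`-P 5`, five parallel streams), forward: `iperf3 -c 192.168.111.2 -t 10 -P 5`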
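If you want to repeat the same four runs without retyping them, something like the Python sketch below could help. It is not what I used for the numbers above, just a rough illustration. The helper names (`build_iperf3_command`, `command_matrix`, `received_mbps`, `run`) are made up for this example, and it assumes iperf3 is on the PATH and that adding `-J` (JSON output) to a TCP test gives a report with `end.sum_received.bits_per_second`.

```python
import json
import subprocess


def build_iperf3_command(server, seconds=10, streams=1, reverse=False):
    """Build one iperf3 client command as an argument list."""
    cmd = ["iperf3", "-c", server, "-t", str(seconds)]
    if streams > 1:
        cmd += ["-P", str(streams)]  # parallel streams
    if reverse:
        cmd.append("-R")  # reverse mode: server sends, client receives
    return cmd


def command_matrix(server):
    """The four example runs: 1 or 5 streams, reverse and forward."""
    return [
        build_iperf3_command(server, streams=streams, reverse=reverse)
        for streams in (1, 5)
        for reverse in (True, False)
    ]


def received_mbps(json_text):
    """Extract receiver-side throughput in Mbit/s from iperf3 -J output.

    Assumes a TCP test, where the summary is under end.sum_received.
    """
    report = json.loads(json_text)
    return report["end"]["sum_received"]["bits_per_second"] / 1e6


def run(cmd):
    """Run one command with JSON output and return Mbit/s (needs iperf3)."""
    result = subprocess.run(
        cmd + ["-J"], capture_output=True, text=True, check=True
    )
    return received_mbps(result.stdout)


# Example use (requires a reachable iperf3 server):
# for cmd in command_matrix("192.168.111.2"):
#     print(" ".join(cmd), run(cmd))
```

Building the commands as lists rather than one string avoids quoting problems when the same script is run from a Windows prompt.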