Ahoy friends.

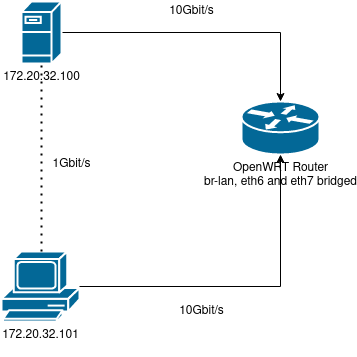

Currently I have both my PC and my server connected to my OpenWRT router via 10G.

Both use different subnets, but when I try to ping my server there is an extremely long delay between each echo, even though there is no packet loss and the latency is low. Might a DNS issue be the cause?

When I try to send traffic from the client to the server (both on 10G connections), it is only sent at 1G.

According to iperf, client-to-OpenWRT and server-to-OpenWRT both get the full 10 Gbit. But for some reason, iperf from server to client or client to server does not work; I receive the error message "no route to host". Pinging is possible, though. How can I route 10G traffic?

Where is the 10 Gbps switch and how is it connected to the OpenWRT?

That's what it looks like. My OpenWRT device is my switch; eth7 and eth6 are my 10 Gbit interfaces. When I run iperf from the server or the client to the router, I get 10 Gbit. But when I run iperf from server to client, or client to server, I only get 1 Gbit.
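Before blaming the forwarding path, it can help to confirm the speed each port actually negotiated. A minimal sketch, assuming a Linux-based system (OpenWRT, client or server) with Python available, which is not installed on OpenWRT by default: it reads the value the kernel exposes in `/sys/class/net/<iface>/speed`, the same figure `ethtool` prints as "Speed". The interface names eth6/eth7 come from the post; the function name is hypothetical.

```python
from pathlib import Path


def link_speed_mbps(iface: str, sysfs_root: str = "/sys/class/net"):
    """Return the negotiated link speed in Mbit/s, or None if it is unavailable."""
    speed_file = Path(sysfs_root) / iface / "speed"
    try:
        value = int(speed_file.read_text().strip())
    except (OSError, ValueError):
        # Missing interface, link down (the read fails with EINVAL), or odd content.
        return None
    # Some drivers report -1 when the speed is unknown.
    return value if value > 0 else None


if __name__ == "__main__":
    for iface in ("eth6", "eth7"):
        print(iface, link_speed_mbps(iface))
```

On a healthy 10G link this should print 10000 for each port; a value of 1000 would point at negotiation rather than forwarding.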

Why does OpenWRT limit forwarded traffic to 1 Gbit?

Client to server:

Server listening on TCP port 5001

TCP window size: 128 KByte (default)

------------------------------------------------------------

[ 4] local 172.20.96.128 port 5001 connected with 172.20.96.164 port 56282

[ ID] Interval Transfer Bandwidth

[ 4] 0.0-10.0 sec 1.16 GBytes 994 Mbits/sec

Client to OpenWRT

------------------------------------------------------------

Client connecting to openwrt.lan, TCP port 5001

TCP window size: 1.74 MByte (default)

------------------------------------------------------------

[ 3] local 172.20.96.128 port 33318 connected with 172.20.96.1 port 5001

[ ID] Interval Transfer Bandwidth

[ 3] 0.0-10.0 sec 10.9 GBytes 9.39 Gbits/sec

From fileserver to OpenWRT

------------------------------------------------------------

Client connecting to 172.20.96.1, TCP port 5001

TCP window size: 3.20 MByte (default)

------------------------------------------------------------

[ 3] local 172.20.96.164 port 49500 connected with 172.20.96.1 port 5001

[ ID] Interval Transfer Bandwidth

[ 3] 0.0-10.0 sec 10.9 GBytes 9.40 Gbits/sec

Server to OpenWRT

Are you using the default MTU of 1500 on the bridge, or jumbo frames?
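To put the MTU question in numbers: the CPU cost of bridging or routing in software grows largely with the number of packets rather than bytes. A rough sketch, assuming full-size frames and ignoring offloads such as GRO/TSO (which can batch packets), of how many packets per second a 10 Gbit/s link carries at MTU 1500 versus a 9000-byte jumbo MTU:

```python
# Bytes each frame occupies on the wire beyond the IP packet:
# preamble 7 + SFD 1 + Ethernet header 14 + FCS 4 + inter-frame gap 12.
ETH_WIRE_OVERHEAD = 7 + 1 + 14 + 4 + 12  # 38 bytes


def packets_per_second(link_bps: float, mtu: int) -> float:
    """Full-size frames per second needed to fill the link at the given IP MTU."""
    return link_bps / ((mtu + ETH_WIRE_OVERHEAD) * 8)


for mtu in (1500, 9000):
    pps = packets_per_second(10e9, mtu)
    print(f"MTU {mtu}: {pps:,.0f} packets/s at 10 Gbit/s")
```

With MTU 1500 the router has to handle roughly 5.9 times as many packets per second as with jumbo frames for the same throughput.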

Since there are two subnets, traffic might have to be routed by the kernel instead of just being forwarded by the internal switch. (Someone correct me on this, I'm not 100% sure.)

A DNS delay would cause a slow start for ping when resolving the IP address, but after that the echo/reply is all based on sending ICMP packets between IP addresses.
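One DNS effect can still slow down every echo, though: on Linux, iputils `ping` tries to resolve each reply's address back to a name unless run with `-n`. If reverse (PTR) lookups are slow, each reply line is delayed while the reported `time=` stays low. A minimal sketch for timing a reverse lookup with Python's standard `socket.gethostbyaddr`; the helper name is hypothetical.

```python
import socket
import time


def time_reverse_lookup(ip: str):
    """Time a reverse (PTR) lookup and return (seconds, hostname or None)."""
    start = time.monotonic()
    try:
        hostname = socket.gethostbyaddr(ip)[0]
    except OSError:
        # socket.herror / socket.gaierror are OSError subclasses:
        # no PTR record, or the resolver failed.
        hostname = None
    return time.monotonic() - start, hostname
```

Calling `time_reverse_lookup("172.20.96.164")` (the server address from the iperf output) on the client shows whether the lookup itself takes seconds; comparing `ping` with `ping -n` is the quicker check.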

iperf in server mode needs an open port to accept incoming iperf client connections. Is the port being blocked by a firewall?
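"No route to host" while ping works is often a firewall REJECT rule on the target host rather than a routing problem: for example, firewalld and many iptables setups reject with ICMP host-prohibited, which Linux reports as `EHOSTUNREACH`, while ICMP echo is still allowed. A minimal sketch that tries a TCP connect and classifies the failure; the function name and labels are illustrative, and port 5001 is iperf2's default.

```python
import errno
import socket


def probe_tcp(host: str, port: int, timeout: float = 3.0) -> str:
    """Attempt a TCP connection and classify the outcome."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "open"
    except ConnectionRefusedError:
        return "refused"  # host reachable, nothing listening (TCP RST)
    except socket.timeout:
        return "timeout"  # packets silently dropped somewhere
    except OSError as exc:
        if exc.errno == errno.EHOSTUNREACH:
            # ICMP unreachable, e.g. a REJECT rule with icmp-host-prohibited
            return "no route to host"
        raise
```

Running `probe_tcp("172.20.96.164", 5001)` from the client while `iperf -s` is running on the server distinguishes a blocked port from a genuine routing problem.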

What device are you running OpenWRT on?

Yeah, iperf is not being blocked; I've checked that before.

I have an x86 OpenWRT device based on a Supermicro X10SRi-F and a Xeon E5-2603 v4.

I also have two 10 Gbit NICs from Mellanox, ConnectX-2 series.

Dumb question: what transfer speed do you get running the test in the reverse direction (-R parameter on iPerf: server sends, client receives) between client/server and OpenWRT?

Also I've noticed the much smaller window size on the client to server test (128KB versus 1.74/3.2MB on the other tests). Have you tried increasing the window size manually?
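The window concern can be made concrete with the bandwidth-delay product: a single TCP stream can have at most one window in flight per round trip, so its throughput is capped at window / RTT. The thread doesn't report the RTT between the hosts, so the values below are assumptions for illustration. Linux may also auto-tune socket buffers beyond the size iperf prints at startup.

```python
def max_tcp_throughput_bps(window_bytes: int, rtt_s: float) -> float:
    """Upper bound for one TCP stream: at most one window in flight per RTT."""
    return window_bytes * 8 / rtt_s


def window_needed_bytes(rate_bps: float, rtt_s: float) -> float:
    """Bandwidth-delay product: bytes that must be in flight to sustain rate_bps."""
    return rate_bps * rtt_s / 8


# Assumed RTTs, not measurements from this thread.
for rtt_ms in (0.1, 0.5, 1.0):
    rtt_s = rtt_ms / 1000
    cap = max_tcp_throughput_bps(128 * 1024, rtt_s)
    need = window_needed_bytes(10e9, rtt_s)
    print(f"RTT {rtt_ms} ms: 128 KByte caps at {cap / 1e9:.2f} Gbit/s, "
          f"10 Gbit/s needs {need / 1024:.0f} KByte")
```

If the 128 KByte shown is the effective window, an RTT around 1 ms would by itself cap a single stream at roughly 1 Gbit/s, which is why forcing a larger window with `-w` is worth a try.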

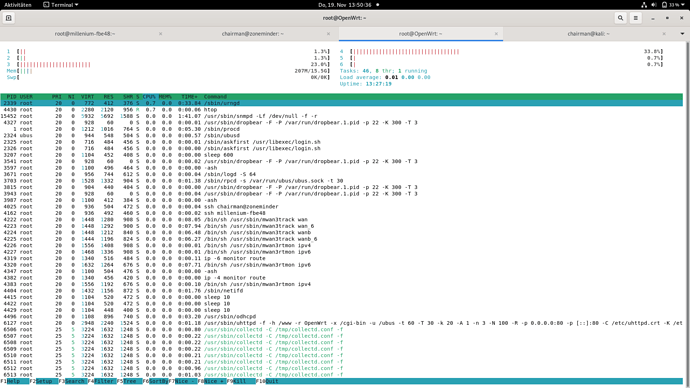

Notice any strange CPU activity during the test runs? Any threads pegged to capacity doing IRQ?
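An averaged CPU figure can hide one core that is saturated by network interrupt handling. On a 6-core CPU, one fully busy core shows up as only about 17% overall. A minimal sketch, assuming Linux `/proc/stat` (per-core lines list user, nice, system, idle, iowait, irq, softirq, steal in jiffies), that computes each core's hardirq + softirq share between two samples; the helper names are hypothetical.

```python
def read_cpu_times(path: str = "/proc/stat") -> dict:
    """Return {'cpuN': [user, nice, system, idle, iowait, irq, softirq, steal]}."""
    times = {}
    with open(path) as f:
        for line in f:
            fields = line.split()
            # Per-core lines are "cpu0", "cpu1", ...; the aggregate line is plain "cpu".
            if fields and fields[0].startswith("cpu") and fields[0] != "cpu":
                times[fields[0]] = [int(v) for v in fields[1:9]]
    return times


def irq_share(before: dict, after: dict) -> dict:
    """Percentage of each core's time spent in hardirq + softirq between samples."""
    shares = {}
    for cpu, old in before.items():
        new = after.get(cpu)
        if new is None:
            continue
        delta = [n - o for n, o in zip(new, old)]
        total = sum(delta)
        irq = delta[5] + delta[6]  # irq and softirq columns
        shares[cpu] = 100.0 * irq / total if total else 0.0
    return shares
```

To use it, take `first = read_cpu_times()` on the router, run iperf between the hosts for about 10 seconds, then call `irq_share(first, read_cpu_times())`. A single core close to 100% would point at interrupt or softirq handling as the bottleneck.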

What does the dotted line represent? Is it a real connection?

That just represents the data flow from the client, through the OpenWRT router, to the server.

What is the actual device, and is it using a switch chip, or did you just bridge some 10G NICs and call it a day?

EDIT: From the description it sounds like you are bridging two 10G NICs. You'll never saturate them in bridge mode, and routing is going to take up extra CPU, so you still won't hit 10G.

A NIC is not an ASIC switching fabric.

Yeah, two bridged 10G NICs. So it's not possible to get more than 1G? By the way, according to htop the CPU load on all six cores is very low.

Disclaimer: I have no personal experience with 10 Gbit/s equipment so far, nor with the Mellanox cards in question.

…but to me, 'even' numbers are always suspicious. Yes, 994 Mbit/s is above the expected value for 1000BASE-T (that would be more around 930 Mbit/s), but it's still suspiciously close to 1 Gbit/s (if it were >=1200 Mbit/s, or at least >=1100 Mbit/s, or significantly lower at <=850 Mbit/s, I might buy that explanation), which suggests an artificial limit instead. While I can't provide a smoking gun, I'd keep debugging this further. Among other things:

- Do you have enough PCIe lanes available to achieve your desired throughput? The packets need to pass through the CPU, so there mustn't be any bottleneck between the CPU, the PCIe host controller and the network cards.
- Is link speed negotiation working? (ethtool will become your best friend.)
- Are there any warnings or error messages? (Check dmesg and logread / logread -f.)
- Are you dealing with excessive packet drops? (ip -s link)
- Any load issues? (top, htop, iotop, iftop, ntop, …)
- How do other full-blown distributions behave (Debian, Fedora/CentOS, …)?
- What about xBSD (e.g. OPNsense)? …
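The "around 930 Mbit/s" figure can be checked with a back-of-the-envelope calculation. A sketch assuming IPv4, MTU 1500, no VLAN tag and TCP timestamps enabled (the Linux default): each packet carries 1448 bytes of TCP payload but occupies 1538 bytes on the wire, which caps one direction of a link at about 941 Mbit/s on 1000BASE-T and about 9.41 Gbit/s on 10 GbE.

```python
# Per-frame bytes on the wire beyond the IP packet:
# preamble 7, SFD 1, Ethernet header 14, FCS 4, inter-frame gap 12.
ETH_OVERHEAD = 38
IPV4_HEADER = 20
TCP_HEADER = 20
TCP_TIMESTAMPS = 12  # TCP option, enabled by default on Linux


def tcp_goodput_ceiling(link_bps: float, mtu: int = 1500, timestamps: bool = True) -> float:
    """Maximum TCP payload rate for one direction of a full-duplex Ethernet link."""
    payload = mtu - IPV4_HEADER - TCP_HEADER - (TCP_TIMESTAMPS if timestamps else 0)
    return link_bps * payload / (mtu + ETH_OVERHEAD)


print(f"1 GbE:  {tcp_goodput_ceiling(1e9) / 1e6:.0f} Mbit/s")
print(f"10 GbE: {tcp_goodput_ceiling(10e9) / 1e9:.2f} Gbit/s")
```

By this estimate, the 994 Mbit/s client-to-server result is more than a 1000BASE-T link could carry, while the 9.39 and 9.40 Gbit/s results to the router sit right at the MTU 1500 ceiling for 10 GbE.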

Bridging is a poor-man’s switching, so it’s never as efficient as switching. However, in your case neither switching nor bridging is used. If the hosts are in two different subnets (you didn’t specify the subnet masks in the diagram), then the packets between the two hosts are routed. If you bridged these two 10 Gbps interfaces, I would recommend unbridging them.

Did you run “top” in OpenWRT when you are running iPerf between the two hosts? It’s not a trivial task for a CPU to route at 10 Gbps.

Yeah, I have also tried routing instead of bridging. I had the same issue; it was limited to roughly 995 Mbit/s as well. CPU load was around 4% on one core.

I am using htop, but I could try top as well.

So currently they are bridged. My subnet is 172.20.32.0/19.
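Whether two hosts share a subnet is easy to check, and here it turns up a discrepancy. With a /19 mask, 172.20.32.0/19 spans 172.20.32.0 to 172.20.63.255, so it does not contain the 172.20.96.x addresses in the iperf output. The /19 network that does contain them is 172.20.96.0/19. The thread doesn't say whether this is a typo or a different configuration. A minimal sketch using Python's standard `ipaddress` module; the helper name is hypothetical.

```python
import ipaddress


def same_subnet(a: str, b: str, network: str) -> bool:
    """True if both addresses fall inside the given network."""
    net = ipaddress.ip_network(network, strict=False)
    return ipaddress.ip_address(a) in net and ipaddress.ip_address(b) in net


client, server = "172.20.96.128", "172.20.96.164"  # addresses from the iperf output
for prefix in ("172.20.32.0/19", "172.20.96.0/19"):
    print(prefix, same_subnet(client, server, prefix))

# The network a host actually sits in, derived from its address and mask:
print(ipaddress.ip_interface("172.20.96.128/19").network)
```

If both hosts are in the same network, traffic between them is bridged rather than routed, which matters when interpreting the CPU load on the router.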

By the way, this is what I tried just now.

My server's interface also has virtual machines bridged onto it.

So I tried routing from one of the virtual machines to the host, i.e. from the server to the router and back to the server, since they are in different subnets.

There is no bottleneck in this case, at least not in case of routing.

Load at 10 Gbit is almost 40% on one core.

So, to sum up: I have my bridge br-lan, with the two 10 Gbit interfaces bridged plus one port for the rest of my 1 Gbit home network.

Could the home network be causing a problem? Do I have to assign different priorities to make br-lan on the OpenWRT device the root bridge? As far as I know it should not matter, because there are 10G switches with 1G ports as well, and those don't limit the bandwidth of their 10 Gbit ports.

[ 4] local 192.168.200.144 port 5001 connected with 172.20.96.164 port 50782

[ ID] Interval Transfer Bandwidth

[ 4] 0.0-10.0 sec 9.97 GBytes 8.56 Gbits/sec

I misread your diagram. The two hosts in the diagram are definitely in the same subnet. So, no routing is involved.

I would suggest putting the hosts in two different subnets so that traffic between them is routed. Remove them from the bridge and run iPerf.

If that doesn’t improve throughput, then create a separate bridge that consists only of these two interfaces; do not bridge 1 Gbps interfaces into the same bridge. Put the two hosts in the same subnet and run iPerf to see if the throughput improves beyond 1 Gbps.

There's going to be load on the cards; it should go above 1 Gbit, but it definitely won't go all the way to 10 Gbit. I do see a lot of reports on Google about extremely poor bridged performance on some 10 Gb Intel cards. It's possible there's just some issue with the cards' firmware or drivers.
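To follow up on the driver angle, the first step is knowing which kernel driver is bound to each port; `ethtool -i <iface>` also reports the driver version and firmware version. A minimal sketch, assuming Linux sysfs, that resolves the `/sys/class/net/<iface>/device/driver` symlink. The function name is hypothetical, and virtual interfaces such as a bridge have no `device` entry, so it returns None for them.

```python
import os


def nic_driver(iface: str, sysfs_root: str = "/sys/class/net"):
    """Return the name of the kernel driver bound to an interface's device, or None."""
    link = os.path.join(sysfs_root, iface, "device", "driver")
    try:
        return os.path.basename(os.readlink(link))
    except OSError:
        # No such interface, or a virtual interface (e.g. br-lan) without a device.
        return None
```

Comparing `nic_driver("eth6")` and `nic_driver("eth7")` with the driver and firmware versions from `ethtool -i` makes it easier to search for known issues with that specific combination.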

I found someone with something similar on Proxmox. It's a long thread, but it looks like the same kind of issue.